Ozer Can Devecioglu

Progressive Transfer Learning for Multi-Pass Fundus Image Restoration

Apr 14, 2025

Abstract: Diabetic retinopathy (DR) is a leading cause of vision impairment, making its early diagnosis through fundus imaging critical for effective treatment planning. However, poor-quality fundus images caused by factors such as inadequate illumination, noise, blurring and other motion artifacts pose a significant challenge for accurate DR screening. In this study, we propose progressive transfer learning (PTL) for multi-pass restoration to iteratively enhance the quality of degraded fundus images, ensuring more reliable DR screening. Unlike previous methods that often focus on single-pass restoration, multi-pass restoration via PTL achieves superior blind restoration performance and can even improve most of the good-quality fundus images in the dataset. Initially, a Cycle-GAN model is trained to restore low-quality images; PTL-induced restoration passes are then applied to the latest restored outputs to improve overall quality in each pass. The proposed method learns blind restoration without requiring any paired data, while overcoming the limitations of unpaired learning by leveraging progressive learning and fine-tuning strategies to minimize distortions and preserve critical retinal features. To evaluate PTL's effectiveness for multi-pass restoration, we conducted experiments on DeepDRiD, a large-scale fundus imaging dataset specifically curated for diabetic retinopathy detection. Our results demonstrate state-of-the-art performance, showcasing PTL's potential as a superior approach to iterative image quality restoration.

Blind Underwater Image Restoration using Co-Operational Regressor Networks

Dec 05, 2024

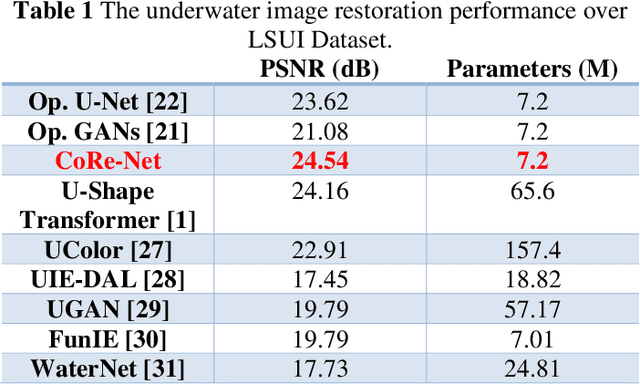

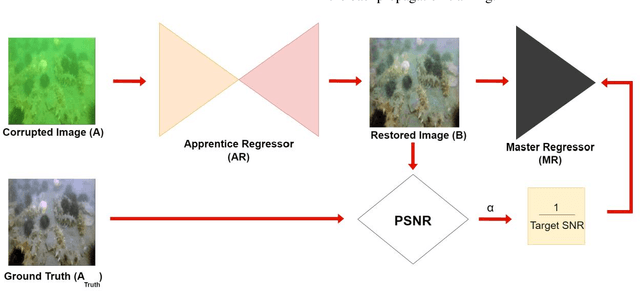

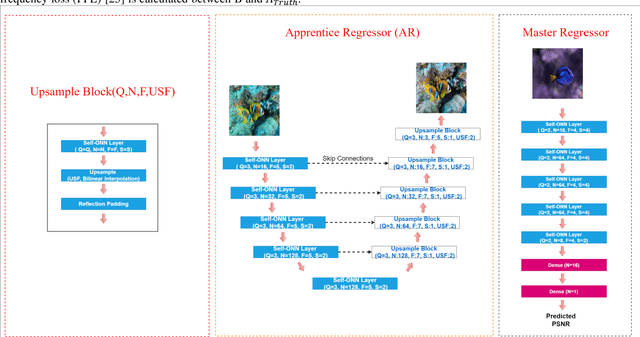

Abstract: The exploration of underwater environments is essential for applications such as biological research, archaeology, and infrastructure maintenance. However, underwater imaging is challenging due to water's unique properties, including scattering, absorption, color distortion, and reduced visibility. To address such visual degradations, a variety of approaches have been proposed, ranging from basic signal processing methods to deep learning models; however, none of them has proven consistently successful. In this paper, we propose a novel machine learning model, Co-Operational Regressor Networks (CoRe-Nets), designed to achieve the best possible underwater image restoration. A CoRe-Net consists of two co-operating networks: the Apprentice Regressor (AR), responsible for image transformation, and the Master Regressor (MR), which evaluates the Peak Signal-to-Noise Ratio (PSNR) of the images generated by the AR and feeds it back to the AR. CoRe-Nets are built on Self-Organized Operational Neural Networks (Self-ONNs), which offer superior learning capability by modulating nonlinearity in kernel transformations. The effectiveness of the proposed model is demonstrated on the benchmark Large Scale Underwater Image (LSUI) dataset. Leveraging the joint learning capabilities of the two co-operating networks, the proposed model achieves state-of-the-art restoration performance with significantly reduced computational complexity, and with a 2-pass application it often produces results whose visual quality surpasses even that of the ground truth. Our results and the optimized PyTorch implementation of the proposed approach are publicly shared on GitHub.
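
As a point of reference for the MR's target signal, PSNR is conventionally computed from the mean squared error (MSE) between a restored image and its reference as PSNR = 10 log10(MAX² / MSE). The sketch below is a minimal pure-Python version, assuming 8-bit images flattened into equal-length lists of pixel values; the function name `psnr` and the `max_val=255.0` default are illustrative choices, not taken from the CoRe-Net implementation.

```python
import math


def psnr(reference, restored, max_val=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(restored) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_val ** 2 / mse)


ref = [0, 128, 255, 64]
out = [2, 126, 250, 64]
print(round(psnr(ref, out), 2))
```

Since the MR is a regressor network, it presumably learns to estimate this value for the AR's outputs; the closed form here only shows which quantity is involved.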

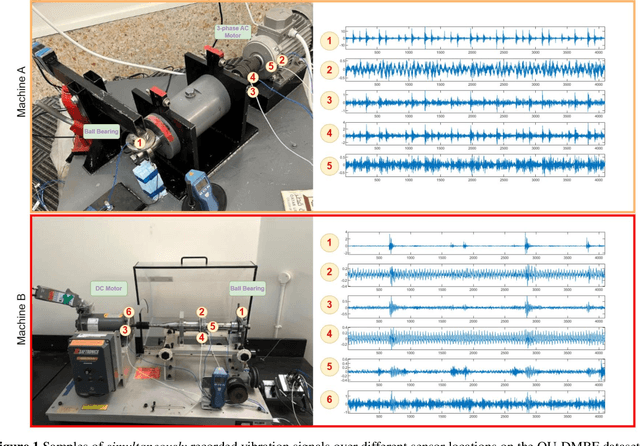

Exploring Sound vs Vibration for Robust Fault Detection on Rotating Machinery

Dec 17, 2023

Abstract: Robust, real-time detection of faults on rotating machinery has become a primary objective for predictive maintenance in various industries. Vibration-based Deep Learning (DL) methodologies have become the de facto standard for bearing fault detection, as they can achieve state-of-the-art detection performance under certain conditions. Despite this focus on the vibration signal, sound has been largely neglected: only a few sound-based studies have been proposed during the last two decades, all of them based on conventional machine learning (ML) approaches. One major reason is the lack of a benchmark dataset providing a large volume of both vibration and sound data over several working conditions for different machines and sensor locations. In this study, we address this need by presenting a new benchmark, the Qatar University Dual-Machine Bearing Fault Benchmark dataset (QU-DMBF), which contains sound and vibration data from two different motors operating under 1080 working conditions overall. We then highlight the major limitations and drawbacks of vibration-based fault detection arising from numerous installation and operational conditions. Finally, we propose the first DL approach for sound-based fault detection and perform comparative evaluations between sound and vibration over the QU-DMBF dataset. A wide range of experimental results shows that the sound-based fault detection method is significantly more robust than its vibration-based counterpart: it is entirely independent of the sensor location, cost-effective (requiring no dedicated sensor or sensor maintenance), and can match the best detection performance of its vibration-based counterpart. With this study, the QU-DMBF dataset, the optimized source code in PyTorch, and the comparative evaluations are publicly shared.

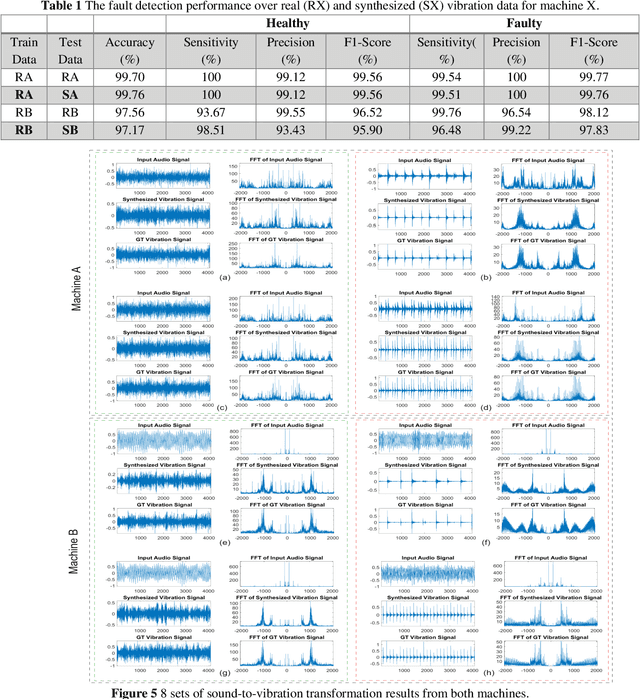

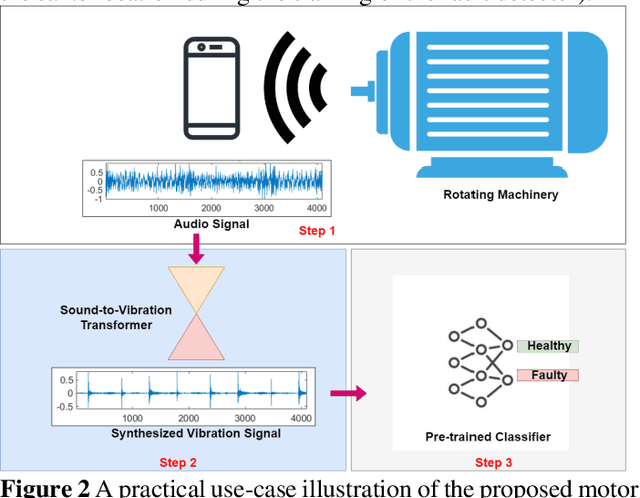

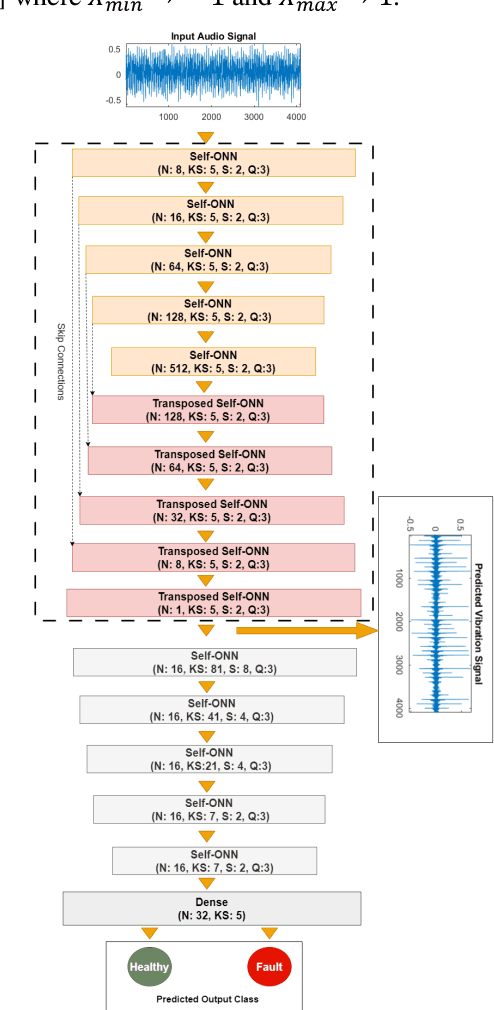

Sound-to-Vibration Transformation for Sensorless Motor Health Monitoring

May 13, 2023

Abstract: Automatic sensor-based detection of motor failures such as bearing faults is crucial for predictive maintenance in various industries. Numerous methodologies have been developed over the years to detect bearing faults, and among the many approaches proposed for diagnosing motor faults, vibration-based methods have become the de facto standard and the most commonly used techniques. However, acquiring reliable vibration signals, especially from rotating machinery, can be prohibitively difficult due to challenging installation and operational conditions (e.g., variations in accelerometer location on the motor body), which not only alter the signal patterns significantly but may also induce severe artifacts. Moreover, sensors are costly and require periodic maintenance to sustain reliable signal acquisition. To address these drawbacks and eliminate the need for vibration sensors, in this study we propose a novel sound-to-vibration transformation method that can synthesize realistic vibration signals directly from sound measurements, regardless of the working conditions, fault type, and fault severity. Using this transformation, data acquired by a simple sound recorder, e.g., a mobile phone, can be transformed into a vibration signal, which can then be used for fault detection by a pre-trained model. The proposed method is extensively evaluated over the benchmark Qatar University Dual-Machine Bearing Fault Benchmark dataset (QU-DMBF), which contains sound and vibration data from two different machines operating under various conditions. Experimental results show that this novel approach can synthesize vibration signals realistic enough to be used directly for reliable and highly accurate motor health monitoring.

Improved Active Fire Detection using Operational U-Nets

Apr 19, 2023

Abstract: As a consequence of global warming and climate change, the risk and extent of wildfires have been increasing in many areas worldwide. Warmer temperatures and drier conditions can cause fires to spread quickly and make them harder to control; therefore, early detection and accurate localization of active fires are crucial in environmental monitoring. Using satellite imagery to monitor and detect active fires has been critical for managing forests and public land. Many traditional statistical methods and more recent deep learning techniques have been proposed for active fire detection. In this study, we propose a novel approach called Operational U-Nets for improved early detection of active fires. The proposed approach uses Self-Organized Operational Neural Network (Self-ONN) layers in a compact U-Net architecture. Preliminary experimental results demonstrate that Operational U-Nets not only achieve superior detection performance but also significantly reduce computational complexity.

Blind Restoration of Real-World Audio by 1D Operational GANs

Dec 30, 2022

Abstract: Objective: Despite the numerous audio restoration studies in the literature, most focus on an isolated restoration problem, such as denoising or dereverberation, while ignoring other artifacts. Moreover, it is common practice to assume a noisy or reverberant environment with a limited number of fixed signal-to-distortion ratio (SDR) levels. However, real-world audio is often corrupted by a blend of artifacts, such as reverberation, sensor noise, and background audio mixtures, with varying types, severities, and durations. In this study, we propose a novel approach for blind restoration of real-world audio signals by Operational Generative Adversarial Networks (Op-GANs) with temporal and spectral objective metrics, enhancing the quality of the restored audio signal regardless of the type and severity of each artifact corrupting it. Methods: 1D Op-GANs with the generative neuron model are optimized for blind restoration of any corrupted audio signal. Results: The proposed approach has been evaluated extensively over the benchmark TIMIT-RAR (speech) and GTZAN-RAR (non-speech) datasets, corrupted with a random blend of artifacts, each with a random severity, to mimic real-world audio signals. Average SDR improvements of over 7.2 dB and 4.9 dB are achieved, respectively, which are substantial compared with the baseline methods. Significance: This is a pioneering study in blind audio restoration with the unique capability of direct (time-domain) restoration of real-world audio, achieving an unprecedented level of performance across a wide SDR range and artifact types. Conclusion: 1D Op-GANs can achieve robust and computationally efficient real-world audio restoration with significantly improved performance. The source code and the generated real-world audio datasets are shared publicly with the research community in a dedicated GitHub repository.
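
An SDR improvement compares the SDR of the restored signal with that of the corrupted input, both measured against the clean reference. The sketch below shows that bookkeeping, assuming the simple energy-ratio definition SDR = 10 log10(‖s‖² / ‖s − ŝ‖²), where s is the clean reference and ŝ the estimate. The paper may use a different SDR variant (for example, the BSS Eval definition), and the function names are hypothetical.

```python
import math


def sdr_db(clean, estimate):
    """Signal-to-distortion ratio in dB: 10*log10(||s||^2 / ||s - s_hat||^2)."""
    signal_energy = sum(s * s for s in clean)
    distortion_energy = sum((s - e) ** 2 for s, e in zip(clean, estimate))
    if distortion_energy == 0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(signal_energy / distortion_energy)


def sdr_improvement_db(clean, corrupted, restored):
    """SDR gain from restoration: SDR(restored) minus SDR(corrupted)."""
    return sdr_db(clean, restored) - sdr_db(clean, corrupted)


clean = [1.0, -1.0, 1.0, -1.0]
corrupted = [1.5, -0.5, 1.5, -0.5]  # every sample off by 0.5
restored = [1.1, -0.9, 1.1, -0.9]   # every sample off by 0.1
print(sdr_improvement_db(clean, corrupted, restored))
```

In this toy example the corrupted signal has an SDR of about 6.02 dB and the restored one reaches 20 dB, an improvement of roughly 13.98 dB.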

Zero-Shot Motor Health Monitoring by Blind Domain Transition

Dec 12, 2022

Abstract: Continuous long-term monitoring of motor health is crucial for the early detection of abnormalities such as bearing faults (up to 51% of motor failures are attributed to bearing faults). Despite the numerous methodologies proposed for bearing fault detection, most require both normal (healthy) and abnormal (faulty) data for training. Even for recent deep learning (DL) methodologies trained on labeled data from the same machine, classification accuracy deteriorates significantly when one or a few conditions are altered. Furthermore, their performance degrades significantly, or they may fail entirely, when tested on another machine with entirely different healthy and faulty signal patterns. To address this need, in this pilot study we propose a zero-shot bearing fault detection method that can detect any fault on a new (target) machine regardless of the working conditions, sensor parameters, or fault characteristics. To accomplish this objective, a 1D Operational Generative Adversarial Network (Op-GAN) first characterizes the transition between normal and faulty vibration signals of (a) source machine(s) under various conditions, sensor parameters, and fault types. Then, for a target machine, potential faulty signals can be generated, and a compact, lightweight 1D Self-ONN fault detector can be trained over its actual healthy and synthesized faulty signals to detect the real faulty condition in real time whenever it occurs. To validate the proposed approach, a new benchmark dataset is created using two different motors working under different conditions and sensor locations. Experimental results demonstrate that this novel approach can accurately detect any bearing fault, achieving average recall rates of around 89% and 95% on the two target machines, regardless of fault type, severity, and location.

Blind ECG Restoration by Operational Cycle-GANs

Jan 29, 2022

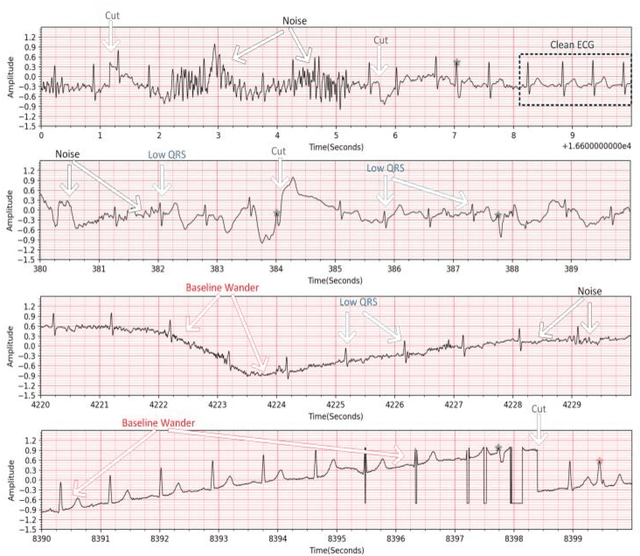

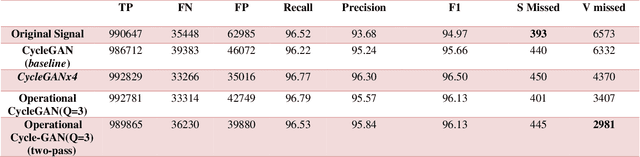

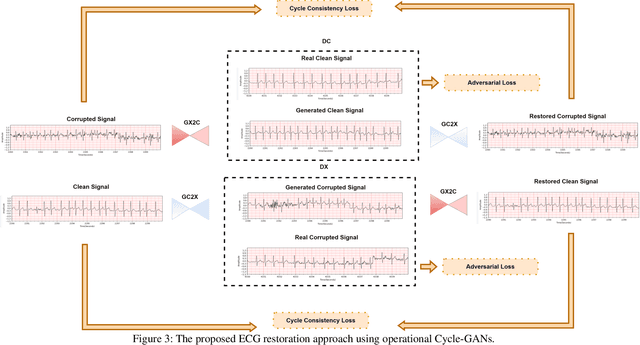

Abstract: Continuous long-term monitoring of electrocardiography (ECG) signals is crucial for the early detection of cardiac abnormalities such as arrhythmia. Non-clinical ECG recordings acquired by Holter and wearable ECG sensors often suffer from severe artifacts such as baseline wander, signal cuts, motion artifacts, variations in QRS amplitude, noise, and other interferences. Usually, several such artifacts occur in the same ECG signal with varying severity and duration, which makes an accurate diagnosis by machines or medical doctors extremely difficult. Although numerous studies have attempted ECG denoising, they naturally fail to restore the actual ECG signal corrupted with such artifacts because of their simple and naive noise models. In this study, we propose a novel approach for blind ECG restoration using cycle-consistent generative adversarial networks (Cycle-GANs), where the signal quality can be improved to clinical-level ECG regardless of the type and severity of the artifacts corrupting the signal. To further boost restoration performance, we propose 1D operational Cycle-GANs with the generative neuron model. The proposed approach has been evaluated extensively using one of the largest benchmark ECG datasets, from the China Physiological Signal Challenge (CPSC-2020), with more than one million beats. Besides quantitative and qualitative evaluations, a group of cardiologists performed medical evaluations to validate the quality and usability of the restored ECG, especially for accurate arrhythmia diagnosis.
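
Cycle-GANs can be trained without paired corrupted/clean ECG because of the cycle-consistency term: translating a signal to the other domain and back should reproduce it. Below is a minimal pure-Python sketch of the standard L1 cycle-consistency loss. The generators `halve` (corrupted → clean) and `double` (clean → corrupted) are hypothetical toy stand-ins, not the 1D operational generators used in the paper, and in practice this term is added to the adversarial losses.

```python
from typing import Callable, List

Signal = List[float]


def l1(a: Signal, b: Signal) -> float:
    """Mean absolute error between two equal-length signals."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    g: Callable[[Signal], Signal],  # corrupted -> clean generator
    f: Callable[[Signal], Signal],  # clean -> corrupted generator
    corrupted: Signal,
    clean: Signal,
) -> float:
    """L1 cycle loss |F(G(x)) - x| + |G(F(y)) - y| for unpaired samples x, y."""
    forward_cycle = l1(f(g(corrupted)), corrupted)
    backward_cycle = l1(g(f(clean)), clean)
    return forward_cycle + backward_cycle


# Hypothetical toy generators that are exact inverses of each other.
def halve(s: Signal) -> Signal:
    return [0.5 * v for v in s]


def double(s: Signal) -> Signal:
    return [2.0 * v for v in s]


print(cycle_consistency_loss(halve, double, [2.0, -4.0, 6.0], [0.1, 0.3, -0.2]))
```

Because the two toy mappings invert each other, both cycles reconstruct their inputs and the loss is zero; any mismatch between the generators shows up as a positive loss.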

Real-Time Patient-Specific ECG Classification by 1D Self-Operational Neural Networks

Sep 30, 2021

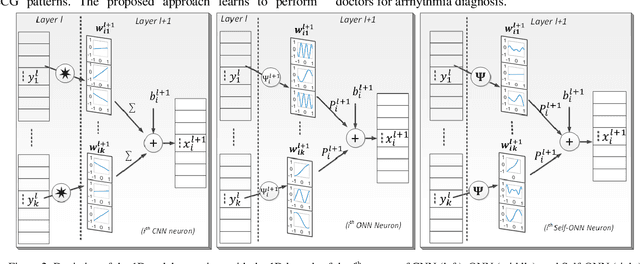

Abstract: Despite the proliferation of deep learning methods proposed for generic ECG classification and arrhythmia detection, compact systems capable of real-time, highly accurate patient-specific ECG classification are still few. In particular, the scarcity of patient-specific data poses a major challenge to any classifier. Recently, compact 1D Convolutional Neural Networks (CNNs) have achieved state-of-the-art performance for the accurate classification of ventricular and supraventricular ectopic beats. However, several studies have shown that the learning performance of conventional CNNs is limited because they are homogeneous networks with a basic (linear) neuron model. To address this deficiency and further boost patient-specific ECG classification performance, in this study we propose 1D Self-organized Operational Neural Networks (1D Self-ONNs). Owing to their self-organization capability, Self-ONNs have a key advantage over conventional ONNs: the prior search within an operator set library for the best possible set of operators is entirely avoided. In the first study to propose 1D Self-ONNs for a classification task, our results over the MIT-BIH arrhythmia benchmark database demonstrate that 1D Self-ONNs can surpass 1D CNNs by a significant margin while having similar computational complexity. Under AAMI recommendations and with minimal common training data, over the entire MIT-BIH dataset 1D Self-ONNs achieved 98% and 99.04% average accuracies and 76.6% and 93.7% average F1 scores on supraventricular ectopic beat (SVEB) and ventricular ectopic beat (VEB) classification, respectively, which is the highest performance level ever reported.
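
The gap between the reported accuracies (98% and 99.04%) and F1 scores (76.6% and 93.7%) is typical of class imbalance: ectopic beats are rare, so accuracy is dominated by the many correctly rejected normal beats, while F1 depends only on how well the rare class is found. The sketch below computes the usual one-vs-rest metrics from confusion-matrix counts; the counts in the example are hypothetical, and the paper's exact averaging protocol may differ.

```python
def one_vs_rest_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall (sensitivity) and F1 for one class vs. the rest."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall; it ignores true negatives.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts: 120 ectopic beats among 1000 beats.
metrics = one_vs_rest_metrics(tp=90, fp=10, fn=30, tn=870)
print(metrics)
```

With these counts the accuracy is 0.96 while F1 is only about 0.82, because the 870 true negatives inflate accuracy but do not enter F1.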

Early Bearing Fault Diagnosis of Rotating Machinery by 1D Self-Organized Operational Neural Networks

Sep 30, 2021

Abstract: Preventive maintenance of modern electric rotating machinery (RM) is critical for ensuring reliable operation, preventing unpredicted breakdowns, and avoiding costly repairs. Many recent studies have investigated machine learning monitoring methods, especially those based on deep learning networks, focusing mostly on detecting bearing faults; however, none of them addressed bearing fault severity classification for early fault diagnosis with sufficiently high accuracy. 1D Convolutional Neural Networks (CNNs) have indeed achieved good performance in detecting RM bearing faults from raw vibration and current signals, but they did not classify fault severity. Furthermore, recent studies have demonstrated that the learning capability of conventional CNNs is limited, which they attribute to the basic underlying linear neuron model. Operational Neural Networks (ONNs) were recently proposed to enhance the learning capability of CNNs by introducing non-linear neuron models and further heterogeneity in the network configuration. In this study, we propose 1D Self-organized ONNs (Self-ONNs) with generative neurons for bearing fault severity classification and continuous condition monitoring. Experimental results over the benchmark NSF/IMS bearing vibration dataset, using both x- and y-axis vibration signals for inner race and rolling element faults, demonstrate that the proposed 1D Self-ONNs achieve a significant performance gain over the state of the art (1D CNNs) with similar computational complexity.
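
A generative neuron replaces the single linear kernel of a convolutional neuron with a Q-th order polynomial (Maclaurin-series) approximation: each power x^q of the input gets its own learnable kernel, and the responses are summed, y[n] = b + Σ_{q=1..Q} Σ_k w_q[k] · x[n+k]^q. The pure-Python sketch below computes one output channel with "valid" cross-correlation, assuming all kernels share the same length; the function name and argument layout are illustrative, not the API of any Self-ONN library. With Q = 1 it reduces to an ordinary convolutional neuron.

```python
from typing import List, Sequence


def generative_neuron_1d(
    x: Sequence[float],
    weights: List[Sequence[float]],  # weights[q-1] is the kernel applied to x**q
    bias: float = 0.0,
) -> List[float]:
    """One output channel of a 1D generative neuron ('valid' cross-correlation)."""
    kernel_size = len(weights[0])  # assumes every kernel has the same length
    out_len = len(x) - kernel_size + 1
    out = []
    for n in range(out_len):
        acc = bias
        for q, kernel in enumerate(weights, start=1):
            # Term q of the Maclaurin-style expansion: kernel w_q applied to x**q.
            acc += sum(w * x[n + k] ** q for k, w in enumerate(kernel))
        out.append(acc)
    return out


x = [1, 2, 3, 4]
print(generative_neuron_1d(x, [[1, 1]]))          # Q = 1: plain convolution
print(generative_neuron_1d(x, [[1, 1], [1, 0]]))  # Q = 2: adds a squared term
```

In practice the input to such a layer is usually kept in a bounded range (for example, by a tanh activation) so that the higher powers remain numerically stable.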
