Julien Le Sommer

IGE

Neural ocean forecasting from sparse satellite-derived observations: a case-study for SSH dynamics and altimetry data

Dec 15, 2025

Abstract: We present an end-to-end deep learning framework for short-term forecasting of global sea surface dynamics based on sparse satellite altimetry data. Building on two state-of-the-art architectures, U-Net and 4DVarNet, originally developed for image segmentation and spatiotemporal interpolation respectively, we adapt the models to forecast the sea level anomaly and sea surface currents over a 7-day horizon using sequences of sparse nadir altimeter observations. The model is trained on data from the GLORYS12 operational ocean reanalysis, with synthetic nadir sampling patterns applied to simulate realistic observational coverage. The forecasting task is formulated as a sequence-to-sequence mapping, with the input comprising partial sea level anomaly (SLA) snapshots and the target being the corresponding future full-field SLA maps. We evaluate model performance using (i) normalized root mean squared error (nRMSE), (ii) averaged effective resolution, and (iii) percentage of correctly predicted velocity magnitudes and angles, and benchmark results against the operational Mercator Ocean forecast product. Results show that end-to-end neural forecasts outperform the baseline across all lead times, with particularly notable improvements in high-variability regions. Our framework is developed within the OceanBench benchmarking initiative, promoting reproducibility and standardized evaluation in ocean machine learning. These results demonstrate the feasibility and potential of end-to-end neural forecasting models for operational oceanography, even in data-sparse conditions.
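As a rough illustration of the first metric listed in this abstract, a normalized RMSE score can be sketched as follows. The abstract does not state the exact normalization, so the form used here, 1 - RMSE / RMS(reference), is an assumption borrowed from common SSH mapping benchmarks, and the function name `nrmse_score` is hypothetical.

```python
import numpy as np


def nrmse_score(pred, ref):
    """Assumed score: 1 - RMSE(pred, ref) / RMS(ref).

    A value of 1.0 means a perfect match; 0.0 means the error is as
    large as the reference signal itself. NaNs (e.g. land points) are
    ignored in both fields.
    """
    pred = np.asarray(pred, dtype=float)
    ref = np.asarray(ref, dtype=float)
    valid = np.isfinite(pred) & np.isfinite(ref)
    rmse = np.sqrt(np.mean((pred[valid] - ref[valid]) ** 2))
    rms_ref = np.sqrt(np.mean(ref[valid] ** 2))
    return 1.0 - rmse / rms_ref


if __name__ == "__main__":
    # Synthetic SLA-like fields, purely for illustration.
    rng = np.random.default_rng(0)
    truth = rng.normal(size=(7, 32, 32))          # 7 lead times
    forecast = truth + 0.1 * rng.normal(size=truth.shape)
    print(nrmse_score(forecast, truth))
```

Computing the score separately for each lead time (each slice along the first axis) would give a skill curve over the forecast horizon.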

Adjoint-based online learning of two-layer quasi-geostrophic baroclinic turbulence

Nov 21, 2024

Abstract: For reasons of computational constraint, most global ocean circulation models used for Earth System Modeling still rely on parameterizations of sub-grid processes, and limitations in these parameterizations affect the modeled ocean circulation and impact predictive skill. An increasingly popular approach is to leverage machine learning for parameterizations, regressing for a map between the resolved state and missing feedbacks in a fluid system as a supervised learning task. However, the learning is often performed in an 'offline' fashion, without involving the underlying fluid dynamical model during the training stage. Here, we explore the 'online' approach that involves the fluid dynamical model during the training stage for the learning of baroclinic turbulence and its parameterization, with reference to ocean eddy parameterization. Two online approaches are considered: a full adjoint-based online approach, related to traditional adjoint optimization approaches that require a 'differentiable' dynamical model, and an approximately online approach that approximates the adjoint calculation and does not require a differentiable dynamical model. The online approaches are found to be generally more skillful and numerically stable than offline approaches. Other details relating to online training, such as window size, machine learning model setup and design of the loss functions, are detailed to aid further exploration of the online training methodology for Earth System Modeling.

Gradient-free online learning of subgrid-scale dynamics with neural emulators

Nov 02, 2023

Abstract: In this paper, we propose a generic algorithm to train machine learning-based subgrid parametrizations online, i.e., with $\textit{a posteriori}$ loss functions for non-differentiable numerical solvers. The proposed approach leverages neural emulators to train an approximation of the reduced state-space solver, which is then used to allow gradient propagation through temporal integration steps. The algorithm is able to recover most of the benefit of online strategies without having to compute the gradient of the original solver. It is demonstrated that training the neural emulator and parametrization components separately with respective loss quantities is necessary in order to minimize the propagation of some approximation bias.

OceanBench: The Sea Surface Height Edition

Sep 27, 2023

Abstract: The ocean profoundly influences human activities and plays a critical role in climate regulation. Our understanding has improved over the last decades with the advent of satellite remote sensing data, allowing us to capture essential quantities over the globe, e.g., sea surface height (SSH). However, ocean satellite data present challenges for information extraction due to their sparsity and irregular sampling, signal complexity, and noise. Machine learning (ML) techniques have demonstrated their capabilities in dealing with large-scale, complex signals. Therefore, we see an opportunity for ML models to harness the information contained in ocean satellite data. However, data representation and relevant evaluation metrics can be the defining factors when determining the success of applied ML. The processing steps from the raw observation data to an ML-ready state and from model outputs to interpretable quantities require domain expertise, which can be a significant barrier to entry for ML researchers. OceanBench is a unifying framework that provides standardized processing steps that comply with domain-expert standards. It provides plug-and-play data and pre-configured pipelines for ML researchers to benchmark their models and a transparent configurable framework for researchers to customize and extend the pipeline for their tasks. In this work, we demonstrate the OceanBench framework through a first edition dedicated to SSH interpolation challenges. We provide datasets and ML-ready benchmarking pipelines for the long-standing problem of interpolating observations from simulated ocean satellite data, multi-modal and multi-sensor fusion issues, and transfer-learning to real ocean satellite observations. The OceanBench framework is available at github.com/jejjohnson/oceanbench and the dataset registry is available at github.com/quentinf00/oceanbench-data-registry.

Training neural mapping schemes for satellite altimetry with simulation data

Sep 19, 2023

Abstract: Satellite altimetry combined with data assimilation and optimal interpolation schemes has deeply renewed our ability to monitor sea surface dynamics. Recently, deep learning (DL) schemes have emerged as appealing solutions to address space-time interpolation problems. The scarcity of real altimetry datasets, in terms of space-time coverage of the sea surface, however impedes the training of state-of-the-art neural schemes on real-world case studies. Here, we leverage both simulations of ocean dynamics and satellite altimeters to train simulation-based neural mapping schemes for the sea surface height and demonstrate their performance for real altimetry datasets. We further analyze how the ocean simulation dataset used during the training phase impacts this performance. This experimental analysis covers the resolution, from eddy-present configurations to eddy-rich ones, forced simulations vs. reanalyses using data assimilation, and tide-free vs. tide-resolving simulations. Our benchmarking framework focuses on a Gulf Stream region for a realistic 5-altimeter constellation using NEMO ocean simulations and 4DVarNet mapping schemes. All simulation-based 4DVarNets outperform the operational observation-driven and reanalysis products, namely DUACS and GLORYS. The more realistic the ocean simulation dataset used during the training phase, the better the mapping. The best 4DVarNet mapping was trained from an eddy-rich and tide-free simulation dataset. It improves the resolved longitudinal scale from 151 kilometers for DUACS and 241 kilometers for GLORYS to 98 kilometers and reduces the root mean squared error (RMSE) by 23% and 61%. These results open research avenues for new synergies between ocean modelling and ocean observation using learning-based approaches.

Scale-aware neural calibration for wide swath altimetry observations

Feb 14, 2023

Abstract: Sea surface height (SSH) is a key geophysical parameter for monitoring and studying meso-scale surface ocean dynamics. For several decades, the mapping of SSH products at regional and global scales has relied on nadir satellite altimeters, which provide one-dimensional-only along-track satellite observations of the SSH. The Surface Water and Ocean Topography (SWOT) mission deploys a new sensor that acquires for the first time wide-swath two-dimensional observations of the SSH. This provides new means to observe the ocean at previously unresolved spatial scales. A critical challenge for the exploitation of SWOT data is the separation of the SSH from other signals present in the observations. In this paper, we propose a novel learning-based approach for this SWOT calibration problem. It benefits from calibrated nadir altimetry products and a scale-space decomposition adapted to SWOT swath geometry and the structure of the different processes in play. In a supervised setting, our method reaches the state-of-the-art residual error of ~1.4cm while proposing a correction on the entire spectral from 10km to 1000k

Inversion of sea surface currents from satellite-derived SST-SSH synergies with 4DVarNets

Nov 23, 2022

Abstract: Satellite altimetry is a unique way for direct observations of sea surface dynamics. This is however limited to the surface-constrained geostrophic component of sea surface velocities. Ageostrophic dynamics are nonetheless expected to be significant for horizontal scales below 100 km and time scales below 10 days. The assimilation of ocean general circulation models likely reveals only a fraction of this ageostrophic component. Here, we explore a learning-based scheme to better exploit the synergies between the observed sea surface tracers, especially sea surface height (SSH) and sea surface temperature (SST), to better inform sea surface currents. More specifically, we develop a 4DVarNet scheme which exploits a variational data assimilation formulation with trainable observations and $\textit{a priori}$ terms. An Observing System Simulation Experiment (OSSE) in a region of the Gulf Stream suggests that SST-SSH synergies could reveal sea surface velocities for time scales of 2.5-3.0 days and horizontal scales of 0.5°-0.7°, including a significant fraction of the ageostrophic dynamics (≈ 47%). The analysis of the contribution of different observation data, namely nadir along-track altimetry, wide-swath SWOT altimetry and SST data, emphasizes the role of SST features for the reconstruction at horizontal spatial scales ranging from 1/20° to 1/4°.

Neural Fields for Fast and Scalable Interpolation of Geophysical Ocean Variables

Nov 18, 2022

Abstract: Optimal Interpolation (OI) is a widely used, highly trusted algorithm for interpolation and reconstruction problems in geosciences. With the influx of more satellite missions, we have access to more and more observations and it is becoming more pertinent to take advantage of these observations in applications such as forecasting and reanalysis. With the increase in the volume of available data, scalability remains an issue for standard OI and it prevents many practitioners from effectively and efficiently taking advantage of these large volumes of data to learn the model hyperparameters. In this work, we leverage recent advances in Neural Fields (NerFs) as an alternative to the OI framework and show how they can be easily applied to standard reconstruction problems in physical oceanography. We illustrate the relevance of NerFs for gap-filling of sparse measurements of sea surface height (SSH) via satellite altimetry and demonstrate how NerFs are scalable with comparable results to the standard OI. We find that NerFs are a practical set of methods that can be readily applied to geoscience interpolation problems and we anticipate a wider adoption in the future.

A DNN Framework for Learning Lagrangian Drift With Uncertainty

Apr 12, 2022

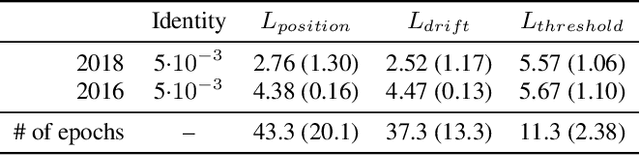

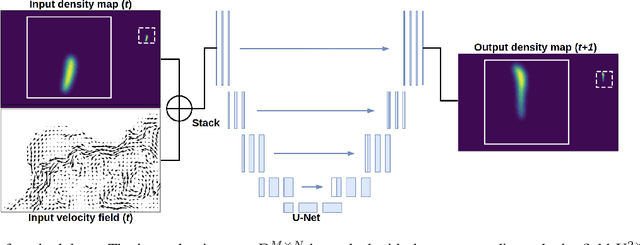

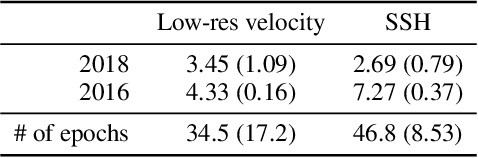

Abstract: Reconstructions of Lagrangian drift, for example for objects lost at sea, are often uncertain due to unresolved physical phenomena within the data. Uncertainty is usually overcome by introducing stochasticity into the drift, but this approach requires specific assumptions for modelling uncertainty. We remove this constraint by presenting a purely data-driven framework for modelling probabilistic drift in flexible environments. We train a CNN to predict the temporal evolution of probability density maps of particle locations from $t$ to $t+1$ given an input velocity field. We generate ground-truth density maps on the basis of ocean circulation model simulations by simulating uncertainty in the initial position of particle trajectories. Several loss functions for regressing the predicted density maps are tested. Through evaluating our model on unseen velocities from a different year, we find its outputs to be in good agreement with numerical simulations, suggesting satisfactory generalisation to different dynamical situations.

A posteriori learning for quasi-geostrophic turbulence parametrization

Apr 08, 2022

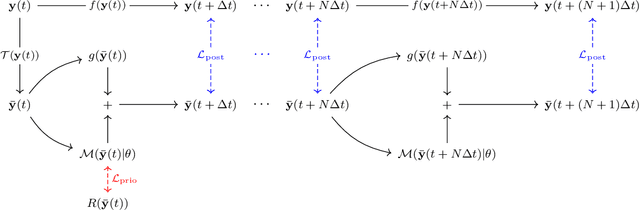

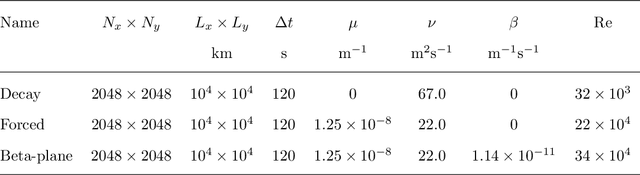

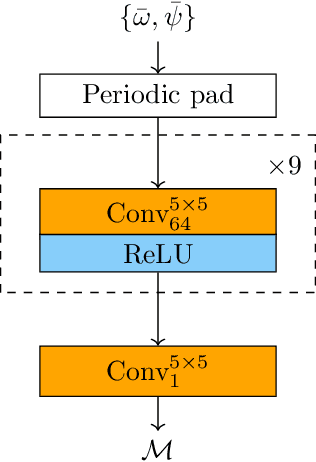

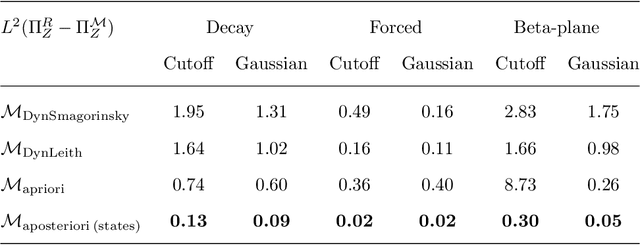

Abstract: The use of machine learning to build subgrid parametrizations for climate models is receiving growing attention. State-of-the-art strategies address the problem as a supervised learning task and optimize algorithms that predict subgrid fluxes based on information from coarse resolution models. In practice, training data are generated from higher resolution numerical simulations transformed in order to mimic coarse resolution simulations. In essence, these strategies optimize subgrid parametrizations to meet so-called $\textit{a priori}$ criteria. But the actual purpose of a subgrid parametrization is to obtain good performance in terms of $\textit{a posteriori}$ metrics, which imply computing entire model trajectories. In this paper, we focus on the representation of energy backscatter in two-dimensional quasi-geostrophic turbulence and compare parametrizations obtained with different learning strategies at fixed computational complexity. We show that strategies based on $\textit{a priori}$ criteria yield parametrizations that tend to be unstable in direct simulations and describe how subgrid parametrizations can alternatively be trained end-to-end in order to meet $\textit{a posteriori}$ criteria. We illustrate that end-to-end learning strategies yield parametrizations that outperform known empirical and data-driven schemes in terms of performance, stability and ability to apply to different flow configurations. These results support the relevance of differentiable programming paradigms for climate models in the future.
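The distinction this abstract draws between $\textit{a priori}$ and $\textit{a posteriori}$ criteria can be sketched on a toy problem. Everything below is an illustrative assumption and not the paper's setup: the scalar "coarse model" in `step`, the closure functions, and all names are hypothetical. The a priori loss compares the closure output to the true subgrid term at fixed states; the a posteriori loss compares a rolled-out trajectory to a reference trajectory.

```python
import numpy as np


def step(x, closure, dt=0.1):
    """One explicit Euler step of a toy coarse model: dx/dt = -x + closure(x)."""
    return x + dt * (-x + closure(x))


def a_priori_loss(closure, states, true_terms):
    """MSE between the predicted and true subgrid terms at given states."""
    return float(np.mean((closure(states) - true_terms) ** 2))


def a_posteriori_loss(closure, x0, reference, dt=0.1):
    """MSE between a trajectory rolled out with the closure and a reference."""
    x = x0
    errors = []
    for ref in reference:
        x = step(x, closure, dt)
        errors.append((x - ref) ** 2)
    return float(np.mean(errors))


if __name__ == "__main__":
    def true_closure(x):
        return 0.5 * x          # stands in for the unresolved term

    def candidate(x):
        return 0.4 * x          # an imperfect parametrization

    # Reference trajectory generated with the true closure.
    x, reference = 1.0, []
    for _ in range(20):
        x = step(x, true_closure)
        reference.append(x)

    states = np.linspace(-1.0, 1.0, 5)
    print(a_priori_loss(candidate, states, true_closure(states)))
    print(a_posteriori_loss(candidate, 1.0, reference))
```

Minimizing the second loss by gradient descent requires differentiating through `step`, which is why the abstract points to differentiable programming.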
