Quentin Febvre

OceanSAR-2: A Universal Feature Extractor for SAR Ocean Observation

Jan 12, 2026

Abstract:We present OceanSAR-2, the second generation of our foundation model for SAR-based ocean observation. Building on our earlier release, which pioneered self-supervised learning on Sentinel-1 Wave Mode data, OceanSAR-2 relies on improved SSL training and dynamic data curation strategies, which enhance performance while reducing training cost. OceanSAR-2 demonstrates strong transfer performance across downstream tasks, including geophysical pattern classification, ocean surface wind vector and significant wave height estimation, and iceberg detection. We release standardized benchmark datasets, providing a foundation for systematic evaluation and advancement of SAR models for ocean applications.

Efficient Self-Supervised Learning for Earth Observation via Dynamic Dataset Curation

Apr 09, 2025

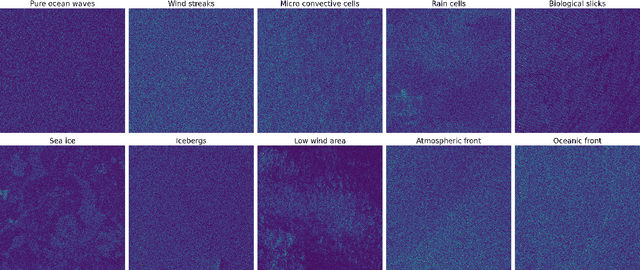

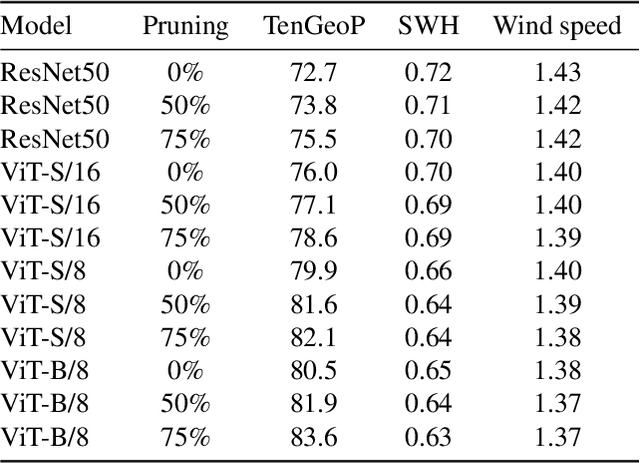

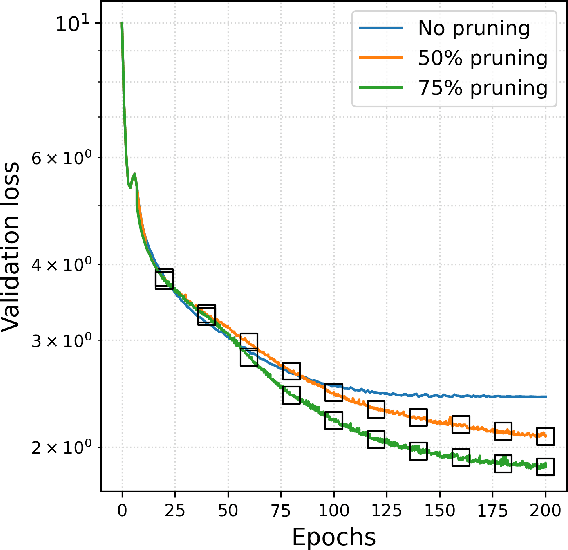

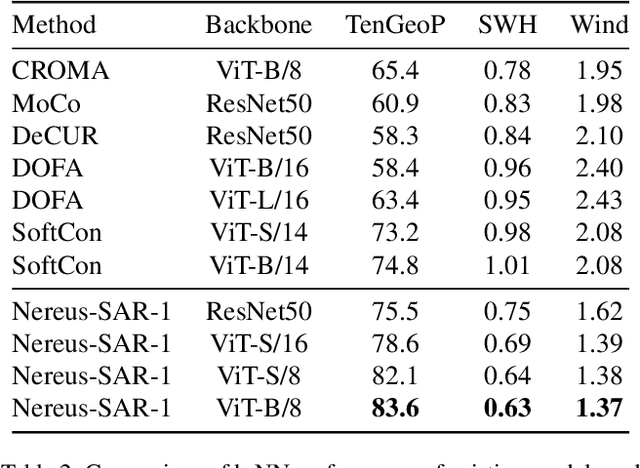

Abstract:Self-supervised learning (SSL) has enabled the development of vision foundation models for Earth Observation (EO), demonstrating strong transferability across diverse remote sensing tasks. While prior work has focused on network architectures and training strategies, the role of dataset curation, especially in balancing and diversifying pre-training datasets, remains underexplored. In EO, this challenge is amplified by the redundancy and heavy-tailed distributions common in satellite imagery, which can lead to biased representations and inefficient training. In this work, we propose a dynamic dataset pruning strategy designed to improve SSL pre-training by maximizing dataset diversity and balance. Our method iteratively refines the training set without requiring a pre-existing feature extractor, making it well-suited for domains where curated datasets are limited or unavailable. We demonstrate our approach on the Sentinel-1 Wave Mode (WV) Synthetic Aperture Radar (SAR) archive, a challenging dataset dominated by ocean observations. We train models from scratch on the entire Sentinel-1 WV archive spanning 10 years. Across three downstream tasks, our results show that dynamic pruning improves both computational efficiency and representation quality, leading to stronger transferability. We also release the weights of Nereus-SAR-1, the first model in the Nereus family, a series of foundation models for ocean observation and analysis using SAR imagery, at github.com/galeio-research/nereus-sar-models/.

OceanBench: The Sea Surface Height Edition

Sep 27, 2023

Abstract:The ocean profoundly influences human activities and plays a critical role in climate regulation. Our understanding has improved over the last decades with the advent of satellite remote sensing data, allowing us to capture essential quantities over the globe, e.g., sea surface height (SSH). However, ocean satellite data present challenges for information extraction due to their sparsity and irregular sampling, signal complexity, and noise. Machine learning (ML) techniques have demonstrated their capabilities in dealing with large-scale, complex signals. Therefore we see an opportunity for ML models to harness the information contained in ocean satellite data. However, data representation and relevant evaluation metrics can be the defining factors when determining the success of applied ML. The processing steps from the raw observation data to an ML-ready state and from model outputs to interpretable quantities require domain expertise, which can be a significant barrier to entry for ML researchers. OceanBench is a unifying framework that provides standardized processing steps that comply with domain-expert standards. It provides plug-and-play data and pre-configured pipelines for ML researchers to benchmark their models and a transparent configurable framework for researchers to customize and extend the pipeline for their tasks. In this work, we demonstrate the OceanBench framework through a first edition dedicated to SSH interpolation challenges. We provide datasets and ML-ready benchmarking pipelines for the long-standing problem of interpolating observations from simulated ocean satellite data, multi-modal and multi-sensor fusion issues, and transfer-learning to real ocean satellite observations. The OceanBench framework is available at github.com/jejjohnson/oceanbench and the dataset registry is available at github.com/quentinf00/oceanbench-data-registry.

Training neural mapping schemes for satellite altimetry with simulation data

Sep 19, 2023

Abstract:Satellite altimetry combined with data assimilation and optimal interpolation schemes has deeply renewed our ability to monitor sea surface dynamics. Recently, deep learning (DL) schemes have emerged as appealing solutions to address space-time interpolation problems. The scarcity of real altimetry datasets, in terms of space-time coverage of the sea surface, however impedes the training of state-of-the-art neural schemes on real-world case studies. Here, we leverage both simulations of ocean dynamics and satellite altimeters to train simulation-based neural mapping schemes for the sea surface height and demonstrate their performance for real altimetry datasets. We further analyze how the ocean simulation dataset used during the training phase impacts this performance. This experimental analysis covers the resolution, from eddy-present configurations to eddy-rich ones, forced simulations vs. reanalyses using data assimilation, and tide-free vs. tide-resolving simulations. Our benchmarking framework focuses on a Gulf Stream region for a realistic 5-altimeter constellation using NEMO ocean simulations and 4DVarNet mapping schemes. All simulation-based 4DVarNets outperform the operational observation-driven and reanalysis products, namely DUACS and GLORYS. The more realistic the ocean simulation dataset used during the training phase, the better the mapping. The best 4DVarNet mapping was trained from an eddy-rich and tide-free simulation dataset. It improves the resolved longitudinal scale from 151 kilometers for DUACS and 241 kilometers for GLORYS to 98 kilometers and reduces the root mean squared error (RMSE) by 23% and 61%. These results open research avenues for new synergies between ocean modelling and ocean observation using learning-based approaches.

Scale-aware neural calibration for wide swath altimetry observations

Feb 14, 2023

Abstract:Sea surface height (SSH) is a key geophysical parameter for monitoring and studying meso-scale surface ocean dynamics. For several decades, the mapping of SSH products at regional and global scales has relied on nadir satellite altimeters, which provide one-dimensional-only along-track satellite observations of the SSH. The Surface Water and Ocean Topography (SWOT) mission deploys a new sensor that acquires for the first time wide-swath two-dimensional observations of the SSH. This provides new means to observe the ocean at previously unresolved spatial scales. A critical challenge in exploiting SWOT data is the separation of the SSH from other signals present in the observations. In this paper, we propose a novel learning-based approach for this SWOT calibration problem. It benefits from calibrated nadir altimetry products and a scale-space decomposition adapted to SWOT swath geometry and the structure of the different processes in play. In a supervised setting, our method reaches the state-of-the-art residual error of ~1.4cm while proposing a correction on the entire spectral from 10km to 1000k

Learning Neural Optimal Interpolation Models and Solvers

Nov 14, 2022

Abstract:The reconstruction of gap-free signals from observation data is a critical challenge for numerous application domains, such as geoscience and space-based earth observation, when the available sensors or the data collection processes lead to irregularly-sampled and noisy observations. Optimal interpolation (OI), also referred to as kriging, provides a theoretical framework to solve interpolation problems for Gaussian processes (GP). Because the associated computational complexity rapidly becomes intractable for n-dimensional tensors and increasing numbers of observations, a rich literature has emerged to address this issue using ensemble methods, sparse schemes or iterative approaches. Here, we introduce a neural OI scheme. It exploits a variational formulation with convolutional auto-encoders and a trainable iterative gradient-based solver. Theoretically equivalent to the OI formulation, the trainable solver asymptotically converges to the OI solution when dealing with both stationary and non-stationary linear spatio-temporal GPs. Through a bi-level optimization formulation, we relate the learning step and the selection of the training loss to the theoretical properties of the OI, which is an unbiased estimator with minimal error variance. Numerical experiments for 2D+t synthetic GP datasets demonstrate the relevance of the proposed scheme to learn computationally-efficient and scalable OI models and solvers from data. As illustrated for a real-world interpolation problem for satellite-derived geophysical dynamics, the proposed framework also extends to non-linear and multimodal interpolation problems and significantly outperforms state-of-the-art interpolation methods when dealing with very high missing data rates.
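For readers unfamiliar with the classical OI baseline mentioned in this abstract, the following is a minimal NumPy sketch of closed-form optimal interpolation (kriging) on a 1D grid. It is not the neural scheme of the paper. The Gaussian covariance kernel, the noise level, and the names `gaussian_cov` and `optimal_interpolation` are illustrative assumptions.

```python
import numpy as np


def gaussian_cov(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential covariance between two sets of 1D locations (assumed prior)."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)


def optimal_interpolation(x_grid, x_obs, y_obs, noise_var=1e-2, length_scale=1.0):
    """Posterior mean and variance of a zero-mean GP on x_grid given noisy observations."""
    c_go = gaussian_cov(x_grid, x_obs, length_scale)           # grid-to-observation covariance
    c_oo = gaussian_cov(x_obs, x_obs, length_scale)            # observation covariance
    c_oo_noisy = c_oo + noise_var * np.eye(len(x_obs))         # add observation error covariance
    mean = c_go @ np.linalg.solve(c_oo_noisy, y_obs)           # OI estimate
    solved = np.linalg.solve(c_oo_noisy, c_go.T)               # shape (n_obs, n_grid)
    var = 1.0 - np.sum(c_go * solved.T, axis=1)                # prior variance (1.0) minus explained part
    return mean, var


# Toy example: three irregularly spaced observations of a sine curve.
x_grid = np.linspace(0.0, 10.0, 101)
x_obs = np.array([1.0, 4.0, 7.5])
y_obs = np.sin(x_obs)
mean, var = optimal_interpolation(x_grid, x_obs, y_obs, noise_var=1e-6)
```

The linear solve against the observation covariance is the step whose cost grows cubically with the number of observations, which is the scalability issue the abstract refers to.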

4DVarNet-SSH: end-to-end learning of variational interpolation schemes for nadir and wide-swath satellite altimetry

Nov 10, 2022

Abstract:The reconstruction of sea surface currents from satellite altimeter data is a key challenge in spatial oceanography, especially with the upcoming wide-swath SWOT (Surface Water and Ocean Topography) altimeter mission. Operational systems, however, generally fail to retrieve mesoscale dynamics for horizontal scales below 100km and time scales below 10 days. Here, we address this challenge through the 4DVarNet framework, an end-to-end neural scheme based on a variational data assimilation formulation. We introduce a parametrization of the 4DVarNet scheme dedicated to the space-time interpolation of satellite altimeter data. Within an observing system simulation experiment (NATL60), we demonstrate the relevance of the proposed approach both for nadir and nadir+swot altimeter configurations for two contrasted case-study regions in terms of upper ocean dynamics. We report relative improvement with respect to the operational optimal interpolation between 30% and 60% in terms of reconstruction error. Interestingly, for the nadir+swot altimeter configuration, we reach resolved space-time scales below 70km and 7days. The code is open-source to enable reproducibility and future collaborative developments. Beyond its applicability to large-scale domains, we also address uncertainty quantification issues and generalization properties of the proposed learning setting. We further discuss future research avenues and extensions to other ocean data assimilation and space oceanography challenges.

Multimodal 4DVarNets for the reconstruction of sea surface dynamics from SST-SSH synergies

Jul 04, 2022

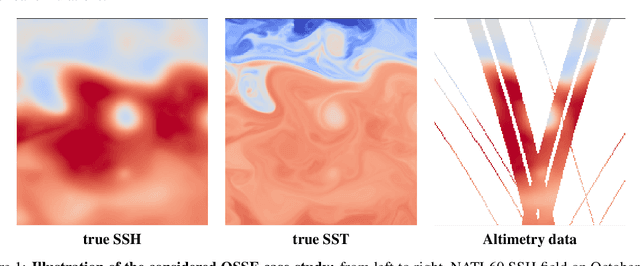

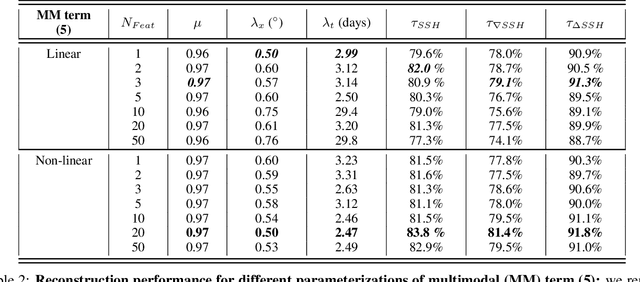

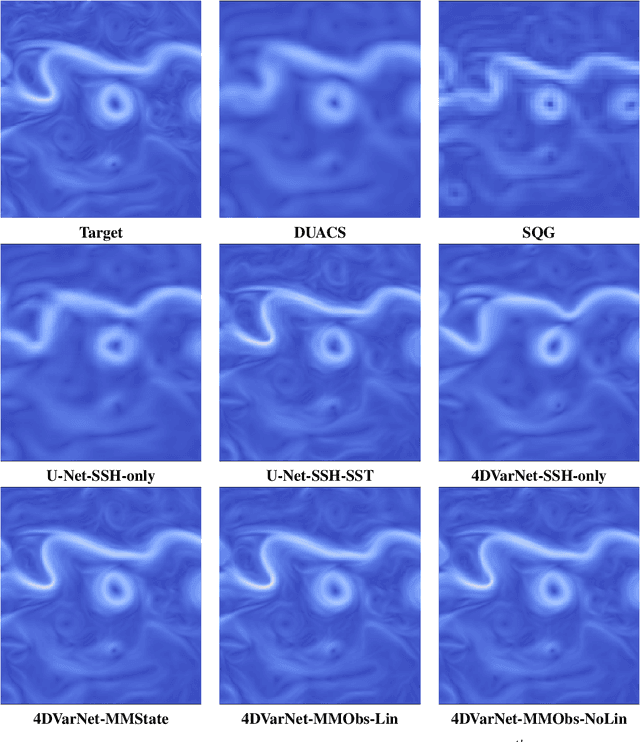

Abstract:Due to the irregular space-time sampling of sea surface observations, the reconstruction of sea surface dynamics is a challenging inverse problem. While satellite altimetry provides a direct observation of the sea surface height (SSH), which relates to the divergence-free component of sea surface currents, the associated sampling pattern prevents the retrieval of fine-scale sea surface dynamics, typically below a 10-day time scale. By contrast, other satellite sensors provide higher-resolution observations of sea surface tracers such as sea surface temperature (SST). Multimodal inversion schemes then arise as an appealing strategy. Though theoretical evidence supports the existence of an explicit relationship between sea surface temperature and sea surface dynamics under specific dynamical regimes, the generalization to the variety of upper ocean dynamical regimes is complex. Here, we investigate this issue from a physics-informed learning perspective. We introduce a trainable multimodal inversion scheme for the reconstruction of sea surface dynamics from multi-source satellite-derived observations. The proposed 4DVarNet schemes combine a variational formulation involving trainable observation and a priori terms with a trainable gradient-based solver. We report an application to the reconstruction of the divergence-free component of sea surface dynamics from satellite-derived SSH and SST data. An observing system simulation experiment for a Gulf Stream region supports the relevance of our approach compared with state-of-the-art schemes. We report relative improvement greater than 50% compared with the operational altimetry product in terms of root mean square error and resolved space-time scales. We further discuss the application and extension of the proposed approach for the reconstruction and forecasting of geophysical dynamics from irregularly-sampled satellite observations.

Joint calibration and mapping of satellite altimetry data using trainable variational models

Oct 07, 2021

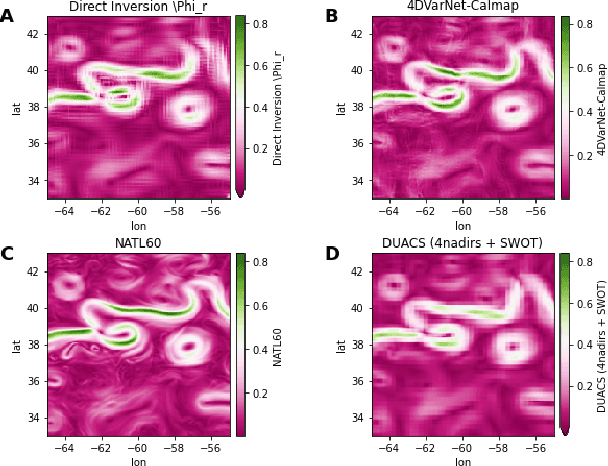

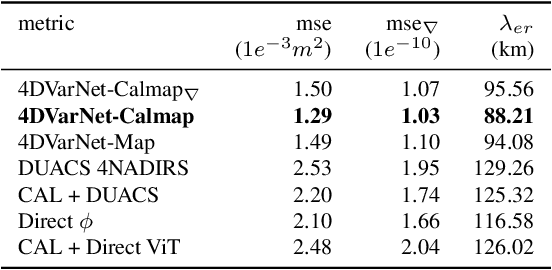

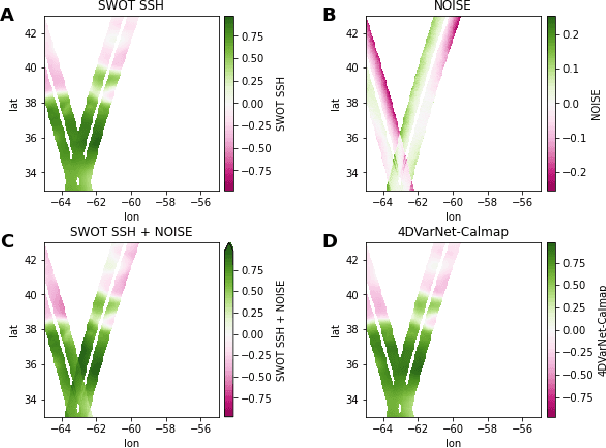

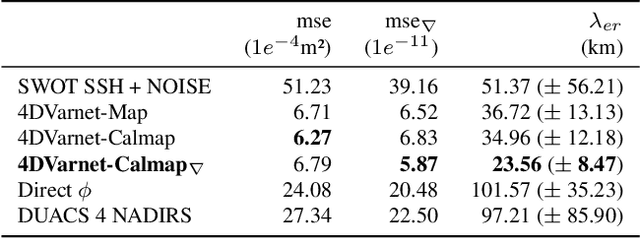

Abstract:Satellite radar altimeters are a key source of observation of ocean surface dynamics. However, current sensor technology and mapping techniques do not yet allow scales smaller than 100km to be resolved systematically. With their new sensors, upcoming wide-swath altimeter missions such as SWOT should help resolve finer scales. Current mapping techniques rely on the quality of the input data, which is why the raw data go through multiple preprocessing stages before being used. Those calibration stages are improved and refined over many years and represent a challenge when a new type of sensor starts acquiring data. Here we show how a data-driven variational data assimilation framework could be used to jointly learn a calibration operator and an interpolator from non-calibrated data. The proposed framework significantly outperforms the operational state-of-the-art mapping pipeline and truly benefits from wide-swath data to resolve finer scales on the global map as well as in the SWOT sensor geometry.

Reversible designs for extreme memory cost reduction of CNN training

Oct 24, 2019

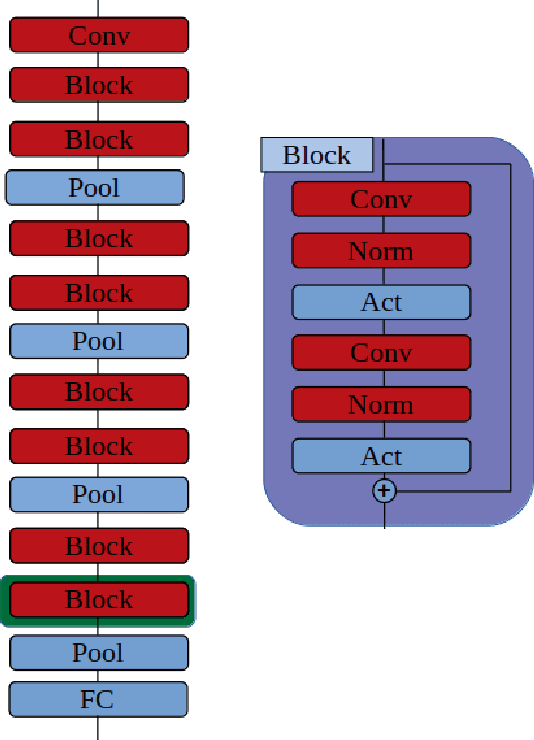

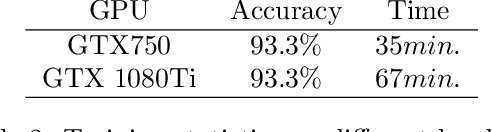

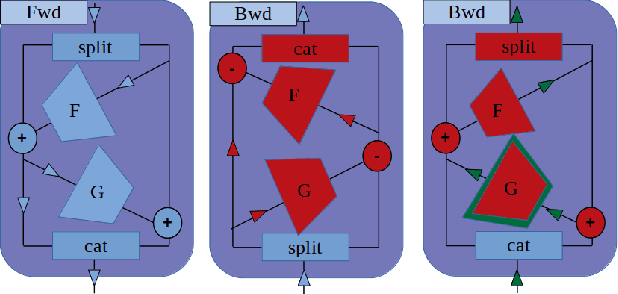

Abstract:Training Convolutional Neural Networks (CNNs) is a resource-intensive task that requires specialized hardware for efficient computation. One of the most limiting bottlenecks of CNN training is the memory cost associated with storing the activation values of hidden layers needed for the computation of the weight gradients during the backward pass of the backpropagation algorithm. Recently, reversible architectures have been proposed to reduce the memory cost of training large CNNs by reconstructing the input activation values of hidden layers from their output during the backward pass, circumventing the need to accumulate these activations in memory during the forward pass. In this paper, we push this idea to the extreme and analyze reversible network designs yielding minimal training memory footprint. We investigate the propagation of numerical errors in long chains of invertible operations and analyze their effect on training. We introduce the notion of pixel-wise memory cost to characterize the memory footprint of model training, and propose a new model architecture able to efficiently train arbitrarily deep neural networks with a minimum memory cost of 352 bytes per input pixel. This new kind of architecture enables training large neural networks on very limited memory, opening the door for neural network training on embedded devices or non-specialized hardware. For instance, we demonstrate training of our model to 93.3% accuracy on the CIFAR10 dataset within 67 minutes on a low-end Nvidia GTX750 GPU with only 1GB of memory.
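The idea of reconstructing a layer's input from its output can be illustrated with an additive-coupling block, the construction used in earlier reversible residual networks. The sketch below is a generic NumPy illustration under assumed shapes and a toy `tanh` sub-network. It is not the architecture proposed in the paper, and the names `residual_fn`, `forward_block`, and `inverse_block` are hypothetical.

```python
import numpy as np


def residual_fn(x, w):
    """Toy sub-network used inside the coupling (assumed: one dense layer with tanh)."""
    return np.tanh(x @ w)


def forward_block(x1, x2, wf, wg):
    """Additive coupling: the output alone is sufficient to recover the input."""
    y1 = x1 + residual_fn(x2, wf)
    y2 = x2 + residual_fn(y1, wg)
    return y1, y2


def inverse_block(y1, y2, wf, wg):
    """Recompute the block input from its output, in reverse order of the forward pass."""
    x2 = y2 - residual_fn(y1, wg)
    x1 = y1 - residual_fn(x2, wf)
    return x1, x2


# Chain 50 blocks, then walk back through them without having stored any activation.
rng = np.random.default_rng(0)
dim, depth = 8, 50
weights = [(0.5 * rng.normal(size=(dim, dim)), 0.5 * rng.normal(size=(dim, dim)))
           for _ in range(depth)]
x1_in, x2_in = rng.normal(size=(4, dim)), rng.normal(size=(4, dim))

h1, h2 = x1_in, x2_in
for wf, wg in weights:
    h1, h2 = forward_block(h1, h2, wf, wg)

r1, r2 = h1, h2
for wf, wg in reversed(weights):
    r1, r2 = inverse_block(r1, r2, wf, wg)

# Floating-point round-off makes the reconstruction approximate rather than exact.
max_error = max(np.abs(r1 - x1_in).max(), np.abs(r2 - x2_in).max())
```

The residual `max_error` is the kind of numerical error whose accumulation over long chains of invertible operations the abstract says the authors analyze.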
