Juan M. Gandarias

Robotics and Mechatronics lab, Systems Engineering and Automation Department, University of Malaga, Malaga, Spain

A Multipurpose Interface for Close- and Far-Proximity Control of Mobile Collaborative Robots

Jun 04, 2024

Abstract:This letter introduces an innovative visuo-haptic interface to control Mobile Collaborative Robots (MCR). Thanks to a passive detachable mechanism, the interface can be attached/detached from a robot, offering two control modes: local control (attached) and teleoperation (detached). These modes are integrated with a robot whole-body controller and presented in a unified close- and far-proximity control framework for MCR. The earlier introduction of the haptic component in this interface enabled users to execute intricate loco-manipulation tasks via admittance-type control, effectively decoupling task dynamics and enhancing human capabilities. In contrast, this ongoing work proposes a novel design that integrates a visual component. This design utilizes Visual-Inertial Odometry (VIO) for teleoperation, estimating the interface's pose through stereo cameras and an Inertial Measurement Unit (IMU). The estimated pose serves as the reference for the robot's end-effector in teleoperation mode. Hence, the interface offers complete flexibility and adaptability, enabling any user to operate an MCR seamlessly without needing expert knowledge. In this letter, we primarily focus on the new visual feature, and first present a performance evaluation of different VIO-based methods for teleoperation. Next, the interface's usability is analyzed in a home-care application and compared with an alternative based on a commercial MoCap system. Results show comparable performance in terms of accuracy, completion time, and usability. Nevertheless, the proposed interface is low-cost, poses minimal wearability constraints, and can be used anywhere and anytime without needing external devices or additional equipment, offering a versatile and accessible solution for teleoperation.
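
The admittance-type control mentioned above maps the force a user applies to the interface into a motion command. A minimal single-axis sketch, assuming a virtual mass-damper model M dv/dt + D v = F integrated with explicit Euler; the function names and the values of M, D and dt are illustrative and not taken from the letter:

```python
def admittance_step(v, force, mass, damping, dt):
    """One explicit-Euler step of the 1-DoF admittance law M*dv/dt + D*v = F.

    Returns the updated commanded velocity (m/s) given the measured force (N).
    """
    accel = (force - damping * v) / mass
    return v + accel * dt


def simulate_constant_push(force, mass=10.0, damping=20.0, dt=0.01, steps=500):
    """Apply a constant user force and return the final commanded velocity."""
    v = 0.0
    for _ in range(steps):
        v = admittance_step(v, force, mass, damping, dt)
    return v


if __name__ == "__main__":
    # A steady 10 N push settles near F/D = 0.5 m/s (time constant M/D = 0.5 s).
    print(simulate_constant_push(10.0))
```

Raising D makes the robot feel heavier to push, while lowering M makes it respond faster; a whole-body controller would apply such a law per Cartesian axis, which this sketch does not attempt.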

Robot-Assisted Navigation for Visually Impaired through Adaptive Impedance and Path Planning

Oct 23, 2023

Abstract:This paper presents a framework to navigate visually impaired people through unfamiliar environments by means of a mobile manipulator. The Human-Robot system consists of three key components: a mobile base, a robotic arm, and the human subject, who is guided by the robotic arm by physically coupling their hand with the cobot's end-effector. Given a goal from the user, these components traverse a collision-free set of waypoints in a coordinated manner, while avoiding static and dynamic obstacles through an obstacle avoidance unit and a novel human guidance planner. To this end, we also present a leg-tracking algorithm that uses 2D LiDAR sensors integrated into the mobile base to monitor the human pose. Additionally, we introduce an adaptive pulling planner responsible for guiding the individual back to the intended path if they veer off course. This is achieved by setting a target position for the arm's end-effector and dynamically adjusting the impedance parameters in real time through an impedance tuning unit. To validate the framework, we present a set of experiments in laboratory settings with 12 healthy blindfolded subjects, as well as a proof-of-concept demonstration in a real-world scenario.
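
The abstract does not give the tuning law used by the impedance tuning unit. As a hedged illustration only, assuming a 1-DoF spring-damper coupling and a linear stiffness schedule (both hypothetical, as are the function names and gains), the guidance can stiffen as the person drifts further from the path:

```python
def adapt_stiffness(deviation, k_min=50.0, k_max=400.0, d_max=0.5):
    """Hypothetical schedule: stiffness (N/m) grows linearly with the
    absolute deviation (m) from the planned path, saturating at d_max."""
    ratio = min(abs(deviation) / d_max, 1.0)
    return k_min + ratio * (k_max - k_min)


def pulling_force(hand_pos, target_pos, deviation, hand_vel=0.0, damping=30.0):
    """1-DoF spring-damper force (N) pulling the hand toward the target
    end-effector position, using the adapted stiffness."""
    k = adapt_stiffness(deviation)
    return k * (target_pos - hand_pos) - damping * hand_vel


if __name__ == "__main__":
    # Hand 10 cm short of the target while 25 cm off the path.
    print(pulling_force(hand_pos=0.0, target_pos=0.1, deviation=0.25))
```

A soft coupling near the path keeps the guidance comfortable, while the stiffer spring far from it produces a stronger pull back on course.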

The Bridge between Xsens Motion-Capture and Robot Operating System: Enabling Robots with Online 3D Human Motion Tracking

Jun 30, 2023

Abstract:This document introduces the bridge between the leading inertial motion-capture systems for 3D human tracking and the most widely used robotics software framework. 3D kinematic data provided by Xsens are translated into ROS messages to make them usable by robots, and a Unified Robot Description Format (URDF) model of the human kinematics is generated, which can be run and displayed in the ROS 3D visualizer, RViz. The code implementing the bridge to ROS is a ROS package called xsens_mvn_ros and is available on GitHub at https://github.com/hrii-iit/xsens_mvn_ros. The main documentation can be found at https://hrii-iit.github.io/xsens_mvn_ros/index.html

Bayesian and Neural Inference on LSTM-based Object Recognition from Tactile and Kinesthetic Information

Jun 10, 2023

Abstract:Recent advances in the field of intelligent robotic manipulation aim to provide robotic hands with touch sensitivity. Haptic perception encompasses the sensing modalities encountered in the sense of touch (e.g., tactile and kinesthetic sensations). This letter focuses on multimodal object recognition and proposes analytical and data-driven methodologies to fuse tactile- and kinesthetic-based classification results. The procedure is as follows: a three-finger actuated gripper with an integrated high-resolution tactile sensor performs squeeze-and-release Exploratory Procedures (EPs). The tactile images and the kinesthetic information acquired using angular sensors on the finger joints constitute the time-series datasets of interest. Each temporal dataset is fed to a Long Short-Term Memory (LSTM) Neural Network, which is trained to classify in-hand objects. The LSTMs provide an estimate of the posterior probability of each object given the corresponding measurements, which, after fusion, allows the object to be estimated through Bayesian and Neural inference approaches. An experiment with 36 classes is carried out to evaluate and compare the performance of the fused, tactile, and kinesthetic perception systems. The results show that the Bayesian-based classifiers improve object recognition capabilities and outperform the Neural-based approach.
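
A common way to combine two classifiers' posteriors in a Bayesian manner is the product rule under a conditional-independence assumption. The sketch below treats each LSTM's softmax output as a posterior and assumes the two modalities are independent given the class; this is a standard textbook formulation with illustrative names, not necessarily the exact inference scheme of the letter.

```python
def fuse_posteriors(p_tactile, p_kinesthetic, prior=None):
    """Fuse two per-class posteriors assuming the tactile and kinesthetic
    measurements are conditionally independent given the object class:
    p(c | t, k) is proportional to p(c | t) * p(c | k) / p(c)."""
    n = len(p_tactile)
    if prior is None:
        prior = [1.0 / n] * n  # uniform class prior
    scores = [pt * pk / pc for pt, pk, pc in zip(p_tactile, p_kinesthetic, prior)]
    total = sum(scores)
    return [s / total for s in scores]  # renormalise to a distribution


def predict(posterior):
    """Index of the most probable class (MAP estimate)."""
    return max(range(len(posterior)), key=posterior.__getitem__)


if __name__ == "__main__":
    tactile = [0.6, 0.3, 0.1]      # e.g. softmax output of the tactile LSTM
    kinesthetic = [0.5, 0.1, 0.4]  # e.g. softmax output of the kinesthetic LSTM
    fused = fuse_posteriors(tactile, kinesthetic)
    print(predict(fused), fused)
```

With a uniform prior the division by p(c) is a constant and the rule reduces to multiplying and renormalising the two posteriors.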

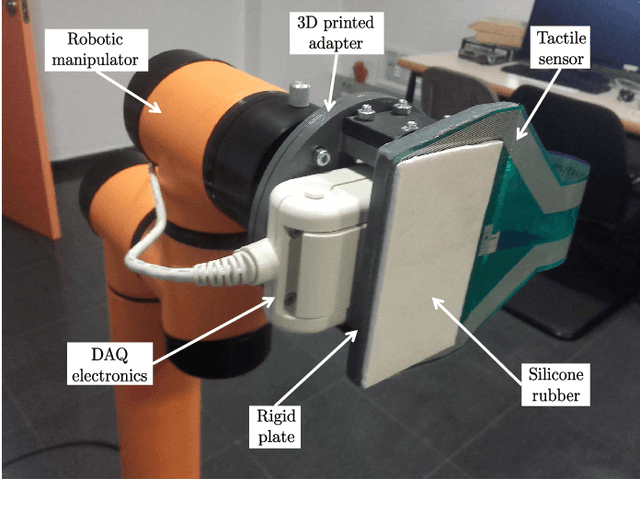

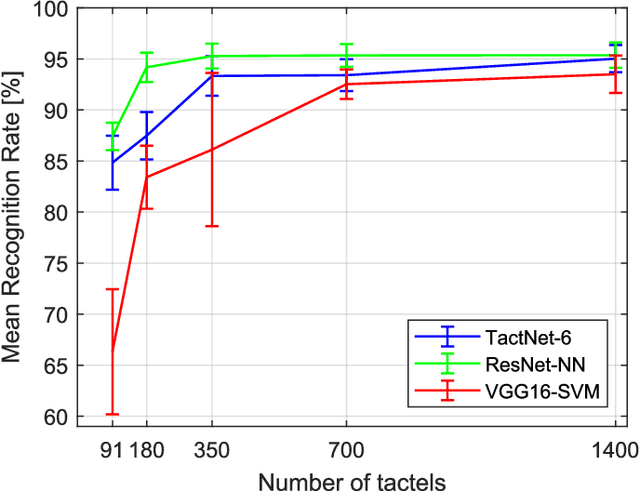

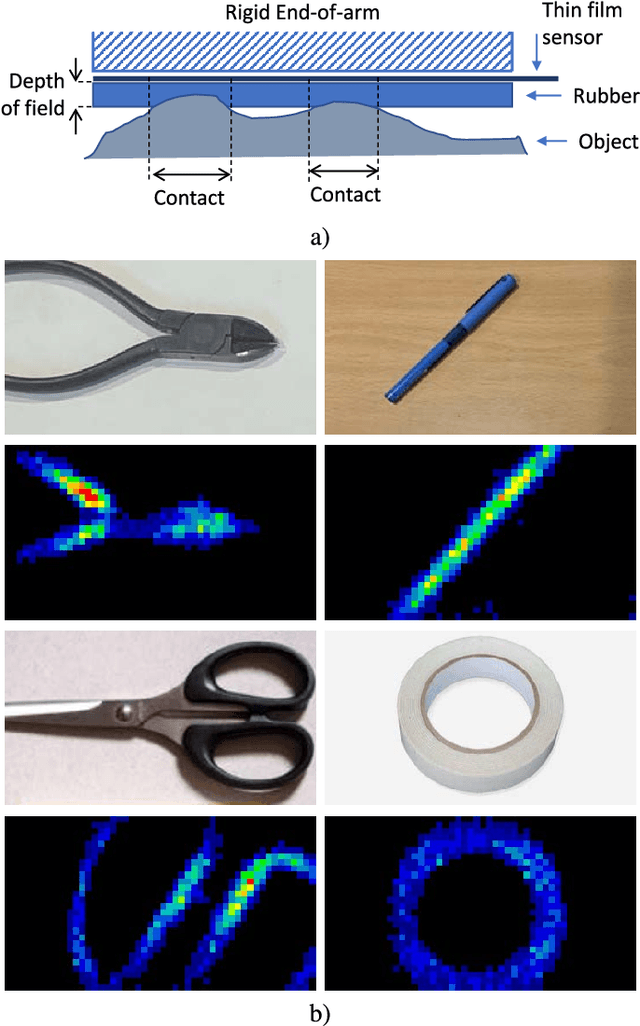

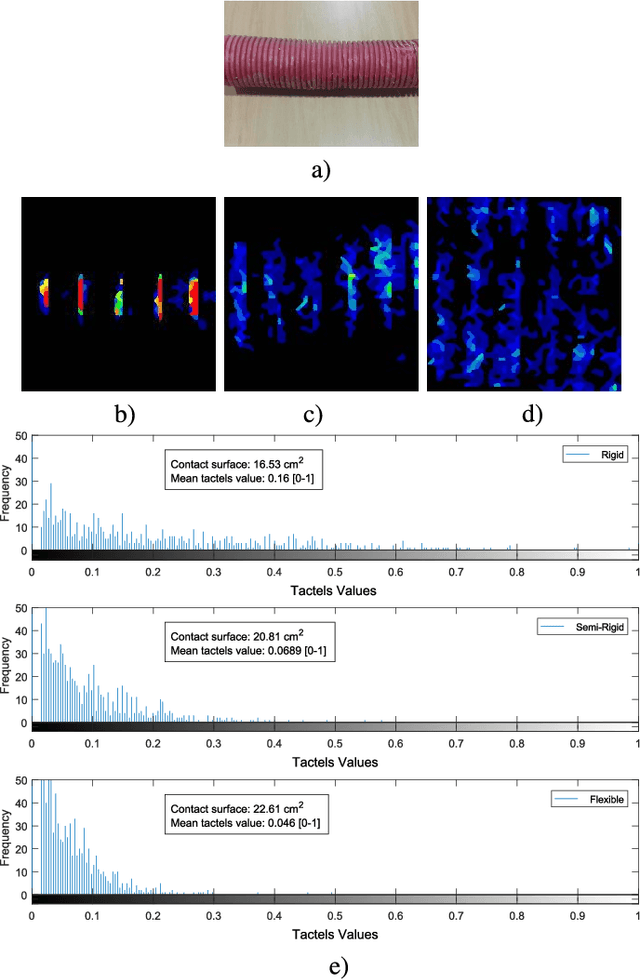

CNN-based Methods for Object Recognition with High-Resolution Tactile Sensors

May 21, 2023

Abstract:Novel high-resolution pressure-sensor arrays allow pressure readings to be treated as standard images. Computer vision algorithms and methods such as Convolutional Neural Networks (CNN) can then be used to identify contacted objects. In this paper, a high-resolution tactile sensor has been attached to a robotic end-effector to identify contacted objects. Two CNN-based approaches have been employed to classify pressure images. These methods include a transfer learning approach using a CNN pre-trained on an RGB-image dataset and a custom-made CNN (TactNet) trained from scratch with tactile information. The transfer learning approach can be carried out by retraining the classification layers of the network or by replacing these layers with an SVM. Overall, 11 configurations based on these methods have been tested: 8 transfer learning-based and 3 TactNet-based. Moreover, a study of the performance of the methods and a comparative discussion with the current state of the art in tactile object recognition are presented.

Markerless 3D human pose tracking through multiple cameras and AI: Enabling high accuracy, robustness, and real-time performance

Mar 31, 2023

Abstract:Tracking 3D human motion in real time is crucial for numerous applications across many fields. Traditional approaches involve attaching artificial fiducial objects or sensors to the body, limiting their usability and comfort of use and consequently narrowing their application fields. Recent advances in Artificial Intelligence (AI) have allowed for markerless solutions. However, most of these methods operate in 2D, while those providing 3D solutions compromise accuracy and real-time performance. To address this challenge and unlock the potential of visual pose estimation methods in real-world scenarios, we propose a markerless framework that combines multi-camera views and 2D AI-based pose estimation methods to track 3D human motion. Our approach integrates a Weighted Least Squares (WLS) algorithm that computes 3D human motion from multiple 2D pose estimations provided by an AI-driven method. The method is integrated within the Open-VICO framework, allowing both simulation and real-world execution. Several experiments have been conducted, showing high accuracy and real-time performance, demonstrating the high level of readiness for real-world applications and the potential to revolutionize human motion capture.
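
The WLS step can be illustrated with a ray-based formulation, assumed here for clarity: each calibrated camera back-projects a 2D keypoint into a 3D ray, and the 3D joint is the point closest to all rays, each ray weighted, for instance, by the 2D detector's confidence. The Open-VICO implementation may use a different but related formulation (e.g., DLT-style linear equations); the function names are illustrative.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate_wls(centers, directions, weights):
    """Weighted least-squares 3D point closest to a set of camera rays.

    Minimises sum_i w_i * ||(I - u_i u_i^T)(X - c_i)||^2, where c_i is the
    camera centre and u_i the unit ray through the detected 2D keypoint.
    Setting the gradient to zero gives the 3x3 normal equations A X = b.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d, w in zip(centers, directions, weights):
        norm = math.sqrt(sum(x * x for x in d))
        u = [x / norm for x in d]
        for i in range(3):
            for j in range(3):
                # Entry (i, j) of the projector orthogonal to the ray.
                p = (1.0 if i == j else 0.0) - u[i] * u[j]
                A[i][j] += w * p
                b[i] += w * p * c[j]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("need at least two non-parallel rays with positive weight")
    # Cramer's rule: replace column k of A by b.
    return [
        _det3([[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]) / det
        for k in range(3)
    ]


if __name__ == "__main__":
    # Three hypothetical cameras whose rays all pass through (1, 2, 3).
    centers = [(0, 0, 0), (5, 0, 0), (0, 5, 0)]
    directions = [(1, 2, 3), (-4, 2, 3), (1, -3, 3)]
    print(triangulate_wls(centers, directions, [1.0, 0.5, 0.8]))
```

With noisy detections the rays no longer intersect, and the weights decide how much each view pulls the estimate toward its own ray.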

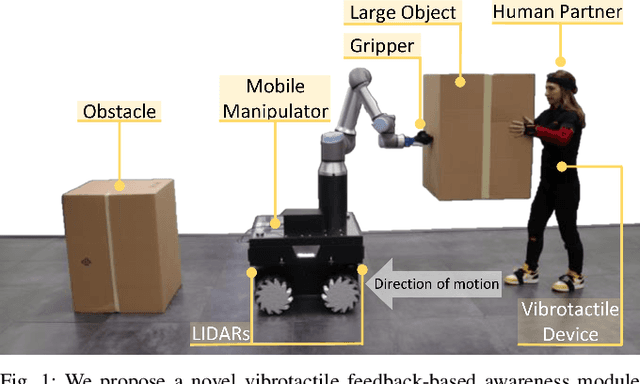

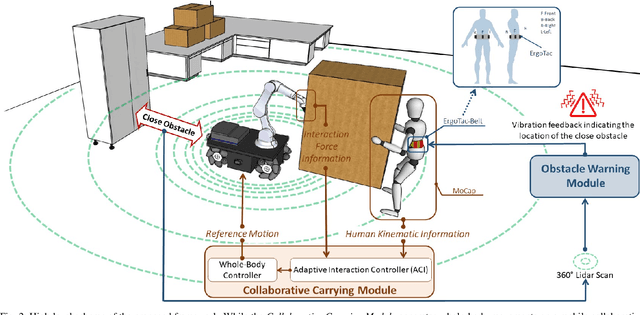

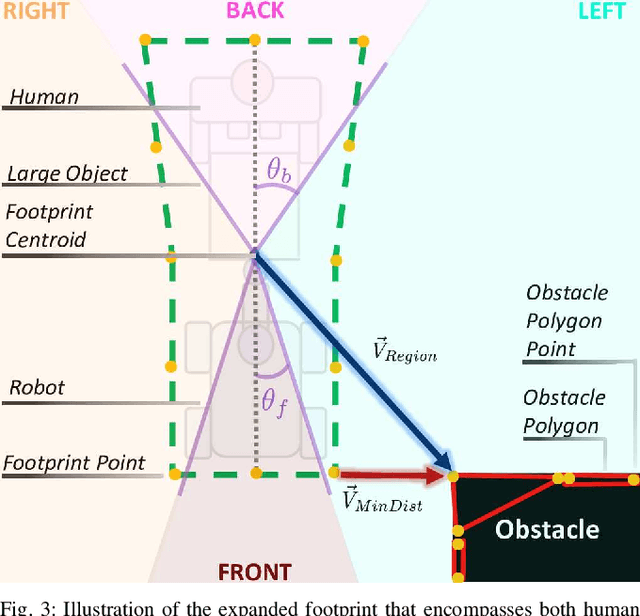

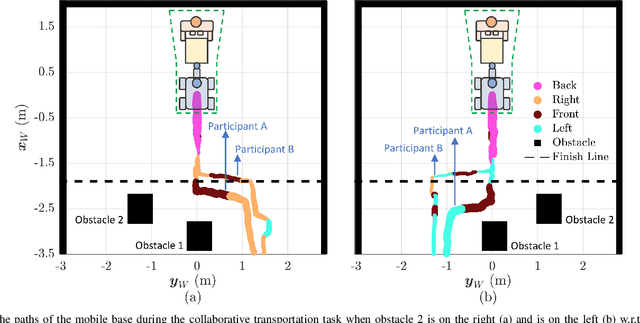

Enhancing Human-Robot Collaboration Transportation through Obstacle-Aware Vibrotactile Feedback

Feb 06, 2023

Abstract:Transporting large and heavy objects can benefit from Human-Robot Collaboration (HRC), increasing the contribution of robots to our daily tasks and reducing the risk of injuries to the human operator. This approach usually casts the human collaborator as the leader and the robot as the follower. Hence, it is essential for the leader to be aware of the environmental situation. However, when transporting a large object, the operator's situational awareness can be compromised, as the object may occlude different parts of the environment. This paper proposes a novel haptic-based environmental awareness module for a collaborative transportation framework that informs the human operator about surrounding obstacles. The robot uses two LiDARs to detect obstacles in its surroundings. The warning module alerts the operator through a haptic belt with four vibrotactile devices that provide feedback about the location and proximity of the obstacles. By enhancing the operator's awareness of the surroundings, the proposed module improves the safety of the human-robot team in co-carrying scenarios by preventing collisions. Experiments were conducted with two non-expert subjects in two different situations. The results show that the human partner can successfully lead the co-transportation system in an unknown environment with hidden obstacles thanks to the haptic feedback.
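
As an illustration of how obstacle location and proximity might be encoded on a four-motor belt, the sketch below assumes obstacle bearings and distances relative to the operator (e.g., already extracted from the LiDAR scans), a counter-clockwise motor layout, and a linear intensity ramp between two hypothetical distance thresholds. None of these choices is specified in the abstract, and the names are illustrative.

```python
import math

# Assumed layout: four vibrotactile motors around the waist, indexed
# counter-clockwise from the front (bearing 0 rad = straight ahead).
MOTOR_NAMES = ("front", "left", "back", "right")


def belt_command(obstacles, r_warn=1.5, r_stop=0.3):
    """Map obstacles, given as (bearing_rad, distance_m) pairs, to a vibration
    level in [0, 1] for each of the four motors."""
    levels = [0.0] * 4
    for bearing, dist in obstacles:
        # Nearest motor: quantise the bearing into 90-degree sectors.
        idx = round((bearing % (2 * math.pi)) / (math.pi / 2)) % 4
        if dist >= r_warn:
            level = 0.0
        elif dist <= r_stop:
            level = 1.0
        else:
            # Linear ramp: the closer the obstacle, the stronger the vibration.
            level = (r_warn - dist) / (r_warn - r_stop)
        levels[idx] = max(levels[idx], level)  # keep the most urgent obstacle
    return levels


if __name__ == "__main__":
    # Obstacle 0.9 m ahead and another very close on the left.
    for name, level in zip(MOTOR_NAMES, belt_command([(0.0, 0.9), (math.pi / 2, 0.2)])):
        print(name, round(level, 2))
```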

An Open Tele-Impedance Framework to Generate Large Datasets for Contact-Rich Tasks in Robotic Manipulation

Sep 21, 2022

Abstract:Using large datasets in machine learning has led to outstanding results, in some cases outperforming humans in tasks that were believed impossible for machines. However, achieving human-level performance in physically interactive tasks, e.g., contact-rich robotic manipulation, remains a major challenge. It is well known that regulating the Cartesian impedance is of utmost importance for the successful execution of such operations. Approaches like Reinforcement Learning (RL) can be a promising paradigm for solving such problems. More precisely, approaches that use task-agnostic expert demonstrations to bootstrap learning when solving new tasks have great potential, since they can exploit large datasets. However, existing data collection systems are expensive, complex, or do not allow for impedance regulation. This work represents a first step towards a data collection framework suitable for collecting large datasets of impedance-based expert demonstrations compatible with the RL problem formulation, where a novel action space is used. The framework is designed according to requirements identified after an extensive analysis of available data collection frameworks for robotic manipulation. The result is a low-cost and open-access tele-impedance framework that enables human experts to demonstrate contact-rich tasks.
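
The Cartesian impedance that tele-impedance lets the expert regulate enters the robot controller through a spring-damper law. A minimal translational sketch, assuming diagonal stiffness and apparent mass and a damping rule based on a fixed damping ratio; the rule, the names and the numbers are assumptions and do not describe the framework's action space:

```python
import math


def damping_from_stiffness(k, mass=1.0, zeta=0.7):
    """Damping (N*s/m) giving damping ratio zeta for stiffness k and mass."""
    return 2.0 * zeta * math.sqrt(k * mass)


def impedance_force(pos, vel, target, stiffness, mass=(1.0, 1.0, 1.0), zeta=0.7):
    """Per-axis Cartesian impedance force F_i = K_i (x_d,i - x_i) - D_i v_i
    with diagonal stiffness and damping (translation only)."""
    force = []
    for x, v, xd, k, m in zip(pos, vel, target, stiffness, mass):
        d = damping_from_stiffness(k, m, zeta)
        force.append(k * (xd - x) - d * v)
    return force


if __name__ == "__main__":
    # Stiff along x, compliant along y and z: a 1 cm offset in x yields ~10 N.
    print(impedance_force([0, 0, 0], [0, 0, 0], [0.01, 0, 0], [1000.0, 200.0, 200.0]))
```

In a tele-impedance demonstration both the pose target and the stiffness are set by the operator, so a recorded sample would pair them; how the framework encodes this as an RL action is not detailed in the abstract.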

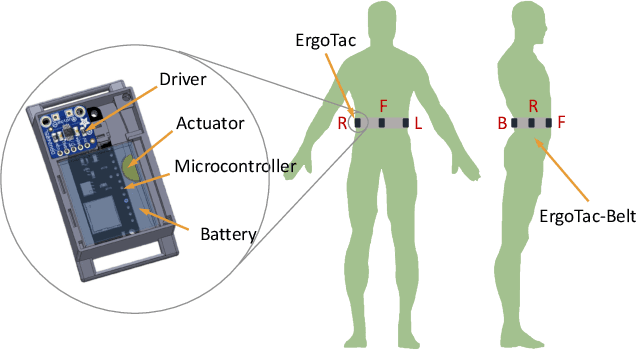

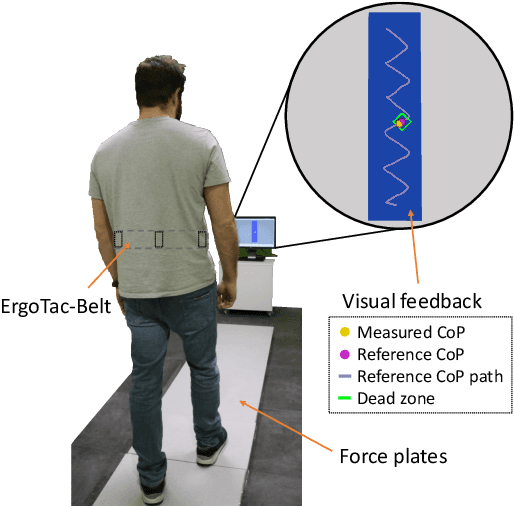

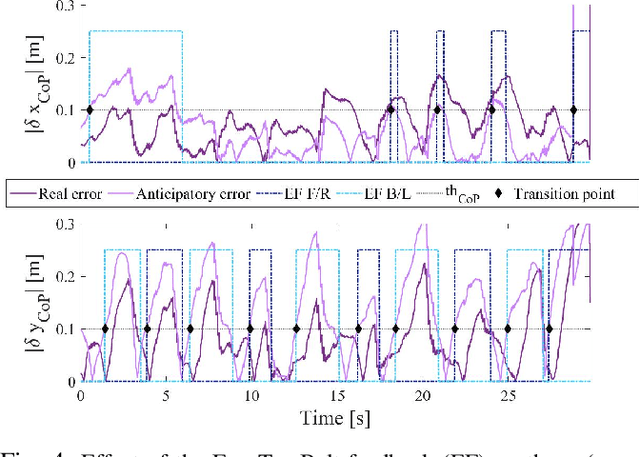

ErgoTac-Belt: Anticipatory Vibrotactile Feedback to Lead Centre of Pressure during Walking

Jul 08, 2022

Abstract:Balance and gait disorders are the second leading cause of falls, which, along with consequent injuries, are reported as major public health problems all over the world. For patients who do not require mechanical support, vibrotactile feedback interfaces have proven to be a successful approach to restoring balance. Most existing strategies assess trunk or head tilt and velocity, or plantar forces, and are limited to the analysis of stance. However, central to balance control is the need to keep the body's centre of pressure (CoP) within feasible limits of the support polygon (SP), as in standing, or on track to a new SP, as in walking. Hence, this paper presents an exploratory study investigating whether vibrotactile feedback can be employed to lead the human CoP during walking. The ErgoTac-Belt vibrotactile device is introduced to instruct users about the direction to take, along both the antero-posterior and medio-lateral axes. An anticipatory strategy is adopted to give users enough time to react to the stimuli. Experiments on ten healthy subjects demonstrated the promising capability of the proposed device to guide the users' CoP along a predefined reference path, with performance similar to that achieved with visual feedback. Future developments will investigate our strategy and device in guiding the CoP of elderly people or individuals with vestibular impairments, who may not be aware of, or able to figure out, a safe and ergonomic CoP path.
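
The anticipatory idea can be sketched as comparing the current CoP with the reference a short time ahead rather than at the current instant. The sign convention, the deadband, the cue names and the look-ahead in samples (e.g., lead time multiplied by the sampling rate) are assumptions for illustration, not the parameters used in the study:

```python
def anticipatory_cue(cop, reference, k, lead_samples, deadband=0.02):
    """Return the direction cues for the belt at sample k.

    cop: current CoP as (ap, ml) in metres (ap > 0 forward, ml > 0 left).
    reference: list of reference CoP points (ap, ml), one per sample.
    lead_samples: how far ahead to look, so cues arrive before they are needed.
    """
    j = min(k + lead_samples, len(reference) - 1)  # clamp at end of path
    e_ap = reference[j][0] - cop[0]  # antero-posterior error
    e_ml = reference[j][1] - cop[1]  # medio-lateral error
    cues = []
    if abs(e_ap) > deadband:
        cues.append("forward" if e_ap > 0 else "backward")
    if abs(e_ml) > deadband:
        cues.append("left" if e_ml > 0 else "right")
    return cues


if __name__ == "__main__":
    reference = [(0.0, 0.0), (0.05, 0.0), (0.10, -0.05)]
    # Looking two samples ahead, the user is cued forward and to the right.
    print(anticipatory_cue((0.0, 0.0), reference, k=0, lead_samples=2))
```

A longer look-ahead gives the user more reaction time at the cost of cueing corrections that may not yet be needed.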

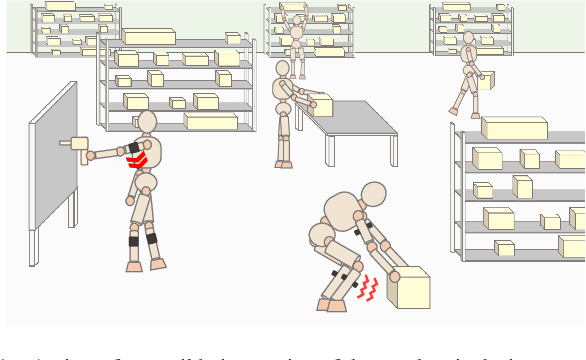

Performance Analysis of Vibrotactile and Slide-and-Squeeze Haptic Feedback Devices for Limbs Postural Adjustment

Jul 08, 2022

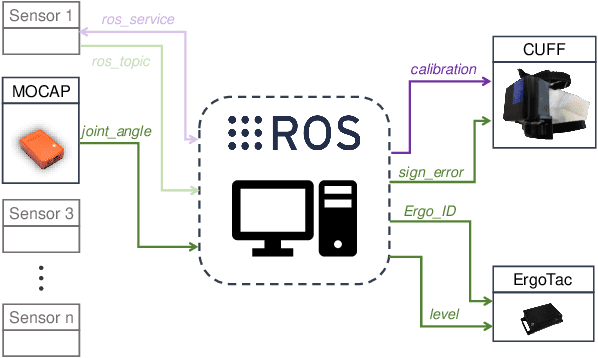

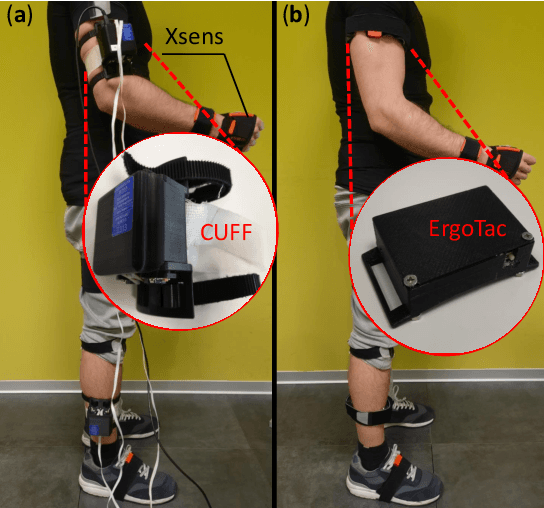

Abstract:Recurrent or sustained awkward body postures are among the most frequently cited risk factors for the development of work-related musculoskeletal disorders (MSDs). To prevent workers from adopting harmful configurations and also to guide them toward more ergonomic ones, wearable haptic devices may be the ideal solution. In this paper, a vibrotactile unit, called ErgoTac, and a slide-and-squeeze unit, called CUFF, were evaluated in a limb postural correction setting. Their capability of providing single-joint (shoulder or knee) and multi-joint (shoulder and knee at once) guidance was compared in twelve healthy subjects, using quantitative task-related metrics and subjective quantitative evaluation. An integrated environment was also built to ease communication and data sharing between the involved sensor and feedback systems. Results show good acceptability and intuitiveness for both devices. ErgoTac appeared to be the more suitable feedback device for the shoulder, while the CUFF may be the more effective solution for the knee. This comparative study, although preliminary, lays the groundwork for the potential integration of the two devices for effective whole-body postural correction, with the aim of developing a feedback and assistive apparatus to increase workers' awareness of risky working conditions and therefore prevent MSDs.
