Wansoo Kim

Robotics Department, Hanyang University ERICA, Republic of Korea

Multitask Learning for Multiple Recognition Tasks: A Framework for Lower-limb Exoskeleton Robot Applications

Jun 26, 2023

Abstract:To control the lower-limb exoskeleton robot effectively, it is essential to accurately recognize user status and environmental conditions. Previous studies have typically addressed these recognition challenges through independent models for each task, resulting in an inefficient model development process. In this study, we propose a Multitask learning approach that can address multiple recognition challenges simultaneously. This approach can enhance data efficiency by enabling knowledge sharing between each recognition model. We demonstrate the effectiveness of this approach using Gait phase recognition (GPR) and Terrain classification (TC) as examples, the most conventional recognition tasks in lower-limb exoskeleton robots. We first created a high-performing GPR model that achieved a Root mean square error (RMSE) value of 2.345 $\pm$ 0.08 and then utilized its knowledge-sharing backbone feature network to learn a TC model with an extremely limited dataset. Using a limited dataset for the TC model allows us to validate the data efficiency of our proposed Multitask learning approach. We compared the accuracy of the proposed TC model against other TC baseline models. The proposed model achieved 99.5 $\pm$ 0.044% accuracy with a limited dataset, outperforming other baseline models, demonstrating its effectiveness in terms of data efficiency. Future research will focus on extending the Multitask learning framework to encompass additional recognition tasks.
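The idea of one backbone feature network serving both recognition tasks can be illustrated with a minimal forward-pass sketch. Everything here is hypothetical and chosen only for illustration: the single tanh layer standing in for the backbone, the layer sizes, the random weights, and the function names are assumptions, not the architecture used in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def backbone(x, W):
    """Shared feature extractor (hypothetical single tanh layer)."""
    return np.tanh(x @ W)

def gpr_head(feat, w):
    """Regression head: one gait-phase estimate per sample."""
    return feat @ w

def tc_head(feat, V):
    """Classification head: softmax probabilities over terrain classes."""
    logits = feat @ V
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

# Illustrative sizes only (assumptions, not values from the paper).
n_inputs, n_features, n_terrains = 6, 16, 3
W = rng.normal(size=(n_inputs, n_features))    # backbone weights, shared by both tasks
w_gpr = rng.normal(size=n_features)            # GPR head weights
V_tc = rng.normal(size=(n_features, n_terrains))  # TC head weights

x = rng.normal(size=(5, n_inputs))             # five hypothetical sensor samples
features = backbone(x, W)                      # computed once, reused by both heads
gait_phase = gpr_head(features, w_gpr)
terrain_probs = tc_head(features, V_tc)
```

In a setup like this, only the small TC head would need to be fitted on the limited terrain dataset, since the backbone features come from the already trained GPR model.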

Investigating the Usability of Collaborative Robot control through Hands-Free Operation using Eye gaze and Augmented Reality

Jun 22, 2023

Abstract:This paper proposes a novel operation for controlling a mobile robot using a head-mounted device. Conventionally, robots are operated using computers or a joystick, which creates limitations in usability and flexibility because control equipment has to be carried by hand. This lack of flexibility may prevent workers from multitasking or carrying objects while operating the robot. To address this limitation, we propose a hands-free method to operate the mobile robot with a human gaze in an Augmented Reality (AR) environment. The proposed work is demonstrated using the HoloLens 2 to control the mobile robot, Robotnik Summit-XL, through the eye-gaze in AR. Stable speed control and navigation of the mobile robot were achieved through admittance control which was calculated using the gaze position. The experiment was conducted to compare the usability between the joystick and the proposed operation, and the results were validated through surveys (i.e., SUS, SEQ). The survey results from the participants after the experiments showed that the wearer of the HoloLens accurately operated the mobile robot in a collaborative manner. The results for both the joystick and the HoloLens were marked as easy to use with above-average usability. This suggests that the HoloLens can be used as a replacement for the joystick to allow hands-free robot operation and has the potential to increase the efficiency of human-robot collaboration in situations when hands-free controls are needed.
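A rough sketch of how an admittance law can turn a gaze position into a smooth velocity command is given below. It assumes a one-dimensional virtual mass-damper driven by a virtual force proportional to the gaze offset, integrated with explicit Euler steps; the function name, the parameter values, and the proportional force model are illustrative assumptions and are not taken from the paper.

```python
def admittance_step(v, gaze_offset, dt, mass=2.0, damping=4.0, gain=1.0):
    """Advance the commanded velocity by one time step.

    Virtual dynamics (assumed form): mass * dv/dt + damping * v = gain * gaze_offset
    """
    force = gain * gaze_offset             # virtual force derived from the gaze offset
    accel = (force - damping * v) / mass   # solve the admittance equation for dv/dt
    return v + accel * dt                  # explicit Euler integration

# Hold a constant gaze offset of 0.5 for 20 s of simulated time.
v = 0.0
for _ in range(2000):
    v = admittance_step(v, gaze_offset=0.5, dt=0.01)
```

With a constant gaze offset, the commanded velocity rises smoothly and settles at `gain * gaze_offset / damping`, which is why the damping term is useful for keeping speed commands stable when the gaze input is noisy.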

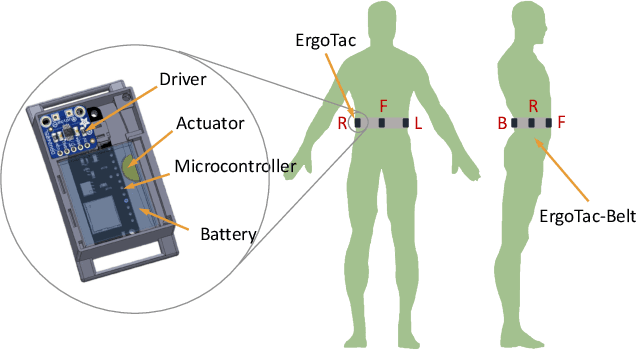

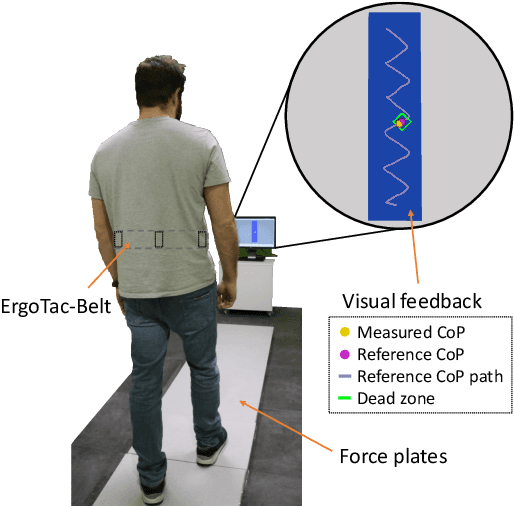

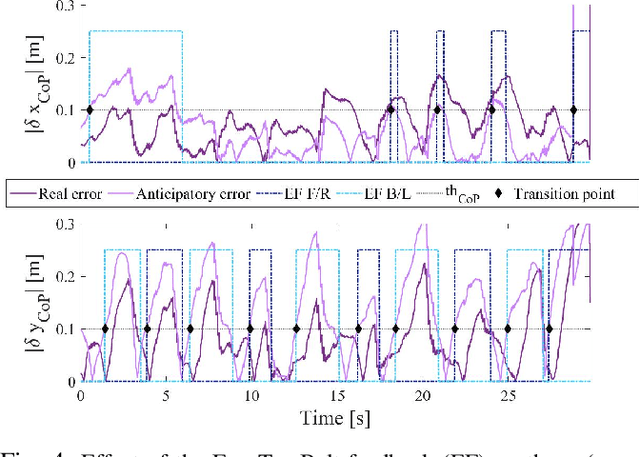

ErgoTac-Belt: Anticipatory Vibrotactile Feedback to Lead Centre of Pressure during Walking

Jul 08, 2022

Abstract:Balance and gait disorders are the second leading cause of falls, which, along with consequent injuries, are reported as major public health problems all over the world. For patients who do not require mechanical support, vibrotactile feedback interfaces have proven to be a successful approach in restoring balance. Most of the existing strategies assess trunk or head tilt and velocity or plantar forces, and are limited to the analysis of stance. On the other hand, central to balance control is the need to maintain the body's centre of pressure (CoP) within feasible limits of the support polygon (SP), as in standing, or on track to a new SP, as in walking. Hence, this paper proposes an exploratory study to investigate whether vibrotactile feedback can be employed to lead human CoP during walking. The ErgoTac-Belt vibrotactile device is introduced to instruct the users about the direction to take, both in the antero-posterior and medio-lateral axes. An anticipatory strategy is adopted here, to give the users enough time to react to the stimuli. Experiments on ten healthy subjects demonstrated the promising capability of the proposed device to guide the users' CoP along a predefined reference path, with performance similar to that achieved with visual feedback. Future developments will investigate our strategy and device in guiding the CoP of elderly individuals or those with vestibular impairments, who may not be aware of, or able to figure out, a safe and ergonomic CoP path.

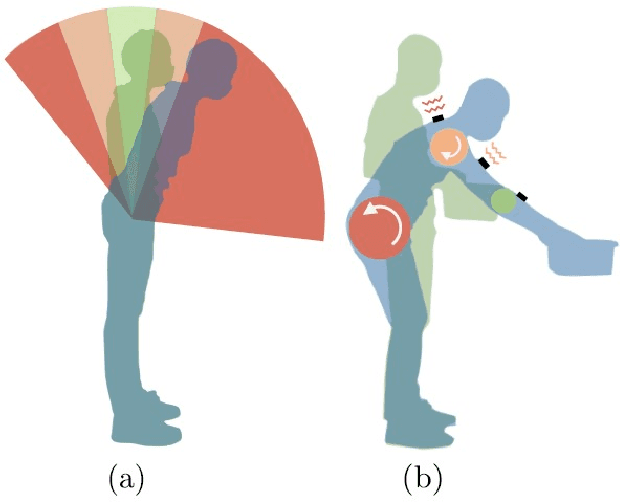

A Directional Vibrotactile Feedback Interface for Ergonomic Postural Adjustment

May 04, 2022

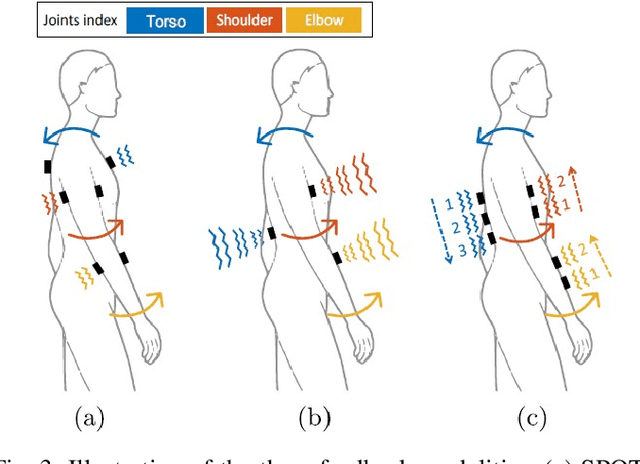

Abstract:The objective of this paper is to develop and evaluate a directional vibrotactile feedback interface as a guidance tool for postural adjustments during work. In contrast to the existing active and wearable systems such as exoskeletons, we aim to create a lightweight and intuitive interface, capable of guiding its wearers towards more ergonomic and healthy working conditions. To achieve this, a vibrotactile device called ErgoTac is employed to develop three different feedback modalities that are able to provide a directional guidance at the body segments towards a desired pose. In addition, an evaluation is made to find the most suitable, comfortable, and intuitive feedback modality for the user. Therefore, these modalities are first compared experimentally on fifteen subjects wearing eight ErgoTac devices to achieve targeted arm and torso configurations. The most effective directional feedback modality is then evaluated on five subjects in a set of experiments in which an ergonomic optimisation module provides the optimised body posture while performing heavy lifting or forceful exertion tasks. The results yield strong evidence on the usefulness and the intuitiveness of one of the developed modalities in providing guidance towards ergonomic working conditions, by minimising the effect of an external load on body joints. We believe that the integration of such low-cost devices in workplaces can help address the well-known and complex problem of work-related musculoskeletal disorders.

* 12 pages. 13 figures. Now published in IEEE Transactions on Haptics DOI: 10.1109/TOH.2021.3112795

Learning Cooperative Dynamic Manipulation Skills from Human Demonstration Videos

Apr 08, 2022

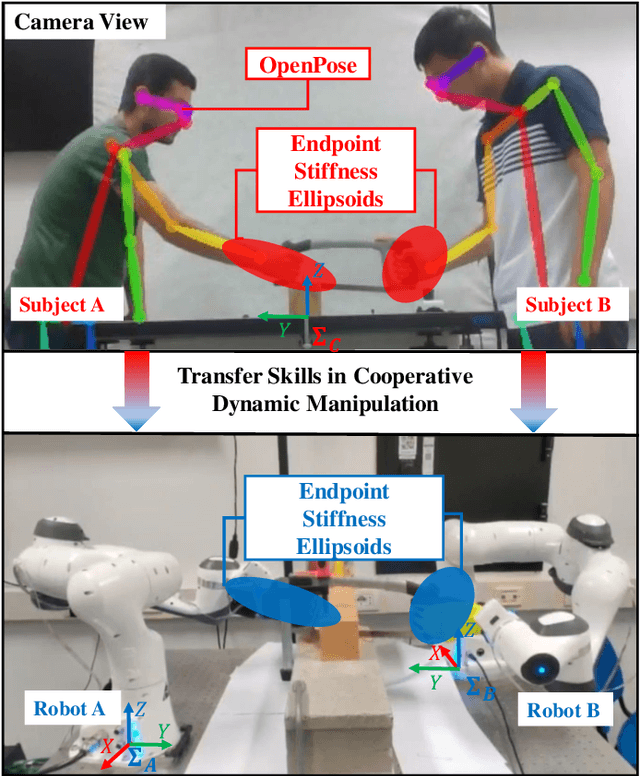

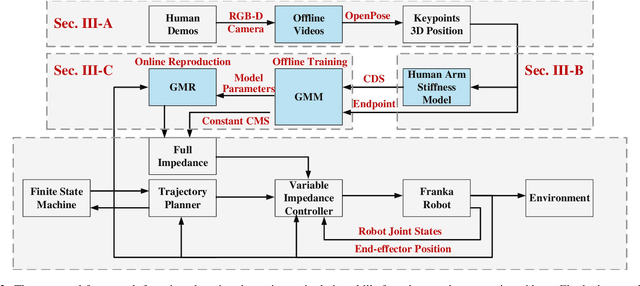

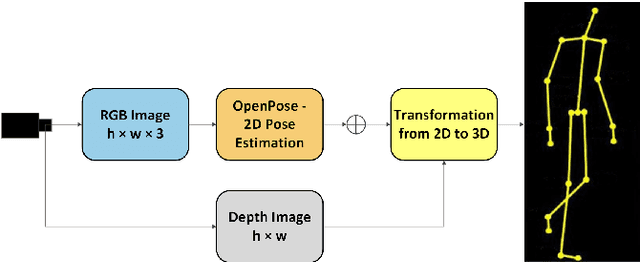

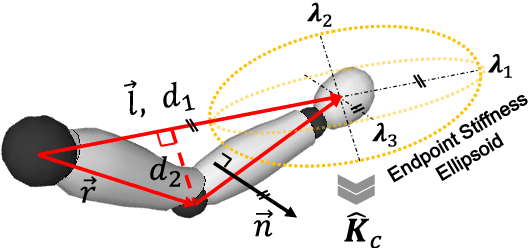

Abstract:This article proposes a method for learning and robotic replication of dynamic collaborative tasks from offline videos. The objective is to extend the concept of learning from demonstration (LfD) to dynamic scenarios, benefiting from widely available or easily producible offline videos. To achieve this goal, we decode important dynamic information, such as the Configuration Dependent Stiffness (CDS), which reveals the contribution of arm pose to the arm endpoint stiffness, from a three-dimensional human skeleton model. Next, through encoding of the CDS via Gaussian Mixture Model (GMM) and decoding via Gaussian Mixture Regression (GMR), the robot's Cartesian impedance profile is estimated and replicated. We demonstrate the proposed method in a collaborative sawing task with leader-follower structure, considering environmental constraints and dynamic uncertainties. The experimental setup includes two Panda robots, which replicate the leader-follower roles and the impedance profiles extracted from a two-person sawing video.
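The GMR decoding step can be sketched as follows for the simplest case of a scalar input and a scalar output. The mixture parameters below are made up for illustration (in practice they would come from fitting a GMM to the extracted stiffness data), and treating the input as a normalised task phase and the output as a single stiffness value is an assumption, not the paper's exact formulation.

```python
import numpy as np

def gmr(x, weights, means, covs):
    """Gaussian Mixture Regression: conditional mean E[y | x] under a joint GMM over (x, y).

    weights: (K,) mixing coefficients
    means:   (K, 2) component means, columns ordered as (x, y)
    covs:    (K, 2, 2) component covariance matrices
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    mu_x, mu_y = means[:, 0], means[:, 1]
    s_xx, s_yx = covs[:, 0, 0], covs[:, 1, 0]
    diff = x[:, None] - mu_x                      # (N, K) offsets from each component mean
    # Responsibility of each component for each query input.
    dens = weights * np.exp(-0.5 * diff**2 / s_xx) / np.sqrt(2 * np.pi * s_xx)
    h = dens / dens.sum(axis=1, keepdims=True)
    # Per-component conditional mean of y given x.
    cond = mu_y + (s_yx / s_xx) * diff
    return (h * cond).sum(axis=1)

# Hypothetical two-component model (values are illustrative only).
weights = np.array([0.5, 0.5])
means = np.array([[0.0, 1.0], [1.0, 3.0]])
covs = np.array([[[0.05, 0.02], [0.02, 0.05]],
                 [[0.05, -0.01], [-0.01, 0.05]]])

stiffness = gmr([0.0, 0.5, 1.0], weights, means, covs)
```

The result is a smooth profile that blends the local linear trends of the components, which is the property that makes GMR convenient for generating a continuous impedance profile from a small number of Gaussians.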

An Online Multi-Index Approach to Human Ergonomics Assessment in the Workplace

Nov 11, 2021

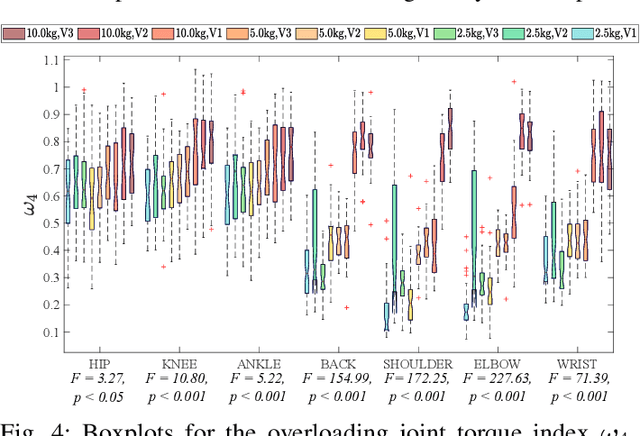

Abstract:Work-related musculoskeletal disorders (WMSDs) remain one of the major occupational safety and health problems in the European Union nowadays. Thus, continuous tracking of workers' exposure to the factors that may contribute to their development is paramount. This paper introduces an online approach to monitor kinematic and dynamic quantities on the workers, providing on the spot an estimate of the physical load required in their daily jobs. A set of ergonomic indexes is defined to account for multiple potential contributors to WMSDs, also giving importance to the subject-specific requirements of the workers. To evaluate the proposed framework, a thorough experimental analysis was conducted on twelve human subjects considering tasks that represent typical working activities in the manufacturing sector. For each task, the ergonomic indexes that better explain the underlying physical load were identified, following a statistical analysis, and supported by the outcome of a surface electromyography (sEMG) analysis. A comparison was also made with a well-recognised and standard tool to evaluate human ergonomics in the workplace, to highlight the benefits introduced by the proposed framework. Results demonstrate the high potential of the proposed framework in identifying the physical risk factors, and therefore to adopt preventive measures. Another equally important contribution of this study is the creation of a comprehensive database on human kinodynamic measurements, which hosts multiple sensory data of healthy subjects performing typical industrial tasks.

Neural Analysis and Synthesis: Reconstructing Speech from Self-Supervised Representations

Oct 28, 2021

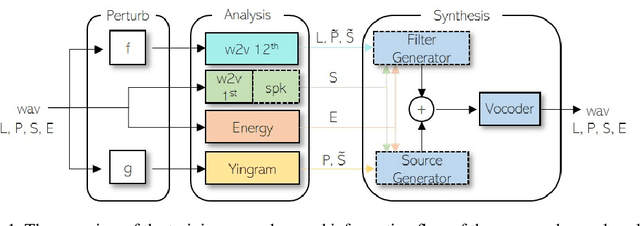

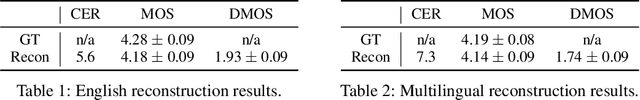

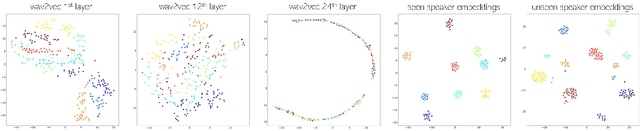

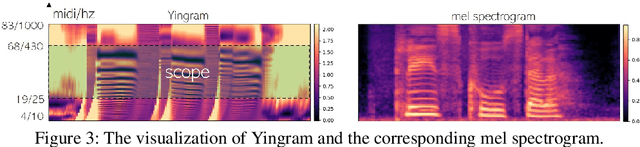

Abstract:We present a neural analysis and synthesis (NANSY) framework that can manipulate voice, pitch, and speed of an arbitrary speech signal. Most of the previous works have focused on using information bottleneck to disentangle analysis features for controllable synthesis, which usually results in poor reconstruction quality. We address this issue by proposing a novel training strategy based on information perturbation. The idea is to perturb information in the original input signal (e.g., formant, pitch, and frequency response), thereby letting synthesis networks selectively take essential attributes to reconstruct the input signal. Because NANSY does not need any bottleneck structures, it enjoys both high reconstruction quality and controllability. Furthermore, NANSY does not require any labels associated with speech data such as text and speaker information, but rather uses a new set of analysis features, i.e., wav2vec feature and newly proposed pitch feature, Yingram, which allows for fully self-supervised training. Taking advantage of fully self-supervised training, NANSY can be easily extended to a multilingual setting by simply training it with a multilingual dataset. The experiments show that NANSY can achieve significant improvement in performance in several applications such as zero-shot voice conversion, pitch shift, and time-scale modification.
