Mattia Leonori

HRI2 Lab, Istituto Italiano di Tecnologia, Genoa, Italy

Robot-Assisted Navigation for Visually Impaired through Adaptive Impedance and Path Planning

Oct 23, 2023

Abstract: This paper presents a framework to navigate visually impaired people through unfamiliar environments by means of a mobile manipulator. The human-robot system consists of three key components: a mobile base, a robotic arm, and the human subject, who is guided by the robotic arm by physically coupling their hand with the cobot's end-effector. These components, receiving a goal from the user, traverse a collision-free set of waypoints in a coordinated manner, while avoiding static and dynamic obstacles through an obstacle avoidance unit and a novel human guidance planner. To this end, we also present a leg-tracking algorithm that utilizes 2D LiDAR sensors integrated into the mobile base to monitor the human pose. Additionally, we introduce an adaptive pulling planner responsible for guiding the individual back to the intended path if they veer off course. This is achieved by establishing a target arm end-effector position and dynamically adjusting the impedance parameters in real time through an impedance tuning unit. To validate the framework, we present a set of laboratory experiments with 12 healthy blindfolded subjects and a proof-of-concept demonstration in a real-world scenario.

A Human Motion Compensation Framework for a Supernumerary Robotic Arm

Oct 16, 2023

Abstract: Supernumerary robotic arms (SRAs) can be used as a third arm to complement and augment the abilities of human users. The user carrying an SRA forms a connected kinodynamic chain, which can be viewed as a special class of floating-base robot systems. However, unlike general floating-base robot systems, human users are the bases of SRAs and they have their own subjective behaviors and motions. This implies that human body motions can unintentionally affect the SRA's end-effector movements. To address this challenge, we propose a framework to compensate for the human whole-body motions that interfere with the SRA's end-effector trajectories. The SRA system in this study consists of a 6-degree-of-freedom lightweight arm and a wearable interface. The wearable interface allows users to adjust the installation position of the SRA to fit different body shapes. An inertial measurement unit (IMU)-based sensory interface provides body skeleton motion feedback of the human user in real time. By simplifying the floating-base kinematics model, we design an effective motion planner by reconstructing the Jacobian matrix of the SRA. Under the proposed framework, the performance of the reconstructed Jacobian method is assessed against the classical nullspace-based method through two sets of experiments.

The Bridge between Xsens Motion-Capture and Robot Operating System: Enabling Robots with Online 3D Human Motion Tracking

Jun 30, 2023

Abstract: This document introduces the bridge between the leading inertial motion-capture systems for 3D human tracking and the most used robotics software framework. 3D kinematic data provided by Xsens are translated into ROS messages to make them usable by robots, and a Unified Robotics Description Format (URDF) model of the human kinematics is generated, which can be run and displayed in the ROS 3D visualizer, RViz. The to-ROS bridge is implemented as a ROS package called xsens_mvn_ros, available on GitHub (https://github.com/hrii-iit/xsens_mvn_ros). The main documentation can be found at https://hrii-iit.github.io/xsens_mvn_ros/index.html

Markerless 3D human pose tracking through multiple cameras and AI: Enabling high accuracy, robustness, and real-time performance

Mar 31, 2023

Abstract: Tracking 3D human motion in real time is crucial for numerous applications across many fields. Traditional approaches involve attaching artificial fiducial objects or sensors to the body, limiting their usability and comfort of use and consequently narrowing their application fields. Recent advances in Artificial Intelligence (AI) have allowed for markerless solutions. However, most of these methods operate in 2D, while those providing 3D solutions compromise accuracy and real-time performance. To address this challenge and unlock the potential of visual pose estimation methods in real-world scenarios, we propose a markerless framework that combines multi-camera views and 2D AI-based pose estimation methods to track 3D human motion. Our approach integrates a Weighted Least Square (WLS) algorithm that computes 3D human motion from multiple 2D pose estimations provided by an AI-driven method. The method is integrated within the Open-VICO framework, allowing simulation and real-world execution. Several experiments have been conducted, which have shown high accuracy and real-time performance, demonstrating the high level of readiness for real-world applications and the potential to revolutionize human motion capture.
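To make the WLS idea concrete, the following is a minimal sketch of weighted least-squares triangulation of a single joint from several calibrated cameras. It is not the authors' implementation: the abstract does not say how the weights are chosen, so the sketch assumes they are per-camera keypoint confidences, and the function names `project` and `triangulate_wls` are hypothetical.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[0] / x[2], x[1] / x[2]

def triangulate_wls(projections, points_2d, weights):
    """Estimate one 3D point from weighted 2D observations (assumed setup).

    projections: list of 3x4 camera matrices
    points_2d:   list of (u, v) detections, one per camera
    weights:     list of non-negative confidences, one per camera
    """
    rows, rhs = [], []
    for P, (u, v), w in zip(projections, points_2d, weights):
        s = np.sqrt(w)  # scaling rows by sqrt(w) minimizes sum of w * residual^2
        for coord, idx in ((u, 0), (v, 1)):
            # Linear constraint: coord * (P[2] . X_h) - (P[idx] . X_h) = 0
            r = s * (coord * P[2] - P[idx])
            rows.append(r[:3])
            rhs.append(-r[3])
    A = np.asarray(rows)
    b = np.asarray(rhs)
    X, *_ = np.linalg.lstsq(A, b, rcond=None)
    return X
```

With noise-free detections the estimate matches the true point regardless of the weights; with noisy detections, cameras with higher confidence pull the solution more strongly.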

Open-VICO: An Open-Source Gazebo Toolkit for Multi-Camera-based Skeleton Tracking in Human-Robot Collaboration

Mar 28, 2022

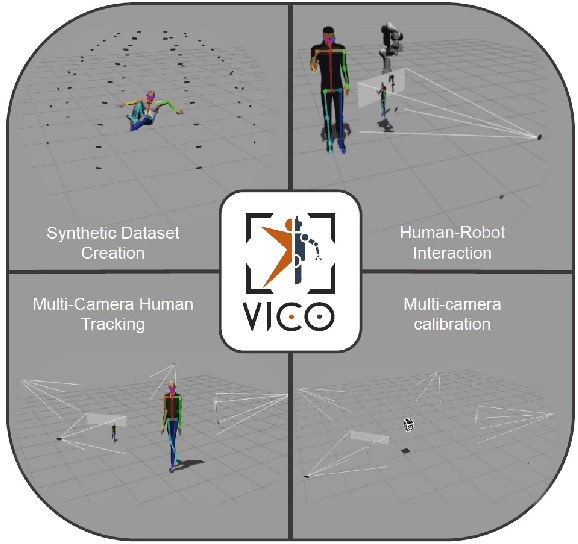

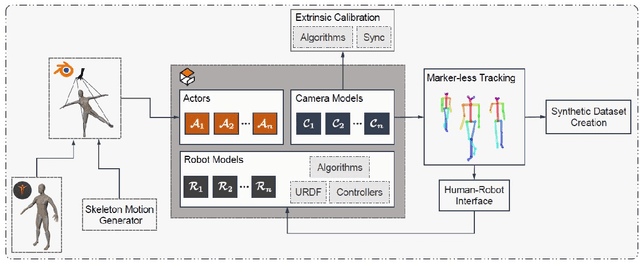

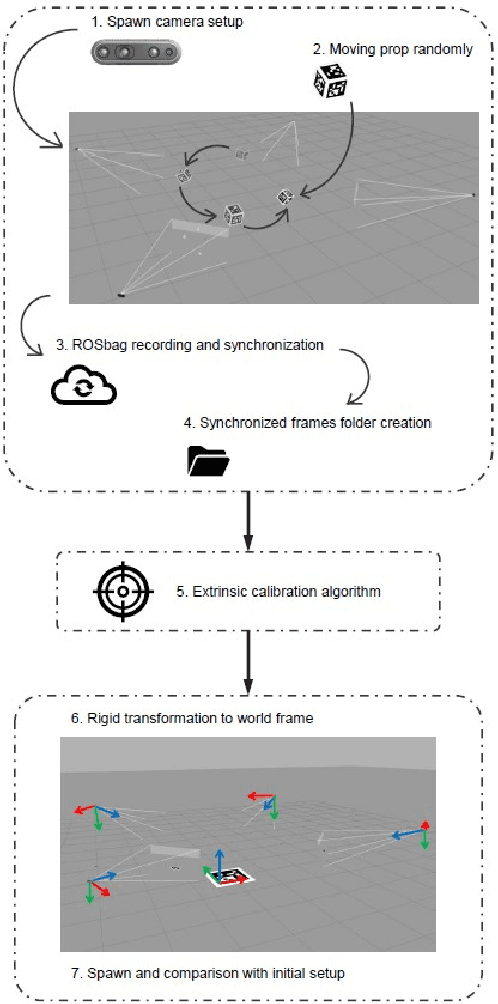

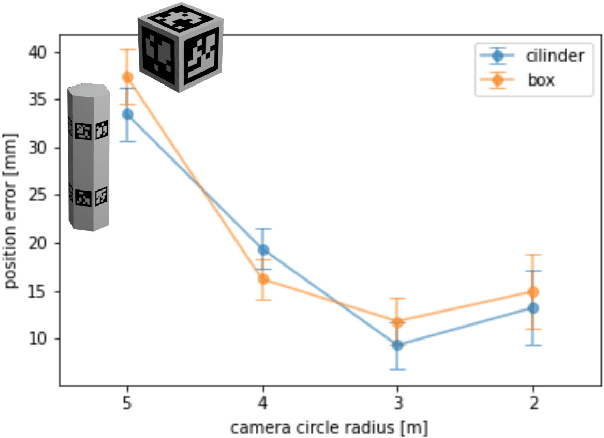

Abstract: Simulation tools are essential for robotics research, especially for those domains in which safety is crucial, such as Human-Robot Collaboration (HRC). However, it is challenging to simulate human behaviors, and existing robotics simulators do not integrate functional human models. This work presents Open-VICO (https://gitlab.iit.it/hrii-public/open-vico), an open-source toolkit to integrate virtual human models in Gazebo focusing on vision-based human tracking. In particular, Open-VICO allows combining, in the same simulation environment, realistic human kinematic models, multi-camera vision setups, and human-tracking techniques along with numerous robot and sensor models thanks to Gazebo. The possibility to incorporate human skeleton motion pre-recorded with Motion Capture systems broadens the landscape of human performance behavioral analysis within Human-Robot Interaction (HRI) settings. To describe the functionalities and stress the potential of the toolkit, four specific examples, chosen among relevant literature challenges in the field, are developed using our simulation utilities: i) 3D multi-RGB-D camera calibration in simulation, ii) creation of a synthetic human skeleton tracking dataset based on OpenPose, iii) multi-camera scenario for human skeleton tracking in simulation, and iv) a human-robot interaction example. The key of this work is to create a straightforward pipeline which we hope will motivate research on new vision-based algorithms and methodologies for lightweight human-tracking and flexible human-robot applications.

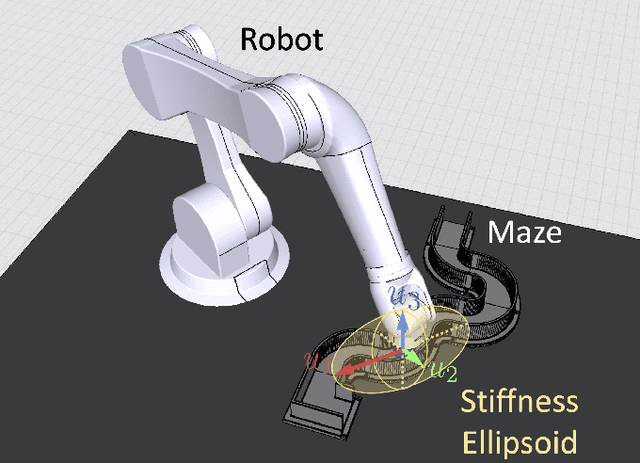

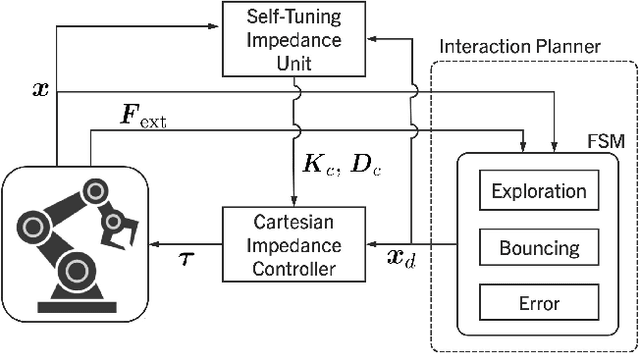

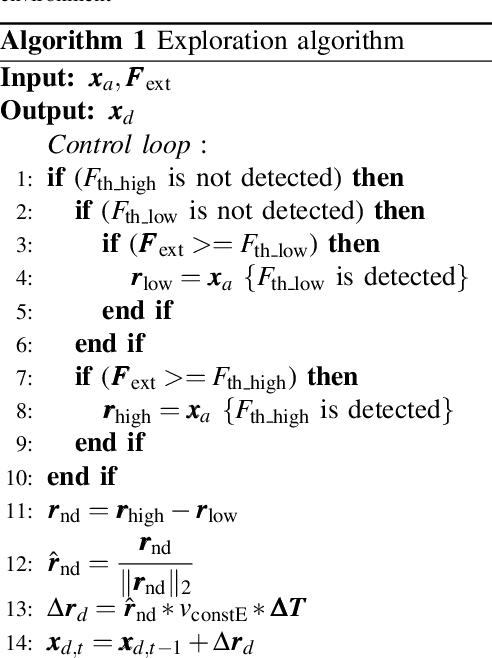

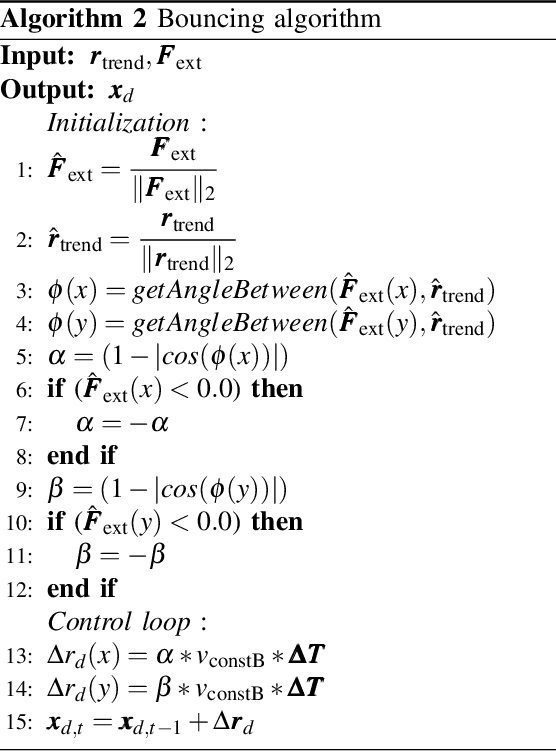

A Self-Tuning Impedance-based Interaction Planner for Robotic Haptic Exploration

Mar 10, 2022

Abstract: This paper presents a novel interaction planning method that exploits impedance tuning techniques in response to environmental uncertainties and unpredictable conditions using haptic information only. The proposed algorithm plans the robot's trajectory based on the haptic interaction with the environment and adapts planning strategies as needed. Two approaches are considered: Exploration and Bouncing strategies. The Exploration strategy takes the actual motion of the robot into account in planning, while the Bouncing strategy exploits the forces and the motion vector of the robot. Moreover, self-tuning impedance is performed according to the planned trajectory to ensure stable contact and low contact forces. To show the performance of the proposed methodology, two experiments with a torque-controlled robotic arm are carried out. The first considers a maze exploration without obstacles, whereas the second includes obstacles. The performance of the proposed method is analyzed and compared against previously proposed solutions in both cases. Experimental results demonstrate that: i) the robot can successfully plan its trajectory autonomously in the most feasible direction according to the interaction with the environment, and ii) a stable interaction with an unknown environment is achieved despite the uncertainties. Finally, a scalability demonstration is carried out to show the potential of the proposed method under multiple scenarios.

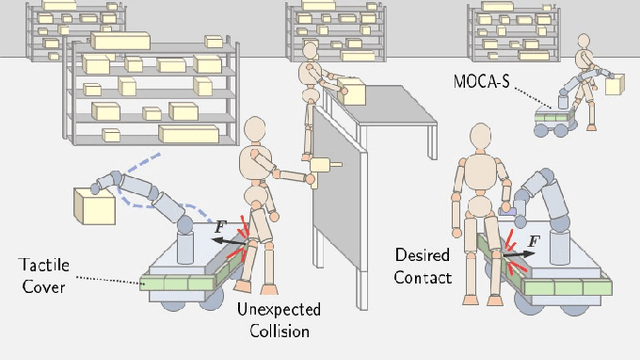

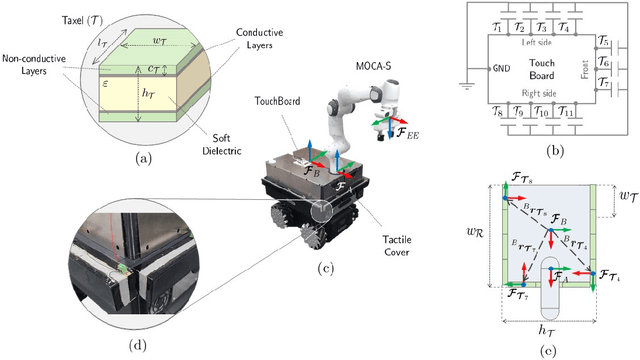

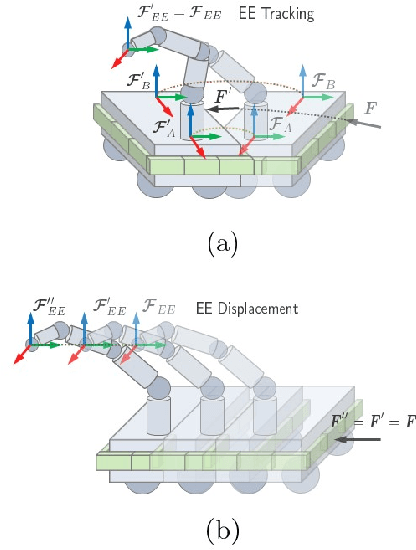

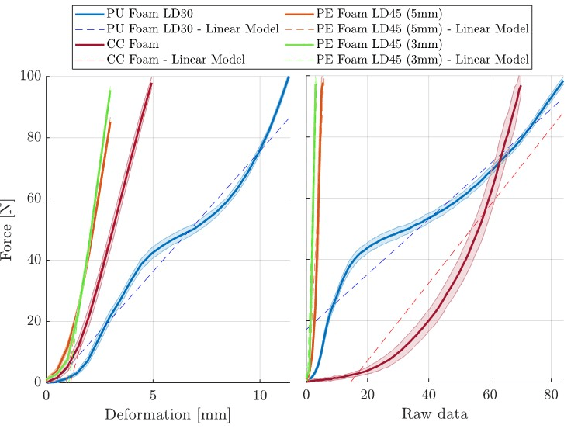

MOCA-S: A Sensitive Mobile Collaborative Robotic Assistant exploiting Low-Cost Capacitive Tactile Cover and Whole-Body Control

Feb 27, 2022

Abstract: Safety is one of the most fundamental aspects of robotics, especially when it comes to collaborative robots (cobots) that are expected to physically interact with humans. Although a large body of literature has focused on safety-related aspects for fixed-base cobots, little effort has been put into developing collaborative mobile manipulators. In response to this need, this work presents MOCA-S, i.e., Sensitive Mobile Collaborative Robotic Assistant, which integrates a low-cost, capacitive tactile cover to measure interaction forces applied to the robot base. The tactile cover comprises a set of 11 capacitive large-area tactile sensors distributed as a 1-D tactile array around the base. Characterization of the tactile sensors with different materials is included. Moreover, two expanded whole-body controllers that exploit the platform's tactile cover and the loco-manipulation features are proposed. These controllers are tested in two experiments, demonstrating the potential of MOCA-S for safe physical Human-Robot Interaction (pHRI). Finally, an experiment is carried out in which an undesired collision occurs between MOCA-S and a human during a loco-manipulation task. The results demonstrate the intrinsic safety of MOCA-S and the proposed controllers, suggesting a new step towards creating safe mobile manipulators.

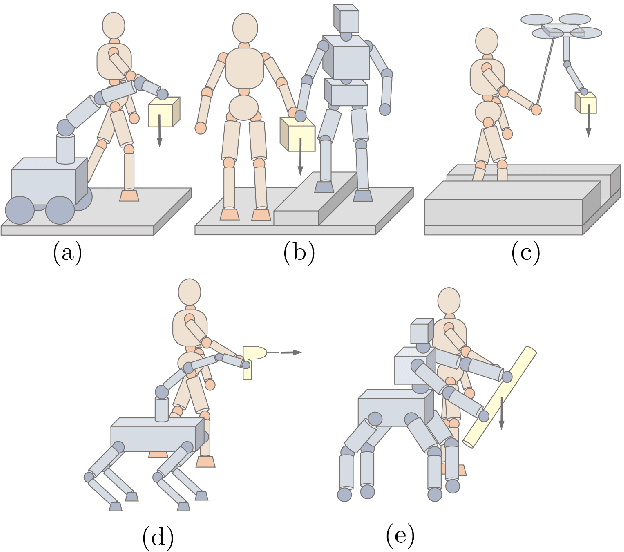

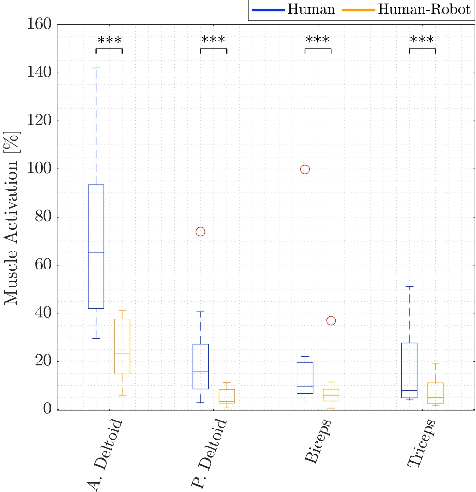

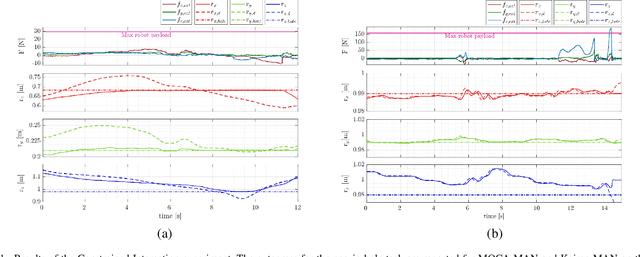

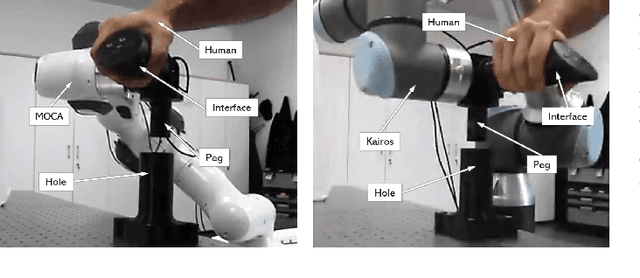

SUPER-MAN: SUPERnumerary Robotic Bodies for Physical Assistance in HuMAN-Robot Conjoined Actions

Jan 17, 2022

Abstract: This paper presents a mobile supernumerary robotic approach to physical assistance in human-robot conjoined actions. The study starts with the description of the SUPER-MAN concept. The idea is to develop and utilize mobile collaborative systems that can follow human loco-manipulation commands to perform industrial tasks through three main components: i) a physical interface, ii) a human-robot interaction controller, and iii) a supernumerary robotic body. Next, we present two possible implementations within the framework, from theoretical and hardware perspectives. The first system is called MOCA-MAN, and is composed of a redundant torque-controlled robotic arm and an omni-directional mobile platform. The second one is called Kairos-MAN, formed by a high-payload 6-DoF velocity-controlled robotic arm and an omni-directional mobile platform. The systems share the same admittance interface, through which user wrenches are translated to loco-manipulation commands, generated by the whole-body controllers of each system. In addition, a thorough user study with multiple and cross-gender subjects is presented to reveal the quantitative performance of the two systems in effort-demanding and dexterous tasks. Moreover, we provide qualitative results from the NASA-TLX questionnaire to demonstrate the SUPER-MAN approach's potential and its acceptability from the users' viewpoint.
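As an illustration of how an admittance interface can map a user force to a motion command, here is a minimal one-dimensional sketch based on the standard virtual mass-damper model M·dv/dt + D·v = f. It is a generic textbook form, not the controller from the paper; the function names and the numeric parameters are assumptions chosen for the example.

```python
def admittance_step(velocity, force, mass, damping, dt):
    """One explicit-Euler step of the virtual dynamics M * dv/dt + D * v = f."""
    accel = (force - damping * velocity) / mass
    return velocity + dt * accel

def simulate_admittance(force, steps, mass=10.0, damping=20.0, dt=0.01):
    """Apply a constant user force and return the commanded velocity after `steps` steps."""
    velocity = 0.0
    for _ in range(steps):
        velocity = admittance_step(velocity, force, mass, damping, dt)
    return velocity
```

Under a constant force the commanded velocity settles at force / damping, so the virtual damping sets how fast the platform moves for a given push, while the virtual mass sets how quickly it responds.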
