Toshiaki Tsuji

Graduate School of Science and Engineering, Saitama University, Saitama, Japan

Hierarchical Proportion Models for Motion Generation via Integration of Motion Primitives

Feb 03, 2026

Abstract:Imitation learning (IL) enables robots to acquire human-like motion skills from demonstrations, but it still requires extensive high-quality data and retraining to handle complex or long-horizon tasks. To improve data efficiency and adaptability, this study proposes a hierarchical IL framework that integrates motion primitives with proportion-based motion synthesis. The proposed method employs a two-layer architecture, where the upper layer performs long-term planning, while a set of lower-layer models learn individual motion primitives, which are combined according to specific proportions. Three model variants are introduced to explore different trade-offs between learning flexibility, computational cost, and adaptability: a learning-based proportion model, a sampling-based proportion model, and a playback-based proportion model, which differ in how the proportions are determined and whether the upper layer is trainable. Through real-robot pick-and-place experiments, the proposed models successfully generated complex motions not included in the primitive set. The sampling-based and playback-based proportion models achieved more stable and adaptable motion generation than the standard hierarchical model, demonstrating the effectiveness of proportion-based motion integration for practical robot learning.
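The abstract does not spell out how primitive outputs are combined, so the following is only a minimal sketch under one plausible assumption: each lower-layer primitive emits a command vector, and the final command is their weighted sum with proportions normalized to sum to one. The names `normalize`, `blend_primitives`, `reach`, and `lift` are hypothetical and do not come from the paper.

```python
from typing import List, Sequence


def normalize(proportions: Sequence[float]) -> List[float]:
    """Scale non-negative proportions so that they sum to one."""
    total = sum(proportions)
    if total <= 0:
        raise ValueError("proportions must have a positive sum")
    return [p / total for p in proportions]


def blend_primitives(
    primitive_outputs: Sequence[Sequence[float]],
    proportions: Sequence[float],
) -> List[float]:
    """Assumed combination rule: weighted sum of per-primitive commands."""
    if len(primitive_outputs) != len(proportions):
        raise ValueError("need exactly one proportion per primitive")
    weights = normalize(proportions)
    dim = len(primitive_outputs[0])
    return [
        sum(w * out[i] for w, out in zip(weights, primitive_outputs))
        for i in range(dim)
    ]


# Hypothetical commands from two primitives (e.g. end-effector velocities).
reach = [0.10, 0.00, 0.20]
lift = [0.00, 0.00, 0.40]

# Proportions 3:1 normalize to 0.75 and 0.25.
command = blend_primitives([reach, lift], [3.0, 1.0])
print(command)  # approximately [0.075, 0.0, 0.25]
```

In this reading, the three model variants would differ only in where the proportions come from (learned, sampled, or played back), while the blending step itself stays the same.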

Imitation Learning Based on Disentangled Representation Learning of Behavioral Characteristics

Sep 05, 2025

Abstract:In the field of robot learning, coordinating robot actions through language instructions is becoming increasingly feasible. However, adapting actions to human instructions remains challenging, as such instructions are often qualitative and require exploring behaviors that satisfy varying conditions. This paper proposes a motion generation model that adapts robot actions in response to modifier directives, that is, human instructions that impose behavioral conditions during task execution. The proposed method learns a mapping from modifier directives to actions by segmenting demonstrations into short sequences and assigning weakly supervised labels corresponding to specific modifier types. We evaluated our method in wiping and pick-and-place tasks. Results show that it can adjust motions online in response to modifier directives, unlike conventional batch-based methods that cannot adapt during execution.

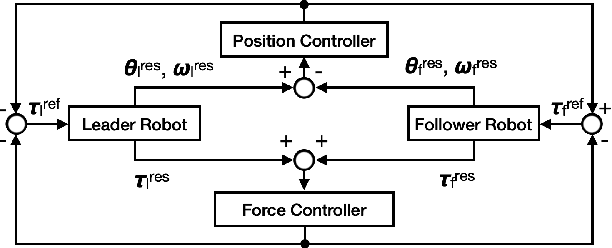

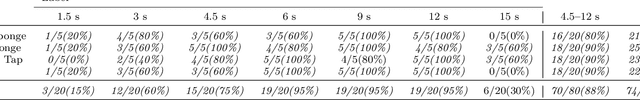

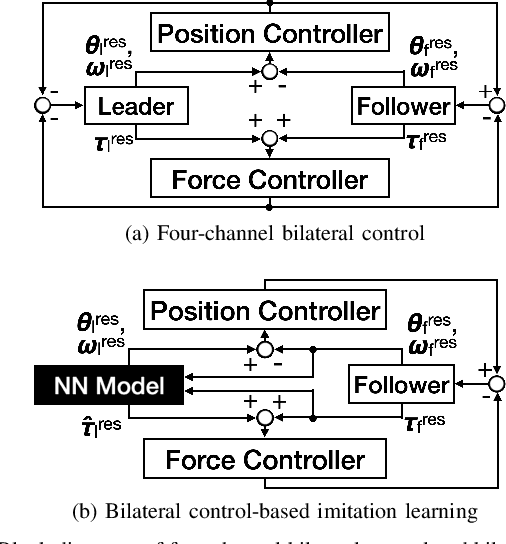

Fast Bilateral Teleoperation and Imitation Learning Using Sensorless Force Control via Accurate Dynamics Model

Jul 08, 2025

Abstract:In recent years, the advancement of imitation learning has led to increased interest in teleoperating low-cost manipulators to collect demonstration data. However, most existing systems rely on unilateral control, which only transmits target position values. While this approach is easy to implement and suitable for slow, non-contact tasks, it struggles with fast or contact-rich operations due to the absence of force feedback. This work demonstrates that fast teleoperation with force feedback is feasible even with force-sensorless, low-cost manipulators by leveraging 4-channel bilateral control. Based on accurately identified manipulator dynamics, our method integrates nonlinear terms compensation, velocity and external force estimation, and variable gain corresponding to inertial variation. Furthermore, using data collected by 4-channel bilateral control, we show that incorporating force information into both the input and output of learned policies improves performance in imitation learning. These results highlight the practical effectiveness of our system for high-fidelity teleoperation and data collection on affordable hardware.
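As background, 4-channel bilateral control is commonly described by two goals: the leader and follower positions should match, and the external torques acting on the two arms should sum to zero (action and reaction). The sketch below shows a textbook-style single-joint version of that idea, not the controller from the paper: it omits the dynamics compensation, sensorless force estimation, and variable gains mentioned in the abstract. The class `JointState`, the function `four_channel_references`, the gain values, and the sign convention (torque applied to the arm counts as positive) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class JointState:
    position: float         # rad
    velocity: float         # rad/s
    external_torque: float  # N*m, torque applied TO the arm (assumed convention)


def four_channel_references(
    leader: JointState,
    follower: JointState,
    kp: float = 100.0,  # position gain (illustrative value)
    kd: float = 20.0,   # velocity gain (illustrative value)
    kf: float = 1.0,    # force gain (illustrative value)
) -> Tuple[float, float]:
    """Return acceleration references (leader, follower) for one joint.

    Differential mode: a PD term drives the position difference to zero.
    Common mode: both arms yield to the net external torque, which drives
    the torque sum to zero.
    """
    pos_term = kp * (follower.position - leader.position) + kd * (
        follower.velocity - leader.velocity
    )
    force_term = kf * (leader.external_torque + follower.external_torque)
    leader_acc = 0.5 * (pos_term + force_term)
    follower_acc = 0.5 * (-pos_term + force_term)
    return leader_acc, follower_acc


# The leader is 0.1 rad ahead; the torques already balance (0.5 and -0.5).
leader = JointState(position=0.1, velocity=0.0, external_torque=0.5)
follower = JointState(position=0.0, velocity=0.0, external_torque=-0.5)
leader_acc, follower_acc = four_channel_references(leader, follower)
print(leader_acc, follower_acc)  # leader is pulled back, follower is pushed forward
```

Because both position and torque are exchanged in both directions, the operator feels contact forces at the follower side, which is what unilateral position-only systems lack.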

A Survey on Imitation Learning for Contact-Rich Tasks in Robotics

Jun 16, 2025

Abstract:This paper comprehensively surveys research trends in imitation learning for contact-rich robotic tasks. Contact-rich tasks, which require complex physical interactions with the environment, represent a central challenge in robotics due to their nonlinear dynamics and sensitivity to small positional deviations. The paper examines demonstration collection methodologies, including teaching methods and sensory modalities crucial for capturing subtle interaction dynamics. We then analyze imitation learning approaches, highlighting their applications to contact-rich manipulation. Recent advances in multimodal learning and foundation models have significantly enhanced performance in complex contact tasks across industrial, household, and healthcare domains. Through systematic organization of current research and identification of challenges, this survey provides a foundation for future advancements in contact-rich robotic manipulation.

Motion ReTouch: Motion Modification Using Four-Channel Bilateral Control

Feb 28, 2025

Abstract:Recent research has demonstrated the usefulness of imitation learning in autonomous robot operation. In particular, teaching using four-channel bilateral control, which can obtain position and force information, has been proven effective. However, control performance that can easily execute high-speed, complex tasks in one go has not yet been achieved. We propose a method called Motion ReTouch, which retroactively modifies motion data obtained using four-channel bilateral control. The proposed method enables modification of not only position but also force information. This was achieved by combining multilateral control with a motion-copying system. The proposed method was verified in experiments with a real robot, and the success rate of the test tube transfer task was improved, demonstrating the feasibility of modifying force information.

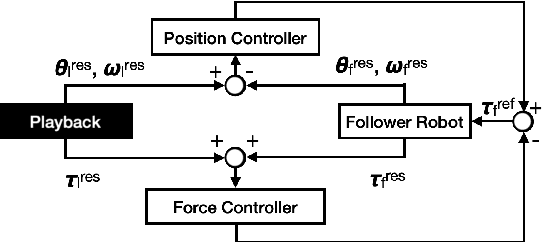

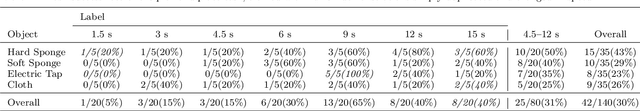

Variable-Speed Teaching-Playback as Real-World Data Augmentation for Imitation Learning

Dec 04, 2024

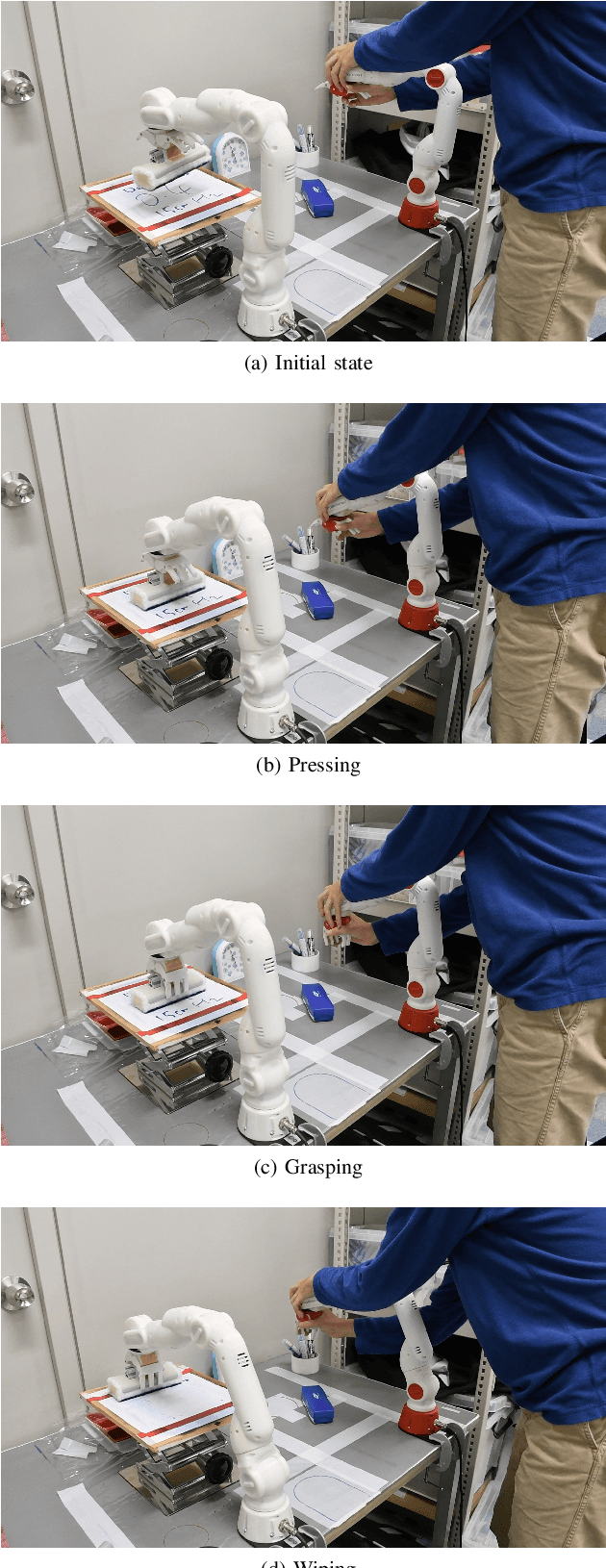

Abstract:Because imitation learning relies on human demonstrations in hard-to-simulate settings, the inclusion of force control in this method has resulted in a shortage of training data, even with a simple change in speed. Although the field of data augmentation has addressed the lack of data, conventional methods of data augmentation for robot manipulation are limited to simulation-based methods or downsampling for position control. This paper proposes a novel method of data augmentation that is applicable to force control and preserves the advantages of real-world datasets. We applied teaching-playback at variable speeds as real-world data augmentation to increase both the quantity and quality of environmental reactions at variable speeds. An experiment was conducted on bilateral control-based imitation learning using a method of imitation learning equipped with position-force control. We evaluated the effect of real-world data augmentation on two tasks, pick-and-place and wiping, at variable speeds, each from two human demonstrations at fixed speed. The results showed a maximum 55% increase in success rate from a simple change in speed of real-world reactions and improved accuracy along the duration/frequency command by gathering environmental reactions at variable speeds.
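To make the idea of variable-speed playback concrete, the sketch below time-scales a recorded position command by linear interpolation so that it can be replayed faster or slower at the same control period. This covers only the command side and is an assumption about how such playback might be prepared; in the approach described above, the force and reaction data would have to be recorded from the real robot during each replay rather than computed. The function name `resample_at_speed` is hypothetical.

```python
from typing import List, Sequence


def resample_at_speed(trajectory: Sequence[float], speed: float) -> List[float]:
    """Time-scale a uniformly sampled trajectory by linear interpolation.

    speed > 1 gives a shorter (faster) command, speed < 1 a longer (slower)
    one. The output is meant to be played back at the original control period.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    if len(trajectory) < 2:
        return list(trajectory)
    last = len(trajectory) - 1
    n_out = int(last / speed) + 1
    resampled = []
    for k in range(n_out):
        t = k * speed                  # position on the original time axis
        i = min(int(t), last - 1)      # index of the left neighbor sample
        frac = t - i                   # interpolation weight toward the right neighbor
        resampled.append(trajectory[i] * (1.0 - frac) + trajectory[i + 1] * frac)
    return resampled


recorded = [0.0, 1.0, 2.0, 3.0, 4.0]        # hypothetical joint positions
fast = resample_at_speed(recorded, 2.0)     # half as many samples
slow = resample_at_speed(recorded, 0.5)     # about twice as many samples
print(fast)       # [0.0, 2.0, 4.0]
print(len(slow))  # 9
```

Replaying `fast` and `slow` on hardware and logging the measured responses would yield new demonstrations at speeds the human never performed, which is the sense in which playback acts as real-world data augmentation.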

Error-Feedback Model for Output Correction in Bilateral Control-Based Imitation Learning

Nov 19, 2024

Abstract:In recent years, imitation learning using neural networks has enabled robots to perform flexible tasks. However, since neural networks operate in a feedforward structure, they do not possess a mechanism to compensate for output errors. To address this limitation, we developed a feedback mechanism to correct these errors. By employing a hierarchical structure for neural networks comprising lower and upper layers, the lower layer was controlled to follow the upper layer. Additionally, using a multi-layer perceptron in the lower layer, which lacks an internal state, enhanced the error feedback. In the character-writing task, this model demonstrated improved accuracy in writing previously untrained characters. Through autonomous control with error feedback, we confirmed that the lower layer could effectively track the output of the upper layer. This study represents a promising step toward integrating neural networks with control theories.

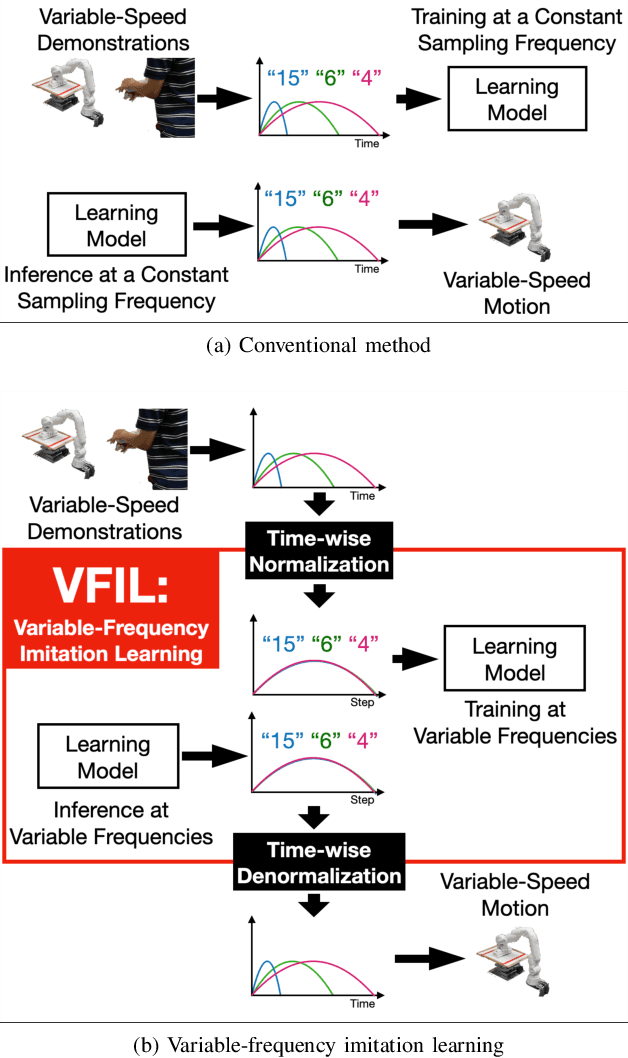

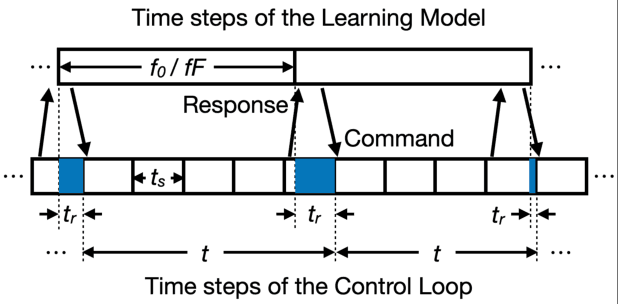

Variable-Frequency Imitation Learning for Variable-Speed Motion

Nov 19, 2024

Abstract:Conventional methods of imitation learning for variable-speed motion have difficulty extrapolating speeds because they rely on learning models running at a constant sampling frequency. This study proposes variable-frequency imitation learning (VFIL), a novel method for imitation learning with learning models trained to run at variable sampling frequencies along with the desired speeds of motion. The experimental results showed that the proposed method improved the velocity-wise accuracy along both the interpolated and extrapolated frequency labels, in addition to a 12.5% increase in the overall success rate.

Mamba as a motion encoder for robotic imitation learning

Sep 04, 2024

Abstract:Recent advancements in imitation learning, particularly with the integration of LLM techniques, are set to significantly improve robots' dexterity and adaptability. In this study, we propose using Mamba, a state-of-the-art architecture with potential applications in LLMs, for robotic imitation learning, highlighting its ability to function as an encoder that effectively captures contextual information. By reducing the dimensionality of the state space, Mamba operates similarly to an autoencoder. It effectively compresses the sequential information into state variables while preserving the essential temporal dynamics necessary for accurate motion prediction. Experimental results in tasks such as cup placing and case loading demonstrate that despite exhibiting higher estimation errors, Mamba achieves superior success rates compared to Transformers in practical task execution. This performance is attributed to Mamba's structure, which encompasses the state space model. Additionally, the study investigates Mamba's capacity to serve as a real-time motion generator with a limited amount of training data.

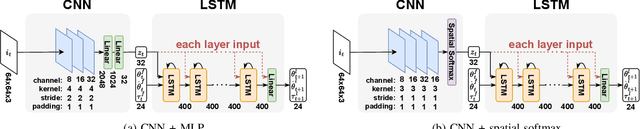

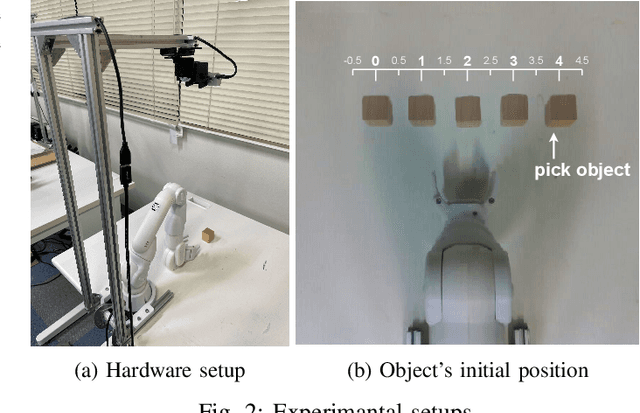

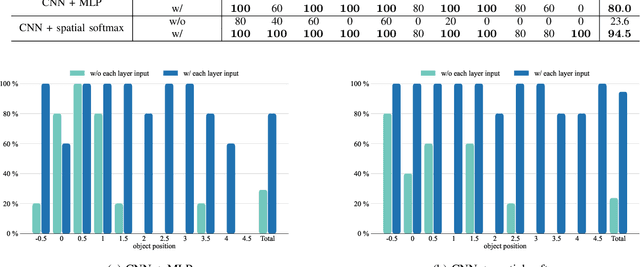

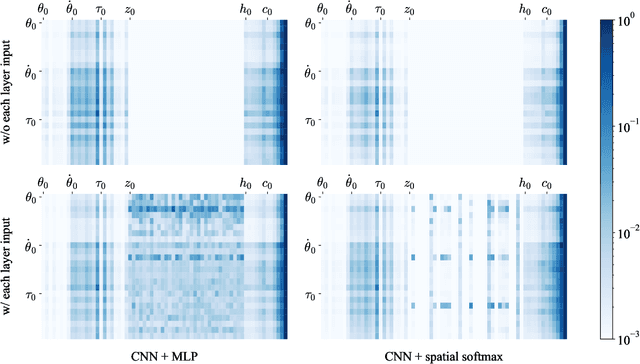

Imitation Learning Inputting Image Feature to Each Layer of Neural Network

Jan 19, 2024

Abstract:Imitation learning enables robots to learn and replicate human behavior from training data. Recent advances in machine learning enable end-to-end learning approaches that directly process high-dimensional observation data, such as images. However, these approaches face a critical challenge when processing data from multiple modalities, inadvertently ignoring data with a lower correlation to the desired output, especially when using short sampling periods. This paper presents a useful method to address this challenge, which amplifies the influence of data with a relatively low correlation to the output by inputting the data into each neural network layer. The proposed approach effectively incorporates diverse data sources into the learning process. Through experiments using a simple pick-and-place operation with raw images and joint information as input, significant improvements in success rates are demonstrated even when dealing with data from short sampling periods.
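The core idea, feeding a weakly correlated input into every layer rather than only the first, can be illustrated with a tiny feedforward network. This is a minimal sketch under stated assumptions: the network type, layer sizes, `tanh` activation, and random untrained weights are illustrative choices, and the helper names `make_layer`, `dense`, and `forward` are hypothetical rather than taken from the paper.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def make_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Create a dense layer with small random weights and zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: Sequence[float], layer: Layer) -> List[float]:
    """Apply one dense layer followed by tanh."""
    weights, biases = layer
    return [
        math.tanh(sum(w * v for w, v in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(
    joint_state: Sequence[float],
    image_feature: Sequence[float],
    layers: Sequence[Layer],
) -> List[float]:
    """Concatenate the image feature to the input of EVERY layer."""
    hidden = list(joint_state)
    for layer in layers:
        hidden = dense(hidden + list(image_feature), layer)
    return hidden


rng = random.Random(0)
joint_dim, feature_dim, hidden_dim = 3, 2, 4
layers = [
    make_layer(joint_dim + feature_dim, hidden_dim, rng),
    make_layer(hidden_dim + feature_dim, hidden_dim, rng),
    make_layer(hidden_dim + feature_dim, joint_dim, rng),
]

joints = [0.1, -0.2, 0.3]
out_a = forward(joints, [1.0, 0.0], layers)
out_b = forward(joints, [0.0, 1.0], layers)
print(out_a)
print(out_b)  # differs from out_a because the feature reaches every layer
```

Since the image feature is re-injected at each depth, it has a direct path to the output instead of having to survive the earlier layers, where a strongly correlated input such as the joint state might otherwise dominate.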
