Koki Yamane

Fast Bilateral Teleoperation and Imitation Learning Using Sensorless Force Control via Accurate Dynamics Model

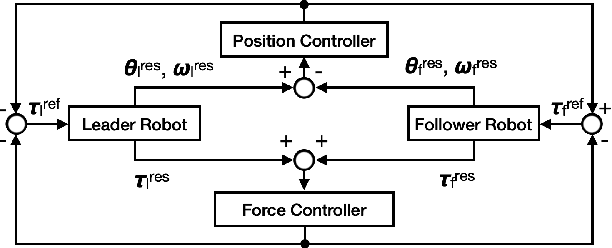

Jul 08, 2025

Abstract:In recent years, the advancement of imitation learning has led to increased interest in teleoperating low-cost manipulators to collect demonstration data. However, most existing systems rely on unilateral control, which only transmits target position values. While this approach is easy to implement and suitable for slow, non-contact tasks, it struggles with fast or contact-rich operations due to the absence of force feedback. This work demonstrates that fast teleoperation with force feedback is feasible even with force-sensorless, low-cost manipulators by leveraging 4-channel bilateral control. Based on accurately identified manipulator dynamics, our method integrates nonlinear terms compensation, velocity and external force estimation, and variable gain corresponding to inertial variation. Furthermore, using data collected by 4-channel bilateral control, we show that incorporating force information into both the input and output of learned policies improves performance in imitation learning. These results highlight the practical effectiveness of our system for high-fidelity teleoperation and data collection on affordable hardware.
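As a rough illustration of what 4-channel bilateral control aims for, the sketch below computes torque references for a single joint pair so that the leader and follower track each other's position while their external torques sum to zero. It is a textbook-style simplification and not the controller from the paper: the function name, the gains `kp`, `kd`, `kf`, the `inertia` parameter, and the sign conventions are all assumptions, and the external torques are taken as given rather than estimated without a force sensor.

```python
def bilateral_torque_refs(theta_l, theta_f, omega_l, omega_f,
                          tau_ext_l, tau_ext_f,
                          inertia=1.0, kp=100.0, kd=20.0, kf=1.0):
    """Return (leader, follower) torque references for one joint pair.

    Hypothetical single-joint sketch with assumed gains and signs.
    Control goals: theta_l - theta_f -> 0 and tau_ext_l + tau_ext_f -> 0.
    """
    # Position channel: pull each side toward the other (PD on the difference).
    # Scaling by inertia mimics a gain that varies with the joint's inertia.
    pos_term = 0.5 * inertia * (kp * (theta_f - theta_l) + kd * (omega_f - omega_l))
    # Force channel: drive the sum of external torques to zero (action-reaction).
    force_term = 0.5 * kf * (tau_ext_l + tau_ext_f)
    tau_l_ref = pos_term - force_term
    tau_f_ref = -pos_term - force_term
    return tau_l_ref, tau_f_ref


if __name__ == "__main__":
    # Follower is ahead of the leader by 0.5 rad, no external torque.
    print(bilateral_torque_refs(0.0, 0.5, 0.0, 0.0, 0.0, 0.0))
```

When both control goals are already satisfied (equal positions and velocities, opposite external torques), both references are zero, which is the equilibrium a bilateral controller is meant to hold.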

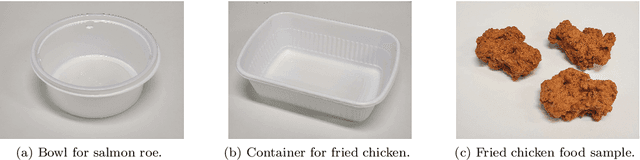

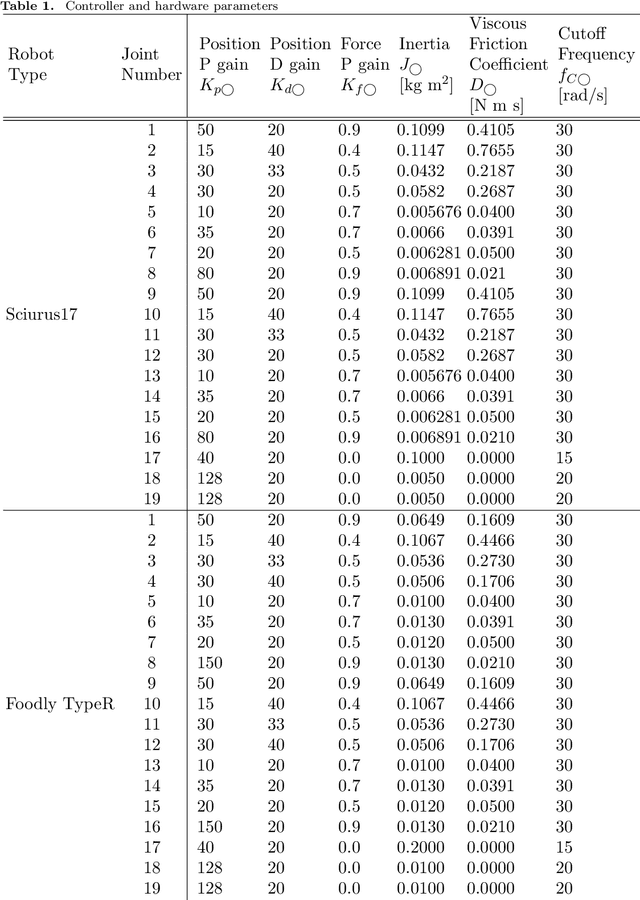

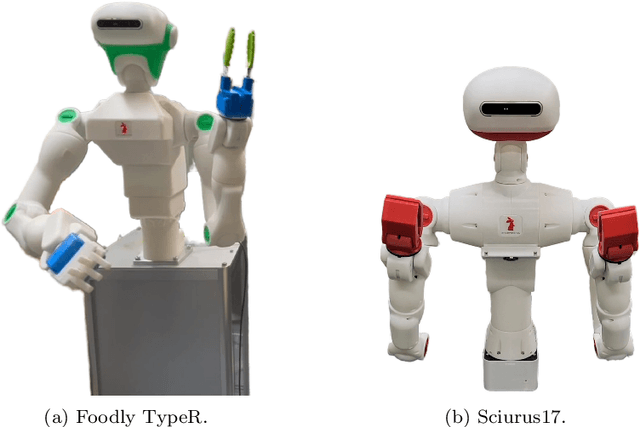

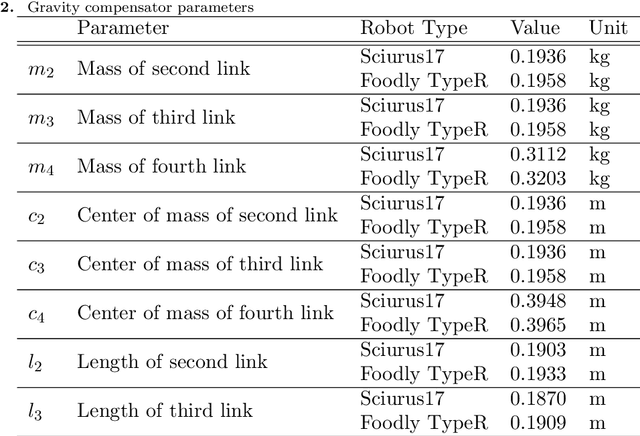

Motion Generation for Food Topping Challenge 2024: Serving Salmon Roe Bowl and Picking Fried Chicken

Apr 30, 2025

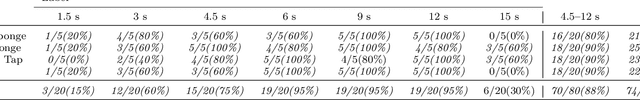

Abstract:Although robots have been introduced in many industries, food production robots are yet to be widely employed because the food industry requires not only delicate movements to handle food but also complex movements that adapt to the environment. Force control is important for handling delicate objects such as food. In addition, achieving complex movements is possible by making robot motions based on human teachings. Four-channel bilateral control is proposed, which enables the simultaneous teaching of position and force information. Moreover, methods have been developed to reproduce motions obtained through human teachings and generate adaptive motions using learning. We demonstrated the effectiveness of these methods for food handling tasks in the Food Topping Challenge at the 2024 IEEE International Conference on Robotics and Automation (ICRA 2024). For the task of serving salmon roe on rice, we achieved the best performance because of the high reproducibility and quick motion of the proposed method. Further, for the task of picking fried chicken, we successfully picked the most pieces of fried chicken among all participating teams. This paper describes the implementation and performance of these methods.

Variable-Speed Teaching-Playback as Real-World Data Augmentation for Imitation Learning

Dec 04, 2024

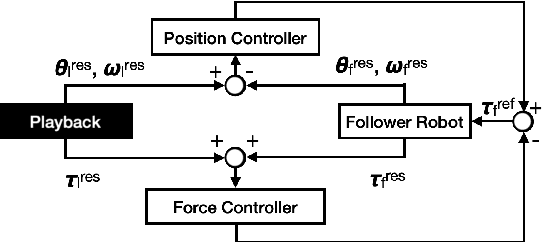

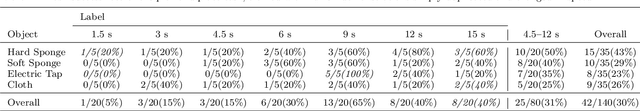

Abstract:Because imitation learning relies on human demonstrations in hard-to-simulate settings, the inclusion of force control in this method has resulted in a shortage of training data, even with a simple change in speed. Although the field of data augmentation has addressed the lack of data, conventional methods of data augmentation for robot manipulation are limited to simulation-based methods or downsampling for position control. This paper proposes a novel method of data augmentation that is applicable to force control and preserves the advantages of real-world datasets. We applied teaching-playback at variable speeds as real-world data augmentation to increase both the quantity and quality of environmental reactions at variable speeds. An experiment was conducted on bilateral control-based imitation learning using a method of imitation learning equipped with position-force control. We evaluated the effect of real-world data augmentation on two tasks, pick-and-place and wiping, at variable speeds, each from two human demonstrations at fixed speed. The results showed a maximum 55% increase in success rate from a simple change in speed of real-world reactions and improved accuracy along the duration/frequency command by gathering environmental reactions at variable speeds.
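The command side of variable-speed playback can be pictured as time-scaling a recorded trajectory before replaying it on the robot. The sketch below resamples a recorded command sequence at a different speed using linear interpolation; it is only an assumed illustration (the function name, the `speed` factor, and the use of linear interpolation are not from the paper). The environmental reactions that make up the augmented dataset would still have to be measured by actually replaying the commands on hardware.

```python
import numpy as np


def rescale_commands(commands, dt, speed):
    """Time-scale a recorded command trajectory (hypothetical helper).

    commands: array of shape (n_steps, n_dims), sampled every dt seconds.
    speed:    > 1 plays back faster, < 1 plays back slower.
    Returns the commands resampled at the same period dt.
    """
    commands = np.asarray(commands, dtype=float)
    if speed <= 0:
        raise ValueError("speed must be positive")
    t_orig = np.arange(commands.shape[0]) * dt
    # Playback lasts (original duration / speed) seconds.
    n_new = int(round(t_orig[-1] / speed / dt)) + 1
    t_new = np.arange(n_new) * dt
    # Each playback instant maps back to an instant in the recording.
    t_query = np.clip(t_new * speed, 0.0, t_orig[-1])
    columns = [np.interp(t_query, t_orig, commands[:, j])
               for j in range(commands.shape[1])]
    return np.column_stack(columns)


if __name__ == "__main__":
    demo = np.arange(5, dtype=float).reshape(-1, 1)  # 0, 1, 2, 3, 4
    print(rescale_commands(demo, dt=1.0, speed=2.0).ravel())
    print(rescale_commands(demo, dt=1.0, speed=0.5).ravel())
```

Pure time-scaling ignores how contact forces change with speed, which is one reason the playback needs to be executed in the real world rather than simulated by resampling alone.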

Four-Axis Adaptive Fingers Hand for Object Insertion: FAAF Hand

Jul 30, 2024

Abstract:Robots operating in the real world face significant but unavoidable issues in object localization that must be dealt with. A typical approach to address this is the addition of compliance mechanisms to hardware to absorb and compensate for some of these errors. However, for fine-grained manipulation tasks, the location and choice of appropriate compliance mechanisms are critical for success. For objects to be inserted in a target site on a flat surface, the object must first be successfully aligned with the opening of the slot, as well as correctly oriented along its central axis, before it can be inserted. We developed the Four-Axis Adaptive Finger Hand (FAAF hand) that is equipped with fingers that can passively adapt in four axes (x, y, z, yaw) enabling it to perform insertion tasks including lid fitting in the presence of significant localization errors. Furthermore, this adaptivity allows the use of simple control methods without requiring contact sensors or other devices. Our results confirm the ability of the FAAF hand on challenging insertion tasks of square and triangle-shaped pegs (or prisms) and placing of container lids in the presence of position errors in all directions and rotational error along the object's central axis, using a simple control scheme.

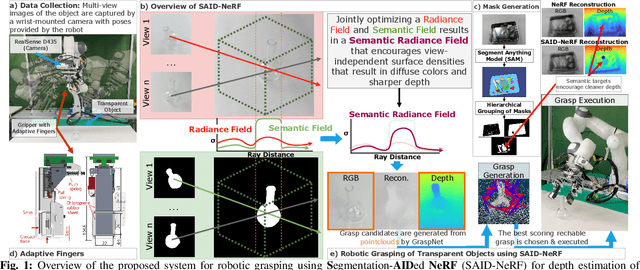

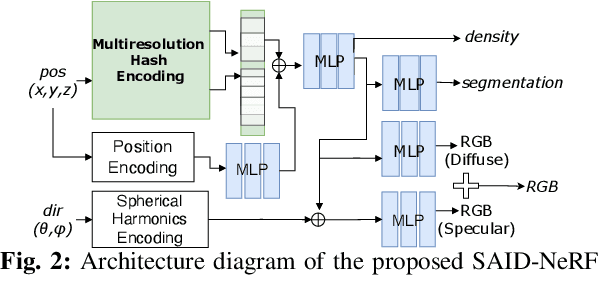

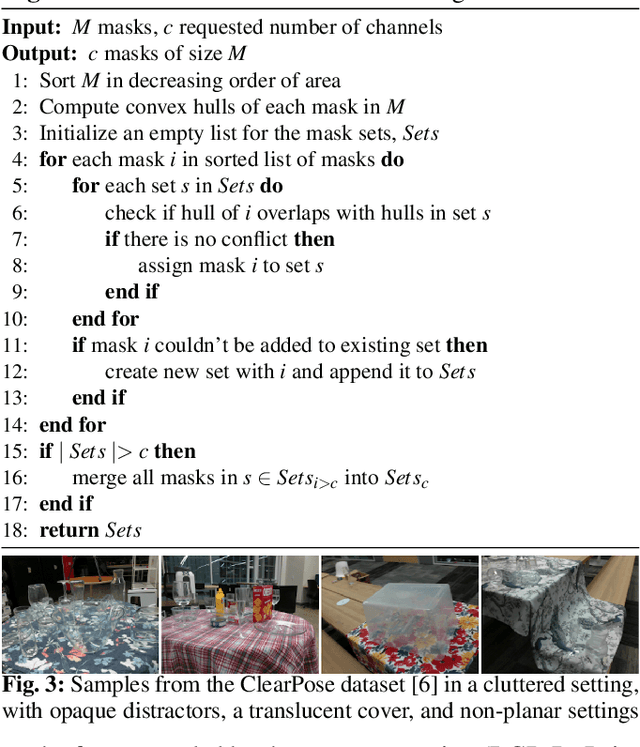

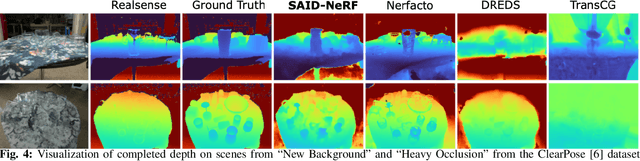

SAID-NeRF: Segmentation-AIDed NeRF for Depth Completion of Transparent Objects

Mar 28, 2024

Abstract:Acquiring accurate depth information of transparent objects using off-the-shelf RGB-D cameras is a well-known challenge in Computer Vision and Robotics. Depth estimation/completion methods are typically employed and trained on datasets with quality depth labels acquired from either simulation, additional sensors, or specialized data collection setups and known 3D models. However, acquiring reliable depth information for datasets at scale is not straightforward, limiting training scalability and generalization. Neural Radiance Fields (NeRFs) are learning-free approaches and have demonstrated wide success in novel view synthesis and shape recovery. However, heuristics and controlled environments (lights, backgrounds, etc.) are often required to accurately capture specular surfaces. In this paper, we propose using Visual Foundation Models (VFMs) for segmentation in a zero-shot, label-free way to guide the NeRF reconstruction process for these objects via the simultaneous reconstruction of semantic fields and extensions to increase robustness. Our proposed method Segmentation-AIDed NeRF (SAID-NeRF) shows significant performance on depth completion datasets for transparent objects and robotic grasping.

Loss Function Considering Dead Zone for Neural Networks

Feb 01, 2024

Abstract:It is important to reveal the inverse dynamics of manipulators to improve the control performance of model-based control. Neural networks (NNs) are promising techniques to represent complicated inverse dynamics, although they require a large amount of motion data. However, motion data in dead zones of actuators is not suitable for training models, which decreases the amount of useful training data. In this study, based on the fact that the manipulator joint does not work irrespective of input torque in dead zones, we propose a new loss function that considers only errors of joints not in dead zones. The proposed method increases the amount of motion data available for training and improves the accuracy of the inverse dynamics computation. Experiments on actual equipment using a three-degree-of-freedom (DOF) manipulator showed higher accuracy than conventional methods. We also confirmed and discussed the behavior of the model of the proposed method in dead zones.
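A loss that "considers only errors of joints not in dead zones" can be sketched as a masked mean squared error. The version below is a minimal illustration under stated assumptions: the function name is hypothetical, the dead-zone mask is supplied by the caller (how the paper detects dead zones is not described in the abstract), and the averaging over active entries is one possible normalization.

```python
import numpy as np


def dead_zone_masked_mse(tau_pred, tau_true, in_dead_zone):
    """Mean squared torque error over joints that are NOT in a dead zone.

    tau_pred, tau_true: arrays of shape (n_samples, n_joints).
    in_dead_zone:       boolean array of the same shape (assumed given).
    """
    tau_pred = np.asarray(tau_pred, dtype=float)
    tau_true = np.asarray(tau_true, dtype=float)
    active = ~np.asarray(in_dead_zone, dtype=bool)
    n_active = int(active.sum())
    if n_active == 0:
        # Every joint is in a dead zone: nothing to learn from this batch.
        return 0.0
    sq_err = (tau_pred - tau_true) ** 2
    # Entries in dead zones contribute neither error nor gradient.
    return float(sq_err[active].sum() / n_active)


if __name__ == "__main__":
    pred = [[1.0, 2.0, 3.0]]
    true = [[1.0, 0.0, 0.0]]
    mask = [[False, True, False]]  # joint 2 is in its dead zone
    print(dead_zone_masked_mse(pred, true, mask))
```

Because the mask is applied per joint rather than per sample, a sample in which only one joint sits in a dead zone still contributes the errors of its other joints, which is how such a loss can keep more of the recorded data usable.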

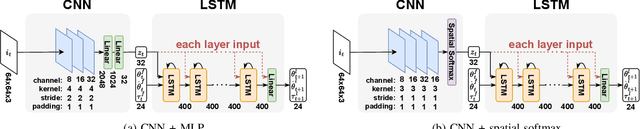

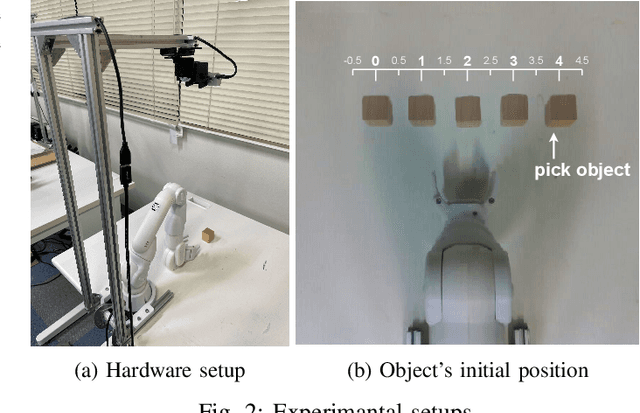

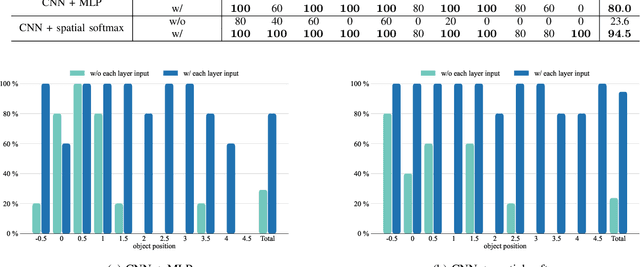

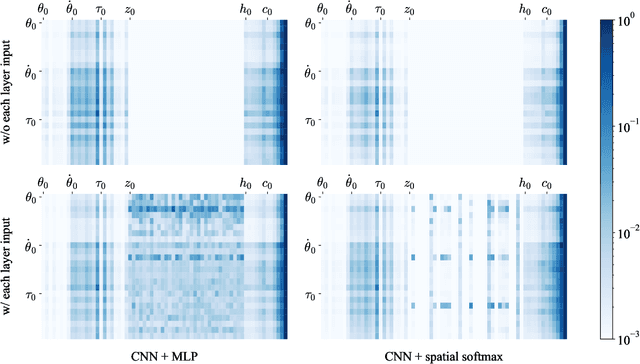

Imitation Learning Inputting Image Feature to Each Layer of Neural Network

Jan 19, 2024

Abstract:Imitation learning enables robots to learn and replicate human behavior from training data. Recent advances in machine learning enable end-to-end learning approaches that directly process high-dimensional observation data, such as images. However, these approaches face a critical challenge when processing data from multiple modalities, inadvertently ignoring data with a lower correlation to the desired output, especially when using short sampling periods. This paper presents a useful method to address this challenge, which amplifies the influence of data with a relatively low correlation to the output by inputting the data into each neural network layer. The proposed approach effectively incorporates diverse data sources into the learning process. Through experiments using a simple pick-and-place operation with raw images and joint information as input, significant improvements in success rates are demonstrated even when dealing with data from short sampling periods.
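The idea of inputting low-correlation data into every layer can be illustrated with a small feed-forward network in which the image feature is concatenated to the input of each layer, not just the first. This is a simplified stand-in and not the architecture from the paper: the layer types, sizes, activation, and all names below are assumptions chosen only to show the wiring.

```python
import numpy as np


def init_layers(in_dim, feat_dim, hidden_dims, out_dim, seed=0):
    """Create weights whose input width includes the injected feature."""
    rng = np.random.default_rng(seed)
    dims = [in_dim, *hidden_dims, out_dim]
    weights = [rng.normal(0.0, 0.1, size=(dims[i + 1], dims[i] + feat_dim))
               for i in range(len(dims) - 1)]
    biases = [np.zeros(dims[i + 1]) for i in range(len(dims) - 1)]
    return weights, biases


def forward_with_feature_injection(joint_state, image_feature, weights, biases):
    """Forward pass that re-feeds image_feature to every layer."""
    h = np.asarray(joint_state, dtype=float)
    z = np.asarray(image_feature, dtype=float)
    last = len(weights) - 1
    for i, (w, b) in enumerate(zip(weights, biases)):
        # The image feature is concatenated again at each layer so deeper
        # layers see it directly instead of only through earlier activations.
        h = w @ np.concatenate([h, z]) + b
        if i < last:
            h = np.tanh(h)
    return h


if __name__ == "__main__":
    weights, biases = init_layers(in_dim=3, feat_dim=4, hidden_dims=[8, 8], out_dim=2)
    out = forward_with_feature_injection(np.zeros(3), np.ones(4), weights, biases)
    print(out.shape)
```

With a zero joint state, the output in this sketch still depends on the image feature at every layer, which is the effect the wiring is meant to demonstrate.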

Soft and Rigid Object Grasping With Cross-Structure Hand Using Bilateral Control-Based Imitation Learning

Nov 16, 2023

Abstract:Object grasping is an important ability required for various robot tasks. In particular, tasks that require precise force adjustments during operation, such as grasping an unknown object or using a grasped tool, are difficult for humans to program in advance. Recently, AI-based algorithms that can imitate human force skills have been actively explored as a solution. In particular, bilateral control-based imitation learning achieves human-level motion speeds with environmental adaptability, only requiring human demonstration and without programming. However, owing to hardware limitations, its grasping performance remains limited, and tasks that involve grasping various objects are yet to be achieved. Here, we developed a cross-structure hand to grasp various objects. We experimentally demonstrated that the integration of bilateral control-based imitation learning and the cross-structure hand is effective for grasping various objects and harnessing tools.

Laboratory Automation: Precision Insertion with Adaptive Fingers utilizing Contact through Sliding with Tactile-based Pose Estimation

Sep 28, 2023

Abstract:Micro well-plates are commonly used apparatus in chemical and biological experiments; they are a few centimeters thick and contain wells. The task we aim to solve is to place (insert) them onto a well-plate holder with grooves a few millimeters in height. Our insertion task has the following facets: 1) there is uncertainty in the detection of the position and pose of the well-plate and well-plate holder, 2) the accuracy required is on the order of millimeter to sub-millimeter, 3) the well-plate holder is not fastened, and moves with external force, 4) the groove is shallow, and 5) the width of the groove is small. Addressing these challenges, we developed a) an adaptive finger gripper with accurate detection of finger position (for (1)), b) grasped object pose estimation using tactile sensors (for (1)), c) a method to insert the well-plate into the target holder by sliding the well-plate while maintaining contact with the edge of the holder (for (2-4)), and d) estimating the orientation of the edge and aligning the well-plate so that the holder does not move when maintaining contact with the edge (for (5)). We show a high success rate on the well-plate insertion task, even under added noise. An accompanying video is available at the following link: https://drive.google.com/file/d/1UxyJ3XIxqXPnHcpfw-PYs5T5oYQxoc6i/view?usp=sharing
