Kuniyuki Takahashi

Four-Axis Adaptive Fingers Hand for Object Insertion: FAAF Hand

Jul 30, 2024

Abstract: Robots operating in the real world face significant but unavoidable object localization errors that must be dealt with. A typical approach is to add compliance mechanisms to the hardware to absorb and compensate for some of these errors. However, for fine-grained manipulation tasks, the location and choice of appropriate compliance mechanisms are critical for success. For an object to be inserted into a target site on a flat surface, it must first be aligned with the opening of the slot and correctly oriented about its central axis. We developed the Four-Axis Adaptive Finger Hand (FAAF hand), which is equipped with fingers that can passively adapt in four axes (x, y, z, yaw), enabling it to perform insertion tasks, including lid fitting, in the presence of significant localization errors. Furthermore, this adaptivity allows the use of simple control methods without requiring contact sensors or other devices. Our results confirm the ability of the FAAF hand to perform challenging insertion tasks with square and triangle-shaped pegs (or prisms) and to place container lids in the presence of position errors in all directions and rotational error about the object's central axis, using a simple control scheme.

Stable Object Placing using Curl and Diff Features of Vision-based Tactile Sensors

Mar 28, 2024

Abstract: Ensuring stable object placement is crucial to prevent objects from toppling over, breaking, or causing spills. When an object makes initial contact with a surface and some force is exerted, the moment of rotation caused by the instability of the object's placement can cause the object to rotate in a certain direction (henceforth referred to as the direction of corrective rotation). Existing methods often employ a Force/Torque (F/T) sensor to estimate the direction of corrective rotation by detecting the moment of rotation as a torque. However, the effectiveness of this approach may be hampered by sensor noise and the tension of the robot's external cables. To address these issues, we propose a method for stable object placing using GelSights, vision-based tactile sensors, as an alternative to F/T sensors. Our method estimates the direction of corrective rotation of objects using the displacement of the black dot pattern on the elastomeric surface of the GelSight. We calculate the Curl from vector analysis, which indicates the magnitude and direction of the rotational field of the black dot pattern's displacement. Simultaneously, we calculate the difference (Diff) in displacement between the black dots of the left and right fingers' GelSights. The robot can then manipulate the object's pose using the Curl and Diff features, facilitating stable placing. Across experiments with 18 differently characterized objects, our method achieves precise placing accuracy (less than 1-degree error) in nearly 100% of cases. An accompanying video is available at the following link: https://youtu.be/fQbmCksVHlU
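The Curl and Diff features described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the least-squares rotation estimate used to approximate the mean curl of a scattered dot displacement field, and the use of mean displacement vectors for Diff are all assumptions made for illustration.

```python
import math

def mean_curl(points, displacements):
    """Approximate the mean curl of a 2D displacement field sampled at dots.

    Assumption: the field is summarized by the best-fit rotation rate omega
    about the dot centroid, and the curl of a pure rotation is 2 * omega.
    """
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    num = 0.0  # sum of r x d (z-component of the cross product)
    den = 0.0  # sum of |r|^2
    for (x, y), (dx, dy) in zip(points, displacements):
        rx, ry = x - cx, y - cy
        num += rx * dy - ry * dx
        den += rx * rx + ry * ry
    return 2.0 * num / den

def mean_displacement(displacements):
    """Average displacement vector of one finger's dot pattern."""
    n = len(displacements)
    return (sum(d[0] for d in displacements) / n,
            sum(d[1] for d in displacements) / n)

def diff_feature(left_displacements, right_displacements):
    """Hypothetical Diff: left-finger mean displacement minus right-finger mean."""
    lx, ly = mean_displacement(left_displacements)
    rx, ry = mean_displacement(right_displacements)
    return (lx - rx, ly - ry)

# Synthetic example: a 5x5 dot grid rotated by a small angle (linearized).
omega = 0.01
grid = [(float(i), float(j)) for i in range(5) for j in range(5)]
rotation = [(-omega * y, omega * x) for x, y in grid]
curl = mean_curl(grid, rotation)
diff = diff_feature([(0.2, 0.0)] * 4, [(-0.1, 0.0)] * 4)
```

In this sketch, the sign of `curl` would indicate the rotation direction of the dot pattern, and `diff` would capture how differently the two fingers' patterns shifted; how the paper combines them into a control signal is not specified here.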

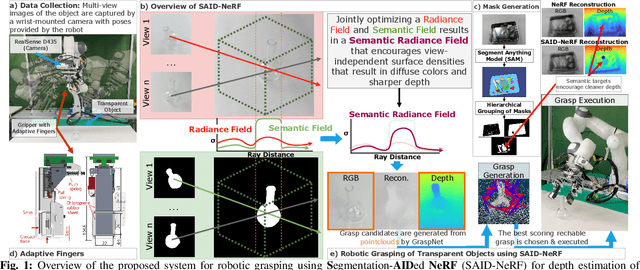

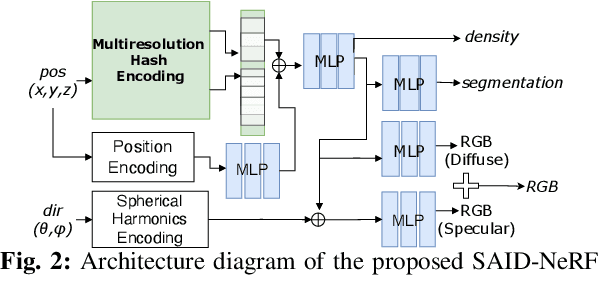

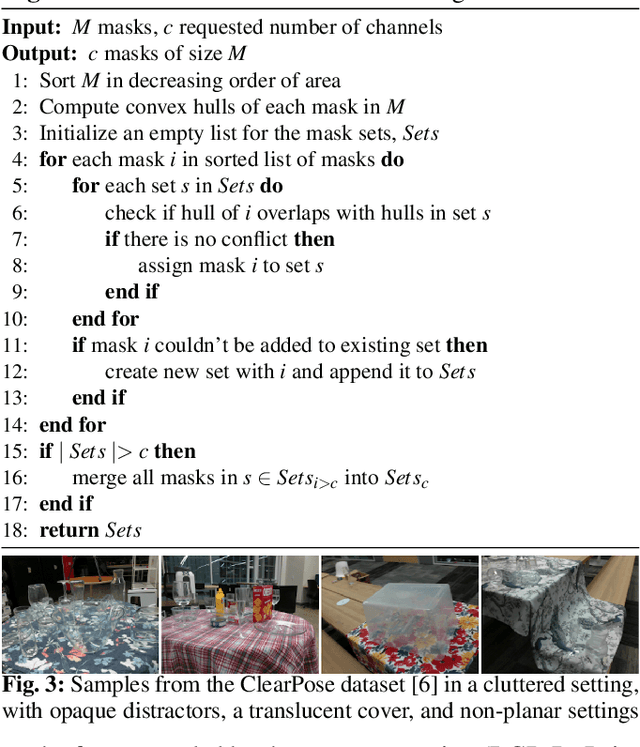

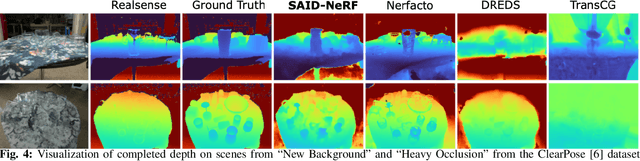

SAID-NeRF: Segmentation-AIDed NeRF for Depth Completion of Transparent Objects

Mar 28, 2024

Abstract: Acquiring accurate depth information of transparent objects using off-the-shelf RGB-D cameras is a well-known challenge in Computer Vision and Robotics. Depth estimation/completion methods are typically employed and trained on datasets with quality depth labels acquired from simulation, additional sensors, or specialized data collection setups and known 3D models. However, acquiring reliable depth information for datasets at scale is not straightforward, limiting training scalability and generalization. Neural Radiance Fields (NeRFs) are learning-free approaches and have demonstrated wide success in novel view synthesis and shape recovery. However, heuristics and controlled environments (lights, backgrounds, etc.) are often required to accurately capture specular surfaces. In this paper, we propose using Visual Foundation Models (VFMs) for segmentation in a zero-shot, label-free way to guide the NeRF reconstruction process for these objects via the simultaneous reconstruction of semantic fields and extensions to increase robustness. Our proposed method, Segmentation-AIDed NeRF (SAID-NeRF), shows significant performance on depth completion datasets for transparent objects and on robotic grasping.

Laboratory Automation: Precision Insertion with Adaptive Fingers utilizing Contact through Sliding with Tactile-based Pose Estimation

Sep 28, 2023

Abstract: Micro well-plates, a commonly used apparatus in chemical and biological experiments, are a few centimeters thick and contain wells. The task we aim to solve is to place (insert) them onto a well-plate holder with grooves a few millimeters in height. Our insertion task has the following facets: 1) there is uncertainty in the detection of the position and pose of the well-plate and well-plate holder, 2) the accuracy required is on the order of millimeters to sub-millimeters, 3) the well-plate holder is not fastened and moves under external force, 4) the groove is shallow, and 5) the width of the groove is small. Addressing these challenges, we developed a) an adaptive finger gripper with accurate detection of finger position (for (1)), b) grasped object pose estimation using tactile sensors (for (1)), c) a method to insert the well-plate into the target holder by sliding the well-plate while maintaining contact with the edge of the holder (for (2-4)), and d) a method for estimating the orientation of the edge and aligning the well-plate so that the holder does not move while contact with the edge is maintained (for (5)). We show a significantly high success rate on the well-plate insertion task, even under added noise. An accompanying video is available at the following link: https://drive.google.com/file/d/1UxyJ3XIxqXPnHcpfw-PYs5T5oYQxoc6i/view?usp=sharing

Two-fingered Hand with Gear-type Synchronization Mechanism with Magnet for Improved Small and Offset Objects Grasping: F2 Hand

Sep 20, 2023

Abstract: A problem that plagues robotic grasping is the misalignment of the object and gripper due to difficulties in precise localization, actuation, etc. Under-actuated robotic hands with compliant mechanisms are used to adapt and compensate for these inaccuracies. However, these mechanisms come at the cost of controllability and coordination. For instance, adaptive functions that let the fingers of a two-fingered gripper adapt independently may affect the coordination necessary for grasping small objects. In this work, we develop a two-fingered robotic hand capable of grasping objects that are offset from the gripper's center, while still having the requisite coordination for grasping small objects via a novel gear-type synchronization mechanism with a magnet. This gear synchronization mechanism allows the adaptive fingers' tips to be aligned, enabling the hand to grasp objects as small as toothpicks and washers. The magnetic component allows this coordination to turn off automatically when needed, allowing for the grasping of objects that are offset/misaligned from the gripper. This equips the hand with the capability of grasping objects ranging from light, fragile items (strawberries, cream puffs, etc.) to heavy frying pan lids, all while maintaining their position and posture, which is vital in numerous applications that require precise positioning or careful manipulation.

Goal-Image Conditioned Dynamic Cable Manipulation through Bayesian Inference and Multi-Objective Black-Box Optimization

Jan 27, 2023

Abstract: To perform dynamic cable manipulation that realizes the configuration specified by a target image, we formulate dynamic cable manipulation as a stochastic forward model. We then propose a method to handle uncertainty by maximizing the expectation, which also considers estimation errors of the trained model. To avoid issues such as multiple local minima and the differentiability requirement of gradient-based methods, we propose using black-box optimization (BBO) to optimize joint angles to realize a goal image. Among BBO methods, we use the Tree-structured Parzen Estimator (TPE), a type of Bayesian optimization. By incorporating constraints into the TPE, the optimized joint angles are constrained within the range of motion. Since TPE is population-based, it is better able to detect multiple feasible configurations using the estimated inverse model. Using the optimal transport distance, we evaluated the image similarity between the target image and the cable images captured after executing the motion on the robot. The results show that the proposed method improves accuracy compared to conventional gradient-based approaches and methods that use deterministic models that do not consider uncertainty.
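The idea of optimizing joint angles against a stochastic forward model by maximizing an expected score, with the search restricted to the range of motion, can be sketched as follows. This sketch deliberately swaps in plain random search instead of TPE, and the forward model, score, joint limits, and all names are hypothetical stand-ins rather than anything taken from the paper.

```python
import random

# Hypothetical joint limits (range of motion) for a two-joint toy robot.
JOINT_LIMITS = [(-1.0, 1.0), (-0.5, 0.5)]

def forward_model(angles, rng):
    """Toy stochastic forward model: a noisy scalar outcome of the motion."""
    return sum(angles) + rng.gauss(0.0, 0.05)

def expected_score(angles, goal, rng, n_samples=32):
    """Monte Carlo estimate of the expected (negative squared) error."""
    total = 0.0
    for _ in range(n_samples):
        outcome = forward_model(angles, rng)
        total += -(outcome - goal) ** 2
    return total / n_samples

def random_search(goal, n_trials=200, seed=0):
    """Black-box search: sample only inside the joint limits, keep the best."""
    rng = random.Random(seed)
    best_angles, best_score = None, float("-inf")
    for _ in range(n_trials):
        angles = [rng.uniform(lo, hi) for lo, hi in JOINT_LIMITS]
        score = expected_score(angles, goal, rng)
        if score > best_score:
            best_angles, best_score = angles, score
    return best_angles, best_score

best_angles, best_score = random_search(goal=0.8)
```

Because candidates are drawn only from within `JOINT_LIMITS`, the constraint is satisfied by construction. A model-based optimizer such as TPE would replace the uniform sampling step with proposals informed by earlier trials.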

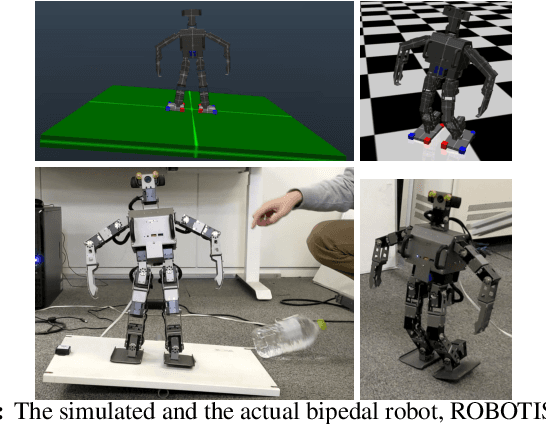

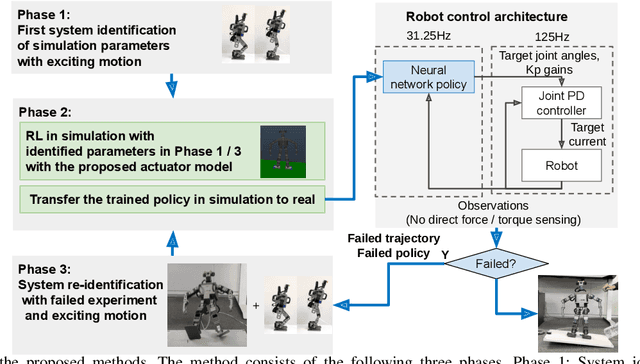

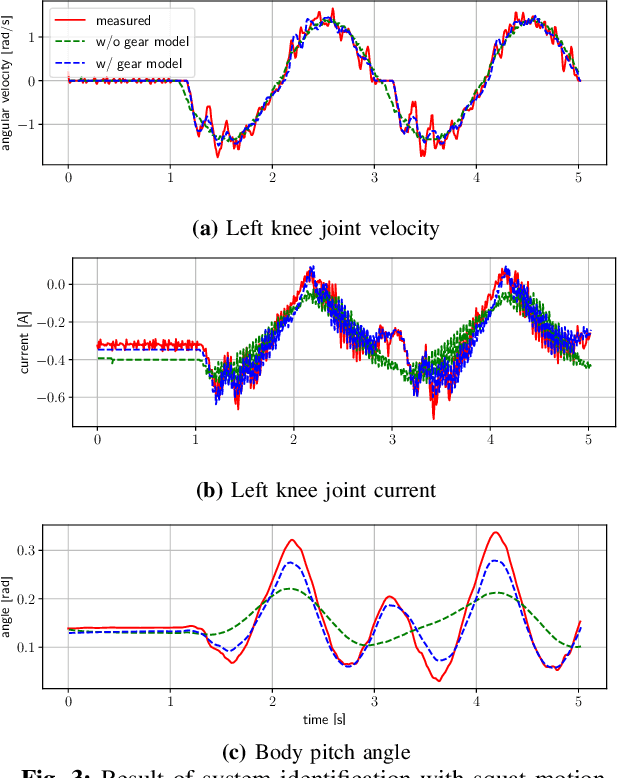

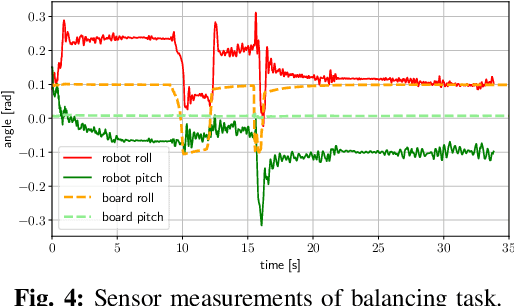

Sim-to-Real Learning of Robust Compliant Bipedal Locomotion on Torque Sensor-Less Gear-Driven Humanoid

Apr 08, 2022

Abstract: In deep reinforcement learning, sim-to-real is the mainstream approach because training requires a large number of trials; however, transferring the trained policy is challenging due to the reality gap. In particular, it is known that the characteristics of actuators in legged robots have a considerable influence on the reality gap, and this is also noticeable in high reduction ratio gears. Therefore, we propose a new simulation model of high reduction ratio gears to reduce the reality gap. The instability of bipedal locomotion causes the sim-to-real transfer to fail catastrophically, making system identification of the physical parameters of the simulation difficult. Thus, we also propose a system identification method that utilizes the failure experience. The realistic simulations obtained through these improvements allow the robot to perform compliant bipedal locomotion by reinforcement learning. The effectiveness of the method is verified using an actual biped robot, ROBOTIS-OP3, and the sim-to-real transferred policy succeeded in stabilizing the robot under severe disturbances and walking on uneven terrain without force and torque sensors.

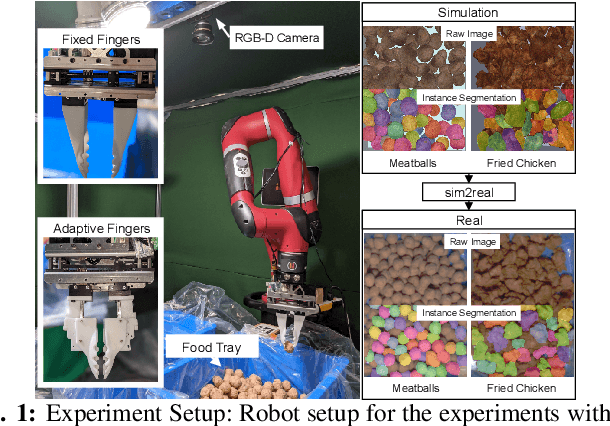

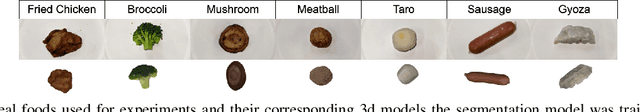

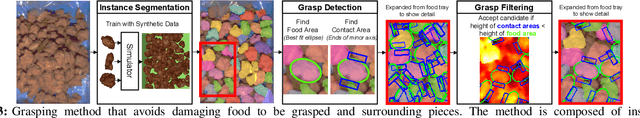

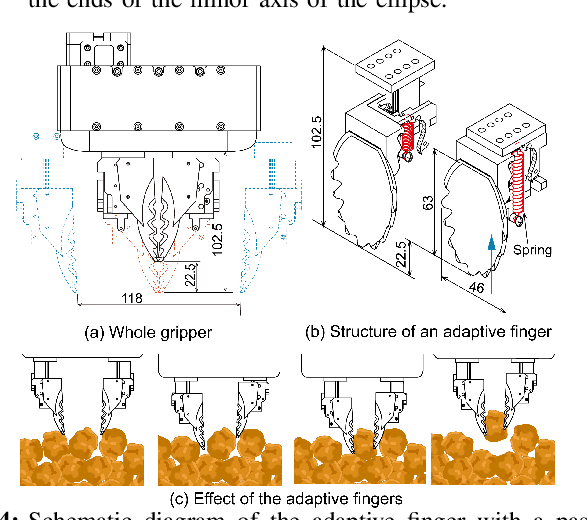

Cluttered Food Grasping with Adaptive Fingers and Synthetic-Data Trained Object Detection

Mar 10, 2022

Abstract: The food packaging industry handles an immense variety of food products with wide-ranging shapes and sizes, even within one kind of food. Menus are also diverse and change frequently, making automation of pick-and-place difficult. A popular approach to bin-picking is to first identify each piece of food in the tray using an instance segmentation method. However, human annotations for training these methods are unreliable and error-prone, since foods are packed close together with unclear boundaries and visual similarity, making separation of pieces difficult. To address this problem, we propose a method that trains purely on synthetic data and successfully transfers to the real world using sim2real methods, creating datasets of filled food trays from high-quality 3D models of real pieces of food to train instance segmentation models. Another concern is that foods are easily damaged during grasping. We address this by introducing two additional methods: a novel adaptive finger mechanism that passively retracts when a collision occurs, and a method to filter out grasps that are likely to damage neighbouring pieces of food. We demonstrate the effectiveness of the proposed method on several kinds of real foods.

Collision-free Path Planning on Arbitrary Optimization Criteria in the Latent Space through cGANs

Feb 26, 2022

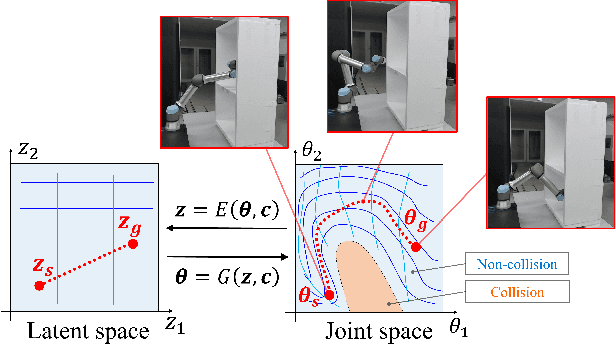

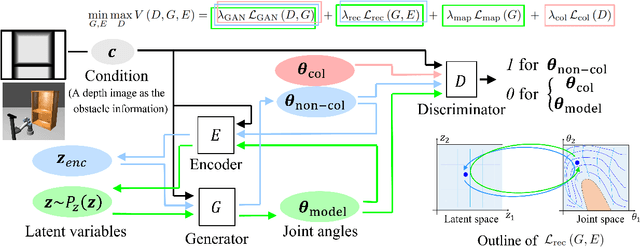

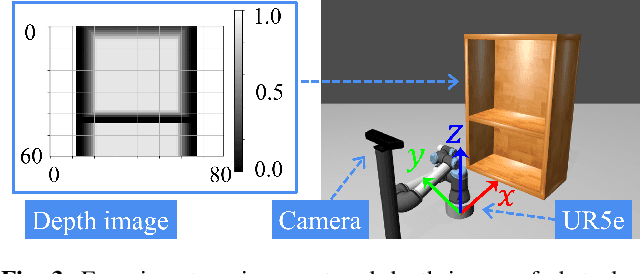

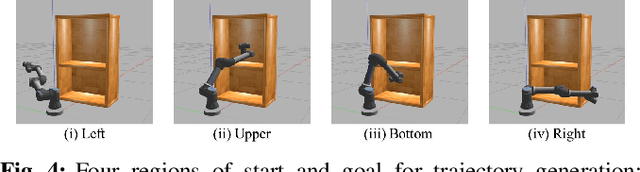

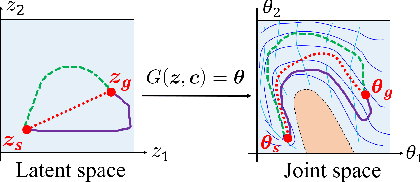

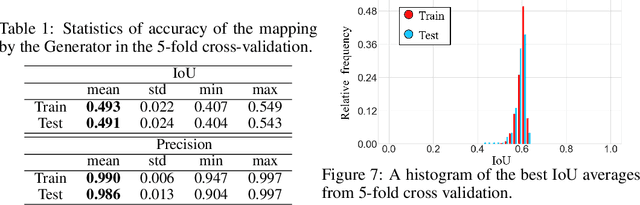

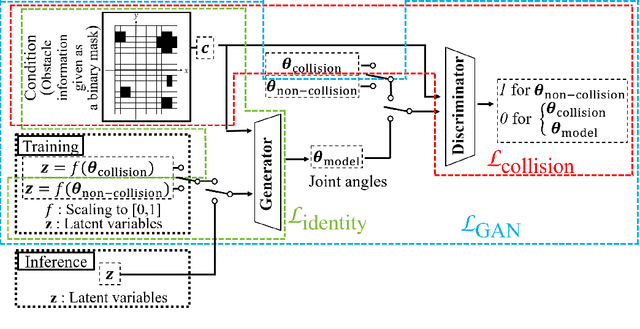

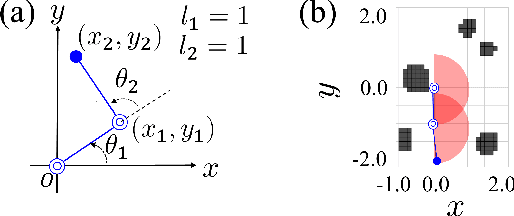

Abstract: We propose a new method for collision-free path planning using Conditional Generative Adversarial Networks (cGANs) that maps the latent space to only the collision-free areas of the robot joint space when an obstacle map is given as a condition. When manipulating a robot arm, it is necessary for safety reasons to generate a trajectory that avoids contact with the robot itself and the surrounding environment, and it is convenient to be able to generate multiple arbitrary trajectories suited to different purposes. In the proposed method, various obstacle-avoiding trajectories can be generated by connecting the start and goal with arbitrary line segments in this latent space. Our method simply provides this collision-free latent space, after which any planner, using any optimization conditions, can be used to generate the most suitable paths on the fly. We successfully verified this method with a simulated and an actual UR5e 6-DoF robotic arm. We confirmed that different trajectories can be generated according to different optimization conditions.
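The core idea, connecting start and goal with a line segment in a latent space whose image covers only collision-free configurations, can be sketched with a toy example. The `toy_generator` below is a hand-written, hypothetical stand-in for a trained cGAN generator: it maps the unit square onto a half-annulus around a unit-disc obstacle in a 2D "joint space". None of the names or geometry come from the paper.

```python
import math

def toy_generator(z):
    """Hypothetical stand-in for a trained generator.

    Maps latent (u, v) in [0, 1]^2 to a 2D configuration whose distance
    from the origin is in [1, 2], so it never enters the unit-disc obstacle.
    """
    u, v = z
    radius = 1.0 + u
    theta = math.pi * v
    return (radius * math.cos(theta), radius * math.sin(theta))

def latent_line(z_start, z_goal, n_points=20):
    """Evenly spaced points on the straight segment between two latent codes."""
    return [
        tuple(a + (b - a) * t / (n_points - 1) for a, b in zip(z_start, z_goal))
        for t in range(n_points)
    ]

def plan(z_start, z_goal, n_points=20):
    """Decode a straight latent segment into a path in configuration space."""
    return [toy_generator(z) for z in latent_line(z_start, z_goal, n_points)]

# Start at (1.5, 0) and end at (-1.5, 0): a straight line in configuration
# space would cut through the obstacle, but the decoded latent line does not.
path = plan((0.5, 0.0), (0.5, 1.0))
```

In this toy setup the straight latent segment decodes to an arc around the obstacle. Choosing a different latent curve between the same endpoints would yield a different collision-free path, which is where a planner with its own optimization criterion could be plugged in.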

Collision-free Path Planning in the Latent Space through cGANs

Feb 15, 2022

Abstract: We present a new method for collision-free path planning using cGANs that maps the latent space to only the collision-free areas of the robot joint space. Our method simply provides this collision-free latent space, after which any planner, using any optimization conditions, can be used to generate the most suitable paths on the fly. We successfully verified this method with a simulated two-link robot arm.
