Jesús M. Gómez-de-Gabriel

Robotics and Mechatronics Group, University of Malaga, Spain

A Reactive performance-based Shared Control Framework for Assistive Robotic Manipulators

Nov 06, 2023

Abstract: In grippers for Physical Human-Robot Interaction (pHRI), humans and robots may contribute to actions simultaneously, so their commands must be combined. Control may be swapped from one to the other within certain limits, or the input commands may be combined according to some criterion. The Assist-As-Needed (AAN) paradigm follows this second approach, because the controller is expected to provide users with only the minimum assistance they require. Some AAN systems rely on predicting human intention to adjust their actions. However, when intention is too hard to predict, reactive AAN systems can instead weight the input commands to produce an emergent one. This paper proposes a novel reactive AAN control system for a robot gripper in which each input command is weighted by its local performance. Thus, rather than the controller minimizing tracking errors or deviations from expected velocities, humans receive more or less help depending on their needs. The system has been tested using a gripper attached to a sensitive robot arm, which provides the evaluation parameters. The tests consisted of completing an in-air planar path with both arms. After the robot gripped a person's forearm, the path and the current position of the robot were displayed on a screen as feedback to the human. The proposed controller was benchmarked against unassisted operation and against impedance control. A statistical analysis of the results for ten volunteers shows that the proposed controller improved global performance and reduced tracking errors. Moreover, unlike impedance control, the proposed controller does not significantly affect exerted forces, command variation, or disagreement (measured as the angular difference between the human and output commands). The results support the conclusion that the proposed control scheme fits the AAN paradigm, although further tests in more complex environments and tasks are still needed.
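The abstract does not say how local performance is computed, so the following is only a minimal sketch under stated assumptions: two non-negative performance scores are supplied from outside (a hypothetical interface), and the planar human and robot commands are blended with weights proportional to those scores. The `disagreement` helper computes the angular difference between the human and output commands, the metric named in the abstract. This illustrates performance-weighted blending in general, not the authors' controller.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # planar command, e.g. an (x, y) velocity


def blend_commands(u_human: Vec2, u_robot: Vec2,
                   perf_human: float, perf_robot: float) -> Vec2:
    """Weight each command by its normalized local performance score.

    perf_human and perf_robot are hypothetical, externally computed scores:
    the higher a source's score, the more it contributes to the output.
    """
    if perf_human < 0 or perf_robot < 0:
        raise ValueError("performance scores must be non-negative")
    total = perf_human + perf_robot
    if total == 0:
        w_h = w_r = 0.5  # no information about either source: split evenly
    else:
        w_h, w_r = perf_human / total, perf_robot / total
    # Convex combination of the two commands.
    return (w_h * u_human[0] + w_r * u_robot[0],
            w_h * u_human[1] + w_r * u_robot[1])


def disagreement(u_human: Vec2, u_out: Vec2) -> float:
    """Angle in radians, in [0, pi], between the human and output commands."""
    if math.hypot(*u_human) == 0 or math.hypot(*u_out) == 0:
        return 0.0  # angle undefined for a zero command; report no disagreement
    a = math.atan2(u_human[1], u_human[0])
    b = math.atan2(u_out[1], u_out[0])
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)  # wrap to the smaller of the two angles
```

For example, `blend_commands((1.0, 0.0), (0.0, 1.0), 0.25, 0.75)` returns `(0.25, 0.75)`: when the human's local performance is low, the robot's command dominates, which matches the intent of giving more help to users who need it. Because the weights sum to one, the output is a convex combination and never exceeds the larger input command in magnitude.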

Bayesian and Neural Inference on LSTM-based Object Recognition from Tactile and Kinesthetic Information

Jun 10, 2023

Abstract: Recent advances in intelligent robotic manipulation aim to give robotic hands a sense of touch. Haptic perception encompasses the sensing modalities involved in the sense of touch (e.g., tactile and kinesthetic sensations). This letter focuses on multimodal object recognition and proposes analytical and data-driven methodologies to fuse tactile- and kinesthetic-based classification results. The procedure is as follows: a three-finger actuated gripper with an integrated high-resolution tactile sensor performs squeeze-and-release Exploratory Procedures (EPs). The tactile images and the kinesthetic information acquired by angular sensors on the finger joints constitute the time-series datasets of interest. Each temporal dataset is fed to a Long Short-Term Memory (LSTM) neural network trained to classify in-hand objects. The LSTMs estimate the posterior probability of each object given the corresponding measurements; these estimates are then fused to identify the object through Bayesian and neural inference approaches. A 36-class experiment is carried out to evaluate and compare the performance of the fused, tactile, and kinesthetic perception systems. The results show that the Bayesian-based classifiers improve object recognition and outperform the neural-based approach.
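One standard way to fuse two classifiers' posteriors in a Bayesian manner, assuming the tactile and kinesthetic measurements are conditionally independent given the object class, is P(c | t, k) ∝ P(c | t) · P(c | k) / P(c). The sketch below implements that rule with an assumed uniform prior by default; the abstract does not give the authors' exact formulation, so treat it as an illustrative assumption. The per-class posterior vectors stand in for the LSTMs' softmax outputs.

```python
from typing import List, Optional, Sequence


def fuse_posteriors(p_tactile: Sequence[float],
                    p_kinesthetic: Sequence[float],
                    prior: Optional[Sequence[float]] = None) -> List[float]:
    """Fuse two per-class posteriors assuming conditional independence.

    P(c | t, k) is proportional to P(c | t) * P(c | k) / P(c).
    """
    n = len(p_tactile)
    if len(p_kinesthetic) != n:
        raise ValueError("posterior vectors must have the same length")
    if prior is None:
        prior = [1.0 / n] * n  # assumed uniform class prior
    if len(prior) != n or any(pc <= 0 for pc in prior):
        raise ValueError("prior must be positive and match the number of classes")
    unnormalized = [pt * pk / pc
                    for pt, pk, pc in zip(p_tactile, p_kinesthetic, prior)]
    z = sum(unnormalized)
    if z == 0:
        raise ValueError("the two posteriors share no support")
    return [u / z for u in unnormalized]  # renormalize to a distribution


def predict(posterior: Sequence[float]) -> int:
    """Index of the maximum a posteriori class."""
    return max(range(len(posterior)), key=lambda i: posterior[i])
```

With a tactile posterior of `[0.6, 0.3, 0.1]` and a kinesthetic posterior of `[0.2, 0.7, 0.1]`, the tactile classifier alone would pick class 0, but the fused posterior favors class 1, since the product rule rewards classes that both modalities consider plausible.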

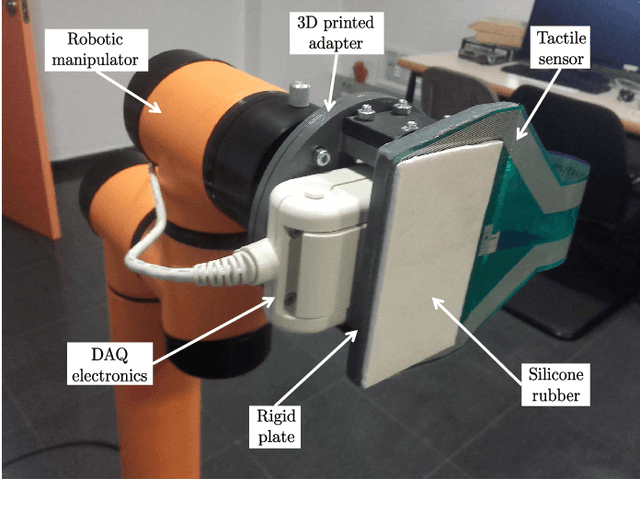

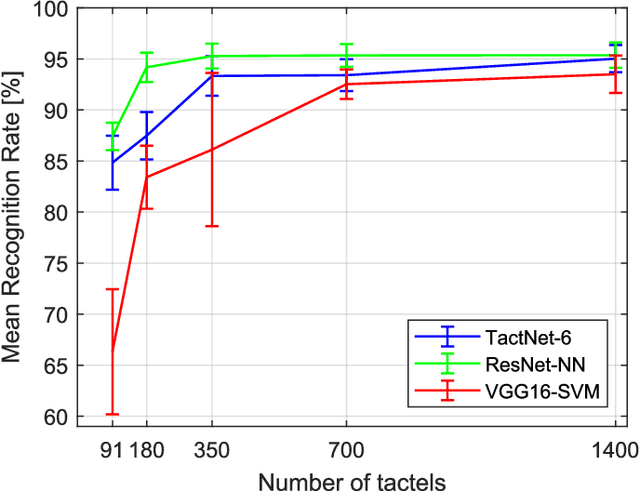

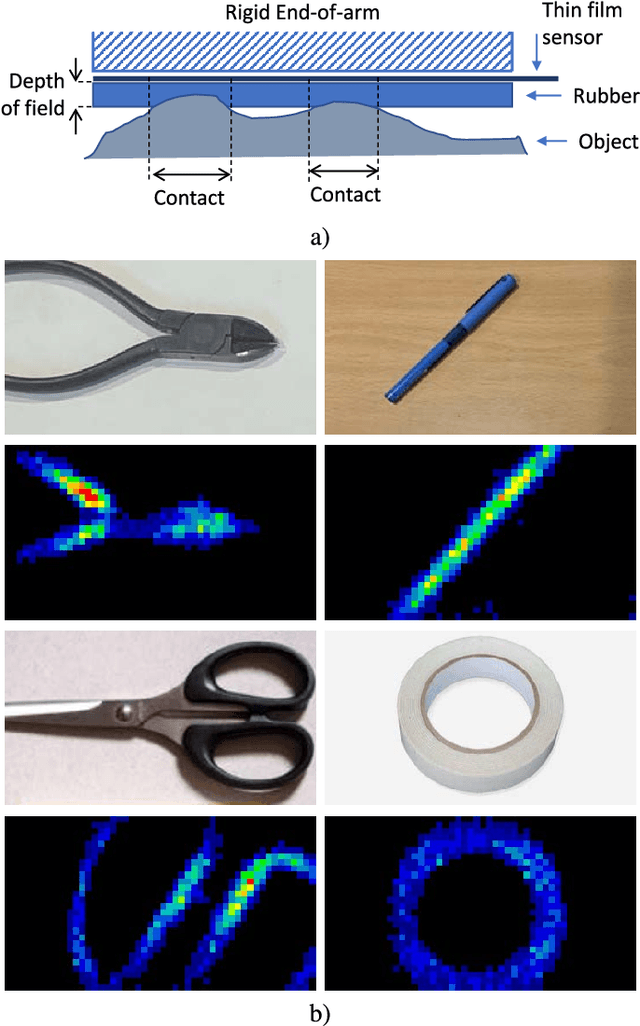

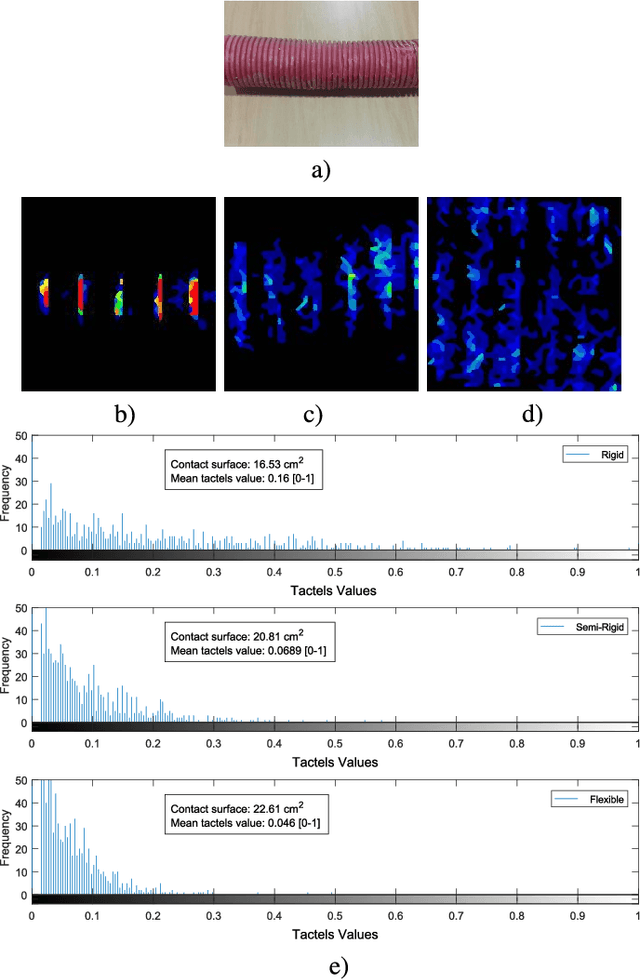

CNN-based Methods for Object Recognition with High-Resolution Tactile Sensors

May 21, 2023

Abstract: Novel high-resolution pressure-sensor arrays allow pressure readings to be treated as standard images, so computer vision methods such as Convolutional Neural Networks (CNNs) can be used to identify contacted objects. In this paper, a high-resolution tactile sensor has been attached to a robotic end-effector to identify the objects it contacts. Two CNN-based approaches have been employed to classify pressure images: a transfer learning approach using a CNN pre-trained on an RGB-image dataset, and a custom CNN (TactNet) trained from scratch on tactile information. Transfer learning can be carried out either by retraining the classification layers of the network or by replacing these layers with a Support Vector Machine (SVM). Overall, 11 configurations based on these methods have been tested: 8 based on transfer learning and 3 based on TactNet. In addition, the performance of the methods is studied and compared with the current state of the art in tactile object recognition.
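To make "treating pressure readings as standard images" concrete, here is a minimal sketch, assuming the sensor frame arrives as a 2-D array of non-negative pressures and that a hypothetical full-scale value `p_max` is known. Each reading is clipped and scaled to an 8-bit intensity, and the single channel is replicated three times, which is one common way to match the input of a CNN pre-trained on RGB images. The abstract does not state how the authors prepared their inputs, and resizing to a network's expected resolution is not covered here.

```python
from typing import List


def pressure_to_rgb(pressure: List[List[float]], p_max: float) -> List[List[List[int]]]:
    """Map an H x W pressure array to an H x W x 3 8-bit image.

    p_max is a hypothetical full-scale pressure: readings at or above it map
    to 255, readings at or below zero map to 0.
    """
    if p_max <= 0:
        raise ValueError("p_max must be positive")
    image = []
    for row in pressure:
        out_row = []
        for p in row:
            clipped = min(max(p, 0.0), p_max)  # keep readings within sensor range
            v = round(255 * clipped / p_max)   # scale to an 8-bit intensity
            out_row.append([v, v, v])          # grayscale replicated to 3 channels
        image.append(out_row)
    return image
```

For instance, `pressure_to_rgb([[0.0, 20.0], [100.0, 150.0]], p_max=100.0)` maps the four readings to intensities 0, 51, 255, and 255, the last one saturating because it exceeds the assumed full scale.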
