Juan L. Gamella

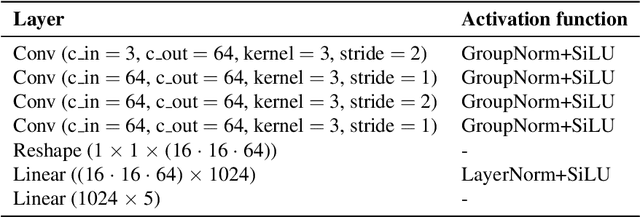

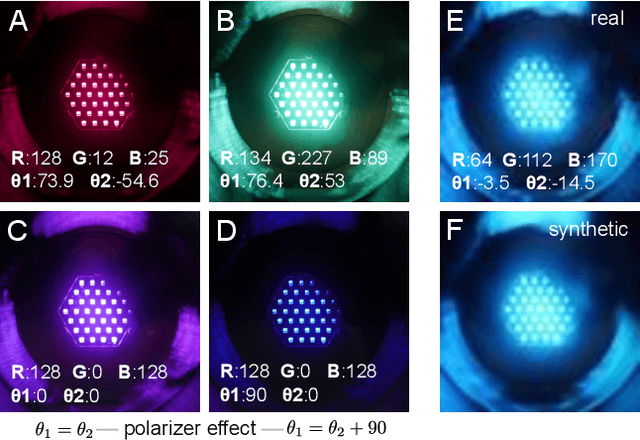

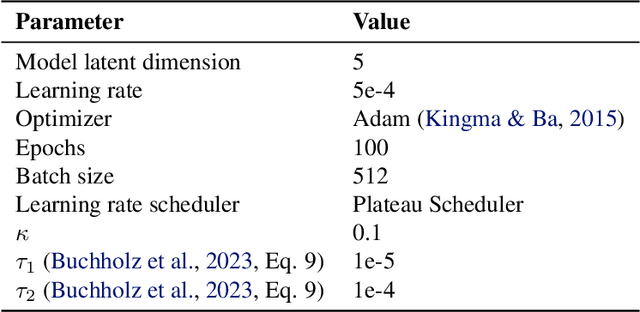

Sanity Checking Causal Representation Learning on a Simple Real-World System

Feb 27, 2025

Abstract:We evaluate methods for causal representation learning (CRL) on a simple, real-world system where these methods are expected to work. The system consists of a controlled optical experiment specifically built for this purpose, which satisfies the core assumptions of CRL and where the underlying causal factors (the inputs to the experiment) are known, providing a ground truth. We select methods representative of different approaches to CRL and find that they all fail to recover the underlying causal factors. To understand the failure modes of the evaluated algorithms, we perform an ablation on the data by substituting the real data-generating process with a simpler synthetic equivalent. The results reveal a reproducibility problem, as most methods already fail on this synthetic ablation despite its simple data-generating process. Additionally, we observe that common assumptions on the mixing function are crucial for the performance of some of the methods but do not hold in the real data. Our efforts highlight the contrast between the theoretical promise of the state of the art and the challenges in its application. We hope the benchmark serves as a simple, real-world sanity check to further develop and validate methodology, bridging the gap towards CRL methods that work in practice. We make all code and datasets publicly available at github.com/simonbing/CRLSanityCheck

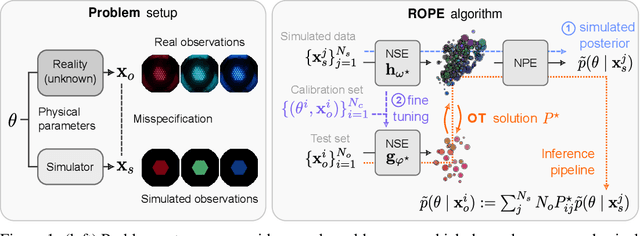

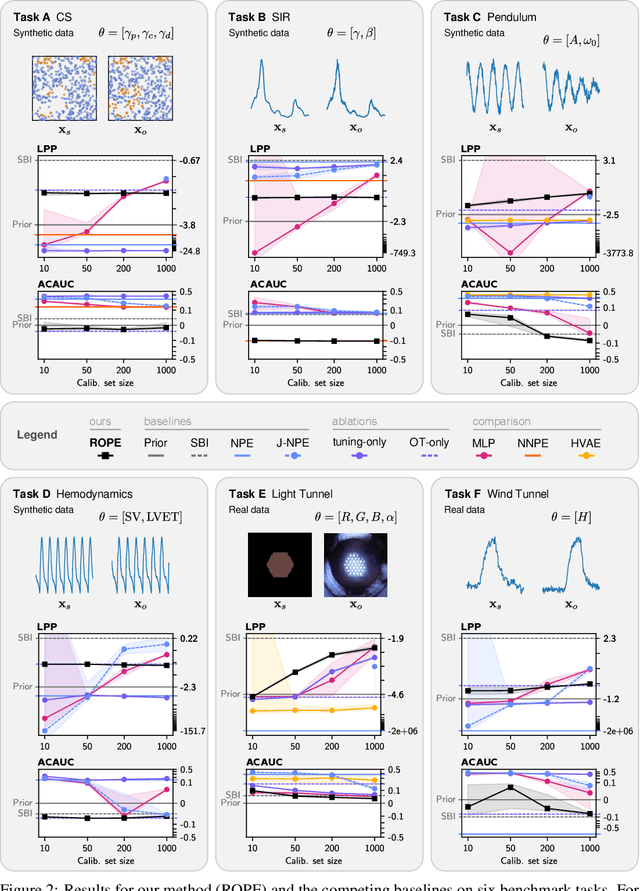

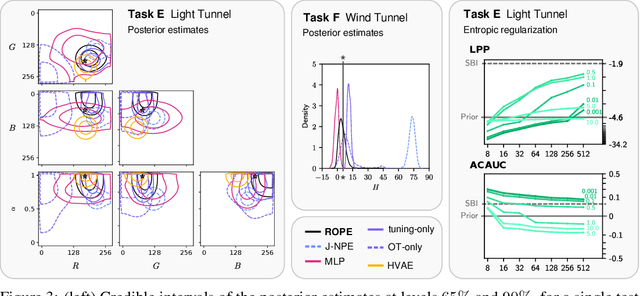

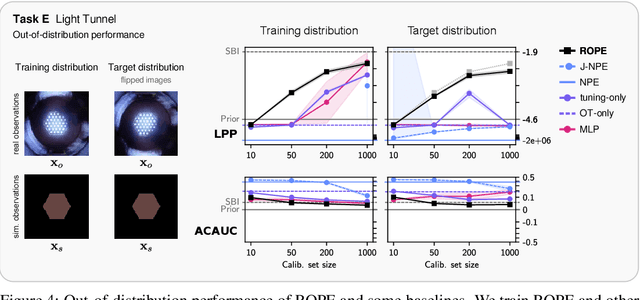

Addressing Misspecification in Simulation-based Inference through Data-driven Calibration

May 14, 2024

Abstract:Driven by steady progress in generative modeling, simulation-based inference (SBI) has enabled inference over stochastic simulators. However, recent work has demonstrated that model misspecification can harm SBI's reliability. This work introduces robust posterior estimation (ROPE), a framework that overcomes model misspecification with a small real-world calibration set of ground truth parameter measurements. We formalize the misspecification gap as the solution of an optimal transport problem between learned representations of real-world and simulated observations. Assuming the prior distribution over the parameters of interest is known and well-specified, our method offers a controllable balance between calibrated uncertainty and informative inference under all possible misspecifications of the simulator. Our empirical results on four synthetic tasks and two real-world problems demonstrate that ROPE outperforms baselines and consistently returns informative and calibrated credible intervals.

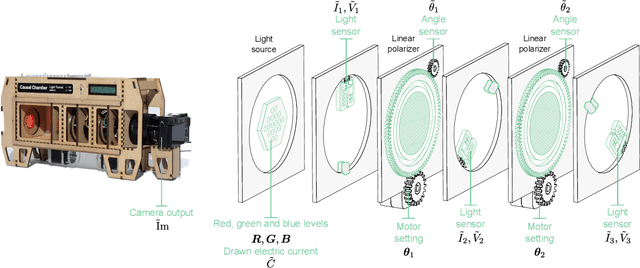

The Causal Chambers: Real Physical Systems as a Testbed for AI Methodology

Apr 17, 2024

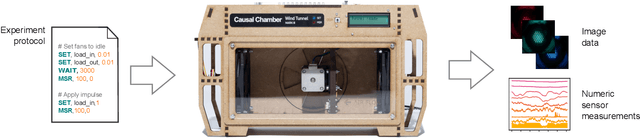

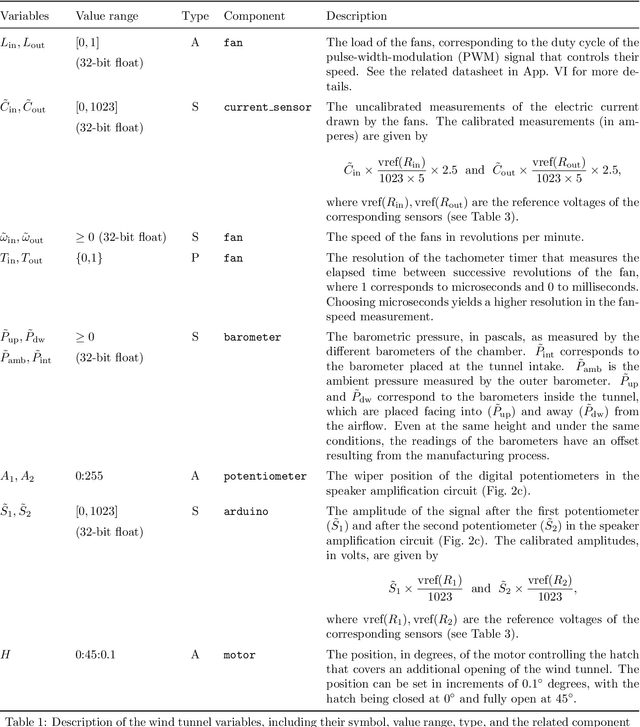

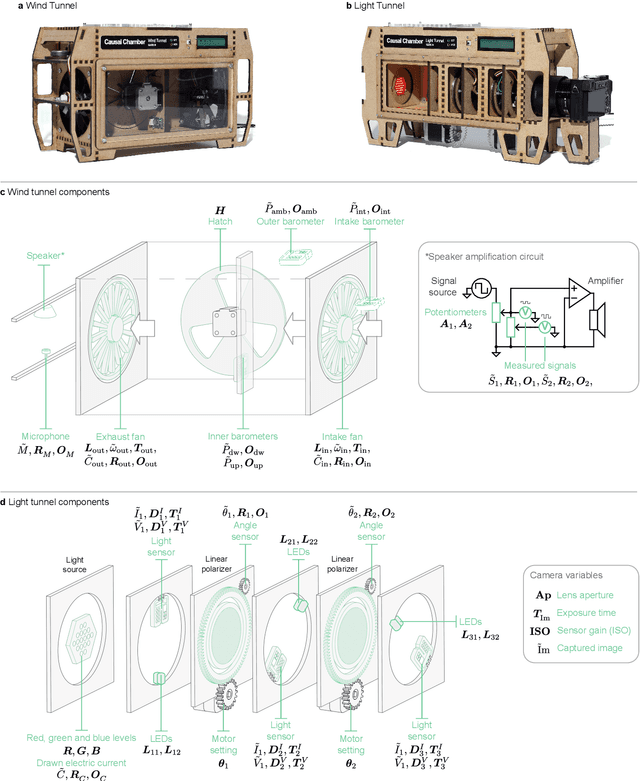

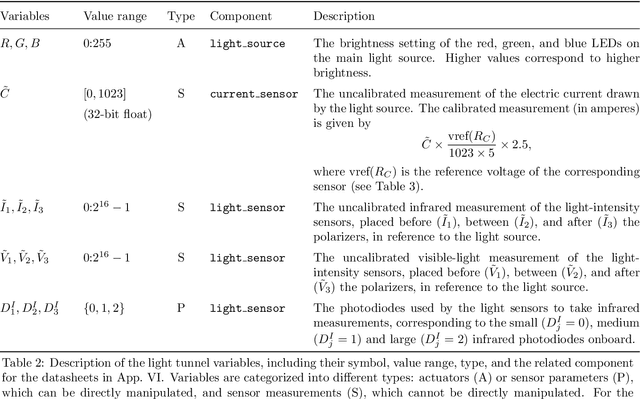

Abstract:In some fields of AI, machine learning and statistics, the validation of new methods and algorithms is often hindered by the scarcity of suitable real-world datasets. Researchers must often turn to simulated data, which yields limited information about the applicability of the proposed methods to real problems. As a step forward, we have constructed two devices that allow us to quickly and inexpensively produce large datasets from non-trivial but well-understood physical systems. The devices, which we call causal chambers, are computer-controlled laboratories that allow us to manipulate and measure an array of variables from these physical systems, providing a rich testbed for algorithms from a variety of fields. We illustrate potential applications through a series of case studies in fields such as causal discovery, out-of-distribution generalization, change point detection, independent component analysis, and symbolic regression. For applications to causal inference, the chambers allow us to carefully perform interventions. We also provide and empirically validate a causal model of each chamber, which can be used as ground truth for different tasks. All hardware and software is made open source, and the datasets are publicly available at causalchamber.org or through the Python package causalchamber.

Characterization and Greedy Learning of Gaussian Structural Causal Models under Unknown Interventions

Dec 22, 2022Abstract:We consider the problem of recovering the causal structure underlying observations from different experimental conditions when the targets of the interventions in each experiment are unknown. We assume a linear structural causal model with additive Gaussian noise and consider interventions that perturb their targets while maintaining the causal relationships in the system. Different models may entail the same distributions, offering competing causal explanations for the given observations. We fully characterize this equivalence class and offer identifiability results, which we use to derive a greedy algorithm called GnIES to recover the equivalence class of the data-generating model without knowledge of the intervention targets. In addition, we develop a novel procedure to generate semi-synthetic data sets with known causal ground truth but distributions closely resembling those of a real data set of choice. We leverage this procedure and evaluate the performance of GnIES on synthetic, real, and semi-synthetic data sets. Despite the strong Gaussian distributional assumption, GnIES is robust to an array of model violations and competitive in recovering the causal structure in small- to large-sample settings. We provide, in the Python packages "gnies" and "sempler", implementations of GnIES and our semi-synthetic data generation procedure.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge