Joseph B. Soriaga

ReQuestNet: A Foundational Learning model for Channel Estimation

Aug 12, 2025

Abstract:In this paper, we present a novel neural architecture for channel estimation (CE) in 5G and beyond, the Recurrent Equivariant UERS Estimation Network (ReQuestNet). It incorporates several practical considerations in wireless communication systems, such as the ability to handle a variable number of resource blocks (RBs), a dynamic number of transmit layers, physical resource block group (PRG) bundling sizes (BS), and demodulation reference signal (DMRS) patterns with a single unified model, thereby drastically simplifying the CE pipeline. Besides, it addresses several limitations of the legacy linear MMSE solutions, for example, by being independent of other reference signals and particularly by jointly processing MIMO layers and differently precoded channels with unknown precoding at the receiver. ReQuestNet comprises two sub-units, CoarseNet followed by RefinementNet. CoarseNet performs per-PRG, per transmit-receive (Tx-Rx) stream channel estimation, while RefinementNet refines the CoarseNet channel estimate by incorporating correlations across differently precoded PRGs and correlations across multiple-input multiple-output (MIMO) channel spatial dimensions (cross-MIMO). Simulation results demonstrate that ReQuestNet significantly outperforms genie minimum mean squared error (MMSE) CE across a wide range of channel conditions and delay-Doppler profiles, achieving up to 10 dB gain at high SNRs. Notably, ReQuestNet generalizes effectively to unseen channel profiles, efficiently exploiting inter-PRG and cross-MIMO correlations under dynamic PRG BS and varying transmit layer allocations.

Vision-Assisted Digital Twin Creation for mmWave Beam Management

Jan 31, 2024

Abstract:In the context of communication networks, digital twin technology provides a means to replicate the radio frequency (RF) propagation environment as well as the system behaviour, allowing the performance of a deployed system to be optimized based on simulations. One of the key challenges in the application of Digital Twin technology to mmWave systems is the prevalent channel simulators' stringent requirements on the accuracy of the 3D Digital Twin, reducing the feasibility of the technology in real applications. We propose a practical Digital Twin creation pipeline and a channel simulator that rely only on a single mounted camera and position information. We demonstrate the performance benefits compared to methods that do not explicitly model the 3D environment, on downstream sub-tasks in beam acquisition, using the real-world dataset of the DeepSense6G challenge.

Transformer-Based Neural Surrogate for Link-Level Path Loss Prediction from Variable-Sized Maps

Oct 10, 2023

Abstract:Estimating path loss for a transmitter-receiver location is key to many use-cases including network planning and handover. Machine learning has become a popular tool to predict wireless channel properties based on map data. In this work, we present a transformer-based neural network architecture that enables predicting link-level properties from maps of various dimensions and from sparse measurements. The map contains information about buildings and foliage. The transformer model attends to the regions that are relevant for path loss prediction and, therefore, scales efficiently to maps of different size. Further, our approach works with continuous transmitter and receiver coordinates without relying on discretization. In experiments, we show that the proposed model is able to efficiently learn dominant path losses from sparse training data and generalizes well when tested on novel maps.

Beyond Codebook-Based Analog Beamforming at mmWave: Compressed Sensing and Machine Learning Methods

Nov 03, 2022

Abstract:Analog beamforming is the predominant approach for millimeter wave (mmWave) communication given its favorable characteristics for limited-resource devices. In this work, we aim at reducing the spectral efficiency gap between analog and digital beamforming methods. We propose a method for refined beam selection based on the estimated raw channel. The channel estimation, an underdetermined problem, is solved using compressed sensing (CS) methods leveraging angular domain sparsity of the channel. To reduce the complexity of CS methods, we propose a dictionary learning iterative soft-thresholding algorithm, which jointly learns the sparsifying dictionary and signal reconstruction. We evaluate the proposed method on a realistic mmWave setup and show considerable performance improvement with respect to codebook-based analog beamforming approaches.
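
For readers unfamiliar with iterative soft-thresholding, the following is a minimal sketch of plain ISTA with a fixed, known dictionary. It is not the dictionary-learning variant the abstract proposes, and it makes several simplifying assumptions: real-valued signals instead of complex channels, a random Gaussian measurement matrix `A`, and a conservative step size of 1/||A||_F^2. All function and variable names are illustrative.

```python
import math
import random


def soft_threshold(x, tau):
    """Shrink x toward zero by tau (proximal operator of tau * |x|)."""
    return math.copysign(max(abs(x) - tau, 0.0), x)


def matvec(A, x):
    """Return A @ x for a list-of-rows matrix A."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Return A^T @ r for a list-of-rows matrix A."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def objective(A, y, x, lam):
    """LASSO cost: 0.5 * ||y - A x||^2 + lam * ||x||_1."""
    residual = [yi - pi for yi, pi in zip(y, matvec(A, x))]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(v) for v in x)


def ista(A, y, lam, n_iter=200):
    """Plain ISTA: gradient step on the quadratic term, then soft-threshold."""
    # ||A||_F^2 upper-bounds the Lipschitz constant of the gradient,
    # so this step size is safe (if conservative).
    step = 1.0 / sum(a * a for row in A for a in row)
    x = [0.0] * len(A[0])
    history = [objective(A, y, x, lam)]
    for _ in range(n_iter):
        residual = [pi - yi for pi, yi in zip(matvec(A, x), y)]
        grad = rmatvec(A, residual)
        x = [soft_threshold(xj - step * gj, step * lam) for xj, gj in zip(x, grad)]
        history.append(objective(A, y, x, lam))
    return x, history


# Toy underdetermined problem: 8 measurements, 16 unknowns, 2 nonzeros.
rng = random.Random(0)
A = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(8)]
x_true = [0.0] * 16
x_true[3], x_true[11] = 1.5, -2.0
y = matvec(A, x_true)
x_hat, history = ista(A, y, lam=0.1)
print("cost: %.4f -> %.4f" % (history[0], history[-1]))
```

With a step size no larger than the inverse Lipschitz constant, the cost is non-increasing from one iteration to the next, which is what the printed values illustrate. Learned variants typically replace the fixed matrices and thresholds with trainable parameters.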

Deep Learning-based Channel Estimation for Wideband Hybrid MmWave Massive MIMO

May 10, 2022

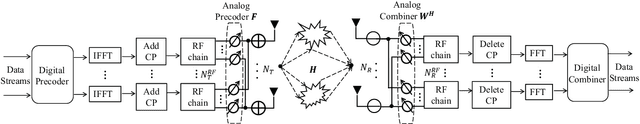

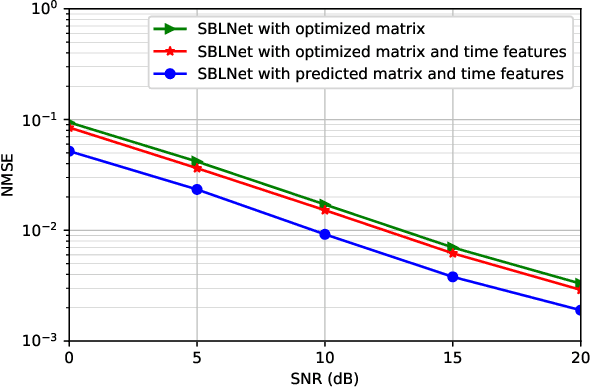

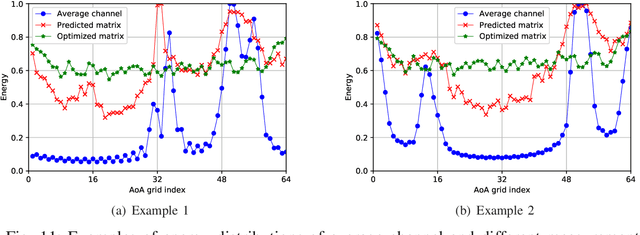

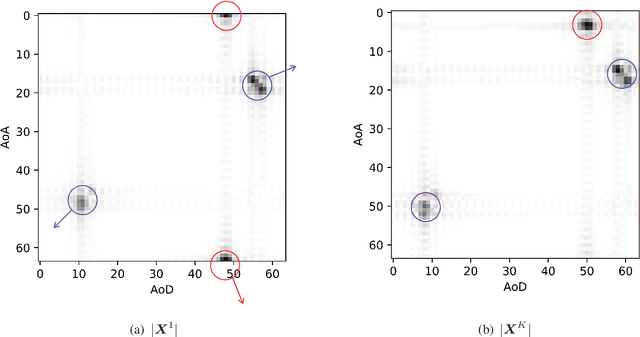

Abstract:Hybrid analog-digital (HAD) architecture is widely adopted in practical millimeter wave (mmWave) massive multiple-input multiple-output (MIMO) systems to reduce hardware cost and energy consumption. However, channel estimation in the context of HAD is challenging because only a limited number of radio frequency (RF) chains are available at the transceivers. Although various compressive sensing (CS) algorithms have been developed to solve this problem by exploiting inherent channel sparsity and sparsity structures, practical effects, such as power leakage and beam squint, can still make the real channel features deviate from the assumed models and result in performance degradation. Also, the high complexity of CS algorithms caused by a large number of iterations hinders their applications in practice. To tackle these issues, we develop a deep learning (DL)-based channel estimation approach where the sparse Bayesian learning (SBL) algorithm is unfolded into a deep neural network (DNN). In each SBL layer, Gaussian variance parameters of the sparse angular domain channel are updated by a tailored DNN, which is able to effectively capture complicated channel sparsity structures in various domains. Besides, the measurement matrix is jointly optimized for performance improvement. Then, the proposed approach is extended to the multi-block case where channel correlation in time is further exploited to adaptively predict the measurement matrix and facilitate the update of Gaussian variance parameters. Based on simulation results, the proposed approaches significantly outperform existing approaches while having reduced complexity.

MIMO-GAN: Generative MIMO Channel Modeling

Mar 16, 2022

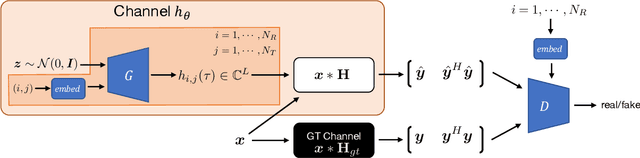

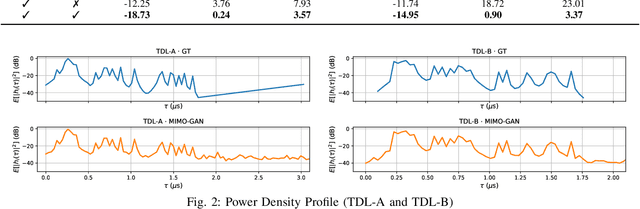

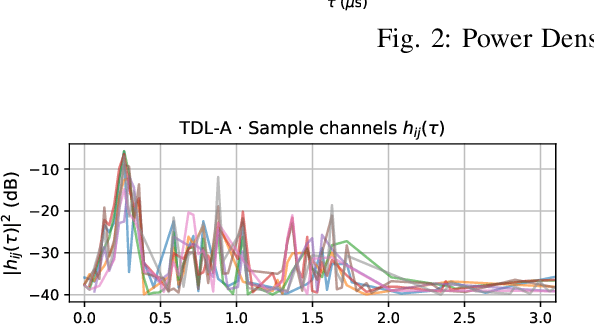

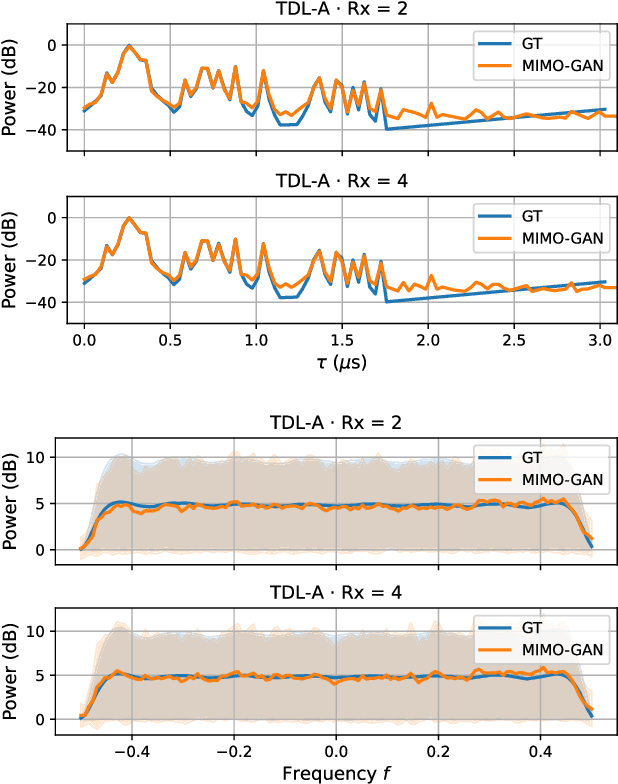

Abstract:We propose generative channel modeling to learn statistical channel models from channel input-output measurements. Generative channel models can learn more complicated distributions and represent the field data more faithfully. They are tractable and easy to sample from, which can potentially speed up the simulation rounds. To achieve this, we leverage advances in GANs, which help us learn an implicit distribution over stochastic MIMO channels from observed measurements. In particular, our approach MIMO-GAN implicitly models the wireless channel as a distribution of time-domain band-limited impulse responses. We evaluate MIMO-GAN on 3GPP TDL MIMO channels and observe high consistency in capturing power, delay and spatial correlation statistics of the underlying channel. In particular, we observe that MIMO-GAN achieves errors of under 3.57 ns average delay and -18.7 dB power.

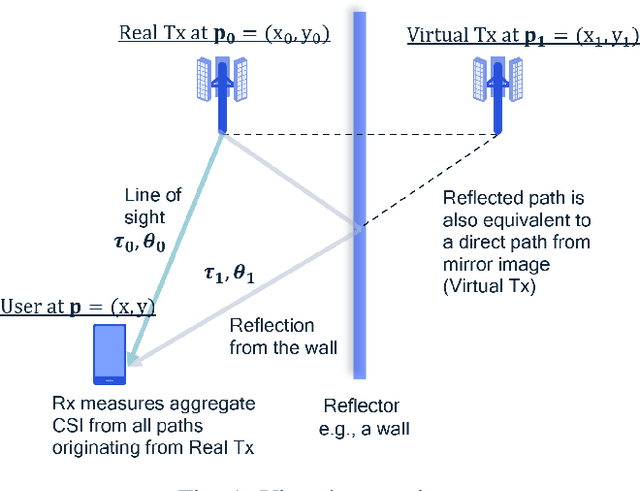

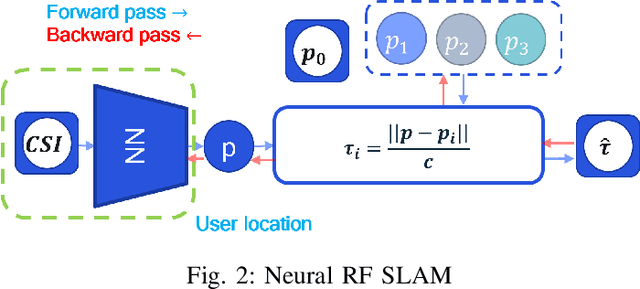

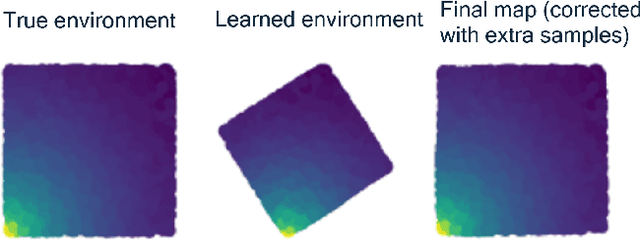

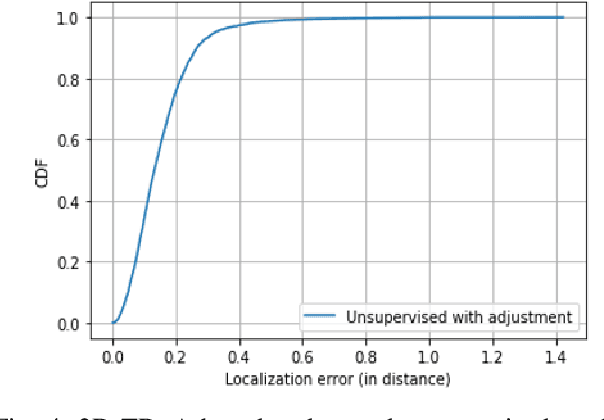

Neural RF SLAM for unsupervised positioning and mapping with channel state information

Mar 15, 2022

Abstract:We present a neural network architecture for jointly learning user locations and environment mapping up to isometry, in an unsupervised way, from channel state information (CSI) values with no location information. The model is based on an encoder-decoder architecture. The encoder network maps CSI values to the user location. The decoder network models the physics of propagation by parametrizing the environment using virtual anchors. It aims at reconstructing, from the encoder output and virtual anchor location, the set of times of flight (ToFs) that are extracted from CSI using super-resolution methods. The neural network task is set prediction and is accordingly trained end-to-end. The proposed model learns an interpretable latent, i.e., user location, by just enforcing a physics-based decoder. It is shown that the proposed model achieves sub-meter accuracy on synthetic ray tracing based datasets with a single-anchor SISO setup while recovering the environment map up to 4 cm median error in a 2D environment and 15 cm in a 3D environment.

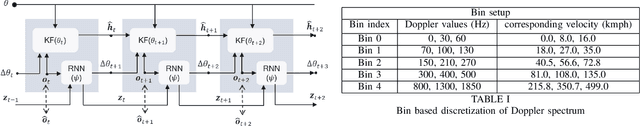

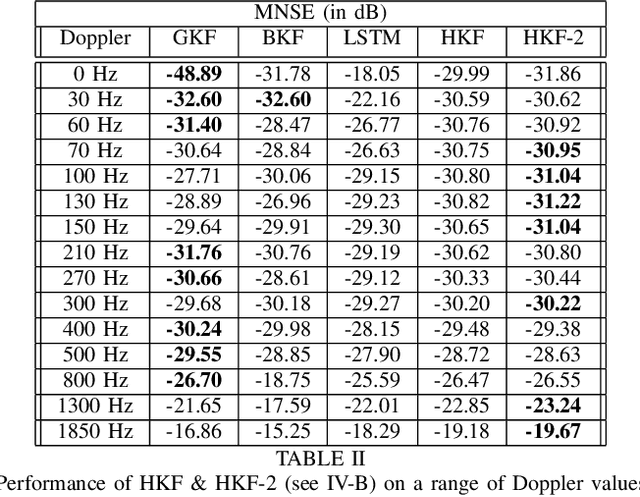

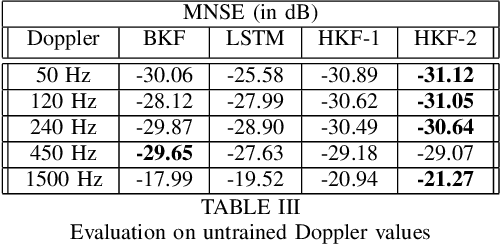

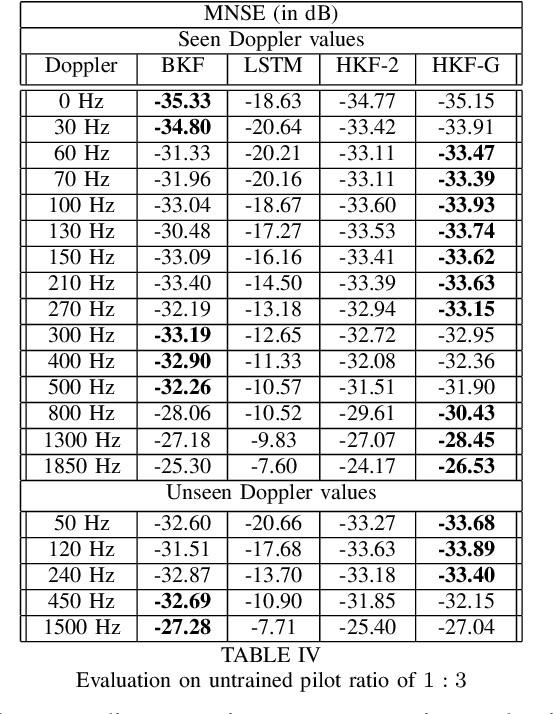

Neural Augmentation of Kalman Filter with Hypernetwork for Channel Tracking

Sep 26, 2021

Abstract:We propose the Hypernetwork Kalman Filter (HKF) for tracking applications with multiple different dynamics. The HKF combines the generalization power of Kalman filters with the expressive power of neural networks. Instead of keeping a bank of Kalman filters and choosing one based on approximating the actual dynamics, the HKF adapts itself to each dynamics based on the observed sequence. Through extensive experiments on the CDL-B channel model, we show that the HKF can be used for tracking the channel over a wide range of Doppler values, matching Kalman filter performance with genie Doppler information. At high Doppler values, it achieves around 2 dB gain over the genie Kalman filter. The HKF generalizes well to unseen Doppler, SNR values and pilot patterns, unlike LSTM, which suffers from severe performance degradation.
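
As a point of reference for the baseline mentioned above, here is a minimal sketch of a scalar Kalman filter tracking a channel tap. It is not the HKF. It assumes a real-valued first-order autoregressive model h_t = a*h_{t-1} + w_t observed as y_t = h_t + v_t, with the coefficient `a` and the noise variances `q` and `r` known to the filter (the "genie" case). The model, the parameter values, and all names are illustrative assumptions.

```python
import random


def kalman_track(observations, a, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter for h_t = a*h_{t-1} + w_t, y_t = h_t + v_t.

    q and r are the variances of the process noise w_t and the
    observation noise v_t; x0 and p0 are the prior mean and variance.
    """
    x, p = x0, p0
    estimates = []
    for y in observations:
        # Predict: propagate the state and its variance through the model.
        x_pred = a * x
        p_pred = a * a * p + q
        # Update: blend prediction and observation using the Kalman gain.
        gain = p_pred / (p_pred + r)
        x = x_pred + gain * (y - x_pred)
        p = (1.0 - gain) * p_pred
        estimates.append(x)
    return estimates


def simulate(a, q, r, n, seed=0):
    """Draw a true AR(1) trajectory and its noisy observations."""
    rng = random.Random(seed)
    h = rng.gauss(0.0, 1.0)
    states, observations = [], []
    for _ in range(n):
        h = a * h + rng.gauss(0.0, q ** 0.5)
        states.append(h)
        observations.append(h + rng.gauss(0.0, r ** 0.5))
    return states, observations


def mse(u, v):
    """Mean squared error between two equal-length sequences."""
    return sum((ui - vi) ** 2 for ui, vi in zip(u, v)) / len(u)


a = 0.99                      # slowly varying channel (low Doppler)
q = 1.0 - a * a               # keeps the channel at unit variance
r = 0.5                       # observation noise variance
states, observations = simulate(a, q, r, n=2000)
estimates = kalman_track(observations, a, q, r)
mse_raw = mse(states, observations)
mse_kf = mse(states, estimates)
print("raw MSE: %.3f, filtered MSE: %.3f" % (mse_raw, mse_kf))
```

The filter only performs well when `a`, `q`, and `r` match the true dynamics, which is why a conventional design needs either genie information or a bank of filters when the Doppler varies.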
