Thomas M. Hehn

Transformer-Based Neural Surrogate for Link-Level Path Loss Prediction from Variable-Sized Maps

Oct 10, 2023

Abstract: Estimating path loss for a transmitter-receiver location is key to many use cases, including network planning and handover. Machine learning has become a popular tool to predict wireless channel properties based on map data. In this work, we present a transformer-based neural network architecture that enables predicting link-level properties from maps of various dimensions and from sparse measurements. The map contains information about buildings and foliage. The transformer model attends to the regions that are relevant for path loss prediction and, therefore, scales efficiently to maps of different sizes. Further, our approach works with continuous transmitter and receiver coordinates without relying on discretization. In experiments, we show that the proposed model is able to efficiently learn dominant path losses from sparse training data and generalizes well when tested on novel maps.

How do Cross-View and Cross-Modal Alignment Affect Representations in Contrastive Learning?

Nov 23, 2022

Abstract: Various state-of-the-art self-supervised visual representation learning approaches take advantage of data from multiple sensors by aligning the feature representations across views and/or modalities. In this work, we investigate how aligning representations affects the visual features obtained from cross-view and cross-modal contrastive learning on images and point clouds. On five real-world datasets and on five tasks, we train and evaluate 108 models based on four pretraining variations. We find that cross-modal representation alignment discards complementary visual information, such as color and texture, and instead emphasizes redundant depth cues. The depth cues obtained from pretraining improve downstream depth prediction performance. Overall, cross-modal alignment also leads to more robust encoders than pretraining by cross-view alignment, especially on depth prediction, instance segmentation, and object detection.

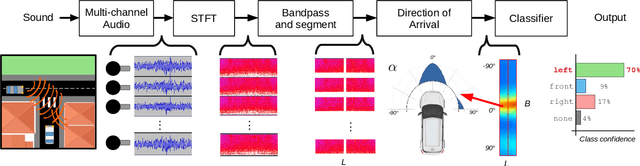

Hearing What You Cannot See: Acoustic Detection Around Corners

Jul 30, 2020

Abstract: This work proposes to use passive acoustic perception as an additional sensing modality for intelligent vehicles. We demonstrate that approaching vehicles behind blind corners can be detected by sound before such vehicles enter line-of-sight. We have equipped a hybrid Prius research vehicle with a roof-mounted microphone array, and show on data collected with this sensor setup that wall reflections provide information on the presence and direction of approaching vehicles. A novel method is presented to classify if and from what direction a vehicle is approaching before it is visible, using as input Direction-of-Arrival features that can be efficiently computed from the streaming microphone array data. Since the ego-vehicle position within the local geometry affects the perceived patterns, we systematically study several locations and acoustic environments, and investigate generalization across these environments. With a static ego-vehicle, an accuracy of 92% is achieved on the hidden vehicle classification task, and approaching vehicles are on average detected correctly 2.25 seconds in advance. By stochastically exploring configurations using fewer microphones, we find that on-par performance can be achieved with only 7 out of 56 available positions in the array. Finally, we demonstrate positive results on acoustic detection while the vehicle is driving, and study failure cases to identify future research directions.
