Hearing What You Cannot See: Acoustic Detection Around Corners

Paper and Code

Jul 30, 2020

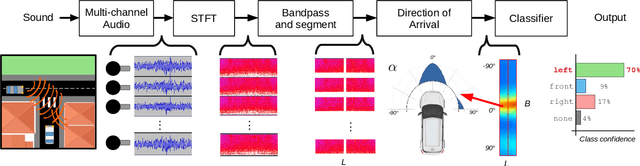

This work proposes passive acoustic perception as an additional sensing modality for intelligent vehicles. We demonstrate that vehicles approaching from behind blind corners can be detected by sound before they enter the line of sight. We have equipped a hybrid Prius research vehicle with a roof-mounted microphone array, and show on data collected with this sensor setup that wall reflections provide information on the presence and direction of approaching vehicles. A novel method is presented to classify whether, and from which direction, a vehicle is approaching before it is visible, using as input Direction-of-Arrival features that can be efficiently computed from the streaming microphone array data. Since the ego-vehicle position within the local geometry affects the perceived patterns, we systematically study several locations and acoustic environments, and investigate generalization across these environments. With a static ego-vehicle, an accuracy of 92% is achieved on the hidden vehicle classification task, and approaching vehicles are on average detected correctly 2.25 seconds in advance. By stochastically exploring configurations that use fewer microphones, we find that on-par performance can be achieved with only 7 of the 56 available positions in the array. Finally, we demonstrate positive results on acoustic detection while the vehicle is driving, and study failure cases to identify future research directions.
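The abstract does not say how the Direction-of-Arrival features are computed, so the following is only an illustrative sketch of the general idea and not the authors' method. It assumes a single pair of microphones and uses GCC-PHAT, a common technique for estimating the time delay between two channels, then converts that delay to a far-field arrival angle. The function names, the microphone spacing, and the speed of sound value are all hypothetical choices made for this example.

```python
import numpy as np


def gcc_phat_delay(sig, ref, fs, max_tau=None):
    """Estimate how many seconds `sig` lags behind `ref` using GCC-PHAT.

    Illustrative helper (hypothetical name), not taken from the paper.
    """
    n = len(sig) + len(ref)  # zero-pad so circular correlation acts like linear
    SIG = np.fft.rfft(sig, n=n)
    REF = np.fft.rfft(ref, n=n)
    cross = SIG * np.conj(REF)
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)

    max_shift = n // 2
    if max_tau is not None:
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so negative lags come first and lag 0 sits at index max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / fs


def delay_to_angle(tau, mic_distance, speed_of_sound=343.0):
    """Convert a delay in seconds to a far-field arrival angle in degrees.

    Assumes a plane wave and two microphones `mic_distance` metres apart;
    0 degrees means the source is broadside to the pair.
    """
    ratio = np.clip(speed_of_sound * tau / mic_distance, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))


# Synthetic check: delay a noise burst by a known number of samples.
fs = 48_000
true_shift = 5
rng = np.random.default_rng(0)
ref = np.concatenate((rng.standard_normal(1000), np.zeros(24)))
sig = np.concatenate((np.zeros(true_shift), ref[:-true_shift]))

tau = gcc_phat_delay(sig, ref, fs)
angle = delay_to_angle(tau, mic_distance=0.1)
```

A full array would combine many such pairs, or scan a steered-response power map over candidate directions, to build a feature vector per time window. That step, and the classifier applied on top of it, are left out here because the abstract does not specify them.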
