Hamed Pezeshki

Beyond Codebook-Based Analog Beamforming at mmWave: Compressed Sensing and Machine Learning Methods

Nov 03, 2022

Abstract: Analog beamforming is the predominant approach for millimeter wave (mmWave) communication given its favorable characteristics for limited-resource devices. In this work, we aim to reduce the spectral efficiency gap between analog and digital beamforming methods. We propose a method for refined beam selection based on the estimated raw channel. The channel estimation, an underdetermined problem, is solved using compressed sensing (CS) methods that leverage the angular-domain sparsity of the channel. To reduce the complexity of CS methods, we propose a dictionary learning iterative soft-thresholding algorithm, which jointly learns the sparsifying dictionary and the signal reconstruction. We evaluate the proposed method on a realistic mmWave setup and show considerable performance improvement with respect to codebook-based analog beamforming approaches.
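To make the CS step concrete, here is a minimal sketch of plain ISTA (iterative soft-thresholding) recovering an angular-domain sparse channel from a few analog measurements. It is not the authors' dictionary-learning variant, which learns the dictionary jointly; here the dictionary is assumed fixed and known. The uniform linear array, the on-grid angular dictionary `D`, the random phase-only combiners `W`, and the helper names `soft_threshold` and `ista` are all hypothetical choices for illustration.

```python
import numpy as np


def soft_threshold(z, tau):
    """Complex soft-thresholding: shrink each magnitude by tau and keep its phase."""
    mag = np.abs(z)
    scale = np.maximum(mag - tau, 0.0) / np.maximum(mag, 1e-12)
    return scale * z


def ista(y, A, lam, n_iter=2000):
    """Plain ISTA for min_x 0.5 * ||y - A x||_2^2 + lam * ||x||_1 (complex-valued)."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1], dtype=complex)
    for _ in range(n_iter):
        grad = A.conj().T @ (A @ x - y)      # gradient of the least-squares term
        x = soft_threshold(x - step * grad, lam * step)
    return x


# Hypothetical setup: N-antenna ULA, angular grid of N points, M random analog combiners.
N, M = 32, 20
rng = np.random.default_rng(0)
u = -1.0 + 2.0 * np.arange(N) / N                                 # grid over sin(angle)
D = np.exp(1j * np.pi * np.outer(np.arange(N), u)) / np.sqrt(N)   # unitary angular dictionary
W = np.exp(1j * 2 * np.pi * rng.random((M, N))) / np.sqrt(N)      # random phase-only combiners
A = W @ D                                                          # M x N sensing matrix

x_true = np.zeros(N, dtype=complex)
x_true[[5, 20]] = [1.0, 0.5j]   # two on-grid propagation paths
y = A @ x_true                  # noiseless measurements, M < N (underdetermined)

x_hat = ista(y, A, lam=1e-3)
support = sorted(np.argsort(np.abs(x_hat))[-2:].tolist())
print("estimated path indices:", support)
```

With fewer measurements than unknowns, the l1 penalty is what makes the problem solvable; each ISTA iteration costs only matrix-vector products, which is the complexity the abstract's learned variant aims to reduce further by cutting the iteration count.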

Multi-Modal Beam Prediction Challenge 2022: Towards Generalization

Sep 15, 2022

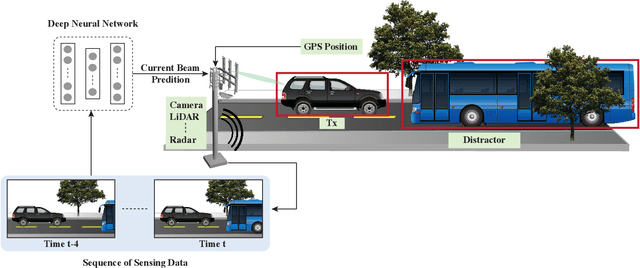

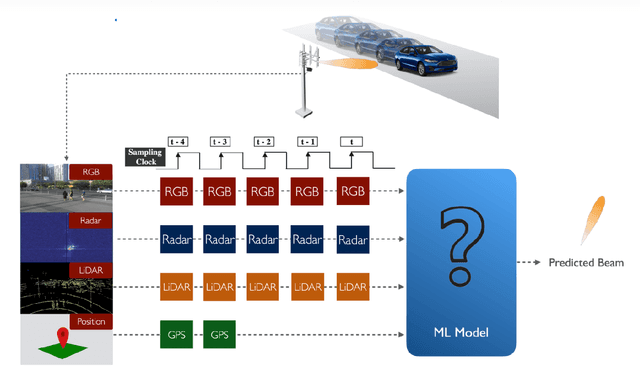

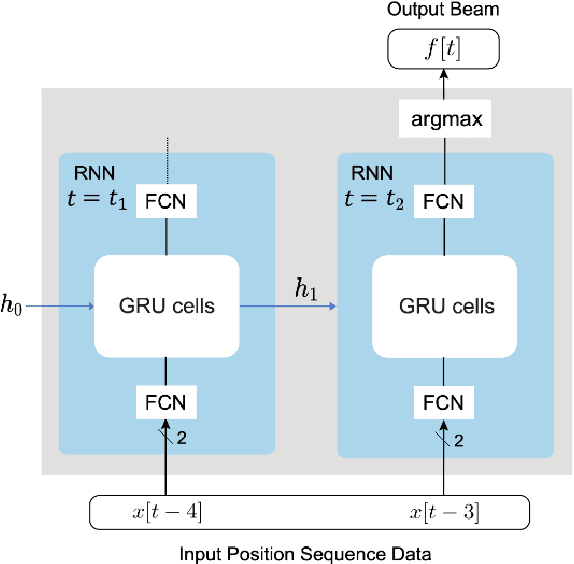

Abstract: Beam management is a challenging task for millimeter wave (mmWave) and sub-terahertz communication systems, especially in scenarios with highly mobile users. Leveraging external sensing modalities such as vision, LiDAR, radar, position, or a combination of them to address this beam management challenge has recently attracted increasing interest from both academia and industry. This is mainly motivated by the dependence of the beam direction decision on the user location and the geometry of the surrounding environment, information that can be acquired from the sensory data. In practice, however, realizing the promised beam management gains, such as a significant reduction in beam alignment overhead, requires these solutions to account for several important aspects. For example, these multi-modal sensing-aided beam selection approaches should be able to generalize their learning to unseen scenarios and to operate in realistic dense deployments. The "Multi-Modal Beam Prediction Challenge 2022: Towards Generalization" competition is offered to provide a platform for investigating these critical questions. To facilitate the generalizability study, the competition offers a large-scale multi-modal dataset with co-existing communication and sensing data collected across multiple real-world locations and at different times of day. In this paper, along with detailed descriptions of the problem statement and the development dataset, we provide a baseline solution that uses the user position data to predict the optimal beam indices. The objective of this challenge is to go beyond a simple feasibility study and enable the necessary research in this direction, paving the way toward generalizable multi-modal sensing-aided beam management for real-world future communication systems.
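As a rough illustration of position-only beam prediction, the sketch below maps a user position to a beam index by majority vote among the k nearest training positions. This is a hypothetical stand-in, not the challenge's baseline solution; the function name `knn_beam_predict`, the toy coordinates, and the beam labels are invented, and real GPS coordinates would normally be normalized (or converted to local metric coordinates) before measuring distances.

```python
import math
from collections import Counter


def knn_beam_predict(train_pos, train_beams, query, k=3):
    """Predict a beam index for `query` by majority vote of its k nearest training positions."""
    # Sort (distance, beam) pairs so the nearest training samples come first.
    ranked = sorted(
        (math.dist(p, query), b) for p, b in zip(train_pos, train_beams)
    )
    votes = Counter(b for _, b in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy data: positions along a road and the beam index that was best at each one.
train_pos = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
train_beams = [5, 5, 12, 20, 20]

print(knn_beam_predict(train_pos, train_beams, (0.4, 0.1)))  # nearest beams: 5, 5, 12
print(knn_beam_predict(train_pos, train_beams, (3.9, 0.0)))  # nearest beams: 20, 20, 12
```

A lookup of this kind only interpolates between positions it has seen, which is exactly why generalization to unseen locations, the focus of the challenge, is hard for position-only predictors.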

Position Aided Beam Prediction in the Real World: How Useful GPS Locations Actually Are?

May 23, 2022

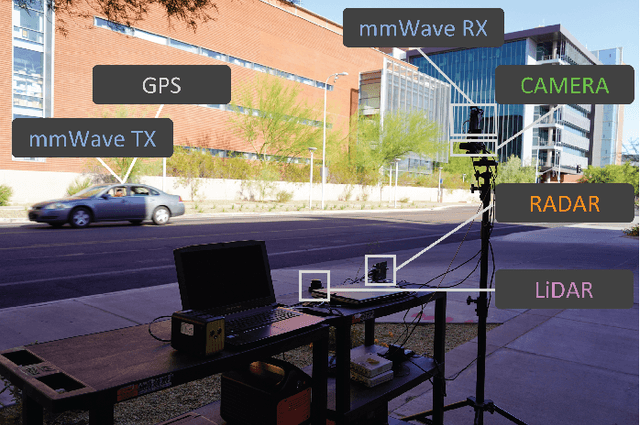

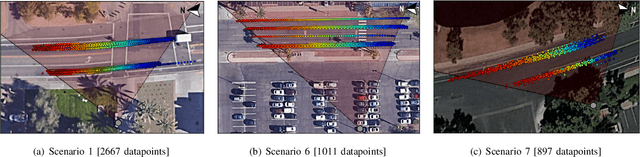

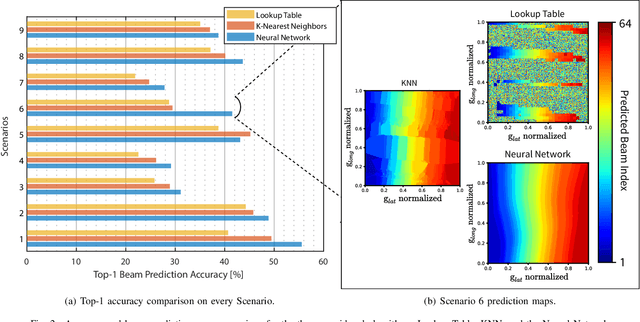

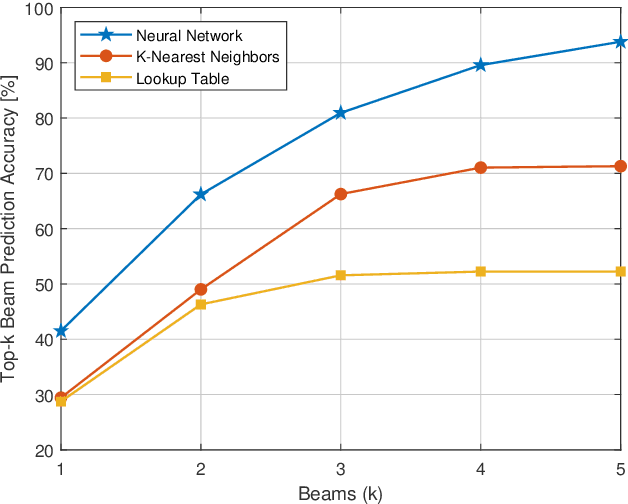

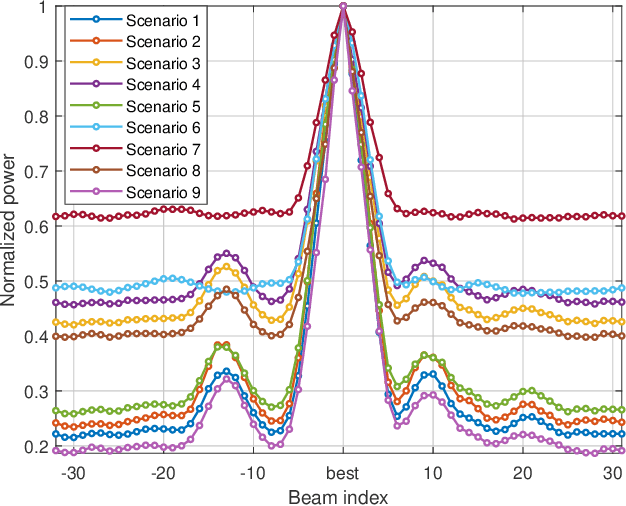

Abstract: Millimeter-wave (mmWave) communication systems rely on narrow beams for achieving sufficient receive signal power. Adjusting these beams is typically associated with large training overhead, which becomes particularly critical for highly-mobile applications. Intuitively, since optimal beam selection can benefit from the knowledge of the positions of communication terminals, there has been increasing interest in leveraging position data to reduce the overhead in mmWave beam prediction. Prior work, however, studied this problem using only synthetic data that generally does not accurately represent real-world measurements. In this paper, we investigate position-aided beam prediction using a real-world large-scale dataset to derive insights into precisely how much overhead can be saved in practice. Furthermore, we analyze which machine learning algorithms perform best, what factors degrade inference performance in real data, and which machine learning metrics are more meaningful in capturing the actual communication system performance.
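To illustrate why the choice of metric matters, the sketch below contrasts top-k accuracy with a power-based score: the ratio between the best received power among the top-k predicted beams and the optimal beam's power. Both function names and the toy numbers are hypothetical, and the power ratio is one plausible system-level metric rather than necessarily the one used in the paper.

```python
def top_k_accuracy(scores, true_beams, k):
    """Fraction of samples whose optimal beam is among the k highest-scored beams."""
    hits = 0
    for s, t in zip(scores, true_beams):
        ranked = sorted(range(len(s)), key=lambda i: s[i], reverse=True)
        hits += int(t in ranked[:k])
    return hits / len(true_beams)


def mean_power_ratio(scores, beam_powers, k):
    """Average of (best power among the top-k predicted beams) / (optimal beam power)."""
    ratios = []
    for s, p in zip(scores, beam_powers):
        ranked = sorted(range(len(s)), key=lambda i: s[i], reverse=True)
        ratios.append(max(p[i] for i in ranked[:k]) / max(p))
    return sum(ratios) / len(ratios)


# Toy example: 2 samples, 3 candidate beams each.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]   # model confidence per beam
true_beams = [1, 2]                            # index of the optimal beam
beam_powers = [[0.1, 1.0, 0.3], [0.9, 0.2, 1.0]]

print(top_k_accuracy(scores, true_beams, k=1))     # half the samples miss
print(mean_power_ratio(scores, beam_powers, k=1))  # yet most of the power is kept
```

In the second sample the prediction misses the optimal beam, so top-1 accuracy drops to 0.5, but the chosen beam still delivers 90% of the optimal power; a metric tied to received power can therefore reflect link quality more faithfully than a hit-or-miss count.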
