Umut Demirhan

Enabling ISAC in Real World: Beam-Based User Identification with Machine Learning

Nov 10, 2024

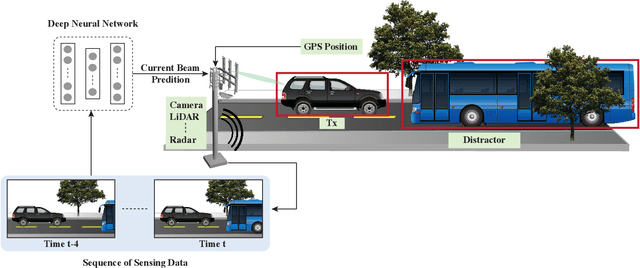

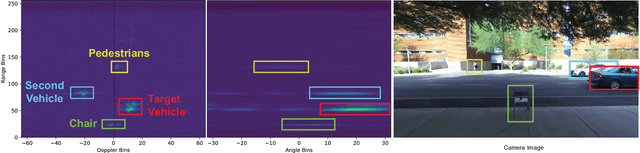

Abstract: Leveraging perception from radar data can assist multiple communication tasks, especially in highly-mobile and large-scale MIMO systems. One particular challenge, however, is how to distinguish the communication user (object) from the other mobile objects in the sensing scene. This paper formulates this *user identification* problem and develops two solutions: a baseline model-based solution that maps the objects' angles from the radar scene to communication beams, and a scalable deep learning solution that is agnostic to the number of candidate objects. Using the DeepSense 6G dataset, which comprises real-world measurements, the developed deep learning approach achieves more than $93.4\%$ communication user identification accuracy, highlighting a promising path for enabling integrated radar-communication applications in the real world.
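The model-based baseline described above maps radar object angles to communication beams. A minimal sketch of that idea follows, assuming a hypothetical codebook whose beam centers are evenly spaced over the radar field of view; the function names, the 64-beam default, and the 90-degree field of view are illustrative assumptions, not details taken from the paper.

```python
def beam_angles(num_beams, fov_deg=90.0):
    """Hypothetical codebook: beam centers evenly spaced over the field of view."""
    step = fov_deg / num_beams
    return [-fov_deg / 2 + step * (i + 0.5) for i in range(num_beams)]


def identify_user(object_angles_deg, beam_index, num_beams=64, fov_deg=90.0):
    """Return the index of the radar object closest in angle to the serving beam."""
    if not object_angles_deg:
        raise ValueError("no candidate objects in the radar scene")
    target = beam_angles(num_beams, fov_deg)[beam_index]
    return min(
        range(len(object_angles_deg)),
        key=lambda i: abs(object_angles_deg[i] - target),
    )


# Three detected objects; the user is served by beam 2 of a 4-beam codebook.
user = identify_user([-28.0, 5.0, 33.0], beam_index=2, num_beams=4, fov_deg=80.0)
```

A nearest-angle rule like this needs no training, but it depends on the radar and the antenna array sharing a calibrated angular reference, and it cannot separate objects that fall within the same beam.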

Learning Beamforming in Cell-Free Massive MIMO ISAC Systems

Sep 26, 2024

Abstract: Beamforming design is critical for the efficient operation of integrated sensing and communication (ISAC) MIMO systems. ISAC beamforming design in cell-free massive MIMO systems, compared to colocated MIMO systems, is more challenging due to the additional complexity of the large number of distributed access points (APs). To address this problem, this paper first shows that graph neural networks (GNNs) are a suitable machine learning framework. Then, it develops a novel heterogeneous GNN model inspired by the specific characteristics of the cell-free ISAC MIMO systems. This model enables the low-complexity scaling of the cell-free ISAC system and does not require full retraining when APs are added or removed. Our results show that the proposed architecture can achieve near-optimal performance and applies well to various network structures.

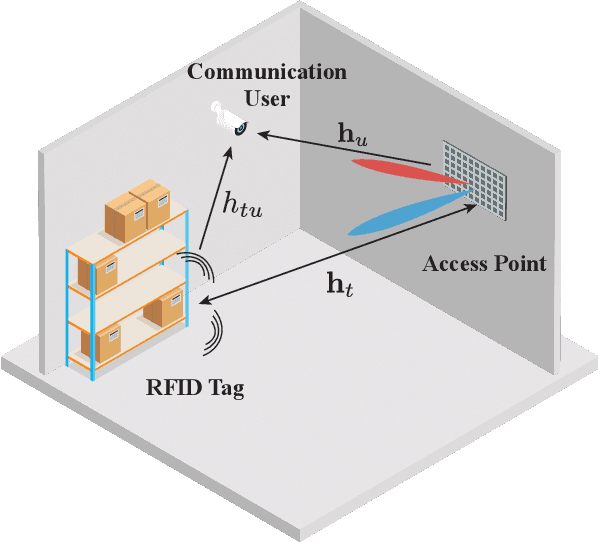

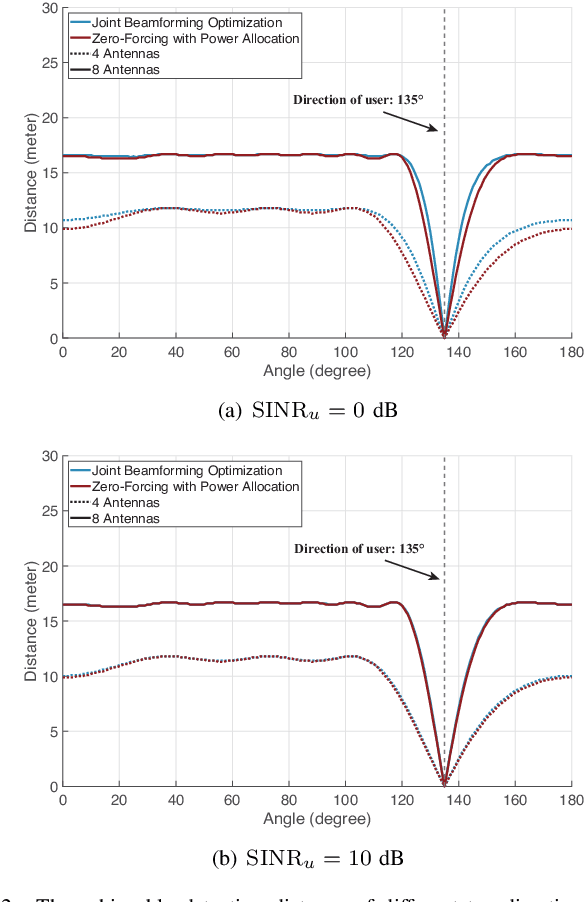

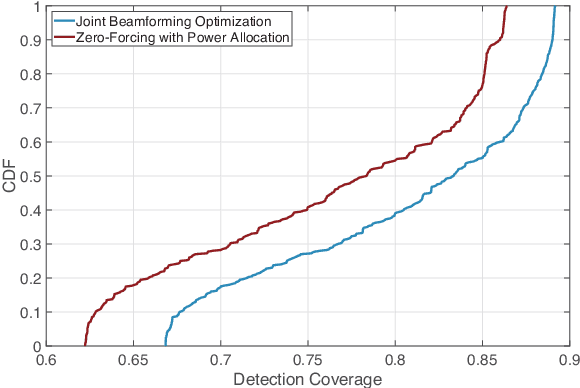

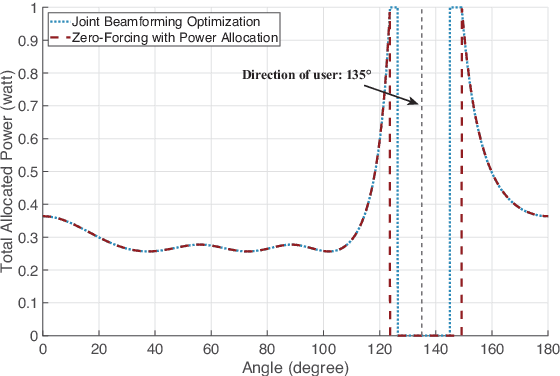

ISAC with Backscattering RFID Tags: Joint Beamforming Design

Jan 31, 2024

Abstract: In this paper, we explore an integrated sensing and communication (ISAC) system with backscattering RFID tags. In this setup, an access point employs a communication beam to serve a user while leveraging a sensing beam to detect an RFID tag. Under the total transmit power constraint of the system, our objective is to design sensing and communication beams by considering the tag detection and communication requirements. First, we adopt zero-forcing to design the beamforming vectors, followed by solving a convex optimization problem to determine the power allocation between sensing and communication. Then, we study a joint beamforming design problem with the goal of minimizing the total transmit power while satisfying the tag detection and communication requirements. To resolve this, we re-formulate the non-convex constraints into convex second-order cone constraints. The simulation results demonstrate that, under different communication SINR requirements, joint beamforming optimization outperforms the zero-forcing-based method in terms of achievable detection distance, offering a promising approach for the ISAC-backscattering systems.
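The zero-forcing step mentioned above can be illustrated with a generic projection. The sketch below is not the paper's formulation: it assumes the signal received through channel $h$ from beam $w$ is $h^H w$, and it builds a unit-norm beam matched to one channel with a null toward another. The channel values and function names are hypothetical.

```python
def inner(a, b):
    """Hermitian inner product a^H b of two complex vectors."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def zero_forcing_beam(h_desired, h_nulled):
    """Unit-norm beam matched to h_desired with a null toward h_nulled."""
    # Remove the component of h_desired that lies along h_nulled.
    scale = inner(h_nulled, h_desired) / inner(h_nulled, h_nulled).real
    w = [d - scale * n for d, n in zip(h_desired, h_nulled)]
    norm = abs(inner(w, w)) ** 0.5
    if norm < 1e-12:
        raise ValueError("channels are parallel; no zero-forcing beam exists")
    return [x / norm for x in w]


# Hypothetical 3-antenna channels toward the user and toward the tag.
h_user = [1 + 0j, 1j, -1 + 0j]
h_tag = [1 + 0j, 1 + 0j, 1 + 0j]
w_comm = zero_forcing_beam(h_user, h_tag)  # serves the user, nulls the tag
w_sense = zero_forcing_beam(h_tag, h_user)  # illuminates the tag, nulls the user
```

With the two directions fixed this way, only the power split between `w_comm` and `w_sense` remains to be chosen, which is the role the abstract assigns to the subsequent convex power-allocation problem.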

Millimeter Wave V2V Beam Tracking using Radar: Algorithms and Real-World Demonstration

Aug 03, 2023

Abstract: Utilizing radar sensing for assisting communication has attracted increasing interest thanks to its potential in dynamic environments. A particularly interesting problem for this approach appears in the vehicle-to-vehicle (V2V) millimeter wave and terahertz communication scenarios, where the narrow beams change with the movement of both vehicles. To address this problem, in this work, we develop a radar-aided beam-tracking framework, where a single initial beam and a set of radar measurements over a period of time are utilized to predict the future beams after this time duration. Within this framework, we develop two approaches with the combination of various degrees of radar signal processing and machine learning. To evaluate the feasibility of the solutions in a realistic scenario, we test their performance on a real-world V2V dataset. Our results indicated the importance of high angular resolution radar for this task and affirmed the potential of using radar for the V2V beam management problems.

Cell-Free ISAC MIMO Systems: Joint Sensing and Communication Beamforming

Jan 26, 2023

Abstract: This paper considers a cell-free integrated sensing and communication (ISAC) MIMO system, where distributed MIMO access points are jointly serving the communication users and sensing the targets. For this setup, we first develop two baseline approaches that separately design the sensing and communication beamforming vectors, namely communication-prioritized sensing beamforming and sensing-prioritized communication beamforming. Then, we consider the joint sensing and communication (JSC) beamforming design and derive the optimal structure of these JSC beamforming vectors based on a max-min fairness formulation. The results show that the developed JSC beamforming is capable of achieving nearly the same communication signal-to-interference-plus-noise ratio (SINR) as the communication-prioritized sensing beamforming solutions, with almost the same sensing SNR as the sensing-prioritized communication beamforming approaches, yielding a promising strategy for cell-free ISAC MIMO systems.

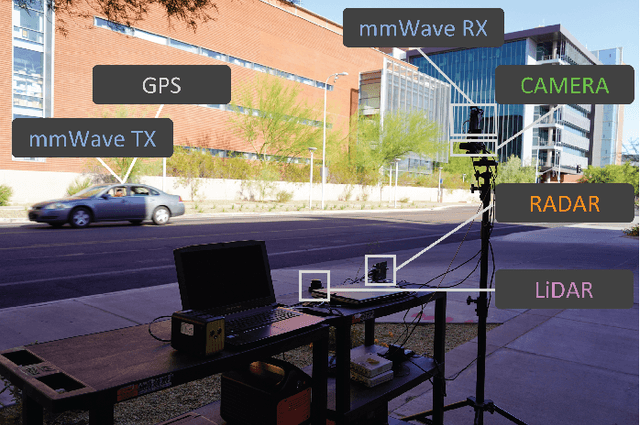

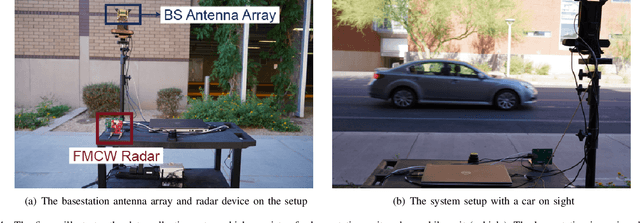

DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset

Nov 17, 2022

Abstract: This article presents the DeepSense 6G dataset, which is a large-scale dataset based on real-world measurements of co-existing multi-modal sensing and communication data. The DeepSense 6G dataset is built to advance deep learning research in a wide range of applications in the intersection of multi-modal sensing, communication, and positioning. This article provides a detailed overview of the DeepSense dataset structure, adopted testbeds, data collection and processing methodology, deployment scenarios, and example applications, with the objective of facilitating the adoption and reproducibility of multi-modal sensing and communication datasets.

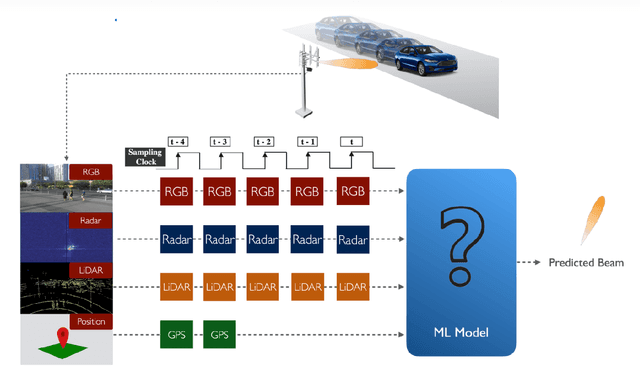

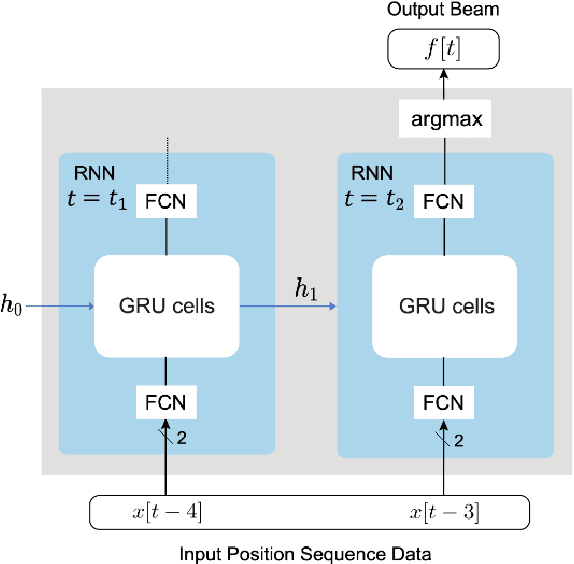

Multi-Modal Beam Prediction Challenge 2022: Towards Generalization

Sep 15, 2022

Abstract: Beam management is a challenging task for millimeter wave (mmWave) and sub-terahertz communication systems, especially in scenarios with highly-mobile users. Leveraging external sensing modalities such as vision, LiDAR, radar, position, or a combination of them, to address this beam management challenge has recently attracted increasing interest from both academia and industry. This is mainly motivated by the dependency of the beam direction decision on the user location and the geometry of the surrounding environment -- information that can be acquired from the sensory data. To realize the promised beam management gains, such as the significant reduction in beam alignment overhead, in practice, however, these solutions need to account for important aspects. For example, these multi-modal sensing aided beam selection approaches should be able to generalize their learning to unseen scenarios and should be able to operate in realistic dense deployments. The "Multi-Modal Beam Prediction Challenge 2022: Towards Generalization" competition is offered to provide a platform for investigating these critical questions. In order to facilitate the generalizability study, the competition offers a large-scale multi-modal dataset with co-existing communication and sensing data collected across multiple real-world locations and different times of the day. In this paper, along with the detailed descriptions of the problem statement and the development dataset, we provide a baseline solution that utilizes the user position data to predict the optimal beam indices. The objective of this challenge is to go beyond a simple feasibility study and enable necessary research in this direction, paving the way towards generalizable multi-modal sensing-aided beam management for real-world future communication systems.

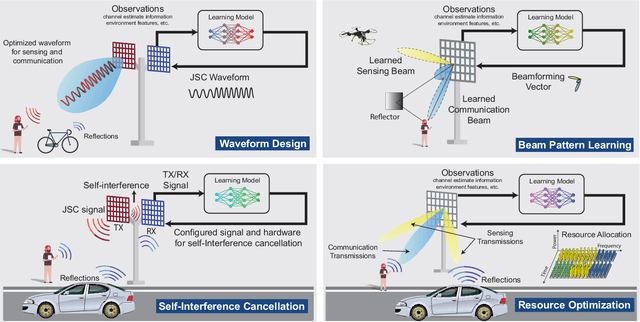

Integrated Sensing and Communication for 6G: Ten Key Machine Learning Roles

Aug 08, 2022

Abstract: Integrating sensing and communication is a defining theme for future wireless systems. This is motivated by the promising performance gains, especially as they assist each other, and by the better utilization of the wireless and hardware resources. Realizing these gains in practice, however, is subject to several challenges where leveraging machine learning can provide a potential solution. This article focuses on ten key machine learning roles for joint sensing and communication, sensing-aided communication, and communication-aided sensing systems, explains why and how machine learning can be utilized, and highlights important directions for future research. The article also presents real-world results for some of these machine learning roles based on the large-scale real-world dataset DeepSense 6G, which could be adopted in investigating a wide range of integrated sensing and communication problems.

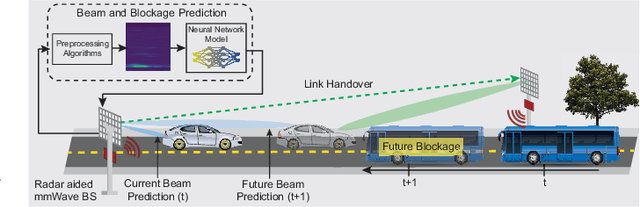

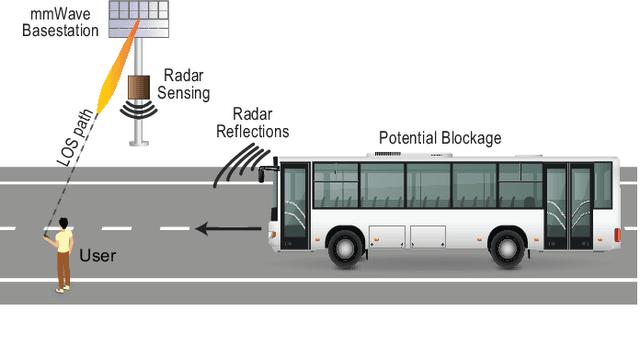

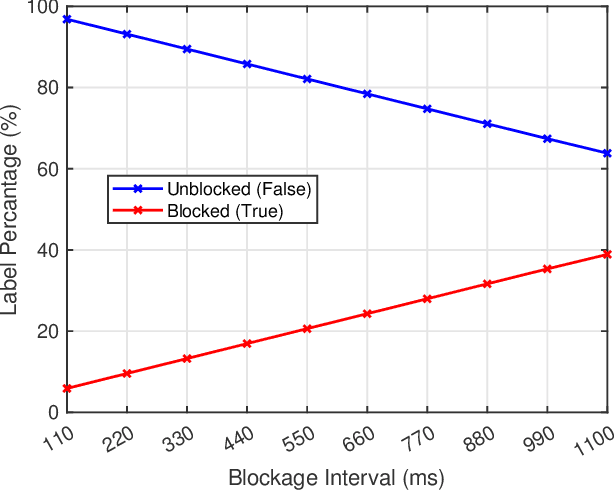

Radar Aided Proactive Blockage Prediction in Real-World Millimeter Wave Systems

Nov 29, 2021

Abstract: Millimeter wave (mmWave) and sub-terahertz communication systems rely mainly on line-of-sight (LOS) links between the transmitters and receivers. The sensitivity of these high-frequency LOS links to blockages, however, challenges the reliability and latency requirements of these communication networks. In this paper, we propose to utilize radar sensors to provide sensing information about the surrounding environment and moving objects, and leverage this information to proactively predict future link blockages before they happen. This is motivated by the low cost of the radar sensors, their ability to efficiently capture important features such as the range, angle, and velocity of the moving scatterers (candidate blockages), and their capability to capture radar frames at relatively high speed. We formulate the radar-aided proactive blockage prediction problem and develop two solutions for this problem based on classical radar object tracking and deep neural networks. The two solutions are designed to leverage domain knowledge and the understanding of the blockage prediction problem. To accurately evaluate the proposed solutions, we build a large-scale real-world dataset, based on the DeepSense framework, gathering co-existing radar and mmWave communication measurements of more than $10$ thousand data points and various blockage objects (vehicles, bikes, humans, etc.). The evaluation results, based on this dataset, show that the proposed approaches can predict future blockages $1$ second before they happen with more than $90\%$ $F_1$ score (and more than $90\%$ accuracy). These results, among others, highlight a promising solution for blockage prediction and reliability enhancement in future wireless mmWave and terahertz communication systems.
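The $F_1$ score reported above is the harmonic mean of precision and recall. As a brief sketch of how such a score could be computed for binary blockage predictions (1 = blockage, 0 = no blockage), with made-up labels used purely for illustration:

```python
def f1_score(y_true, y_pred):
    """F1 score for binary labels, treating 1 (blockage) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Illustrative labels only; these are not measurements from the dataset.
truth = [1, 1, 0, 0, 1]
predicted = [1, 0, 0, 1, 1]
score = f1_score(truth, predicted)
```

Unlike plain accuracy, $F_1$ ignores true negatives, so it stays informative when blockage events are rare compared with unblocked samples.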

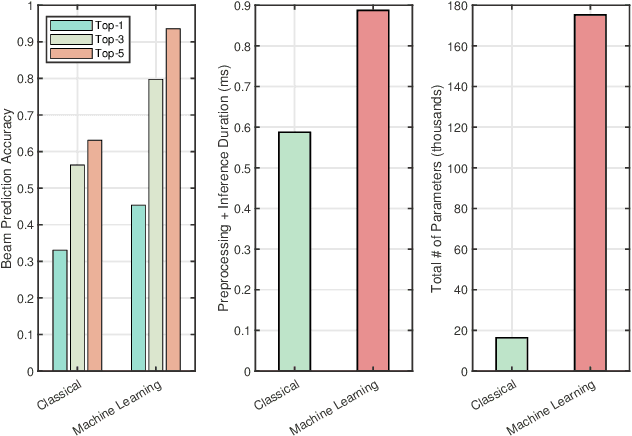

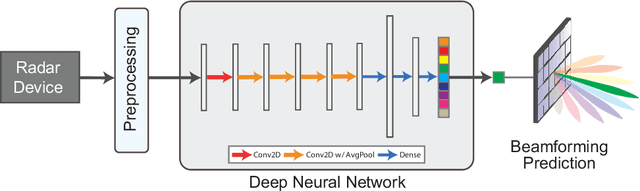

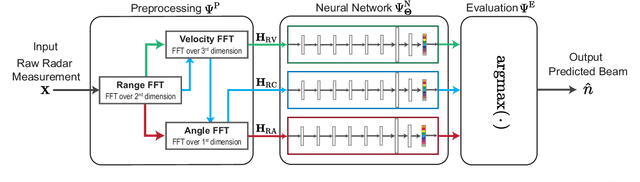

Radar Aided 6G Beam Prediction: Deep Learning Algorithms and Real-World Demonstration

Nov 18, 2021

Abstract: This paper presents the first machine learning based real-world demonstration for radar-aided beam prediction in a practical vehicular communication scenario. Leveraging radar sensory data at the communication terminals provides important awareness about the transmitter/receiver locations and the surrounding environment. This awareness could be utilized to reduce or even eliminate the beam training overhead in millimeter wave (mmWave) and sub-terahertz (THz) MIMO communication systems, which enables a wide range of highly-mobile low-latency applications. In this paper, we develop deep learning based radar-aided beam prediction approaches for mmWave/sub-THz systems. The developed solutions leverage domain knowledge for radar signal processing to extract the relevant features fed to the learning models. This optimizes their performance, complexity, and inference time. The proposed radar-aided beam prediction solutions are evaluated using the large-scale real-world dataset DeepSense 6G, which comprises co-existing mmWave beam training and radar measurements. In addition to completely eliminating the radar/communication calibration overhead, the experimental results showed that the proposed algorithms are able to achieve around $90\%$ top-5 beam prediction accuracy while saving $93\%$ of the beam training overhead. This highlights a promising direction for addressing the beam management overhead challenges in mmWave/THz communication systems.
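Top-5 accuracy, as quoted above, counts a prediction as correct when the true best beam is among the five highest-scoring candidates. The sketch below shows one way to compute top-$k$ accuracy from per-beam scores; the scores and labels are invented for illustration and the function name is an assumption.

```python
def top_k_accuracy(scores, true_beams, k=5):
    """Fraction of samples whose true beam index is among the k highest-scoring beams."""
    if not true_beams:
        raise ValueError("no samples to evaluate")
    hits = 0
    for sample_scores, true_beam in zip(scores, true_beams):
        # Rank beam indices from highest to lowest predicted score.
        ranked = sorted(
            range(len(sample_scores)),
            key=lambda b: sample_scores[b],
            reverse=True,
        )
        if true_beam in ranked[:k]:
            hits += 1
    return hits / len(true_beams)


# Toy example with a 3-beam codebook (illustrative values only).
scores = [[0.1, 0.5, 0.4], [0.7, 0.2, 0.1], [0.2, 0.3, 0.5]]
true_beams = [2, 1, 0]
top2 = top_k_accuracy(scores, true_beams, k=2)
```

In a sensing-aided setup, only the $k$ predicted beams would then need to be swept over the air instead of the whole codebook, which is where the training-overhead saving comes from.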
