Tawfik Osman

Pixel-Level GPS Localization and Denoising using Computer Vision and 6G Communication Beams

Jul 28, 2024

Abstract:Accurate localization is crucial for various applications, including autonomous vehicles and next-generation wireless networks. However, the reliability and precision of Global Navigation Satellite Systems (GNSS), such as the Global Positioning System (GPS), are compromised by multi-path errors and non-line-of-sight scenarios. This paper presents a novel approach to enhance GPS accuracy by combining visual data from RGB cameras with wireless signals captured at millimeter-wave (mmWave) and sub-terahertz (sub-THz) basestations. We propose a sensing-aided framework for (i) site-specific GPS data characterization and (ii) GPS position de-noising that utilizes multi-modal visual and wireless information. Our approach is validated in a realistic Vehicle-to-Infrastructure (V2I) scenario using a comprehensive real-world dataset, demonstrating a substantial reduction in localization error to sub-meter levels. This method represents a significant advancement in achieving precise localization, particularly beneficial for high-mobility applications in 5G and beyond networks.

Vehicle Cameras Guide mmWave Beams: Approach and Real-World V2V Demonstration

Aug 20, 2023

Abstract:Accurately aligning millimeter-wave (mmWave) and terahertz (THz) narrow beams is essential to satisfy the reliability and high data rate requirements of 5G and beyond wireless communication systems. However, achieving this objective is difficult, especially in vehicle-to-vehicle (V2V) communication scenarios, where both the transmitter and the receiver are constantly mobile. Recently, additional sensing modalities, such as visual sensors, have attracted significant interest due to their capability to provide accurate information about the wireless environment. To that end, in this paper, we develop a deep learning solution for V2V scenarios to predict future beams using images from a 360-degree camera attached to the vehicle. The developed solution is evaluated on a real-world multi-modal mmWave V2V communication dataset comprising co-existing 360-degree camera and mmWave beam training data. The proposed vision-aided solution achieves $\approx 85\%$ top-5 beam prediction accuracy while significantly reducing the beam training overhead. This highlights the potential of utilizing vision for enabling highly-mobile V2V communications.

Camera Based mmWave Beam Prediction: Towards Multi-Candidate Real-World Scenarios

Aug 14, 2023

Abstract:Leveraging sensory information to aid the millimeter-wave (mmWave) and sub-terahertz (sub-THz) beam selection process is attracting increasing interest. This sensory data, captured for example by cameras at the basestations, has the potential to significantly reduce the beam sweeping overhead and enable highly-mobile applications. The solutions developed so far, however, have mainly considered single-candidate scenarios, i.e., scenarios with a single candidate user in the visual scene, and were evaluated using synthetic datasets. To address these limitations, this paper extensively investigates the sensing-aided beam prediction problem in a real-world multi-object vehicle-to-infrastructure (V2I) scenario and presents a comprehensive machine learning-based framework. In particular, this paper proposes to utilize visual and positional data to predict the optimal beam indices as an alternative to the conventional beam sweeping approaches. For this, a novel user (transmitter) identification solution has been developed, a key step in realizing sensing-aided multi-candidate and multi-user beam prediction solutions. The proposed solutions are evaluated on the large-scale real-world DeepSense 6G dataset. Experimental results in realistic V2I communication scenarios indicate that the proposed solutions achieve close to $100\%$ top-5 beam prediction accuracy for single-user scenarios and close to $95\%$ top-5 beam prediction accuracy for multi-candidate scenarios. Furthermore, the proposed approach can identify the probable transmitting candidate with more than $93\%$ accuracy across the different scenarios. This highlights a promising approach for nearly eliminating the beam training overhead in mmWave/THz communication systems.

Real-World Evaluation of Full-Duplex Millimeter Wave Communication Systems

Jul 20, 2023

Abstract:Noteworthy strides continue to be made in the development of full-duplex millimeter wave (mmWave) communication systems, but most of this progress has been built on theoretical models and validated through simulation. In this work, we conduct a long overdue real-world evaluation of full-duplex mmWave systems using off-the-shelf 60 GHz phased arrays. Using an experimental full-duplex base station, we collect over 200,000 measurements of self-interference by electronically sweeping its transmit and receive beams across a dense spatial profile, shedding light on the effects of the environment, array positioning, and beam steering direction. We then call attention to five key challenges faced by practical full-duplex mmWave systems and, with these in mind, propose a general framework for beamforming-based full-duplex solutions. Guided by this framework, we introduce a novel solution called STEER+, a more robust version of recent work called STEER, and experimentally evaluate both in a real-world setting with actual downlink and uplink users. Rather than purely minimize self-interference as with STEER, STEER+ makes use of additional measurements to maximize spectral efficiency, which proves to make it much less sensitive to one's choice of design parameters. We experimentally show that STEER+ can reliably reduce self-interference to near or below the noise floor while maintaining high SNR on the downlink and uplink, thus enabling full-duplex operation purely via beamforming.

A Digital Twin Assisted Framework for Interference Nulling in Millimeter Wave MIMO Systems

Jan 30, 2023

Abstract:Millimeter wave (mmWave) and terahertz MIMO systems rely on pre-defined beamforming codebooks for both initial access and data transmission. However, most of the existing codebooks adopt pre-defined beams that focus mainly on improving the gain of their target users, without taking interference into account, which could incur critical performance degradation in dense networks. To address this problem, in this paper, we propose a sample-efficient digital twin-assisted beam pattern design framework that learns how to form the beam pattern to reject the signals from the interfering directions. The proposed approach does not require any explicit channel knowledge or any coordination with the interferers. The adoption of the digital twin improves the sample efficiency by better leveraging the underlying signal relationship and by incorporating a demand-based data acquisition strategy. Simulation results show that the developed signal model-based learning framework can significantly reduce the actual interaction with the radio environment (i.e., the number of measurements) compared to the model-unaware design, leading to a more practical and efficient interference-aware beam design approach.

DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset

Nov 17, 2022

Abstract:This article presents the DeepSense 6G dataset, which is a large-scale dataset based on real-world measurements of co-existing multi-modal sensing and communication data. The DeepSense 6G dataset is built to advance deep learning research in a wide range of applications in the intersection of multi-modal sensing, communication, and positioning. This article provides a detailed overview of the DeepSense dataset structure, adopted testbeds, data collection and processing methodology, deployment scenarios, and example applications, with the objective of facilitating the adoption and reproducibility of multi-modal sensing and communication datasets.

Online Beam Learning with Interference Nulling for Millimeter Wave MIMO Systems

Sep 09, 2022

Abstract:Employing large antenna arrays is a key characteristic of millimeter wave (mmWave) and terahertz communication systems. Due to the hardware constraints and the lack of channel knowledge, codebook-based beamforming/combining is normally adopted to achieve the desired array gain. However, most of the existing codebooks focus only on improving the gain of their target user, without taking interference into account. This can incur critical performance degradation in dense networks. In this paper, we propose a sample-efficient online reinforcement learning based beam pattern design algorithm that learns how to shape the beam pattern to null the interfering directions. The proposed approach does not require any explicit channel knowledge or any coordination with the interferers. Simulation results show that the developed solution is capable of learning well-shaped beam patterns that significantly suppress the interference while sacrificing only a tolerable amount of beamforming/combining gain from the desired user. Furthermore, a hardware proof-of-concept prototype based on mmWave phased arrays is built and used to implement and evaluate the developed online beam learning solutions in realistic scenarios. The learned beam patterns, measured in an anechoic chamber, show the performance gains of the developed framework and highlight a promising machine learning based beam/codebook optimization direction for mmWave and terahertz systems.

Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets

Nov 15, 2021

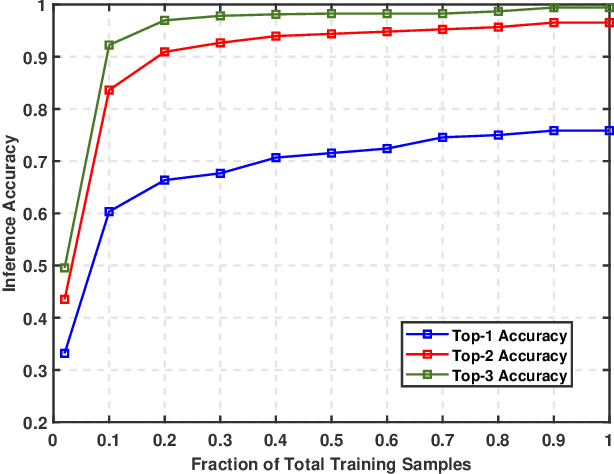

Abstract:Enabling highly-mobile millimeter wave (mmWave) and terahertz (THz) wireless communication applications requires overcoming the critical challenges associated with the large antenna arrays deployed in these systems. In particular, adjusting the narrow beams of these antenna arrays typically incurs high beam training overhead that scales with the number of antennas. To address these challenges, this paper proposes a multi-modal machine learning based approach that leverages positional and visual (camera) data collected from the wireless communication environment for fast beam prediction. The developed framework has been tested on a real-world vehicular dataset comprising practical GPS, camera, and mmWave beam training data. The results show that the proposed approach achieves $\approx 75\%$ top-1 beam prediction accuracy and close to $100\%$ top-3 beam prediction accuracy in realistic communication scenarios.
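The top-1 and top-3 figures quoted in these abstracts are top-k accuracies: a prediction counts as correct when the ground-truth best beam is among the k beams the model ranks highest. The sketch below illustrates that metric only. The function name `top_k_accuracy`, the score layout, and the toy numbers are assumptions made for illustration and are not taken from the paper or from the DeepSense 6G tooling.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]],
    best_beams: Sequence[int],
    k: int,
) -> float:
    """Fraction of samples whose true best beam is among the k top-scored beams.

    scores[i][b] is the (hypothetical) model score for beam b on sample i;
    best_beams[i] is the ground-truth beam index found by beam training.
    """
    if len(scores) != len(best_beams) or not scores:
        raise ValueError("scores and best_beams must be non-empty and equal in length")
    hits = 0
    for sample_scores, best in zip(scores, best_beams):
        # Rank beam indices from highest to lowest score.
        ranked = sorted(
            range(len(sample_scores)),
            key=lambda b: sample_scores[b],
            reverse=True,
        )
        if best in ranked[:k]:
            hits += 1
    return hits / len(scores)


# Toy data: 3 samples, a 4-beam codebook (illustrative values only).
scores = [
    [0.10, 0.60, 0.20, 0.10],  # ranking: 1, 2, 0, 3
    [0.30, 0.10, 0.40, 0.20],  # ranking: 2, 0, 3, 1
    [0.25, 0.05, 0.30, 0.40],  # ranking: 3, 2, 0, 1
]
best_beams = [1, 0, 1]

top1 = top_k_accuracy(scores, best_beams, k=1)
top3 = top_k_accuracy(scores, best_beams, k=3)
print(f"top-1: {top1:.2f}, top-3: {top3:.2f}")
```

A high top-3 accuracy matters in practice because the system can then sweep only the few predicted beams instead of the whole codebook, which is where the reduction in beam training overhead comes from.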

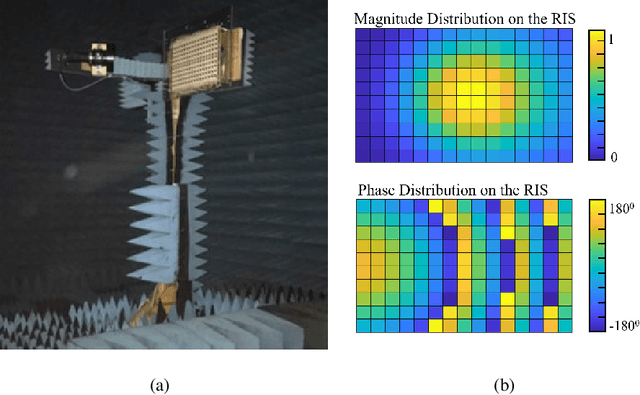

Design and Evaluation of Reconfigurable Intelligent Surfaces in Real-World Environment

Sep 16, 2021

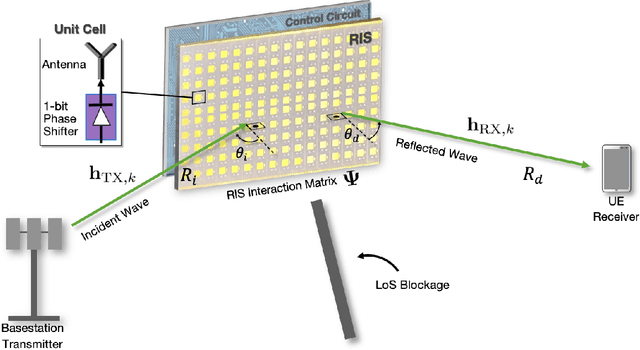

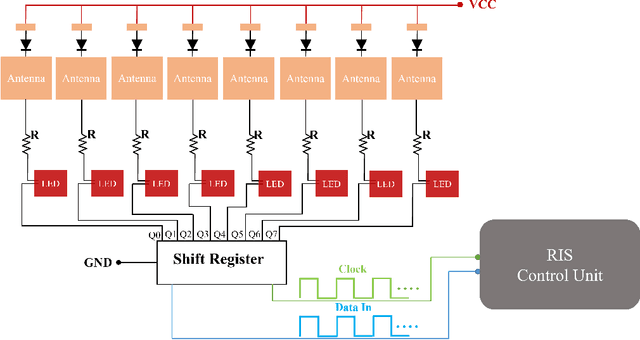

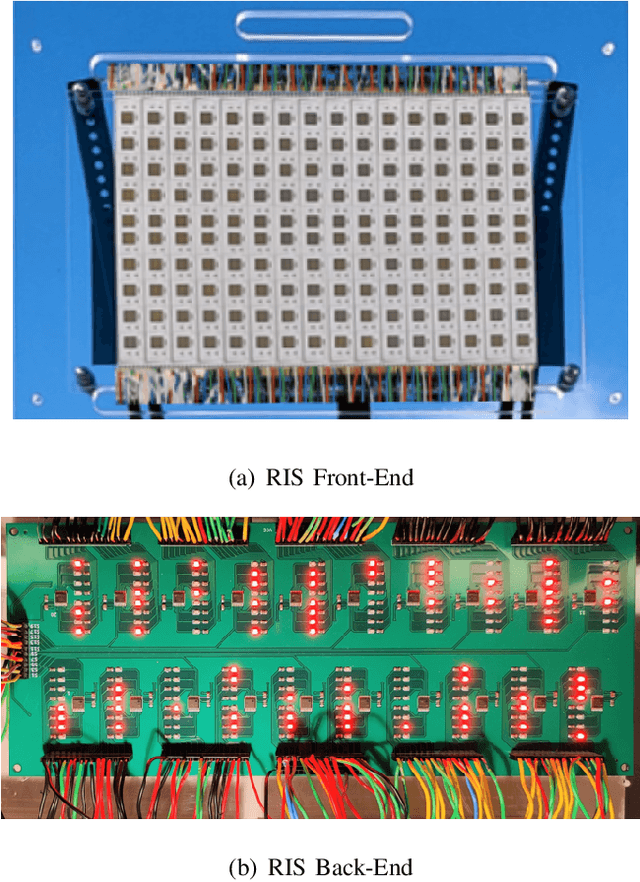

Abstract:Reconfigurable intelligent surfaces (RISs) have promising coverage and data rate gains for wireless communication systems in 5G and beyond. Prior work has mainly focused on analyzing the performance of these surfaces using computer simulations or lab-level prototypes. To draw accurate insights about the actual performance of these systems, this paper develops an RIS proof-of-concept prototype and extensively evaluates its potential gains in the field and under realistic wireless communication settings. In particular, a 160-element reconfigurable surface, operating at a 5.8 GHz band, is first designed, fabricated, and accurately measured in the anechoic chamber. This surface is then integrated into a wireless communication system and the beamforming gains, path-loss, and coverage improvements are evaluated in realistic outdoor communication scenarios. When both the transmitter and receiver employ directional antennas and with 5 m and 10 m distances between the transmitter-RIS and RIS-receiver, the developed RIS achieves $15$-$20$ dB gain in the signal-to-noise ratio (SNR) in a range of $\pm60^\circ$ beamforming angles. In terms of coverage, and considering a far-field experiment with a blockage between a base station and a grid of mobile users and with an average distance of $35$ m between base station (BS) and the user (through the RIS), the RIS provides an average SNR improvement of $6$ dB (max $8$ dB) within an area $> 75$ m$^2$. Thanks to the scalable RIS design, these SNR gains can be directly increased with larger RIS areas. For example, a 1,600-element RIS with the same design is expected to provide around $26$ dB SNR gain for a similar deployment. These results, among others, draw useful insights into the design and performance of RIS systems and provide an important proof for their potential gains in real-world far-field wireless communication environments.
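The abstract does not state how the 26 dB projection is derived. One reading that matches the numbers assumes that the received power through an RIS grows with the square of the number of elements (a scaling commonly cited in the RIS literature) and that the 6 dB average improvement of the 160-element surface is the baseline. The sketch below works through that arithmetic; the function name `scaled_gain_db` and the choice of baseline are assumptions, not details from the paper.

```python
import math


def scaled_gain_db(
    base_gain_db: float,
    base_elements: int,
    new_elements: int,
    exponent: float = 2.0,
) -> float:
    """Project an SNR gain to a larger surface.

    Assumes gain is proportional to N**exponent, where N is the element count.
    exponent=2.0 is the assumed square-law scaling; it is not stated in the abstract.
    """
    ratio = new_elements / base_elements
    return base_gain_db + 10.0 * exponent * math.log10(ratio)


# Assumed baseline: 6 dB average SNR improvement with 160 elements.
projected = scaled_gain_db(base_gain_db=6.0, base_elements=160, new_elements=1600)
print(f"Projected gain for 1,600 elements: {projected:.1f} dB")
```

Under these assumptions a tenfold increase in elements adds 20 dB, which is in line with the roughly 26 dB figure quoted in the abstract.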
