Muhammad Alrabeiah

Electrical Engineering Department, King Saud University, Saudi Arabia

Camera Based mmWave Beam Prediction: Towards Multi-Candidate Real-World Scenarios

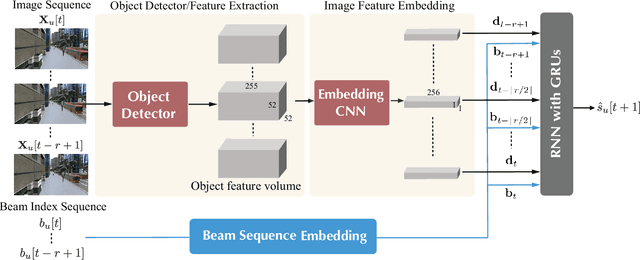

Aug 14, 2023

Abstract:Leveraging sensory information to aid the millimeter-wave (mmWave) and sub-terahertz (sub-THz) beam selection process is attracting increasing interest. This sensory data, captured for example by cameras at the basestations, has the potential to significantly reduce the beam sweeping overhead and enable highly-mobile applications. The solutions developed so far, however, have mainly considered single-candidate scenarios, i.e., scenarios with a single candidate user in the visual scene, and were evaluated using synthetic datasets. To address these limitations, this paper extensively investigates the sensing-aided beam prediction problem in a real-world multi-object vehicle-to-infrastructure (V2I) scenario and presents a comprehensive machine learning-based framework. In particular, this paper proposes to utilize visual and positional data to predict the optimal beam indices as an alternative to the conventional beam sweeping approaches. For this, a novel user (transmitter) identification solution has been developed, a key step in realizing sensing-aided multi-candidate and multi-user beam prediction solutions. The proposed solutions are evaluated on the large-scale real-world DeepSense 6G dataset. Experimental results in realistic V2I communication scenarios indicate that the proposed solutions achieve close to $100\%$ top-5 beam prediction accuracy for single-user scenarios and close to $95\%$ top-5 beam prediction accuracy for multi-candidate scenarios. Furthermore, the proposed approach can identify the probable transmitting candidate with more than $93\%$ accuracy across the different scenarios. This highlights a promising approach for nearly eliminating the beam training overhead in mmWave/THz communication systems.
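
The top-5 figures quoted above are top-k accuracies: a prediction counts as correct when the ground-truth optimal beam index is among the k highest-scoring beams. The following is a minimal sketch of that metric, not the paper's evaluation code. The function name `top_k_accuracy`, the toy score table, and the 4-beam codebook are all assumptions made for illustration.

```python
def top_k_accuracy(scores, best_beams, k=5):
    """Fraction of samples whose true best beam is among the k top-scoring beams.

    scores:     one list of per-beam scores per sample (hypothetical model output)
    best_beams: ground-truth optimal beam index per sample
    """
    hits = 0
    for sample_scores, best in zip(scores, best_beams):
        # Rank beam indices from highest to lowest predicted score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda b: sample_scores[b], reverse=True)
        if best in ranked[:k]:
            hits += 1
    return hits / len(best_beams)


# Toy example: 3 samples, a 4-beam codebook (illustrative values only).
scores = [
    [0.10, 0.60, 0.20, 0.10],
    [0.40, 0.30, 0.20, 0.10],
    [0.05, 0.15, 0.30, 0.50],
]
best_beams = [1, 2, 0]

top1 = top_k_accuracy(scores, best_beams, k=1)
top3 = top_k_accuracy(scores, best_beams, k=3)
print(f"top-1: {top1:.3f}, top-3: {top3:.3f}")
```

Sweeping only the k predicted beams, rather than the whole codebook, is what lets a high top-k accuracy translate into reduced beam training overhead.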

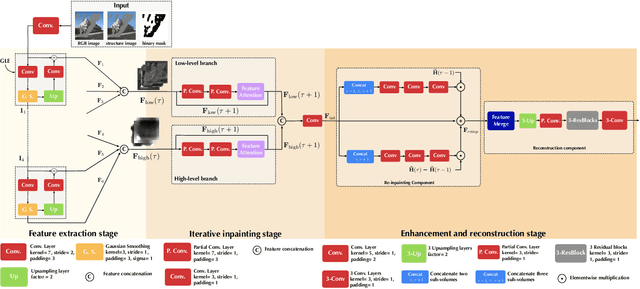

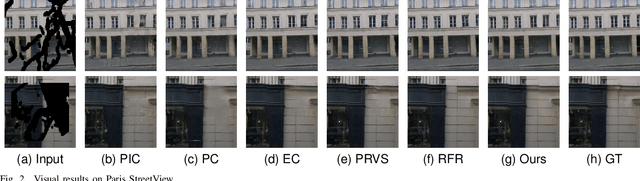

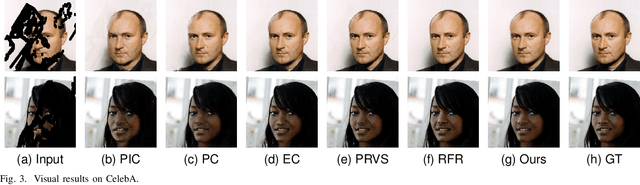

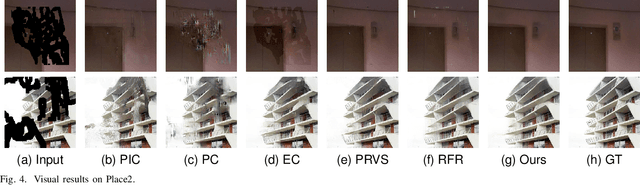

Progressive with Purpose: Guiding Progressive Inpainting DNNs through Context and Structure

Sep 21, 2022

Abstract:The advent of deep learning in the past decade has significantly helped advance image inpainting. Although achieving promising performance, deep learning-based inpainting algorithms still suffer from the distortion caused by the fusion of structural and contextual features, which are commonly obtained from, respectively, deep and shallow layers of a convolutional encoder. Motivated by this observation, we propose a novel progressive inpainting network that maintains the structural and contextual integrity of a processed image. More specifically, inspired by the Gaussian and Laplacian pyramids, the core of the proposed network is a feature extraction module named GLE. Stacking GLE modules enables the network to extract image features from different image frequency components. This ability is important for maintaining structural and contextual integrity, because high frequency components correspond to structural information while low frequency components correspond to contextual information. The proposed network utilizes the GLE features to progressively fill in missing regions in a corrupted image in an iterative manner. Our benchmarking experiments demonstrate that the proposed method achieves a clear improvement in performance over many state-of-the-art inpainting algorithms.
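
To make the frequency split concrete, here is a minimal sketch of the classic pyramid idea that the abstract cites as inspiration. It is not the GLE module itself. For brevity it works on a 1-D signal and uses pairwise averaging as a crude stand-in for Gaussian smoothing; all function names are hypothetical. Each level stores a high-frequency residual (fine detail) and passes a coarser low-frequency signal onward.

```python
def downsample(x):
    """Average neighbouring pairs (a box filter standing in for Gaussian blur)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x) - 1, 2)]


def upsample(x):
    """Repeat every value twice to restore the previous resolution."""
    return [v for v in x for _ in range(2)]


def laplacian_pyramid(x, levels):
    """Split x into per-level high-frequency bands plus one coarse residual.

    Assumes len(x) is divisible by 2 ** levels.
    """
    bands = []
    current = list(x)
    for _ in range(levels):
        low = downsample(current)
        # High-frequency band: what the coarse version fails to explain.
        bands.append([c - u for c, u in zip(current, upsample(low))])
        current = low
    return bands, current


def reconstruct(bands, coarse):
    """Invert the decomposition by adding the bands back, coarse to fine."""
    current = coarse
    for band in reversed(bands):
        current = [u + d for u, d in zip(upsample(current), band)]
    return current


signal = [1, 3, 2, 6, 5, 5, 0, 4]
bands, coarse = laplacian_pyramid(signal, levels=2)
restored = reconstruct(bands, coarse)
print(bands, coarse, restored)
```

The decomposition is lossless: adding the bands back onto the upsampled coarse signal recovers the input, so each frequency component can be handled separately without discarding information.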

Blockage Prediction Using Wireless Signatures: Deep Learning Enables Real-World Demonstration

Nov 16, 2021

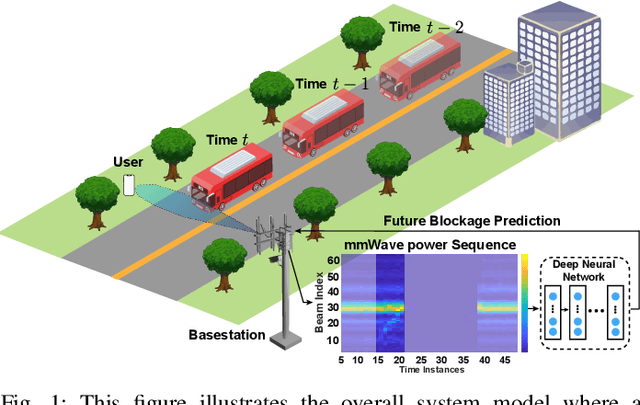

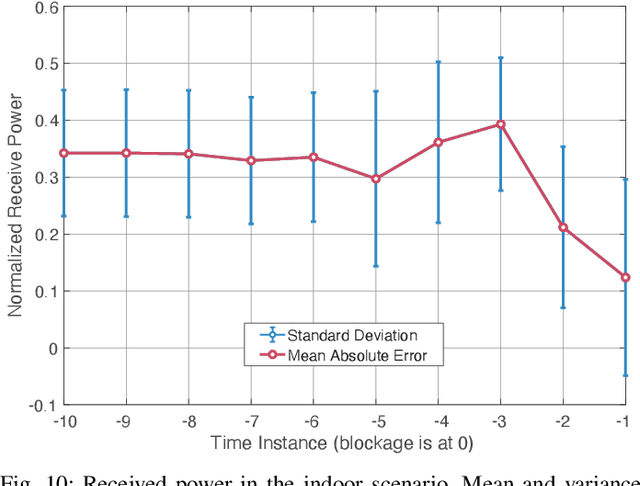

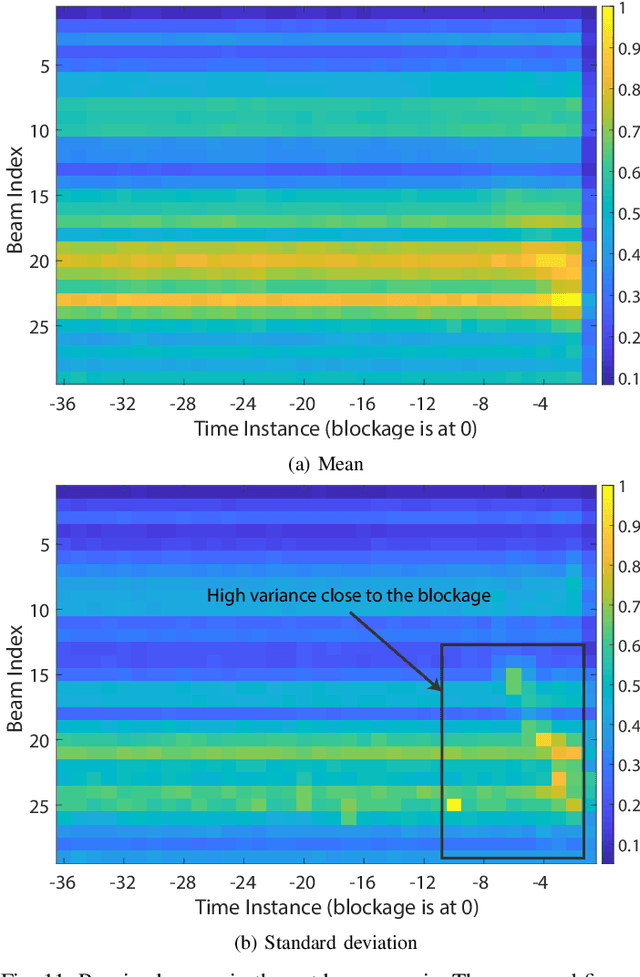

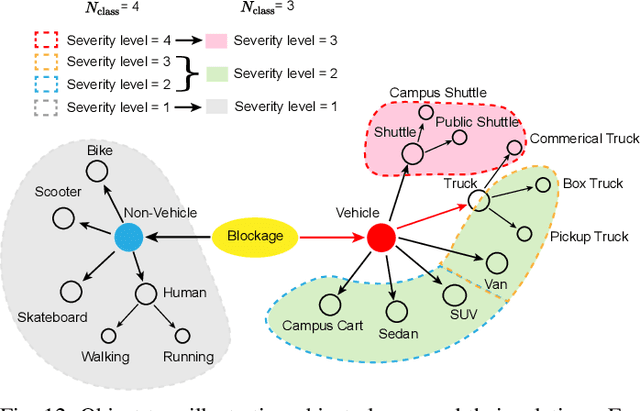

Abstract:Overcoming the link blockage challenges is essential for enhancing the reliability and latency of millimeter wave (mmWave) and sub-terahertz (sub-THz) communication networks. Previous approaches relied mainly on either (i) multiple-connectivity, which under-utilizes the network resources, or (ii) the use of out-of-band and non-RF sensors to predict link blockages, which is associated with increased cost and system complexity. In this paper, we propose a novel solution that relies only on in-band mmWave wireless measurements to proactively predict future dynamic line-of-sight (LOS) link blockages. The proposed solution utilizes deep neural networks and special patterns of received signal power, which we call pre-blockage wireless signatures, to infer future blockages. Specifically, the developed machine learning models attempt to predict: (i) whether a future blockage will occur, (ii) when this blockage will happen, (iii) the type of the blockage, and (iv) the direction of the moving blockage. To evaluate our proposed approach, we build a large-scale real-world dataset comprising nearly $0.5$ million data points (mmWave measurements) for both indoor and outdoor blockage scenarios. The results, using this dataset, show that the proposed approach can successfully predict the occurrence of future dynamic blockages with more than $85\%$ accuracy. Further, for the outdoor scenario with highly-mobile vehicular blockages, the proposed model can predict the exact time of the future blockage with less than $80$ ms error for blockages happening within the future $500$ ms. These results, among others, highlight the promising gains of the proposed proactive blockage prediction solution, which could potentially enhance the reliability and latency of future wireless networks.

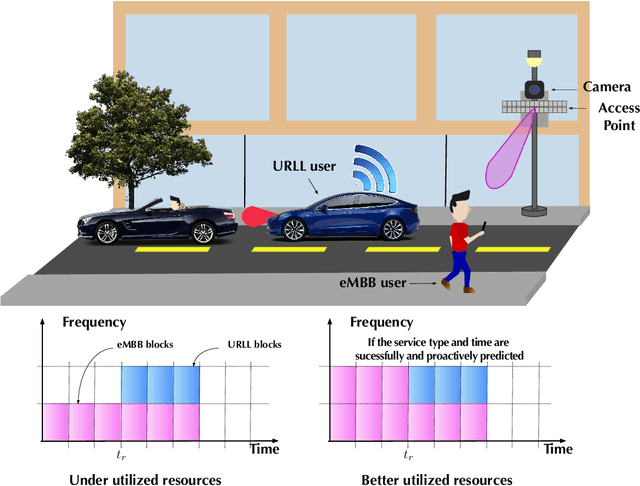

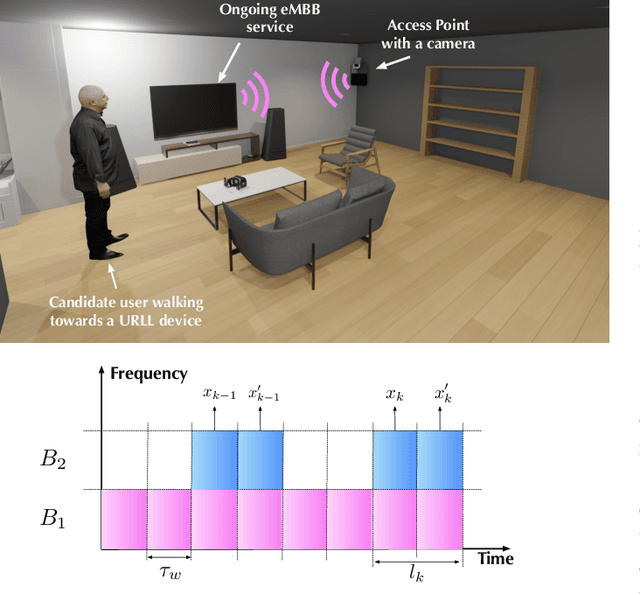

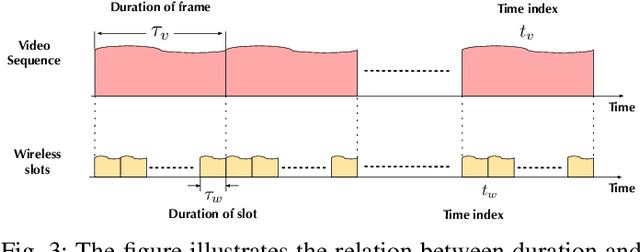

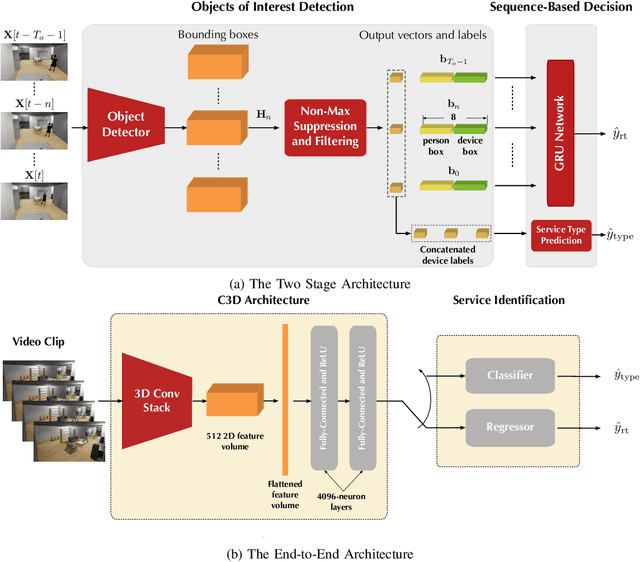

Computer Vision Aided URLL Communications: Proactive Service Identification and Coexistence

Mar 18, 2021

Abstract:The support of coexisting ultra-reliable and low-latency (URLL) and enhanced Mobile BroadBand (eMBB) services is a key challenge for the current and future wireless communication networks. Those two types of services introduce strict, and at times conflicting, resource allocation requirements that may result in a power-struggle between reliability, latency, and resource utilization in wireless networks. The difficulty in addressing that challenge could be traced back to the predominant reactive approach in allocating the wireless resources. This allocation operation is carried out based on received service requests and global network statistics, which may not incorporate a sense of *proaction*. Therefore, this paper proposes a novel framework termed *service identification* to develop novel proactive resource allocation algorithms. The developed framework is based on visual data (captured for example by RGB cameras) and deep learning (e.g., deep neural networks). The ultimate objective of this framework is to equip future wireless networks with the ability to analyze user behavior, anticipate incoming services, and perform proactive resource allocation. To demonstrate the potential of the proposed framework, a wireless network scenario with two coexisting URLL and eMBB services is considered, and two deep learning algorithms are designed to utilize RGB video frames and predict the incoming service type and its request time. An evaluation dataset based on the considered scenario is developed and used to evaluate the performance of the two algorithms. The results confirm the anticipated value of proaction to wireless networks; the proposed models enable efficient network performance, ensuring more than $85\%$ utilization of the network resources at $\sim 98\%$ reliability. This highlights a promising direction for the future vision-aided wireless communication networks.

Reinforcement Learning of Beam Codebooks in Millimeter Wave and Terahertz MIMO Systems

Feb 22, 2021

Abstract:Millimeter wave (mmWave) and terahertz MIMO systems rely on pre-defined beamforming codebooks for both initial access and data transmission. Being pre-defined, however, these codebooks are commonly not optimized for specific environments, user distributions, and/or possible hardware impairments. This leads to large codebook sizes with high beam training overhead which increases the initial access/tracking latency and makes it hard for these systems to support highly mobile applications. To overcome these limitations, this paper develops a deep reinforcement learning framework that learns how to iteratively optimize the codebook beam patterns (shapes) relying only on the receive power measurements and without requiring any explicit channel knowledge. The developed model learns how to autonomously adapt the beam patterns to best match the surrounding environment, user distribution, hardware impairments, and array geometry. Further, this approach does not require any knowledge about the channel, array geometry, RF hardware, or user positions. To reduce the learning time, the proposed model designs a novel Wolpertinger-variant architecture that is capable of efficiently searching for an optimal policy in a large discrete action space, which is important for large antenna arrays with quantized phase shifters. This complex-valued neural network architecture design respects the practical RF hardware constraints such as the constant-modulus and quantized phase shifter constraints. Simulation results based on the publicly available DeepMIMO dataset confirm the ability of the developed framework to learn near-optimal beam patterns for both line-of-sight (LOS) and non-LOS scenarios and for arrays with hardware impairments without requiring any channel knowledge.
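
The constant-modulus and quantized phase shifter constraints mentioned above can be illustrated with a small sketch. This is not the paper's learning framework. It only shows what a feasible beamforming vector looks like and why the action space is large. The uniform linear array with half-wavelength spacing, the 2-bit phase resolution, and every function name are assumptions chosen for the toy example; the abstract states that the actual method needs no knowledge of the array geometry.

```python
import cmath
import math


def quantize_phase(theta, bits):
    """Snap a phase (radians) to the nearest of 2**bits uniformly spaced levels."""
    levels = 2 ** bits
    step = 2 * math.pi / levels
    return (round(theta / step) % levels) * step


def beam_vector(phases):
    """Constant-modulus beamforming vector: every entry has magnitude 1/sqrt(N)."""
    n = len(phases)
    return [cmath.exp(1j * p) / math.sqrt(n) for p in phases]


def beam_gain(w, h):
    """Beamforming gain |w^H h|^2 for weights w and channel h."""
    return abs(sum(wi.conjugate() * hi for wi, hi in zip(w, h))) ** 2


num_antennas, bits = 8, 2
angle = math.radians(25)  # assumed user direction for the toy channel

# Toy line-of-sight channel of a half-wavelength-spaced uniform linear array.
ideal_phases = [math.pi * n * math.sin(angle) for n in range(num_antennas)]
channel = [cmath.exp(1j * p) for p in ideal_phases]

gain_ideal = beam_gain(beam_vector(ideal_phases), channel)
quantized = [quantize_phase(p, bits) for p in ideal_phases]
gain_quantized = beam_gain(beam_vector(quantized), channel)

# Number of distinct beams a search would have to consider.
num_beams = (2 ** bits) ** num_antennas
print(gain_ideal, gain_quantized, num_beams)
```

Even this small array with 2-bit phase shifters admits $4^8 = 65536$ candidate beams, and the count grows exponentially with the number of antennas, which is why an efficient search over a large discrete action space matters.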

Vision-Aided 6G Wireless Communications: Blockage Prediction and Proactive Handoff

Feb 20, 2021

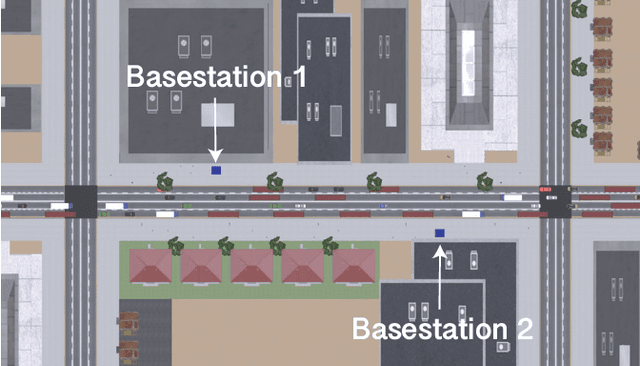

Abstract:The sensitivity to blockages is a key challenge for the high-frequency (5G millimeter wave and 6G sub-terahertz) wireless networks. Since these networks mainly rely on line-of-sight (LOS) links, sudden link blockages highly threaten the reliability of the networks. Further, when the LOS link is blocked, the network typically needs to hand off the user to another LOS basestation, which may incur critical time latency, especially if a search over a large codebook of narrow beams is needed. A promising way to tackle the reliability and latency challenges lies in enabling proaction in wireless networks. Proaction basically allows the network to anticipate blockages, especially dynamic blockages, and initiate user hand-off beforehand. This paper presents a complete machine learning framework for enabling proaction in wireless networks relying on visual data captured, for example, by RGB cameras deployed at the base stations. In particular, the paper proposes a vision-aided wireless communication solution that utilizes bimodal machine learning to perform proactive blockage prediction and user hand-off. The bedrock of this solution is a deep learning algorithm that learns from visual and wireless data how to predict incoming blockages. The predictions of this algorithm are used by the wireless network to proactively initiate hand-off decisions and avoid any unnecessary latency. The algorithm is developed on a vision-wireless dataset generated using the ViWi data-generation framework. Experimental results on two basestations with different cameras indicate that the algorithm is capable of accurately detecting incoming blockages more than $\sim 90\%$ of the time. Such blockage prediction ability is directly reflected in the accuracy of proactive hand-off, which also approaches $87\%$. This highlights a promising direction for enabling high reliability and low latency in future wireless networks.

Reinforcement Learning for Beam Pattern Design in Millimeter Wave and Massive MIMO Systems

Feb 18, 2021

Abstract:Employing large antenna arrays is a key characteristic of millimeter wave (mmWave) and terahertz communication systems. However, due to the adoption of fully analog or hybrid analog/digital architectures, as well as non-ideal hardware or arbitrary/unknown array geometries, the accurate channel state information becomes hard to acquire. This impedes the design of beamforming/combining vectors that are crucial to fully exploit the potential of large-scale antenna arrays in providing sufficient receive signal power. In this paper, we develop a novel framework that leverages deep reinforcement learning (DRL) and a Wolpertinger-variant architecture and learns how to iteratively optimize the beam pattern (shape) for serving one or a small set of users relying only on the receive power measurements and without requiring any explicit channel knowledge. The proposed model accounts for key hardware constraints such as the phase-only, constant-modulus, and quantized-angle constraints. Further, the proposed framework can efficiently optimize the beam patterns for systems with non-ideal hardware and for arrays with unknown or arbitrary array geometries. Simulation results show that the developed solution is capable of finding near-optimal beam patterns based only on the receive power measurements.

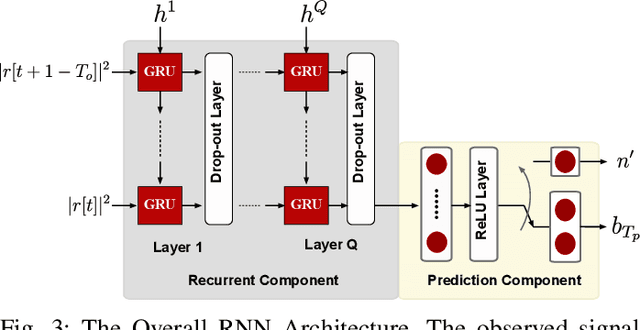

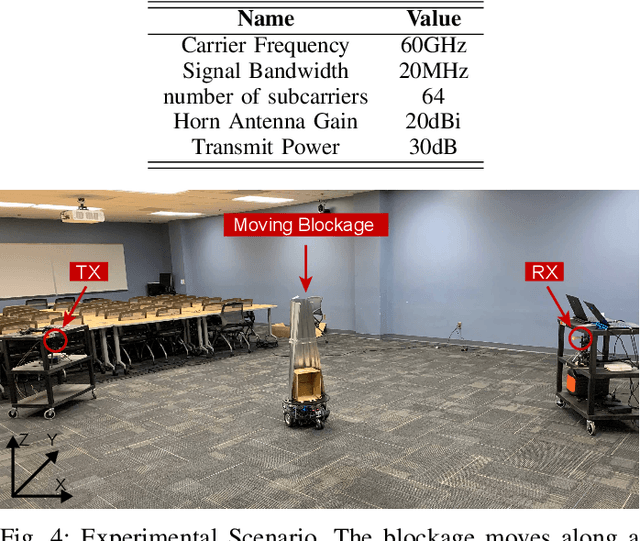

Deep Learning for Moving Blockage Prediction using Real Millimeter Wave Measurements

Feb 08, 2021

Abstract:Millimeter wave (mmWave) communication is a key component of 5G and beyond. Harvesting the gains of the large bandwidth and low latency in mmWave systems, however, is challenged by the sensitivity of mmWave signals to blockages; a sudden blockage in the line of sight (LOS) link leads to abrupt disconnection, which affects the reliability of the network. In addition, searching for an alternative base station to re-establish the link could result in needless latency overhead. In this paper, we address these challenges collectively by utilizing machine learning to anticipate dynamic blockages proactively. In the proposed approach, a machine learning algorithm learns to predict future blockages by observing what we refer to as the pre-blockage signature. To evaluate our proposed approach, we build a mmWave communication setup with a moving blockage and collect a dataset of received power sequences. Simulation results on a real dataset show that blockage occurrence could be predicted with more than 85% accuracy and the exact time instance of blockage occurrence can be obtained with low error. This highlights the potential of the proposed solution for dynamic blockage prediction and proactive hand-off, which enhances the reliability and latency of future wireless networks.

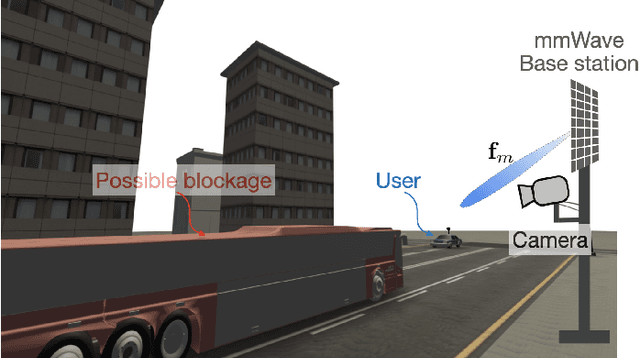

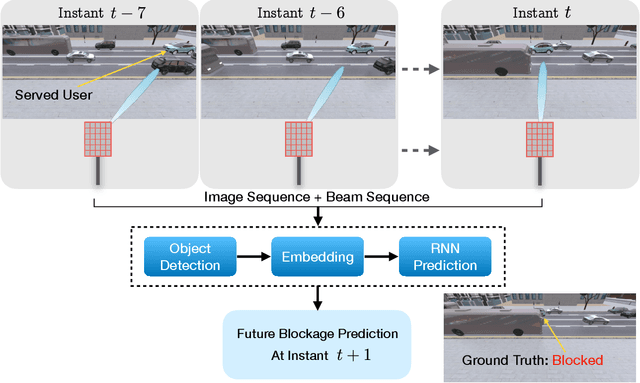

Vision-Aided Dynamic Blockage Prediction for 6G Wireless Communication Networks

Jun 18, 2020

Abstract:Unlocking the full potential of millimeter-wave and sub-terahertz wireless communication networks hinges on realizing unprecedented low-latency and high-reliability requirements. The challenge in meeting those requirements lies partly in the sensitivity of signals in the millimeter-wave and sub-terahertz frequency ranges to blockages. One promising way to tackle that challenge is to help a wireless network develop a sense of its surroundings using machine learning. This paper attempts to do that by utilizing deep learning and computer vision. It proposes a novel solution that proactively predicts *dynamic* link blockages. More specifically, it develops a deep neural network architecture that learns from observed sequences of RGB images and beamforming vectors how to predict possible future link blockages. The proposed architecture is evaluated on a publicly available dataset that represents a synthetic dynamic communication scenario with multiple moving users and blockages. It scores a link-blockage prediction accuracy in the neighborhood of $86\%$, a performance that is unlikely to be matched without utilizing visual data.

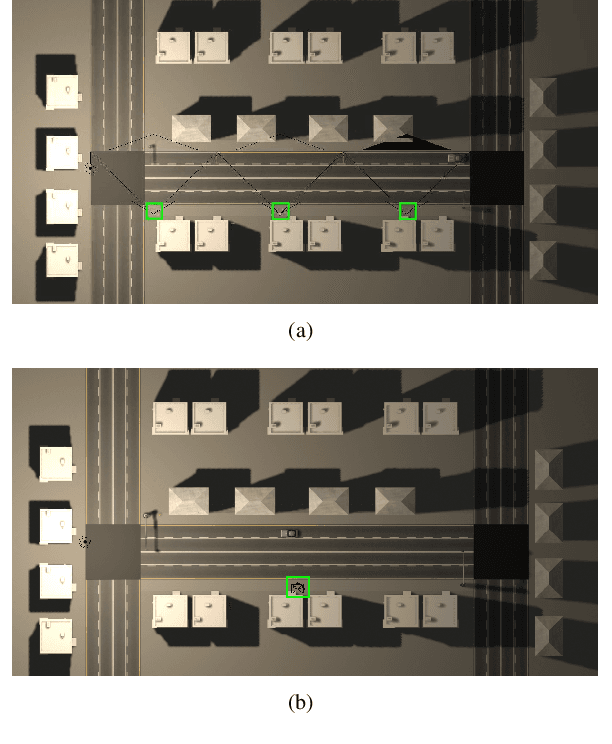

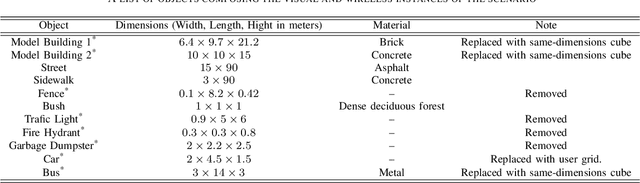

ViWi: A Deep Learning Dataset Framework for Vision-Aided Wireless Communications

Nov 14, 2019

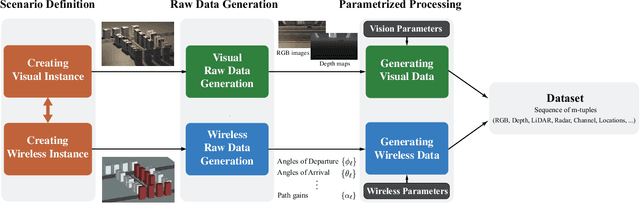

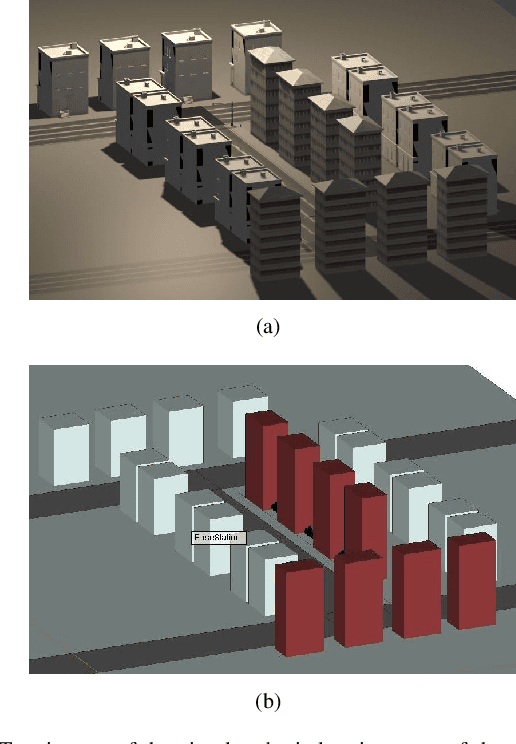

Abstract:The growing role that artificial intelligence and specifically machine learning is playing in shaping the future of wireless communications has opened up many new and intriguing research directions. This paper motivates the research in the novel direction of *vision-aided wireless communications*, which aims at leveraging visual sensory information in tackling wireless communication problems. Like any new research direction driven by machine learning, obtaining a development dataset poses the first and most important challenge to vision-aided wireless communications. This paper addresses this issue by introducing the Vision-Wireless (ViWi) dataset framework. It is developed to be a parametric, systematic, and scalable data generation framework. It utilizes advanced 3D-modeling and ray-tracing software to generate high-fidelity synthetic wireless and vision data samples for the same scenes. The result is a framework that not only offers a way to generate training and testing datasets but also helps provide a common ground on which the quality of different machine learning-powered solutions could be assessed.
