Andrew Hredzak

DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset

Nov 17, 2022

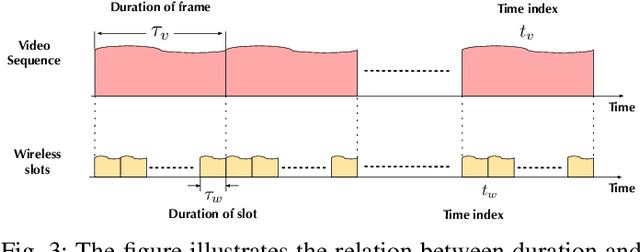

Abstract: This article presents the DeepSense 6G dataset, which is a large-scale dataset based on real-world measurements of co-existing multi-modal sensing and communication data. The DeepSense 6G dataset is built to advance deep learning research in a wide range of applications at the intersection of multi-modal sensing, communication, and positioning. This article provides a detailed overview of the DeepSense dataset structure, adopted testbeds, data collection and processing methodology, deployment scenarios, and example applications, with the objective of facilitating the adoption and reproducibility of multi-modal sensing and communication datasets.

Millimeter Wave Drones with Cameras: Computer Vision Aided Wireless Beam Prediction

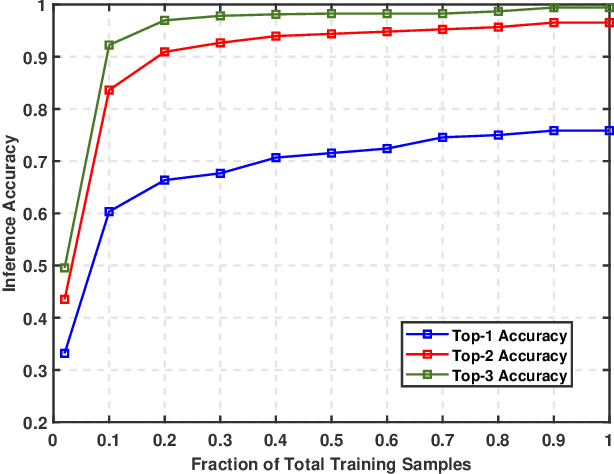

Nov 14, 2022

Abstract: Millimeter wave (mmWave) and terahertz (THz) drones have the potential to enable several futuristic applications such as coverage extension, enhanced security monitoring, and disaster management. However, these drones need to deploy large antenna arrays and use narrow directive beams to maintain a sufficient link budget. The large beam training overhead associated with these arrays makes adjusting these narrow beams challenging for highly-mobile drones. To address these challenges, this paper proposes a vision-aided machine learning-based approach that leverages visual data collected from cameras installed on the drones to enable fast and accurate beam prediction. Further, to facilitate the evaluation of the proposed solution, we build a synthetic drone communication dataset consisting of co-existing wireless and visual data. The proposed vision-aided solution achieves a top-1 beam prediction accuracy of approximately 91% and close to 100% top-3 accuracy. These results highlight the efficacy of the proposed solution towards enabling highly mobile mmWave/THz drone communication.
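The top-1 and top-3 figures quoted in these abstracts are top-k accuracies: a prediction counts as correct when the ground-truth best beam is among the k beams the model scores highest. The following is a minimal sketch of that metric, not the authors' evaluation code. The function name `top_k_accuracy`, the 4-beam codebook, and the toy scores are all illustrative assumptions.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]],
    best_beams: Sequence[int],
    k: int,
) -> float:
    """Fraction of samples whose true beam index is among the k top-scored beams."""
    if len(scores) != len(best_beams):
        raise ValueError("scores and best_beams must have the same length")
    if not scores:
        raise ValueError("at least one sample is required")

    hits = 0
    for sample_scores, true_beam in zip(scores, best_beams):
        # Rank beam indices from highest to lowest predicted score.
        ranked = sorted(
            range(len(sample_scores)),
            key=lambda beam: sample_scores[beam],
            reverse=True,
        )
        if true_beam in ranked[:k]:
            hits += 1
    return hits / len(scores)


# Hypothetical model outputs: one score per beam for each of three samples.
toy_scores = [
    [0.10, 0.70, 0.20, 0.00],
    [0.50, 0.10, 0.30, 0.05],
    [0.20, 0.30, 0.40, 0.10],
]
# Hypothetical ground-truth best beam index for each sample.
toy_best_beams = [1, 2, 3]

top1 = top_k_accuracy(toy_scores, toy_best_beams, k=1)
top3 = top_k_accuracy(toy_scores, toy_best_beams, k=3)
print(f"top-1: {top1:.2f}, top-3: {top3:.2f}")
```

In this toy data only the first sample is a top-1 hit, while the first two are top-3 hits, which illustrates why top-3 accuracy is always at least as high as top-1.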

Towards Real-World 6G Drone Communication: Position and Camera Aided Beam Prediction

May 24, 2022

Abstract: Millimeter-wave (mmWave) and terahertz (THz) communication systems typically deploy large antenna arrays to guarantee sufficient receive signal power. The beam training overhead associated with these arrays, however, makes it hard for these systems to support highly-mobile applications such as drone communication. To overcome this challenge, this paper proposes a machine learning-based approach that leverages additional sensory data, such as visual and positional data, for fast and accurate mmWave/THz beam prediction. The developed framework is evaluated on a real-world multi-modal mmWave drone communication dataset comprising co-existing camera, practical GPS, and mmWave beam training data. The proposed sensing-aided solution achieves a top-1 beam prediction accuracy of 86.32% and close to 100% top-3 and top-5 accuracies, while considerably reducing the beam training overhead. This highlights a promising solution for enabling highly mobile 6G drone communications.

Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets

Nov 15, 2021

Abstract: Enabling highly-mobile millimeter wave (mmWave) and terahertz (THz) wireless communication applications requires overcoming the critical challenges associated with the large antenna arrays deployed in these systems. In particular, adjusting the narrow beams of these antenna arrays typically incurs high beam training overhead that scales with the number of antennas. To address these challenges, this paper proposes a multi-modal machine learning-based approach that leverages positional and visual (camera) data collected from the wireless communication environment for fast beam prediction. The developed framework has been tested on a real-world vehicular dataset comprising practical GPS, camera, and mmWave beam training data. The results show that the proposed approach achieves a top-1 beam prediction accuracy above roughly 75% and close to 100% top-3 beam prediction accuracy in realistic communication scenarios.

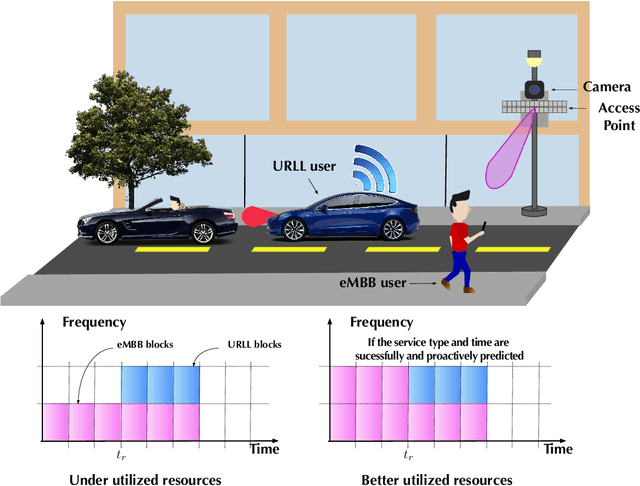

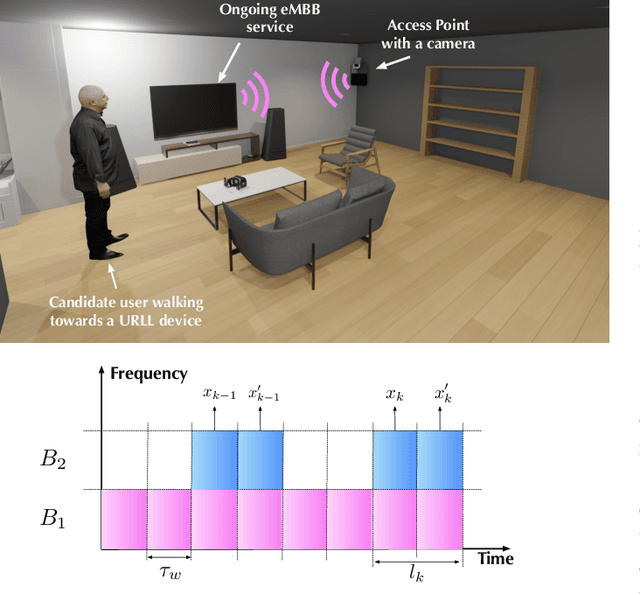

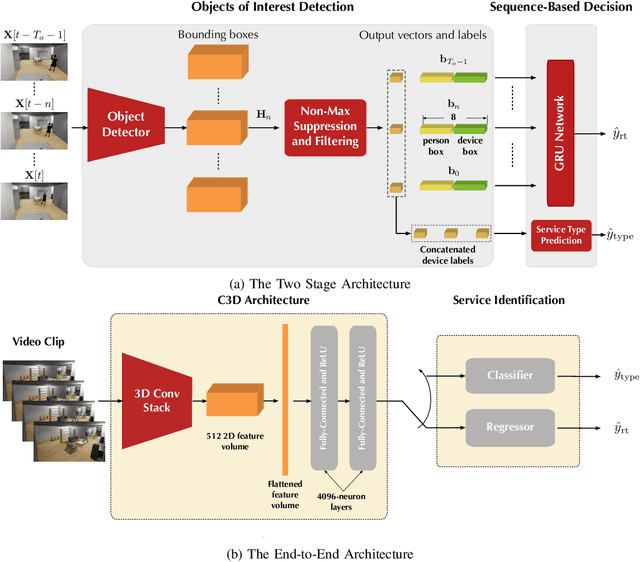

Computer Vision Aided URLL Communications: Proactive Service Identification and Coexistence

Mar 18, 2021

Abstract: The support of coexisting ultra-reliable and low-latency (URLL) and enhanced Mobile BroadBand (eMBB) services is a key challenge for current and future wireless communication networks. These two types of services introduce strict, and at times conflicting, resource allocation requirements that may result in a power struggle between reliability, latency, and resource utilization in wireless networks. The difficulty in addressing this challenge can be traced back to the predominantly reactive approach to allocating wireless resources. This allocation is carried out based on received service requests and global network statistics, which may not incorporate a sense of *proaction*. Therefore, this paper proposes a novel framework termed *service identification* to develop novel proactive resource allocation algorithms. The developed framework is based on visual data (captured, for example, by RGB cameras) and deep learning (e.g., deep neural networks). The ultimate objective of this framework is to equip future wireless networks with the ability to analyze user behavior, anticipate incoming services, and perform proactive resource allocation. To demonstrate the potential of the proposed framework, a wireless network scenario with two coexisting URLL and eMBB services is considered, and two deep learning algorithms are designed to utilize RGB video frames and predict the incoming service type and its request time. An evaluation dataset based on the considered scenario is developed and used to evaluate the performance of the two algorithms. The results confirm the anticipated value of proaction to wireless networks; the proposed models enable efficient network performance, ensuring more than 85% utilization of the network resources at approximately 98% reliability. This highlights a promising direction for future vision-aided wireless communication networks.

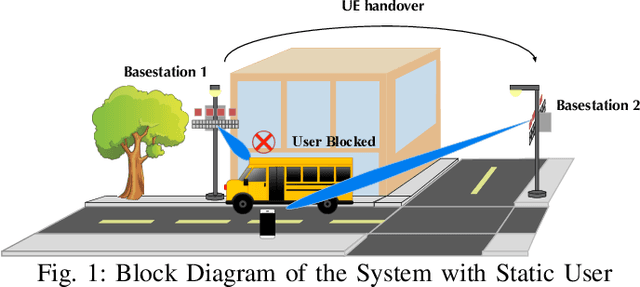

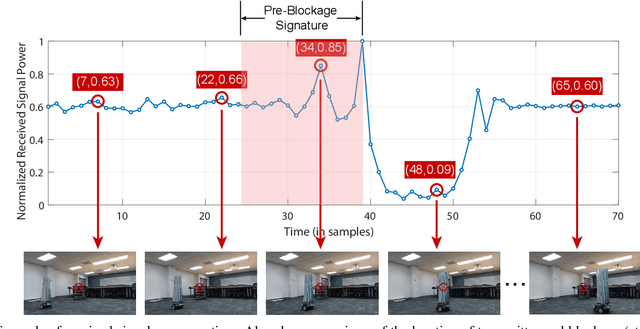

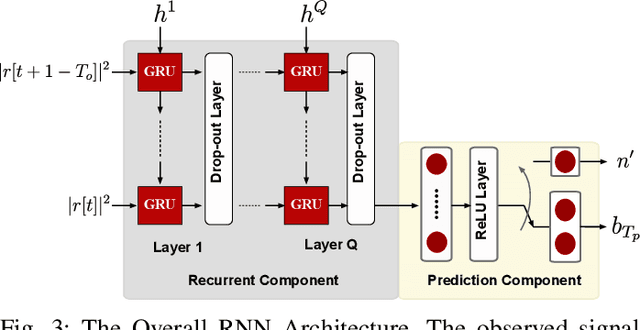

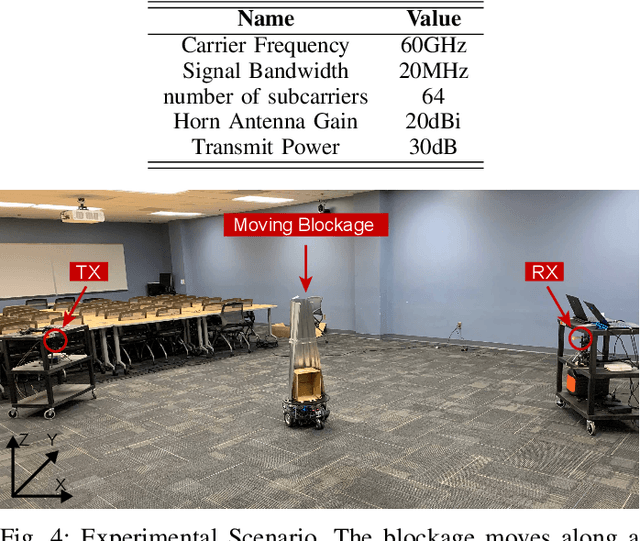

Deep Learning for Moving Blockage Prediction using Real Millimeter Wave Measurements

Feb 08, 2021

Abstract: Millimeter wave (mmWave) communication is a key component of 5G and beyond. Harvesting the gains of the large bandwidth and low latency of mmWave systems, however, is challenged by the sensitivity of mmWave signals to blockages; a sudden blockage in the line of sight (LOS) link leads to abrupt disconnection, which affects the reliability of the network. In addition, searching for an alternative base station to re-establish the link could result in needless latency overhead. In this paper, we address these challenges collectively by utilizing machine learning to anticipate dynamic blockages proactively. In the proposed approach, a machine learning algorithm learns to predict future blockages by observing what we refer to as the pre-blockage signature. To evaluate our proposed approach, we build a mmWave communication setup with a moving blockage and collect a dataset of received power sequences. Simulation results on a real dataset show that blockage occurrence could be predicted with more than 85% accuracy and the exact time instance of blockage occurrence can be obtained with low error. This highlights the potential of the proposed solution for dynamic blockage prediction and proactive hand-off, which enhances the reliability and latency of future wireless networks.

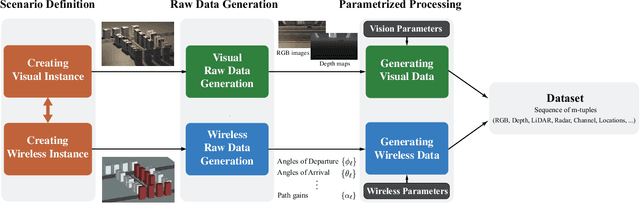

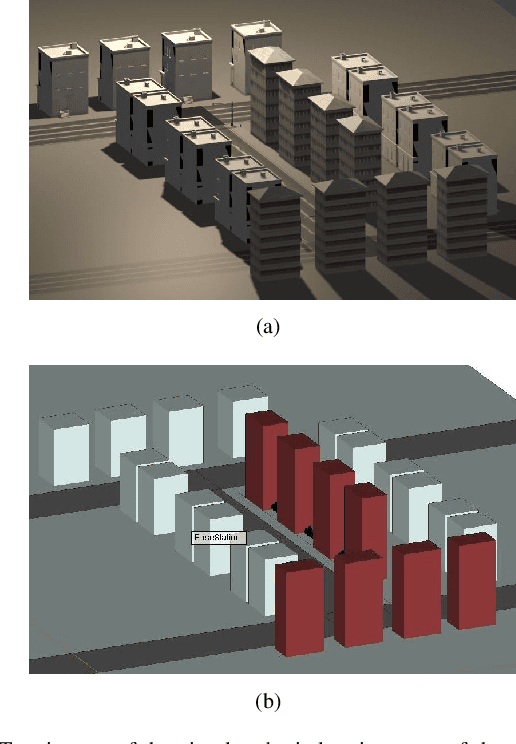

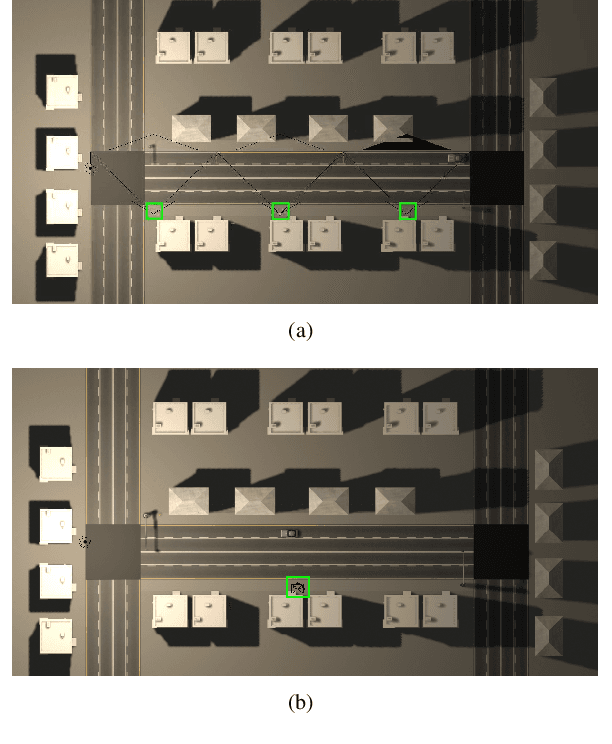

ViWi: A Deep Learning Dataset Framework for Vision-Aided Wireless Communications

Nov 14, 2019

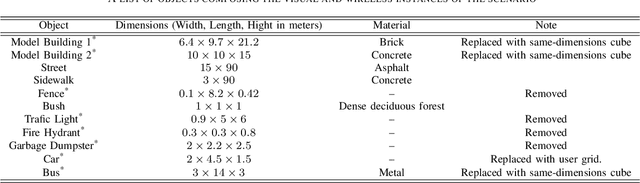

Abstract: The growing role that artificial intelligence, and specifically machine learning, is playing in shaping the future of wireless communications has opened up many new and intriguing research directions. This paper motivates research in the novel direction of *vision-aided wireless communications*, which aims at leveraging visual sensory information in tackling wireless communication problems. Like any new research direction driven by machine learning, obtaining a development dataset poses the first and most important challenge to vision-aided wireless communications. This paper addresses this issue by introducing the Vision-Wireless (ViWi) dataset framework. It is developed to be a parametric, systematic, and scalable data generation framework. It utilizes advanced 3D-modeling and ray-tracing software to generate high-fidelity synthetic wireless and vision data samples for the same scenes. The result is a framework that not only offers a way to generate training and testing datasets but also helps provide a common ground on which the quality of different machine learning-powered solutions could be assessed.
