João Morais

Wireless Dataset Similarity: Measuring Distances in Supervised and Unsupervised Machine Learning

Jan 03, 2026

Abstract:This paper introduces a task- and model-aware framework for measuring similarity between wireless datasets, enabling applications such as dataset selection/augmentation, simulation-to-real (sim2real) comparison, task-specific synthetic data generation, and informing decisions on model training/adaptation to new deployments. We evaluate candidate dataset distance metrics by how well they predict cross-dataset transferability: if two datasets have a small distance, a model trained on one should perform well on the other. We apply the framework to an unsupervised task, channel state information (CSI) compression, using autoencoders. Using metrics based on UMAP embeddings, combined with Wasserstein and Euclidean distances, we achieve Pearson correlations exceeding 0.85 between dataset distances and train-on-one/test-on-another task performance. We also apply the framework to a supervised task, downlink beam prediction, using convolutional neural networks. For this task, we derive a label-aware distance by integrating supervised UMAP and penalties for dataset imbalance. Across both tasks, the resulting distances outperform traditional baselines and consistently exhibit stronger correlations with model transferability, supporting task-relevant comparisons between wireless datasets.
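The evaluation idea in this abstract (score a dataset distance by how well it correlates with train-on-one/test-on-another performance) can be sketched in a few lines. This is only an illustration, not the paper's pipeline: the UMAP step is replaced by hand-written 1-D values standing in for embeddings, the dataset names and cross-dataset losses are made-up toy numbers, and the helper names `wasserstein_1d` and `pearson` are hypothetical.

```python
import math

def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-sized 1-D samples."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Assumption: toy 1-D values standing in for low-dimensional embeddings.
datasets = {
    "A": [0.0, 0.1, 0.2, 0.3],
    "B": [0.1, 0.2, 0.3, 0.4],
    "C": [1.0, 1.1, 1.2, 1.3],
}

# Assumption: made-up losses for "train on first, test on second".
cross_loss = {("A", "B"): 0.05, ("A", "C"): 0.60, ("B", "C"): 0.50}

pairs = sorted(cross_loss)
distances = [wasserstein_1d(datasets[s], datasets[t]) for s, t in pairs]
losses = [cross_loss[p] for p in pairs]

# A useful distance should correlate strongly with the transfer loss.
score = pearson(distances, losses)
print(f"Pearson correlation between distance and loss: {score:.3f}")
```

A candidate metric with a higher correlation would be preferred under this criterion; in practice many more dataset pairs are needed for the correlation to be meaningful.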

Comparing Stochastic and Ray-tracing Datasets in Machine Learning for Wireless Applications

Dec 13, 2025

Abstract:Machine learning for wireless systems is commonly studied using standardized stochastic channel models (e.g., TDL/CDL/UMa) because of their legacy in wireless communication standardization and their ability to generate data at scale. However, some of their structural assumptions may diverge from real-world propagation. This paper asks when these models are sufficient and when ray-traced (RT) data - a proxy for the real world - provides tangible benefits. To answer these questions, we conduct an empirical study on two representative tasks: CSI compression and temporal channel prediction. Models are trained and evaluated using in-domain, cross-domain, and small-data fine-tuning protocols. Across settings, we observe that stochastic-only evaluation may over- or under-estimate performance relative to RT. These findings support a task-aware recipe where stochastic models can be leveraged for scalable pre-training and for tasks that do not rely on strong spatiotemporal coupling. When that coupling matters, pre-training and evaluation should be grounded in spatially consistent or geometrically similar RT scenarios. This study provides initial guidance to inform future discussions on benchmarking and standardization.

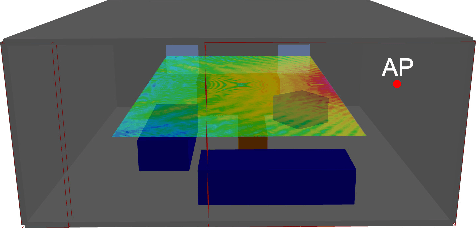

Localization in Digital Twin MIMO Networks: A Case for Massive Fingerprinting

Mar 14, 2024

Abstract:Localization in outdoor wireless systems typically requires transmitting specific reference signals to estimate distance (trilateration methods) or angle (triangulation methods). These cause overhead on communication, need a LoS link to work well, and require multiple base stations, often imposing synchronization or specific hardware requirements. Fingerprinting has none of these drawbacks, but building its database requires high human effort to collect real-world measurements. For a long time, this issue limited the size of databases and thus their performance. This work proposes significantly reducing human effort in building fingerprinting databases by populating them with digital twin RF maps. These RF maps are built from ray-tracing simulations on a digital replica of the environment across several frequency bands and beamforming configurations. Online user fingerprints are then matched against this spatial database. The approach was evaluated with practical simulations using realistic propagation models and user measurements. Our experiments show sub-meter localization errors at an NLoS location 95% of the time using sensible user measurement report sizes. Results highlight the promising potential of the proposed digital twin approach for ubiquitous wide-area 6G localization.
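The matching step described above (comparing an online user fingerprint against a spatial database) can be illustrated with a nearest-neighbor lookup. This is a minimal sketch under stated assumptions: the abstract does not specify the matching rule, so Euclidean distance is assumed here, and the map entries, the report values, and the function name `match_fingerprint` are hypothetical.

```python
import math

def match_fingerprint(rf_map, report):
    """Return the map position whose stored fingerprint is closest to the report."""
    return min(rf_map, key=lambda pos: math.dist(rf_map[pos], report))

# Assumption: toy RF map, (x, y) in meters -> received power in dBm per beam.
rf_map = {
    (0.0, 0.0): [-70.0, -85.0, -90.0],
    (1.0, 0.0): [-72.0, -80.0, -88.0],
    (0.0, 1.0): [-90.0, -75.0, -70.0],
}

# Assumption: a made-up user measurement report with one value per beam.
report = [-71.5, -81.0, -88.5]

estimate = match_fingerprint(rf_map, report)
print("Estimated position:", estimate)
```

A denser map gives a finer position grid at the cost of a larger search, which is where populating the database from simulation rather than manual measurement becomes attractive.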

Device-Agnostic Millimeter Wave Beam Selection using Machine Learning

Nov 22, 2022

Abstract:Most research in the area of machine learning-based user beam selection considers a structure where the model proposes appropriate user beams. However, this design requires a specific model for each user-device beam codebook, where a model learned for a device with a particular codebook cannot be reused for another device with a different codebook. Moreover, this design requires training and test samples for each antenna placement configuration/codebook. This paper proposes a device-agnostic beam selection framework that leverages context information to propose appropriate user beams using a generic model and a post-processing unit. The generic neural network predicts the potential angles of arrival, and the post-processing unit maps these directions to beams based on the specific device's codebook. The proposed beam selection framework works well for user devices with antenna configurations/codebooks unseen in the training dataset. Also, the proposed generic network has the option to be trained with a dataset that mixes samples with different antenna configurations/codebooks, which significantly eases the burden of effective model training.
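The split between a generic angle predictor and a device-specific post-processing unit can be sketched as follows. This is an illustration only: the abstract does not say how directions are mapped to beams, so the sketch assumes each beam is described by a single pointing angle and picks the closest one. The codebooks, the predicted angle, and the names `angular_gap` and `angle_to_beam` are hypothetical.

```python
def angular_gap(a_deg, b_deg):
    """Smallest absolute difference between two angles, in degrees."""
    diff = abs(a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)

def angle_to_beam(predicted_aoa_deg, codebook_deg):
    """Index of the codebook beam pointing closest to the predicted angle."""
    return min(
        range(len(codebook_deg)),
        key=lambda i: angular_gap(predicted_aoa_deg, codebook_deg[i]),
    )

# Assumption: two devices with different toy codebooks (beam pointing angles).
codebook_a = [-45.0, -15.0, 15.0, 45.0]
codebook_b = [-60.0, -30.0, 0.0, 30.0, 60.0]

# Assumption: the generic model's output, reused unchanged for both devices.
predicted_aoa = 20.0

beam_a = angle_to_beam(predicted_aoa, codebook_a)
beam_b = angle_to_beam(predicted_aoa, codebook_b)
print("Device A beam index:", beam_a)
print("Device B beam index:", beam_b)
```

Because only the mapping function sees the codebook, supporting a new device in this sketch means supplying a new list of beam angles, with no retraining of the predictor.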

DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset

Nov 17, 2022

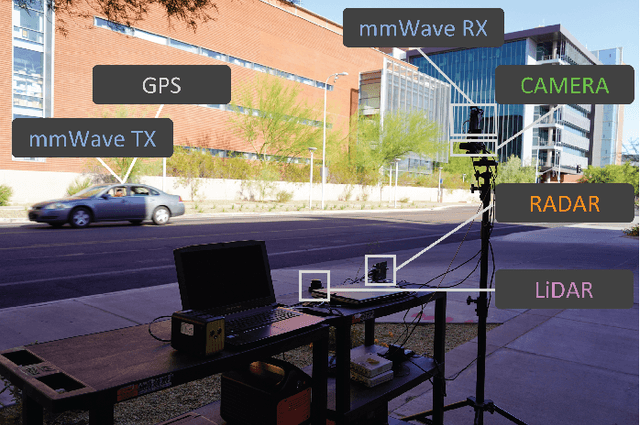

Abstract:This article presents the DeepSense 6G dataset, which is a large-scale dataset based on real-world measurements of co-existing multi-modal sensing and communication data. The DeepSense 6G dataset is built to advance deep learning research in a wide range of applications in the intersection of multi-modal sensing, communication, and positioning. This article provides a detailed overview of the DeepSense dataset structure, adopted testbeds, data collection and processing methodology, deployment scenarios, and example applications, with the objective of facilitating the adoption and reproducibility of multi-modal sensing and communication datasets.

Multi-Modal Beam Prediction Challenge 2022: Towards Generalization

Sep 15, 2022

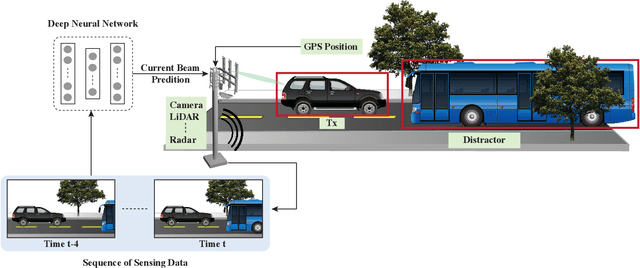

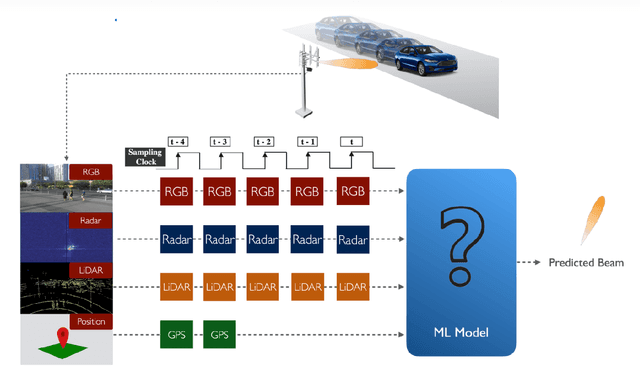

Abstract:Beam management is a challenging task for millimeter wave (mmWave) and sub-terahertz communication systems, especially in scenarios with highly-mobile users. Leveraging external sensing modalities such as vision, LiDAR, radar, position, or a combination of them, to address this beam management challenge has recently attracted increasing interest from both academia and industry. This is mainly motivated by the dependency of the beam direction decision on the user location and the geometry of the surrounding environment -- information that can be acquired from the sensory data. To realize the promised beam management gains, such as the significant reduction in beam alignment overhead, in practice, however, these solutions need to account for important aspects. For example, these multi-modal sensing aided beam selection approaches should be able to generalize their learning to unseen scenarios and should be able to operate in realistic dense deployments. The "Multi-Modal Beam Prediction Challenge 2022: Towards Generalization" competition is offered to provide a platform for investigating these critical questions. In order to facilitate the generalizability study, the competition offers a large-scale multi-modal dataset with co-existing communication and sensing data collected across multiple real-world locations and different times of the day. In this paper, along with the detailed descriptions of the problem statement and the development dataset, we provide a baseline solution that utilizes the user position data to predict the optimal beam indices. The objective of this challenge is to go beyond a simple feasibility study and enable necessary research in this direction, paving the way towards generalizable multi-modal sensing-aided beam management for real-world future communication systems.

Position Aided Beam Prediction in the Real World: How Useful GPS Locations Actually Are?

May 23, 2022

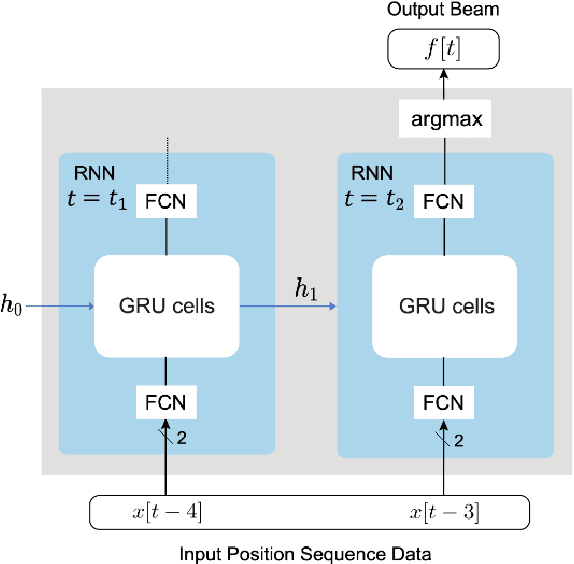

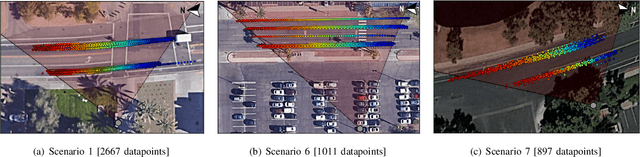

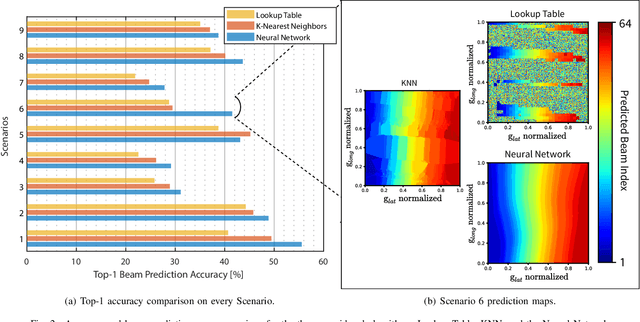

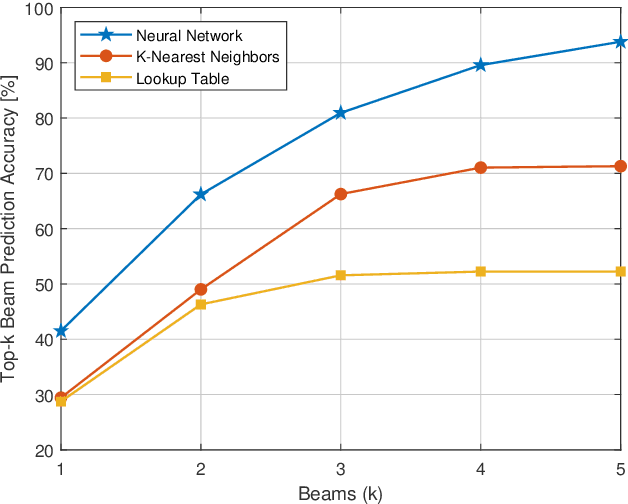

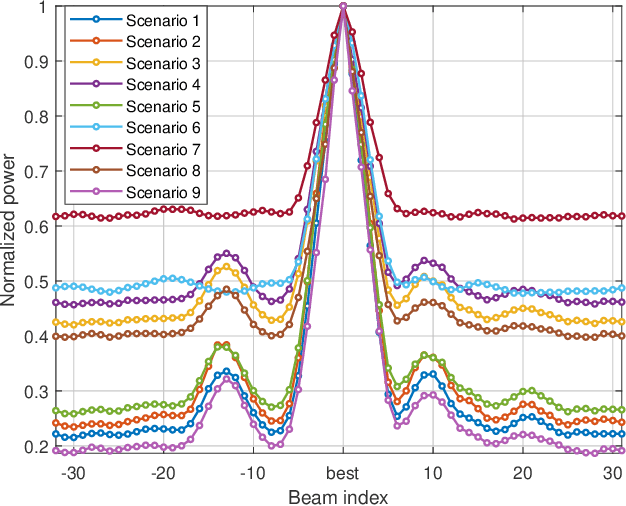

Abstract:Millimeter-wave (mmWave) communication systems rely on narrow beams for achieving sufficient receive signal power. Adjusting these beams is typically associated with large training overhead, which becomes particularly critical for highly-mobile applications. Intuitively, since optimal beam selection can benefit from the knowledge of the positions of communication terminals, there has been increasing interest in leveraging position data to reduce the overhead in mmWave beam prediction. Prior work, however, studied this problem using only synthetic data that generally does not accurately represent real-world measurements. In this paper, we investigate position-aided beam prediction using a real-world large-scale dataset to derive insights into precisely how much overhead can be saved in practice. Furthermore, we analyze which machine learning algorithms perform best, what factors degrade inference performance in real data, and which machine learning metrics are more meaningful in capturing the actual communication system performance.

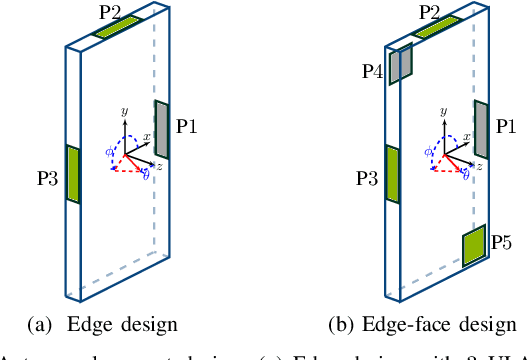

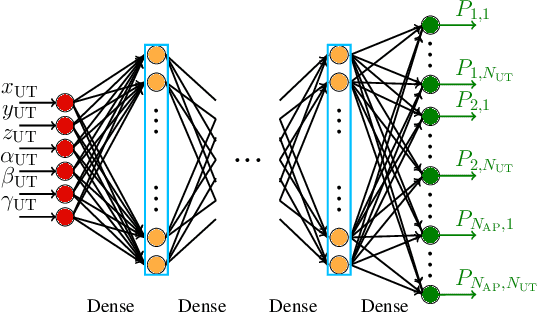

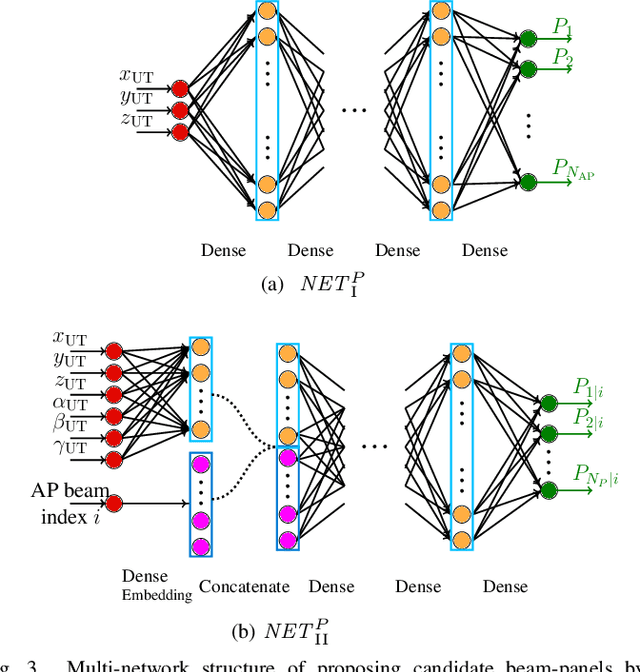

Location- and Orientation-aware Millimeter Wave Beam Selection for Multi-Panel Antenna Devices

Mar 22, 2022

Abstract:While initial beam alignment (BA) in millimeter-wave networks has been thoroughly investigated, most research assumes a simplified terminal model based on uniform linear/planar arrays with isotropic antennas. Devices with non-isotropic antenna elements need multiple panels to provide good spherical coverage, and exhaustive search over all beams of all the panels leads to unacceptable overhead. This paper proposes a location- and orientation-aware solution that manages the initial BA for multi-panel devices. We present three different neural network structures that provide efficient BA with a wide range of training dataset sizes, complexity, and feedback message sizes. Our proposed methods outperform the generalized inverse fingerprinting and hierarchical panel-beam selection methods for two considered edge and edge-face antenna placement designs.

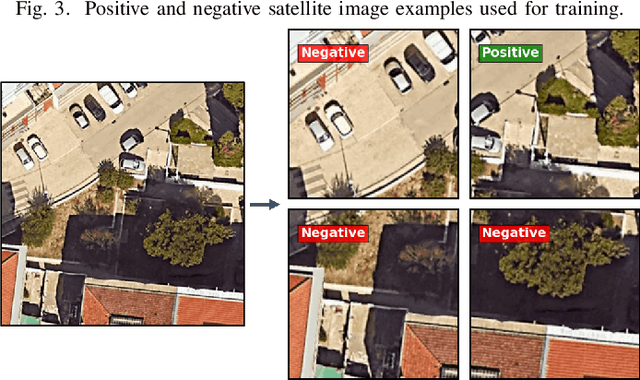

Parkour Spot ID: Feature Matching in Satellite and Street view images using Deep Learning

Jan 02, 2022

Abstract:How to find places that are not indexed by Google Maps? We propose an intuitive method and framework to locate places based on their distinctive spatial features. The method uses satellite and street view images in machine vision approaches to classify locations. If we can classify locations, we just need to repeat the classification for non-overlapping locations in our area of interest. We assess the proposed system in finding Parkour spots on the campus of Arizona State University. The results are very satisfactory, having found more than 25 new Parkour spots, with a rate of true positives above 60%.

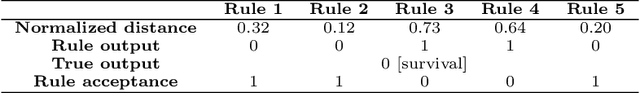

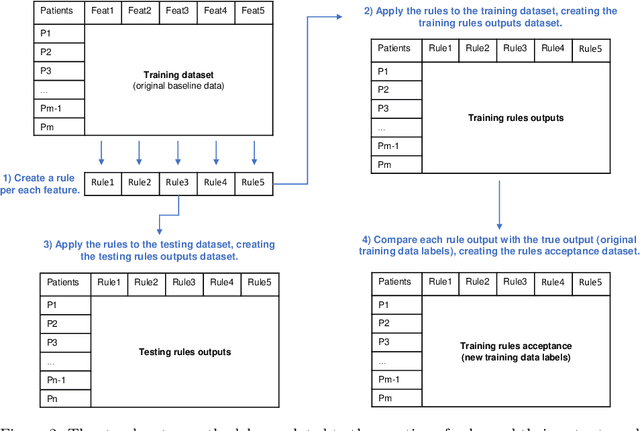

A New Approach for Interpretability and Reliability in Clinical Risk Prediction: Acute Coronary Syndrome Scenario

Oct 15, 2021

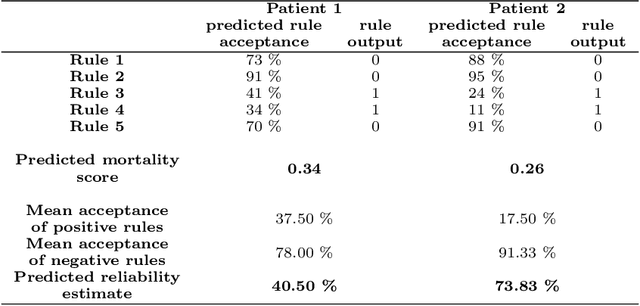

Abstract:We intend to create a new risk assessment methodology that combines the best characteristics of both risk score and machine learning models. More specifically, we aim to develop a method that, besides having a good performance, offers a personalized model and outcome for each patient, presents high interpretability, and incorporates an estimation of the prediction reliability which is not usually available. By combining these features in the same approach we expect that it can boost the confidence of physicians to use such a tool in their daily activity. In order to achieve the mentioned goals, a three-step methodology was developed: several rules were created by dichotomizing risk factors; such rules were trained with a machine learning classifier to predict the acceptance degree of each rule (the probability that the rule is correct) for each patient; that information was combined and used to compute the risk of mortality and the reliability of such prediction. The methodology was applied to a dataset of patients admitted with any type of acute coronary syndrome (ACS), to assess the 30-day all-cause mortality risk. The performance was compared with state-of-the-art approaches: logistic regression (LR), artificial neural network (ANN), and clinical risk score model (Global Registry of Acute Coronary Events - GRACE). The proposed approach achieved testing results identical to the standard LR, but offers superior interpretability and personalization; it also significantly outperforms the GRACE risk model and the standard ANN model. The calibration curve also suggests a very good generalization ability of the obtained model as it approaches the ideal curve. Finally, the reliability estimation of individual predictions showed a strong correlation with the misclassification rate. Those properties may have a beneficial application in other clinical scenarios as well. [abridged]

* Accepted for publication in the Artificial Intelligence in Medicine journal. Abstract abridged to respect the arXiv character limit.
