José D. Martín-Guerrero

TrackFormers: In Search of Transformer-Based Particle Tracking for the High-Luminosity LHC Era

Jul 09, 2024

Abstract: High-Energy Physics experiments are facing a multi-fold data increase with every new iteration. This is certainly the case for the upcoming High-Luminosity LHC upgrade. Such increased data processing requirements force revisions to almost every step of the data processing pipeline. One such step in need of an overhaul is the task of particle track reconstruction, a.k.a. tracking. A Machine Learning-assisted solution is expected to provide significant improvements, since the most time-consuming step in tracking is the assignment of hits to particles or track candidates. This is the topic of this paper. We take inspiration from large language models. As such, we consider two approaches: the prediction of the next word in a sentence (next hit point in a track), as well as the one-shot prediction of all hits within an event. In an extensive design effort, we have experimented with three models based on the Transformer architecture and one model based on the U-Net architecture, performing track association predictions for collision event hit points. In our evaluation, we consider a spectrum of simple to complex representations of the problem, eliminating designs with lower metrics early on. We report extensive results, covering both prediction accuracy (score) and computational performance. We have made use of the REDVID simulation framework, as well as reductions applied to the TrackML data set, to compose five data sets from simple to complex, for our experiments. The results highlight distinct advantages among different designs in terms of prediction accuracy and computational performance, demonstrating the efficiency of our methodology. Most importantly, the results show the viability of a one-shot encoder-classifier based Transformer solution as a practical approach for the task of tracking.

Novel Approaches for ML-Assisted Particle Track Reconstruction and Hit Clustering

May 27, 2024

Abstract: Track reconstruction is a vital aspect of High-Energy Physics (HEP) and plays a critical role in major experiments. In this study, we delve into unexplored avenues for particle track reconstruction and hit clustering. Firstly, we enhance the algorithmic design effort by utilising a simplified simulator (REDVID) to generate training data that is specifically composed for simplicity. We demonstrate the effectiveness of this data in guiding the development of optimal network architectures. Additionally, we investigate the application of image segmentation networks for this task, exploring their potential for accurate track reconstruction. Moreover, we approach the task from a different perspective by treating it as a hit sequence to track sequence translation problem. Specifically, we explore the utilisation of Transformer architectures for tracking purposes. Our preliminary findings are covered in detail. By considering this novel approach, we aim to uncover new insights and potential advancements in track reconstruction. This research sheds light on previously unexplored methods and provides valuable insights for the field of particle track reconstruction and hit clustering in HEP.

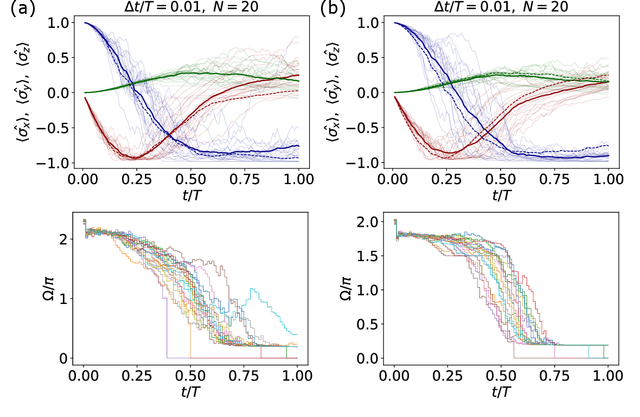

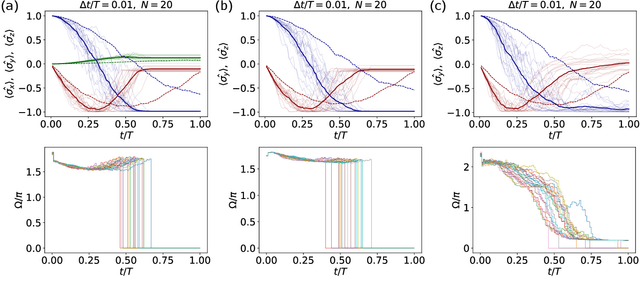

Physics-Informed Neural Networks for an optimal counterdiabatic quantum computation

Sep 13, 2023

Abstract: We introduce a novel methodology that leverages the strength of Physics-Informed Neural Networks (PINNs) to address the counterdiabatic (CD) protocol in the optimization of quantum circuits composed of systems with $N_{Q}$ qubits. The primary objective is to utilize physics-inspired deep learning techniques to accurately solve the time evolution of the different physical observables within the quantum system. To accomplish this objective, we embed the necessary physical information into an underlying neural network to effectively tackle the problem. In particular, we impose the hermiticity condition on all physical observables and make use of the principle of least action, guaranteeing the acquisition of the most appropriate counterdiabatic terms based on the underlying physics. The proposed approach offers a dependable alternative to address the CD driving problem, free from the constraints typically encountered in previous methodologies relying on classical numerical approximations. Our method provides a general framework to obtain optimal results from the physical observables relevant to the problem, including the external parameterization in time known as the scheduling function, the gauge potential or operator involving the non-adiabatic terms, as well as the temporal evolution of the energy levels of the system, among others. The main applications of this methodology have been the $\mathrm{H_{2}}$ and $\mathrm{LiH}$ molecules, represented by 2-qubit and 4-qubit systems, respectively, employing the STO-3G basis. The presented results demonstrate the successful derivation of a desirable decomposition for the non-adiabatic terms, achieved through a linear combination of Pauli operators. This attribute confers significant advantages to its practical implementation within quantum computing algorithms.

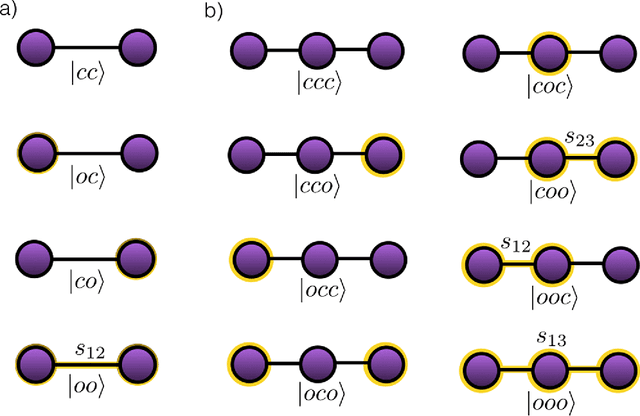

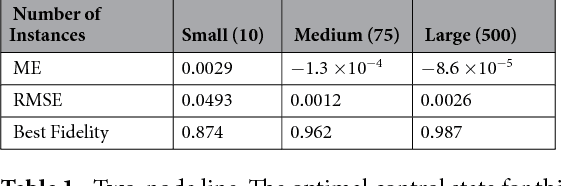

Machine Learning for maximizing the memristivity of single and coupled quantum memristors

Sep 10, 2023

Abstract: We propose machine learning (ML) methods to characterize the memristive properties of single and coupled quantum memristors. We show that maximizing the memristivity leads to large values in the degree of entanglement of two quantum memristors, unveiling the close relationship between quantum correlations and memory. Our results strengthen the possibility of using quantum memristors as key components of neuromorphic quantum computing.

Active Learning in Physics: From 101, to Progress, and Perspective

Jul 08, 2023

Abstract: Active Learning (AL) is a family of machine learning (ML) algorithms that predates the current era of artificial intelligence. Unlike traditional approaches that require labeled samples for training, AL iteratively selects unlabeled samples to be annotated by an expert. This protocol aims to prioritize the most informative samples, leading to improved model performance compared to training with all labeled samples. In recent years, AL has gained increasing attention, particularly in the field of physics. This paper presents a comprehensive and accessible introduction to the theory of AL, reviewing the latest advancements across various domains. Additionally, we explore the potential integration of AL with quantum ML, envisioning a synergistic fusion of these two fields rather than viewing AL as a mere extension of classical ML into the quantum realm.
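
The query loop described in this abstract (select an unlabeled sample, have an expert annotate it, update the model) can be illustrated with a toy sketch. This is not taken from the paper: it assumes a pool of 1D points, a hypothetical `oracle` function standing in for the expert, and a threshold classifier whose most uncertain point is the one closest to the current boundary estimate. It also assumes the smallest pool point has label 0 and the largest has label 1.

```python
def estimate_boundary(labeled):
    """Midpoint between the largest point labeled 0 and the smallest labeled 1."""
    lo = max(x for x, y in labeled.items() if y == 0)
    hi = min(x for x, y in labeled.items() if y == 1)
    return (lo + hi) / 2


def active_learn_threshold(pool, oracle, budget):
    """Toy pool-based uncertainty sampling for a 1D threshold classifier.

    pool   : iterable of floats (unlabeled samples)
    oracle : hypothetical expert, maps a sample to label 0 or 1
    budget : number of queries allowed after the two seed labels
    Assumes oracle(min(pool)) == 0 and oracle(max(pool)) == 1.
    """
    unlabeled = sorted(pool)
    labeled = {}
    # Seed the model with the two extreme points.
    for x in (unlabeled.pop(0), unlabeled.pop()):
        labeled[x] = oracle(x)
    for _ in range(budget):
        if not unlabeled:
            break
        boundary = estimate_boundary(labeled)
        # Most informative sample: the one the current model is least sure about.
        query = min(unlabeled, key=lambda x: abs(x - boundary))
        unlabeled.remove(query)
        labeled[query] = oracle(query)
    return estimate_boundary(labeled), len(labeled)


if __name__ == "__main__":
    pool = [i / 100 for i in range(101)]
    boundary, n_labeled = active_learn_threshold(pool, lambda x: int(x >= 0.37), 12)
    print(boundary, n_labeled)
```

In this toy setting the loop behaves like a bisection search, so a handful of labels locates the boundary that would otherwise require labeling the whole pool.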

Quantum Stream Learning

Dec 13, 2021

Abstract: The exotic nature of quantum mechanics makes machine learning (ML) in the quantum realm different from its classical applications. ML can be used for knowledge discovery using information continuously extracted from a quantum system in a broad range of tasks. The model receives streaming quantum information for learning and decision-making, resulting in instant feedback on the quantum system. As a stream learning approach, we present deep reinforcement learning on streaming data from a continuously measured qubit in the presence of detuning, dephasing, and relaxation. We also investigate how the agent adapts to another quantum noise pattern by transfer learning. Stream learning provides a better understanding of closed-loop quantum control, which may pave the way for advanced quantum technologies.

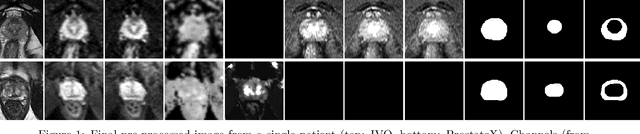

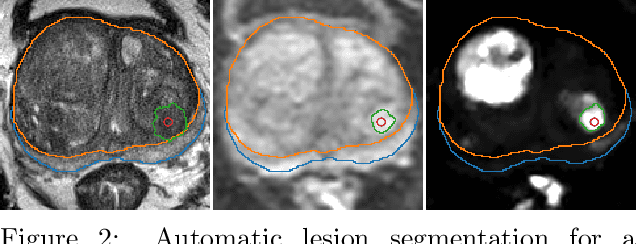

Deep Learning for fully automatic detection, segmentation, and Gleason Grade estimation of prostate cancer in multiparametric Magnetic Resonance Images

Mar 24, 2021

Abstract: The emergence of multi-parametric magnetic resonance imaging (mpMRI) has had a profound impact on the diagnosis of prostate cancer (PCa), which is the most prevalent malignancy in males in the western world, enabling a better selection of patients for confirmation biopsy. However, analyzing these images is complex even for experts, hence opening an opportunity for computer-aided diagnosis systems to seize. This paper proposes a fully automatic system based on Deep Learning that takes a prostate mpMRI from a PCa-suspect patient and, by leveraging the Retina U-Net detection framework, locates PCa lesions, segments them, and predicts their most likely Gleason grade group (GGG). It uses 490 mpMRIs for training/validation, and 75 patients for testing from two different datasets: ProstateX and IVO (Valencia Oncology Institute Foundation). In the test set, it achieves an excellent lesion-level AUC/sensitivity/specificity for the GGG$\geq$2 significance criterion of 0.96/1.00/0.79 for the ProstateX dataset, and 0.95/1.00/0.80 for the IVO dataset. Evaluated at a patient level, the results are 0.87/1.00/0.375 in ProstateX, and 0.91/1.00/0.762 in IVO. Furthermore, on the online ProstateX grand challenge, the model obtained an AUC of 0.85 (0.87 when trained only on the ProstateX data, tying with the original winner of the challenge). For expert comparison, IVO radiologist's PI-RADS 4 sensitivity/specificity were 0.88/0.56 at a lesion level, and 0.85/0.58 at a patient level. Additional subsystems for automatic prostate zonal segmentation and mpMRI non-rigid sequence registration were also employed to produce the final fully automated system. The code for the ProstateX-trained system has been made openly available at https://github.com/OscarPellicer/prostate_lesion_detection. We hope that this will represent a landmark for future research to use, compare and improve upon.

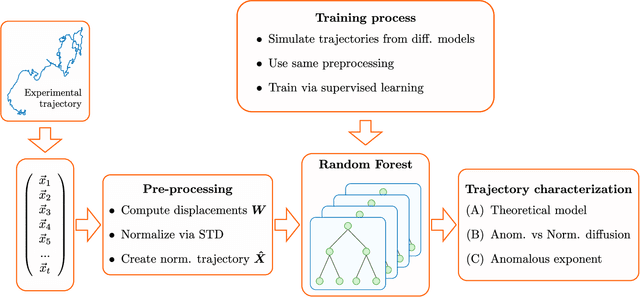

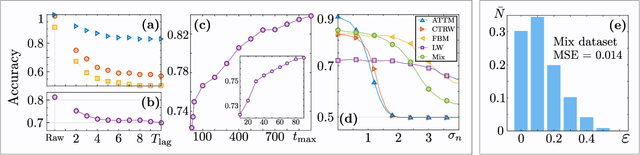

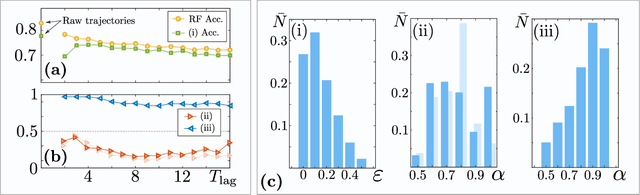

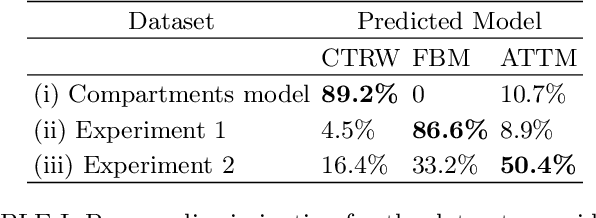

Machine learning method for single trajectory characterization

Mar 07, 2019

Abstract: In order to study transport in complex environments, it is extremely important to determine the physical mechanism underlying diffusion, and precisely characterize its nature and parameters. Often, this task is strongly impacted by data consisting of trajectories with short length and limited localization precision. In this paper, we propose a machine learning method based on a random forest architecture, which is able to associate even very short trajectories with the underlying diffusion mechanism with high accuracy. In addition, the method is able to classify the motion according to normal or anomalous diffusion, and determine its anomalous exponent with a small error. The method provides highly accurate outputs even when working with very short trajectories and in the presence of experimental noise. We further demonstrate the application of transfer learning to experimental and simulated data not included in the training/testing dataset. This allows for a full, high-accuracy characterization of experimental trajectories without the need for any prior information.
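
For readers unfamiliar with the anomalous exponent mentioned above, the following sketch shows the conventional baseline estimate, not the random forest method of the paper. It assumes the usual scaling MSD(lag) ∝ lag^α, computes a time-averaged mean squared displacement for a 1D trajectory, and fits α as the slope in log-log space. The function names and the `max_lag` default are illustrative choices.

```python
import math


def time_averaged_msd(x, max_lag):
    """Time-averaged mean squared displacement of a 1D trajectory for lags 1..max_lag."""
    if len(x) <= max_lag:
        raise ValueError("trajectory must be longer than max_lag")
    msd = []
    for lag in range(1, max_lag + 1):
        sq_disp = [(x[i + lag] - x[i]) ** 2 for i in range(len(x) - lag)]
        msd.append(sum(sq_disp) / len(sq_disp))
    return msd


def anomalous_exponent(x, max_lag=10):
    """Least-squares slope of log(MSD) versus log(lag), assuming MSD ~ lag**alpha.

    alpha close to 1 indicates normal diffusion, alpha < 1 subdiffusion,
    alpha > 1 superdiffusion (alpha = 2 for ballistic motion).
    """
    msd = time_averaged_msd(x, max_lag)
    log_t = [math.log(lag) for lag in range(1, max_lag + 1)]
    log_m = [math.log(m) for m in msd]
    mean_t = sum(log_t) / len(log_t)
    mean_m = sum(log_m) / len(log_m)
    cov = sum((t - mean_t) * (m - mean_m) for t, m in zip(log_t, log_m))
    var = sum((t - mean_t) ** 2 for t in log_t)
    return cov / var


if __name__ == "__main__":
    # Ballistic trajectory x(t) = t: MSD(lag) = lag**2, so alpha should be close to 2.
    ballistic = [float(t) for t in range(50)]
    print(anomalous_exponent(ballistic))
```

A fit of this kind becomes unreliable for short, noisy trajectories, which is the regime the abstract targets with a learned classifier instead.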

A Probabilistic framework for Quantum Clustering

Feb 14, 2019

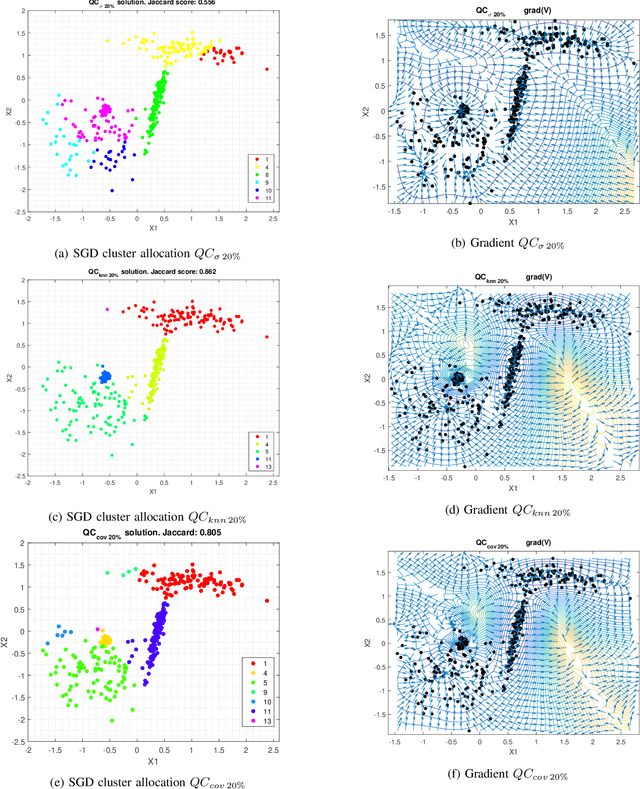

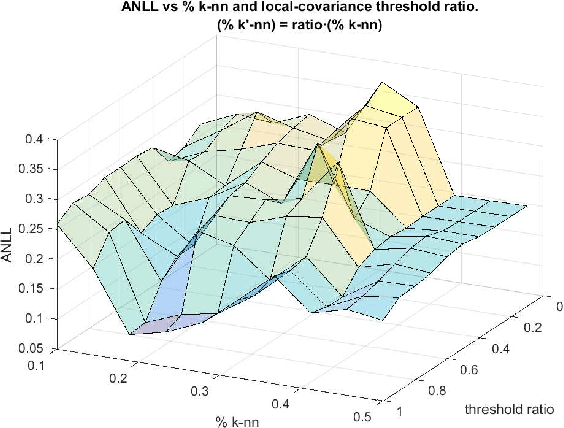

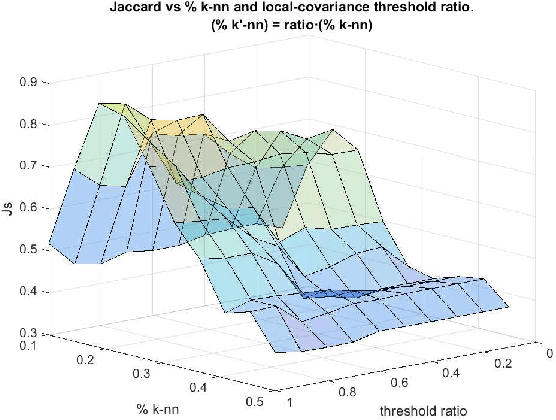

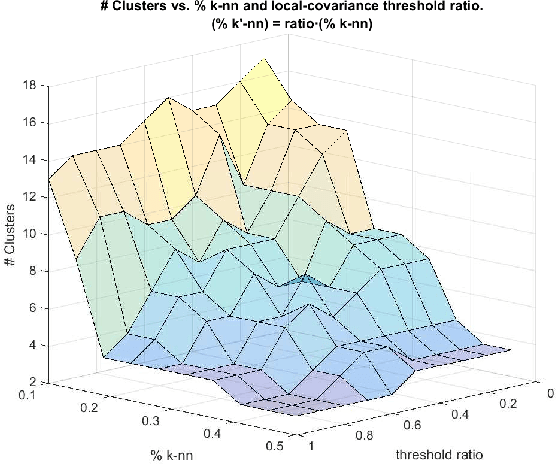

Abstract: Quantum Clustering is a powerful method to detect clusters in data with mixed density. However, it is very sensitive to a length parameter that is inherent to the Schrödinger equation. In addition, linking data points into clusters requires local estimates of covariance that are also controlled by length parameters. This raises the question of how to adjust the control parameters of the Schrödinger equation for optimal clustering. We propose a probabilistic framework that provides an objective function for the goodness-of-fit to the data, enabling the control parameters to be optimised within a Bayesian framework. This naturally yields probabilities of cluster membership and data partitions with specific numbers of clusters. The proposed framework is tested on real and synthetic data sets, assessing its validity by measuring concordance with known data structure by means of the Jaccard score (JS). This work also proposes an objective way to measure performance in unsupervised learning that correlates very well with JS.
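
As an illustration of how concordance between a clustering and a known data structure can be scored, here is a minimal sketch of a pair-counting Jaccard score. The abstract does not state which Jaccard variant is used, so the pair-counting form is an assumption, and the function name `pair_jaccard` is hypothetical.

```python
from itertools import combinations


def pair_jaccard(labels_true, labels_pred):
    """Pair-counting Jaccard score between two partitions of the same points.

    Counts pairs of points placed together in both partitions (both),
    together only in the reference (only_true), or only in the prediction
    (only_pred), and returns both / (both + only_true + only_pred).
    Cluster label values themselves do not matter, only co-membership.
    """
    if len(labels_true) != len(labels_pred):
        raise ValueError("label sequences must have the same length")
    both = only_true = only_pred = 0
    for i, j in combinations(range(len(labels_true)), 2):
        same_true = labels_true[i] == labels_true[j]
        same_pred = labels_pred[i] == labels_pred[j]
        if same_true and same_pred:
            both += 1
        elif same_true:
            only_true += 1
        elif same_pred:
            only_pred += 1
    denom = both + only_true + only_pred
    # Convention chosen here: two all-singleton partitions agree perfectly.
    return both / denom if denom else 1.0


if __name__ == "__main__":
    print(pair_jaccard([0, 0, 1, 1], [1, 1, 0, 0]))  # same partition, relabeled
    print(pair_jaccard([0, 0, 1, 1], [0, 0, 0, 1]))
```

Because the score depends only on which points share a cluster, it is invariant to how the clusters are numbered, which makes it suitable for comparing an unsupervised result against reference labels.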

Supervised Quantum Learning without Measurements

Oct 23, 2017

Abstract: We propose a quantum machine learning algorithm for efficiently solving a class of problems encoded in quantum controlled unitary operations. The central physical mechanism of the protocol is the iteration of a quantum time-delayed equation that introduces feedback in the dynamics and eliminates the necessity of intermediate measurements. The performance of the quantum algorithm is analyzed by comparing the results obtained in numerical simulations with the outcome of classical machine learning methods for the same problem. The use of time-delayed equations enhances the toolbox of the field of quantum machine learning, which may enable unprecedented applications in quantum technologies.
