Jonathan Cruz

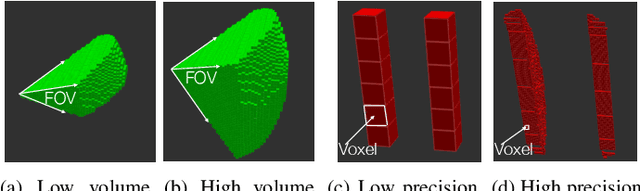

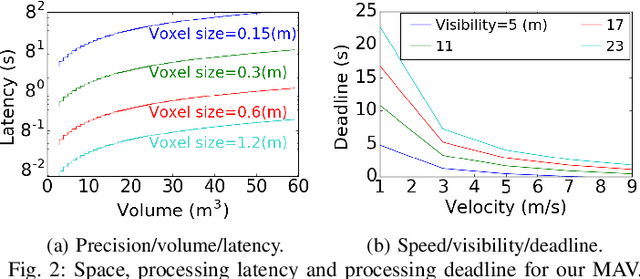

OMU: A Probabilistic 3D Occupancy Mapping Accelerator for Real-time OctoMap at the Edge

May 06, 2022

Abstract: Autonomous machines (e.g., vehicles, mobile robots, drones) require sophisticated 3D mapping to perceive the dynamic environment. However, maintaining a real-time 3D map is expensive in terms of both compute and memory requirements, especially for resource-constrained edge machines. Probabilistic OctoMap is a reliable and memory-efficient 3D dense map model that represents the full environment, with dynamic voxel node pruning and expansion capacity. This paper presents the first efficient accelerator solution, i.e., OMU, to enable real-time probabilistic 3D mapping at the edge. To improve performance, the input map voxels are updated via parallel PE units for data parallelism. Within each PE, the voxels are stored using a specially developed data structure in parallel memory banks. In addition, a pruning address manager is designed within each PE unit to reuse the pruned memory addresses. The proposed 3D mapping accelerator is implemented and evaluated using a commercial 12 nm technology. Compared to the ARM Cortex-A57 CPU in the Nvidia Jetson TX2 platform, the proposed accelerator achieves up to 62$\times$ performance and 708$\times$ energy efficiency improvement. Furthermore, the accelerator provides 63 FPS throughput, more than 2$\times$ higher than the real-time requirement, enabling real-time perception for 3D mapping.
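The two ideas named in the abstract, probabilistic voxel updates with pruning and reuse of pruned memory addresses, can be illustrated with a minimal software sketch. It is not the OMU hardware design: the log-odds update follows the general occupancy-mapping formulation, the probability constants are illustrative values chosen here, and the `PrunedAddressPool` class and all function names are hypothetical.

```python
import math


def logodds(p):
    """Convert a probability to log-odds form."""
    return math.log(p / (1.0 - p))


# Illustrative sensor-model constants (assumed for this sketch).
L_HIT = logodds(0.7)    # increment when a voxel is observed occupied
L_MISS = logodds(0.4)   # (negative) increment when observed free
L_MIN = logodds(0.12)   # lower clamping bound
L_MAX = logodds(0.97)   # upper clamping bound


def update(l, hit):
    """Add the measurement's log-odds to the voxel value, then clamp."""
    l += L_HIT if hit else L_MISS
    return max(L_MIN, min(L_MAX, l))


def can_prune(children):
    """Eight children with identical values can collapse into the parent."""
    return len(children) == 8 and all(c == children[0] for c in children)


class PrunedAddressPool:
    """Hypothetical free-list allocator that reuses pruned addresses."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.next_fresh = 0   # next never-used address
        self.free = []        # addresses released by pruning

    def allocate(self):
        # Prefer an address freed by pruning before touching fresh memory.
        if self.free:
            return self.free.pop()
        if self.next_fresh >= self.capacity:
            raise MemoryError("voxel memory exhausted")
        addr = self.next_fresh
        self.next_fresh += 1
        return addr

    def release(self, addr):
        # Called when a node at `addr` is pruned away.
        self.free.append(addr)
```

Clamping bounds how far a voxel's value can drift, so repeatedly observed voxels saturate at the same value; that is what makes the equality test in `can_prune` succeed in practice. Recycling released addresses keeps the memory footprint from growing as nodes are pruned and later re-expanded.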

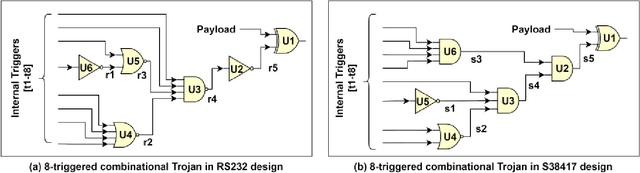

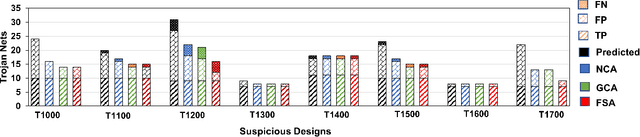

Third-Party Hardware IP Assurance against Trojans through Supervised Learning and Post-processing

Nov 29, 2021

Abstract: System-on-chip (SoC) developers increasingly rely on pre-verified hardware intellectual property (IP) blocks acquired from untrusted third-party vendors. These IPs might contain hidden malicious functionalities or hardware Trojans that compromise the security of the fabricated SoCs. Recently, supervised machine learning (ML) techniques have shown promising capability in identifying nets of potential Trojans in third-party IPs (3PIPs). However, they bring several major challenges. First, they do not guide us to an optimal choice of features that reliably covers diverse classes of Trojans. Second, they require multiple Trojan-free/trusted designs to insert known Trojans and generate a trained model. Even if a set of trusted designs is available for training, the suspect IP could be inherently very different from the set of trusted designs, which may negatively impact the verification outcome. Third, these techniques only identify a set of suspect Trojan nets that require manual intervention to understand the potential threat. In this paper, we present VIPR, a systematic ML-based trust verification solution for 3PIPs that eliminates the need for trusted designs for training. We present a comprehensive framework, associated algorithms, and a tool flow for obtaining an optimal set of features, training a targeted machine learning model, detecting suspect nets, and identifying Trojan circuitry from the suspect nets. We evaluate the framework on several Trust-Hub Trojan benchmarks and provide a comparative analysis of detection performance across different trained models, selection of features, and post-processing techniques. The proposed post-processing algorithms reduce false positives by up to 92.85%.

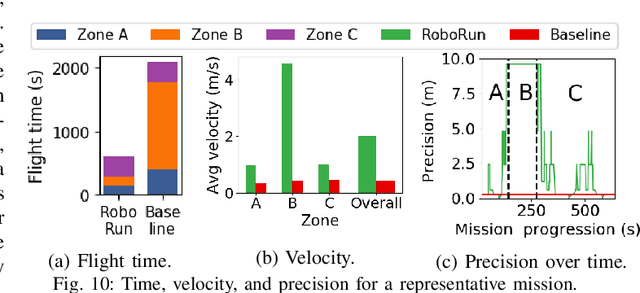

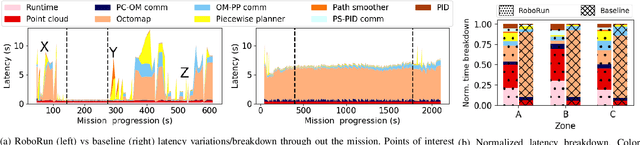

RoboRun: A Robot Runtime to Exploit Spatial Heterogeneity

Aug 30, 2021

Abstract: The limited onboard energy of autonomous mobile robots poses a tremendous challenge for practical deployment. Hence, efficient computing solutions are imperative. A crucial shortcoming of state-of-the-art computing solutions is that they ignore the heterogeneity of the robot's operating environment and make static, worst-case assumptions. As this heterogeneity impacts the system's computing payload, an optimal system must dynamically capture these changes in the environment and adjust its computational resources accordingly. This paper introduces RoboRun, a mobile-robot runtime that dynamically exploits the compute-environment synergy to improve performance and energy. We implement RoboRun in the Robot Operating System (ROS) and evaluate it on autonomous drones. We compare RoboRun against a state-of-the-art static design and show 4.5X and 4X improvements in mission time and energy, respectively, as well as a 36% reduction in CPU utilization.

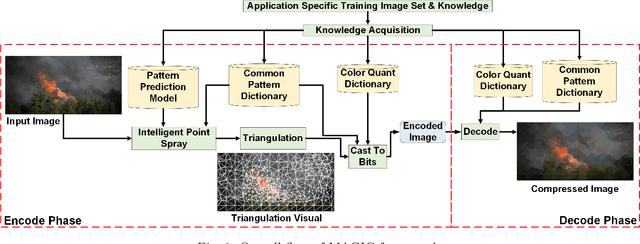

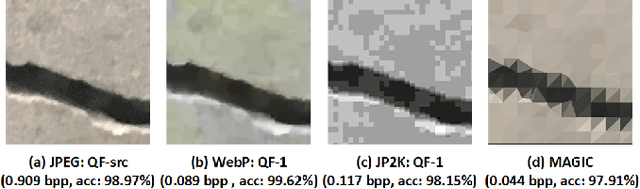

Leveraging Domain Knowledge using Machine Learning for Image Compression in Internet-of-Things

Sep 14, 2020

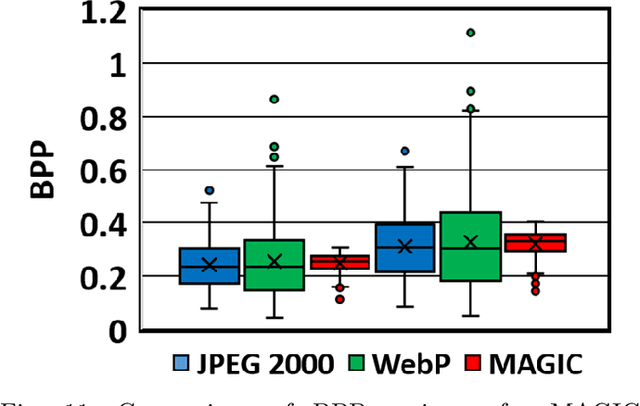

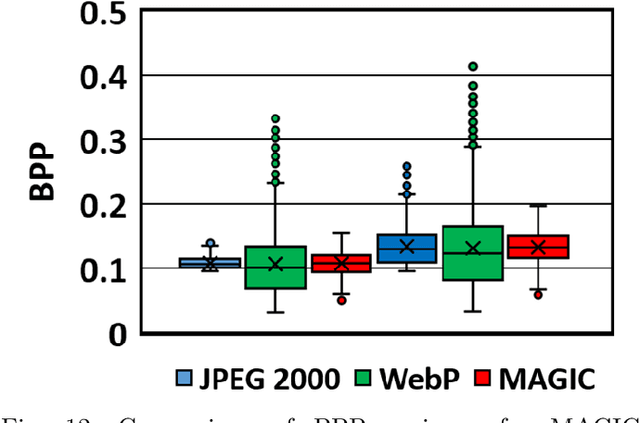

Abstract: The emergent ecosystems of intelligent edge devices in diverse Internet of Things (IoT) applications, from automatic surveillance to precision agriculture, increasingly rely on recording and processing a variety of image data. Due to resource constraints, e.g., energy and communication bandwidth requirements, these applications require compressing the recorded images before transmission. For these applications, image compression commonly requires: (1) maintaining features for coarse-grain pattern recognition instead of the high-level details for human perception, due to machine-to-machine communications; (2) a high compression ratio that leads to improved energy and transmission efficiency; (3) a large dynamic range of compression and an easy trade-off between compression factor and quality of reconstruction to accommodate a wide diversity of IoT applications as well as their time-varying energy/performance needs. To address these requirements, we propose MAGIC, a novel machine learning (ML) guided image compression framework that judiciously sacrifices visual quality to achieve much higher compression than traditional techniques, while maintaining accuracy for coarse-grained vision tasks. The central idea is to capture application-specific domain knowledge and efficiently utilize it in achieving high compression. We demonstrate that the MAGIC framework is configurable across a wide range of compression/quality and is capable of compressing beyond the standard quality factor limits of both JPEG 2000 and WebP. We perform experiments on representative IoT applications using two vision datasets and show up to 42.65x compression at similar accuracy with respect to the source. We highlight low variance in compression rate across images using our technique as compared to JPEG 2000 and WebP.