Swarup Bhunia

Department of Electrical and Computer Engineering, University of Florida, Gainesville, FL

HAMLOCK: HArdware-Model LOgically Combined attacK

Oct 22, 2025

Abstract:The growing use of third-party hardware accelerators (e.g., FPGAs, ASICs) for deep neural networks (DNNs) introduces new security vulnerabilities. Conventional model-level backdoor attacks, which only poison a model's weights to misclassify inputs with a specific trigger, are often detectable because the entire attack logic is embedded within the model (i.e., software), creating a traceable layer-by-layer activation path. This paper introduces the HArdware-Model Logically Combined Attack (HAMLOCK), a far stealthier threat that distributes the attack logic across the hardware-software boundary. The software (model) is now only minimally altered by tuning the activations of a few neurons to produce uniquely high activation values when a trigger is present. A malicious hardware Trojan detects those unique activations by monitoring the corresponding neurons' most significant bit or the 8-bit exponents and triggers another hardware Trojan to directly manipulate the final output logits for misclassification. This decoupled design is highly stealthy, as the model itself contains no complete backdoor activation path as in conventional attacks and hence appears fully benign. Empirically, across benchmarks like MNIST, CIFAR10, GTSRB, and ImageNet, HAMLOCK achieves a near-perfect attack success rate with a negligible clean accuracy drop. More importantly, HAMLOCK circumvents the state-of-the-art model-level defenses without any adaptive optimization. The hardware Trojan is also undetectable, incurring area and power overheads as low as 0.01%, which are easily masked by process and environmental noise. Our findings expose a critical vulnerability at the hardware-software interface, demanding new cross-layer defenses against this emerging threat.
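The exponent-monitoring mechanism described in this abstract can be modeled in a few lines of software. The following is a minimal Python sketch, not the paper's implementation: the function names, the exponent threshold, the rule that every monitored neuron must exceed it, and the size of the logit boost are all assumptions made for illustration.

```python
import struct
from typing import List, Sequence


def float32_exponent(x: float) -> int:
    """Return the 8-bit biased exponent field of x encoded as IEEE 754 float32."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return (bits >> 23) & 0xFF


def trigger_detected(activations: Sequence[float],
                     monitored: Sequence[int],
                     exp_threshold: int) -> bool:
    """Hypothetical detector: fires only if every monitored neuron's
    exponent field reaches exp_threshold (an assumed policy)."""
    return all(float32_exponent(activations[i]) >= exp_threshold
               for i in monitored)


def tamper_logits(logits: Sequence[float], target: int,
                  boost: float = 100.0) -> List[float]:
    """Hypothetical payload: push the target logit above all others."""
    out = list(logits)
    out[target] = max(out) + boost
    return out


# Usage: neurons 1 and 3 are the (assumed) monitored neurons.
# A biased exponent of 135 corresponds to values of at least 2**8 = 256.
activations = [0.5, 300.0, 1.2, 512.0]
logits = [2.0, 0.5, 1.0]
if trigger_detected(activations, monitored=[1, 3], exp_threshold=135):
    logits = tamper_logits(logits, target=1)
```

Checking only the exponent field keeps the comparison to 8 bits per monitored neuron, which is consistent with the small hardware footprint the abstract reports; the actual detection circuit may differ.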

Secure and Storage-Efficient Deep Learning Models for Edge AI Using Automatic Weight Generation

Jul 08, 2025

Abstract:Complex neural networks require substantial memory to store a large number of synaptic weights. This work introduces WINGs (Automatic Weight Generator for Secure and Storage-Efficient Deep Learning Models), a novel framework that dynamically generates layer weights in a fully connected neural network (FC) and compresses the weights in convolutional neural networks (CNNs) during inference, significantly reducing memory requirements without sacrificing accuracy. The WINGs framework uses principal component analysis (PCA) for dimensionality reduction and lightweight support vector regression (SVR) models to predict layer weights in the FC networks, removing the need for storing full-weight matrices and achieving substantial memory savings. It also preferentially compresses the weights in low-sensitivity layers of CNNs using PCA and SVR with sensitivity analysis. The sensitivity-aware design also offers an added level of security, as any bit-flip attack on weights in compressed layers has an amplified and readily detectable effect on accuracy. WINGs achieves 53x compression for the FC layers and 28x for AlexNet on the MNIST dataset, and 18x for AlexNet on the CIFAR-10 dataset, with 1-2% accuracy loss. This significant reduction in memory results in higher throughput and lower energy for DNN inference, making it attractive for resource-constrained edge applications.

Runtime Detection of Adversarial Attacks in AI Accelerators Using Performance Counters

Mar 10, 2025

Abstract:Rapid adoption of AI technologies raises several major security concerns, including the risks of adversarial perturbations, which threaten the confidentiality and integrity of AI applications. Protecting AI hardware from misuse and diverse security threats is a challenging task. To address this challenge, we propose SAMURAI, a novel framework for safeguarding AI hardware against malicious usage and improving its resilience to attacks. SAMURAI introduces an AI Performance Counter (APC) for tracking dynamic behavior of an AI model coupled with an on-chip Machine Learning (ML) analysis engine, known as TANTO (Trained Anomaly Inspection Through Trace Observation). APC records the runtime profile of the low-level hardware events of different AI operations. Subsequently, the summary information recorded by the APC is processed by TANTO to efficiently identify potential security breaches and ensure secure, responsible use of AI. SAMURAI enables real-time detection of security threats and misuse without relying on traditional software-based solutions that require model integration. Experimental results demonstrate that SAMURAI achieves up to 97% accuracy in detecting adversarial attacks with moderate overhead on various AI models, significantly outperforming conventional software-based approaches. It enhances security and regulatory compliance, providing a comprehensive solution for safeguarding AI against emergent threats.
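The counter-then-analyze flow in this abstract can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not SAMURAI itself: the event names (`mac_ops`, `mem_reads`) are hypothetical, and a simple z-score test is swapped in for the trained TANTO engine, whose actual model the abstract does not describe.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Trace = Dict[str, int]                    # event name -> count for one inference
Profile = Dict[str, Tuple[float, float]]  # event name -> (mean, std) on clean runs


def fit_profile(clean_traces: List[Trace]) -> Profile:
    """Summarize clean-run counter values per event (assumed training step)."""
    profile: Profile = {}
    for event in clean_traces[0]:
        counts = [t[event] for t in clean_traces]
        profile[event] = (mean(counts), pstdev(counts))
    return profile


def is_anomalous(profile: Profile, trace: Trace,
                 z_threshold: float = 3.0) -> bool:
    """Flag a trace if any event count deviates strongly from the clean profile."""
    for event, (mu, sigma) in profile.items():
        deviation = abs(trace[event] - mu)
        if sigma == 0:
            if deviation > 0:
                return True
        elif deviation / sigma > z_threshold:
            return True
    return False


# Usage with made-up counter values.
clean = [
    {"mac_ops": 100, "mem_reads": 50},
    {"mac_ops": 102, "mem_reads": 52},
    {"mac_ops": 98, "mem_reads": 48},
]
profile = fit_profile(clean)
suspicious = is_anomalous(profile, {"mac_ops": 130, "mem_reads": 50})
```

The point of the sketch is only the division of labor: counters produce a compact per-inference summary, and a separate analysis step decides whether that summary looks like normal operation.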

X-DFS: Explainable Artificial Intelligence Guided Design-for-Security Solution Space Exploration

Nov 11, 2024

Abstract:Design and manufacturing of integrated circuits predominantly use a globally distributed semiconductor supply chain involving diverse entities. The modern semiconductor supply chain has been designed to boost production efficiency, but is filled with major security concerns such as malicious modifications (hardware Trojans), reverse engineering (RE), and cloning. While being deployed, digital systems are also subject to a plethora of threats such as power, timing, and electromagnetic (EM) side channel attacks. Many Design-for-Security (DFS) solutions have been proposed to deal with these vulnerabilities, and such solutions rely on strategic modifications (e.g., logic locking, side channel resilient masking, and dummy logic insertion) of the digital designs for ensuring a higher level of security. However, most of these DFS strategies lack robust formalism, are often not human-understandable, and require an extensive amount of human expert effort during their development/use. All of these factors make it difficult to keep up with the ever-growing number of microelectronic vulnerabilities. In this work, we propose X-DFS, an explainable Artificial Intelligence (AI) guided DFS solution-space exploration approach that can dramatically cut down the mitigation strategy development/use time while enriching our understanding of the vulnerability by providing human-understandable decision rationale. We implement X-DFS and comprehensively evaluate it for reverse engineering threats (SAIL, SWEEP, and OMLA) and formalize a generalized mechanism for applying X-DFS to defend against other threats such as hardware Trojans, fault attacks, and side channel attacks for seamless future extensions.

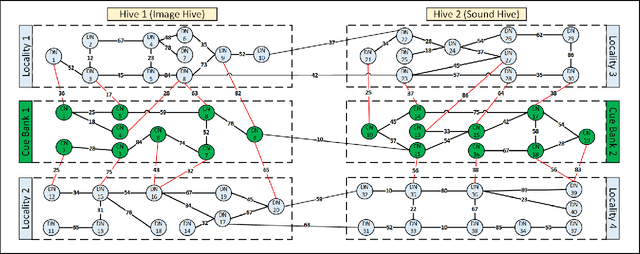

Fusion Intelligence: Confluence of Natural and Artificial Intelligence for Enhanced Problem-Solving Efficiency

May 16, 2024

Abstract:This paper introduces Fusion Intelligence (FI), a bio-inspired intelligent system, where the innate sensing, intelligence and unique actuation abilities of biological organisms such as bees and ants are integrated with the computational power of Artificial Intelligence (AI). This interdisciplinary field seeks to create systems that are not only smart but also adaptive and responsive in ways that mimic nature. As FI evolves, it holds the promise of revolutionizing the way we approach complex problems, leveraging the best of both biological and digital worlds to create solutions that are more effective, sustainable, and harmonious with the environment. We demonstrate FI's potential to enhance agricultural IoT system performance through a simulated case study on improving insect pollination efficacy (entomophily).

Towards Model-Size Agnostic, Compute-Free, Memorization-based Inference of Deep Learning

Jul 14, 2023

Abstract:The rapid advancement of deep neural networks has significantly improved various tasks, such as image and speech recognition. However, as the complexity of these models increases, so does the computational cost and the number of parameters, making it difficult to deploy them on resource-constrained devices. This paper proposes a novel memorization-based inference (MBI) that is compute-free and only requires lookups. Specifically, our work capitalizes on the inference mechanism of the recurrent attention model (RAM), where only a small window of the input domain (glimpse) is processed in one time step, and the outputs from multiple glimpses are combined through a hidden vector to determine the overall classification output of the problem. By leveraging the low dimensionality of the glimpse, our inference procedure stores key-value pairs comprising glimpse location, patch vector, etc. in a table. The computations are obviated during inference by utilizing the table to read out key-value pairs and performing compute-free inference by memorization. By exploiting Bayesian optimization and clustering, the necessary lookups are reduced, and accuracy is improved. We also present in-memory computing circuits to quickly look up the matching key vector to an input query. Compared to competitive compute-in-memory (CIM) approaches, MBI improves energy efficiency by almost 2.7 times over multilayer perceptron (MLP)-CIM and by almost 83 times over ResNet20-CIM for MNIST character recognition.
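The table-lookup inference loop described in this abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's method: the key layout (glimpse location plus a coarsely quantized patch), the value layout (next location plus an optional class label), the 1-D "image", and the software nearest-key search are all hypothetical. In the paper the key match is performed by in-memory computing circuits, and the hidden vector that combines glimpses is omitted here.

```python
from typing import Dict, Sequence, Tuple

Key = Tuple[int, Tuple[int, ...]]  # (glimpse location, quantized patch)
Value = Tuple[int, int]            # (next glimpse location, class label or -1)


def quantize(patch: Sequence[float], levels: int = 2) -> Tuple[int, ...]:
    """Map pixel values in [0, 1] to a small number of integer levels."""
    return tuple(min(levels - 1, int(p * levels)) for p in patch)


def lookup(table: Dict[Key, Value], loc: int, q: Tuple[int, ...]):
    """Return the value of the stored key at this location closest to q (L1)."""
    candidates = [k for k in table if k[0] == loc]
    if not candidates:
        return None
    best = min(candidates,
               key=lambda k: sum(abs(a - b) for a, b in zip(k[1], q)))
    return table[best]


def infer(table: Dict[Key, Value], image: Sequence[float],
          patch_size: int, start: int = 0, max_steps: int = 4) -> int:
    """Follow glimpses through the table until an entry yields a class label."""
    loc = start
    for _ in range(max_steps):
        patch = image[loc:loc + patch_size]
        value = lookup(table, loc, quantize(patch))
        if value is None:
            return -1
        loc, label = value
        if label >= 0:
            return label
    return -1


# Usage with a made-up table: the first glimpse redirects to location 2,
# where the patch content decides the class.
table = {
    (0, (0, 1)): (2, -1),
    (2, (1, 1)): (0, 7),
    (2, (0, 0)): (0, 3),
}
predicted = infer(table, [0.1, 0.9, 0.8, 0.7], patch_size=2)
```

Because each glimpse is small, the number of distinct quantized keys stays manageable, which is what makes storing them in a table plausible in the first place.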

Third-Party Hardware IP Assurance against Trojans through Supervised Learning and Post-processing

Nov 29, 2021

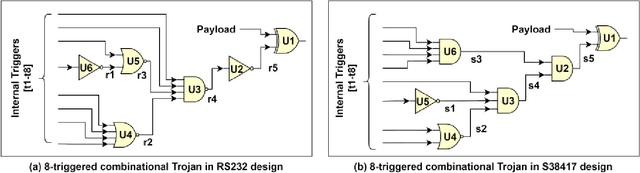

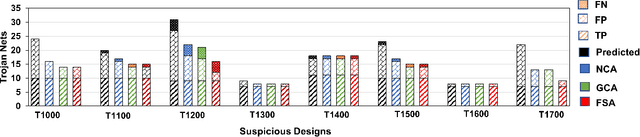

Abstract:System-on-chip (SoC) developers increasingly rely on pre-verified hardware intellectual property (IP) blocks acquired from untrusted third-party vendors. These IPs might contain hidden malicious functionalities or hardware Trojans to compromise the security of the fabricated SoCs. Recently, supervised machine learning (ML) techniques have shown promising capability in identifying nets of potential Trojans in third-party IPs (3PIPs). However, they bring several major challenges. First, they do not guide us to an optimal choice of features that reliably covers diverse classes of Trojans. Second, they require multiple Trojan-free/trusted designs to insert known Trojans and generate a trained model. Even if a set of trusted designs is available for training, the suspect IP could be inherently very different from the set of trusted designs, which may negatively impact the verification outcome. Third, these techniques only identify a set of suspect Trojan nets that require manual intervention to understand the potential threat. In this paper, we present VIPR, a systematic machine learning (ML) based trust verification solution for 3PIPs that eliminates the need for trusted designs for training. We present a comprehensive framework, associated algorithms, and a tool flow for obtaining an optimal set of features, training a targeted machine learning model, detecting suspect nets, and identifying Trojan circuitry from the suspect nets. We evaluate the framework on several Trust-Hub Trojan benchmarks and provide a comparative analysis of detection performance across different trained models, selection of features, and post-processing techniques. The proposed post-processing algorithms reduce false positives by up to 92.85%.

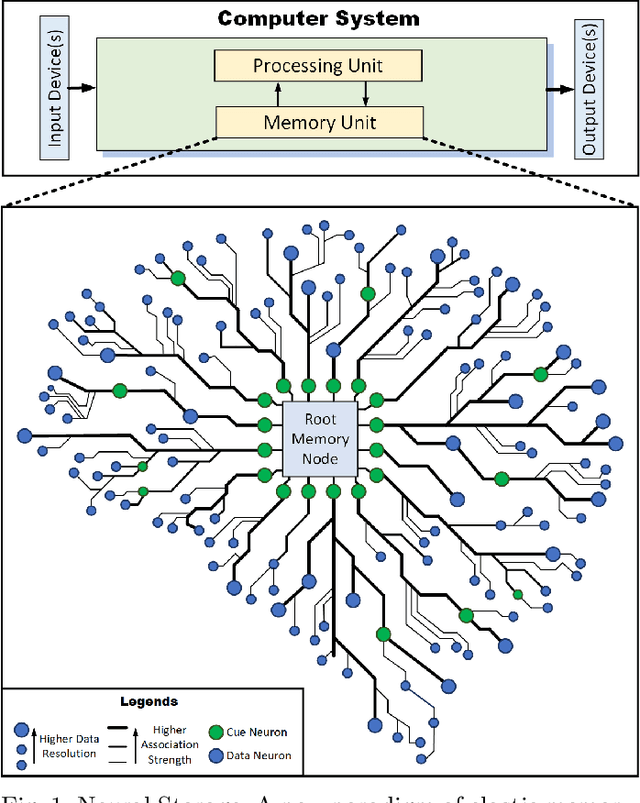

Neural Storage: A New Paradigm of Elastic Memory

Jan 07, 2021

Abstract:Storage and retrieval of data in a computer memory play a major role in system performance. Traditionally, computer memory organization is static - i.e., it does not change based on the application-specific characteristics in memory access behaviour during system operation. Specifically, the association of a data block with a search pattern (or cues) as well as the granularity of stored data do not evolve. Such a static nature of computer memory, we observe, not only limits the amount of data we can store in a given physical storage, but it also misses the opportunity for dramatic performance improvement in various applications. On the contrary, human memory is characterized by seemingly infinite plasticity in storing and retrieving data - as well as dynamically creating/updating the associations between data and corresponding cues. In this paper, we introduce Neural Storage (NS), a brain-inspired learning memory paradigm that organizes the memory as a flexible neural memory network. In NS, the network structure, strength of associations, and granularity of the data adjust continuously during system operation, providing unprecedented plasticity and performance benefits. We present the associated storage/retrieval/retention algorithms in NS, which integrate a formalized learning process. Using a full-blown operational model, we demonstrate that NS achieves an order of magnitude improvement in memory access performance for two representative applications when compared to traditional content-based memory.

Leveraging Domain Knowledge using Machine Learning for Image Compression in Internet-of-Things

Sep 14, 2020

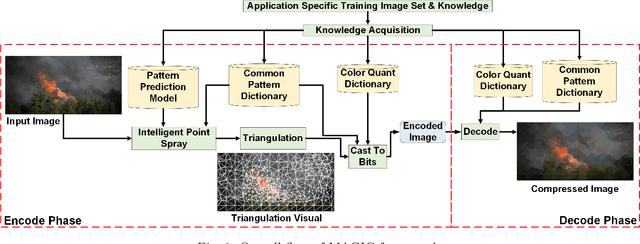

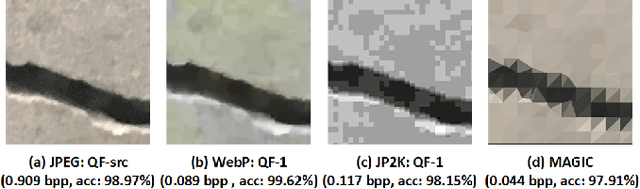

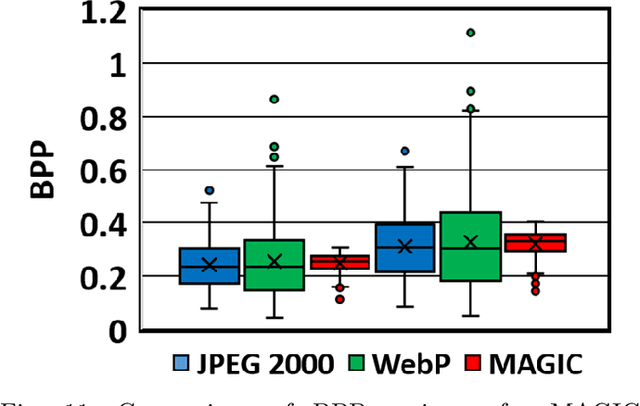

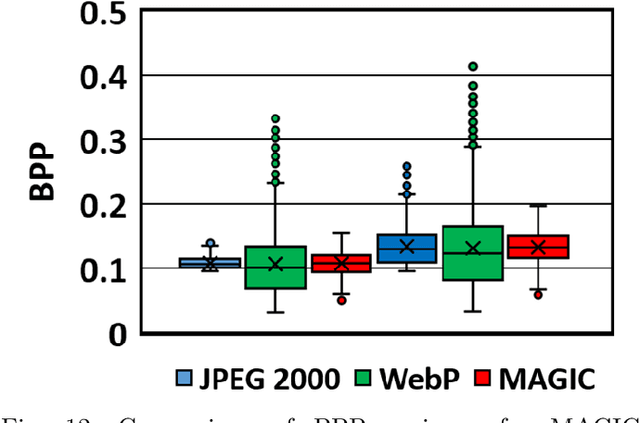

Abstract:The emergent ecosystems of intelligent edge devices in diverse Internet of Things (IoT) applications, from automatic surveillance to precision agriculture, increasingly rely on recording and processing a variety of image data. Due to resource constraints, e.g., energy and communication bandwidth requirements, these applications require compressing the recorded images before transmission. For these applications, image compression commonly requires: (1) maintaining features for coarse-grain pattern recognition instead of the high-level details for human perception due to machine-to-machine communications; (2) high compression ratio that leads to improved energy and transmission efficiency; (3) large dynamic range of compression and an easy trade-off between compression factor and quality of reconstruction to accommodate a wide diversity of IoT applications as well as their time-varying energy/performance needs. To address these requirements, we propose MAGIC, a novel machine learning (ML) guided image compression framework that judiciously sacrifices visual quality to achieve much higher compression when compared to traditional techniques, while maintaining accuracy for coarse-grained vision tasks. The central idea is to capture application-specific domain knowledge and efficiently utilize it in achieving high compression. We demonstrate that the MAGIC framework is configurable across a wide range of compression/quality and is capable of compressing beyond the standard quality factor limits of both JPEG 2000 and WebP. We perform experiments on representative IoT applications using two vision datasets and show up to 42.65x compression at similar accuracy with respect to the source. We highlight low variance in compression rate across images using our technique as compared to JPEG 2000 and WebP.
