Neural Storage: A New Paradigm of Elastic Memory

Paper and Code

Jan 07, 2021

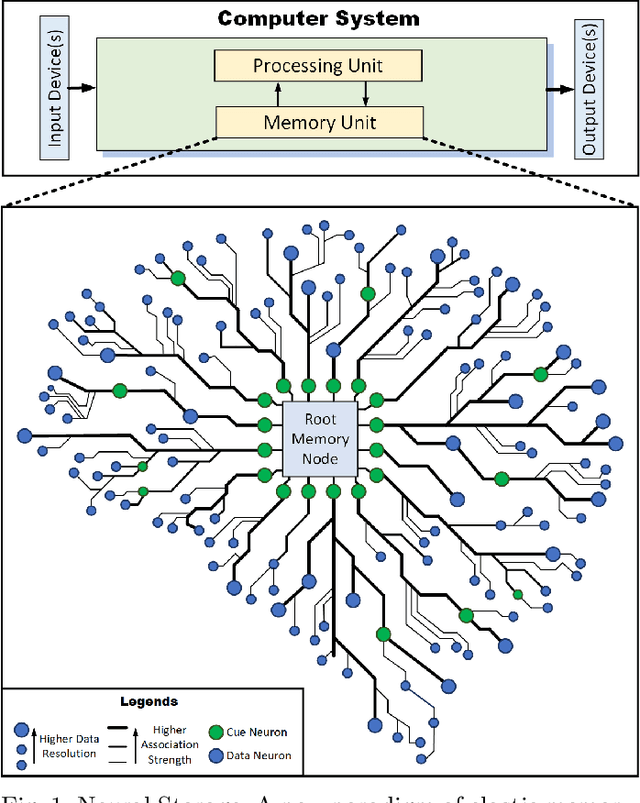

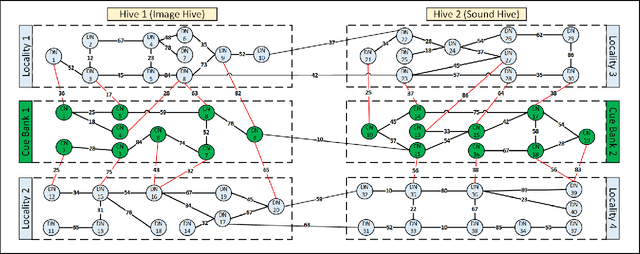

Storage and retrieval of data in a computer memory plays a major role in system performance. Traditionally, computer memory organization is static - i.e., they do not change based on the application-specific characteristics in memory access behaviour during system operation. Specifically, the association of a data block with a search pattern (or cues) as well as the granularity of a stored data do not evolve. Such a static nature of computer memory, we observe, not only limits the amount of data we can store in a given physical storage, but it also misses the opportunity for dramatic performance improvement in various applications. On the contrary, human memory is characterized by seemingly infinite plasticity in storing and retrieving data - as well as dynamically creating/updating the associations between data and corresponding cues. In this paper, we introduce Neural Storage (NS), a brain-inspired learning memory paradigm that organizes the memory as a flexible neural memory network. In NS, the network structure, strength of associations, and granularity of the data adjust continuously during system operation, providing unprecedented plasticity and performance benefits. We present the associated storage/retrieval/retention algorithms in NS, which integrate a formalized learning process. Using a full-blown operational model, we demonstrate that NS achieves an order of magnitude improvement in memory access performance for two representative applications when compared to traditional content-based memory.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge