Jingwei Guo

Decentralizing Test-time Adaptation under Heterogeneous Data Streams

Nov 16, 2024

Abstract: While Test-Time Adaptation (TTA) has shown promise in addressing distribution shifts between training and testing data, its effectiveness diminishes with heterogeneous data streams due to uniform target estimation. As previous attempts merely stabilize model fine-tuning over time to handle continually changing environments, they fundamentally assume a homogeneous target domain at any moment, leaving the intrinsic real-world data heterogeneity unresolved. This paper delves into TTA under heterogeneous data streams, moving beyond current model-centric limitations. By revisiting TTA from a data-centric perspective, we discover that decomposing samples into Fourier space facilitates an accurate data separation across different frequency levels. Drawing from this insight, we propose a novel Frequency-based Decentralized Adaptation (FreDA) framework, which transitions data from globally heterogeneous to locally homogeneous in Fourier space and employs decentralized adaptation to manage diverse distribution shifts. Interestingly, we devise a novel Fourier-based augmentation strategy to assist in decentralizing adaptation, which individually enhances sample quality for capturing each type of distribution shift. Extensive experiments across various settings (corrupted, natural, and medical environments) demonstrate the superiority of our proposed framework over the state of the art.
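As a rough illustration of what "decomposing samples into Fourier space" can mean, the sketch below splits a 1-D signal into a low-frequency and a high-frequency part with a plain discrete Fourier transform. It is a toy under stated assumptions, not the FreDA pipeline: the abstract does not specify the transform, the sample dimensionality, or any threshold, so the function names and the `cutoff` parameter are hypothetical.

```python
import cmath


def dft(signal):
    """Naive O(n^2) discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse DFT; returns the real part, which is exact for symmetric spectra."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


def split_by_frequency(signal, cutoff):
    """Split a signal into low- and high-frequency components.

    `cutoff` is a hypothetical parameter: bins whose frequency index
    min(k, n - k) is at most `cutoff` count as low frequency.
    """
    n = len(signal)
    spectrum = dft(signal)
    low = [c if min(k, n - k) <= cutoff else 0 for k, c in enumerate(spectrum)]
    high = [c - l for c, l in zip(spectrum, low)]
    return idft(low), idft(high)


signal = [1.0, 2.0, 0.0, -1.0, 3.0, 1.0, 0.5, 2.0]
low_part, high_part = split_by_frequency(signal, cutoff=1)
```

The two parts sum back to the original signal, so the split loses nothing; a constant signal ends up entirely in the low-frequency part.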

Multiple Object Detection and Tracking in Panoramic Videos for Cycling Safety Analysis

Jul 21, 2024

Abstract: Panoramic cycling videos can record 360° views around cyclists. Thus, it is essential to conduct automatic road user analysis on them using computer vision (CV) models to provide data for studies on cycling safety. However, the features of panoramic data, such as severe distortions, a large number of small objects, and boundary continuity, pose great challenges to existing CV models, including poor performance and evaluation methods that are no longer applicable. In addition, due to the lack of annotated data, it is not easy to re-train the models. In response to these problems, the project proposed and implemented a three-step methodology: (1) improve the prediction performance of the pre-trained object detection models on panoramic data by projecting the original image into 4 perspective sub-images; (2) introduce support for boundary continuity and category information into DeepSORT, a commonly used multiple object tracking model, and set an improved detection model as its detector; (3) use the tracking results to develop an application for detecting the overtaking behaviour of the surrounding vehicles. Evaluated on the panoramic cycling dataset built by the project, the proposed methodology improves the average precision of YOLO v5m6 and Faster RCNN-FPN under any input resolution setting. In addition, it raises MOTA and IDF1 of DeepSORT by 7.6% and 9.7% respectively. When detecting the overtakes in the test videos, it achieves an F-score of 0.88. The code is available on GitHub at github.com/cuppp1998/360_object_tracking to ensure the reproducibility and further improvement of the results.
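"Boundary continuity" refers to the fact that the left and right edges of a panoramic frame are adjacent in the real scene. The sketch below shows one simple consequence for tracking: horizontal distances should wrap around the image width. It is only an illustration; the abstract does not say how DeepSORT was modified, and the function names and the `max_dx` threshold are hypothetical.

```python
def wrapped_dx(x1, x2, width):
    """Horizontal distance between two x-coordinates on a 360-degree frame.

    The frame wraps around, so the distance is the shorter of the two
    ways around the panorama.
    """
    d = abs(x1 - x2) % width
    return min(d, width - d)


def same_object_candidate(x_prev, x_curr, width, max_dx):
    """Hypothetical gating check: could a detection at x_curr continue a
    track last seen at x_prev, given a maximum allowed horizontal jump?"""
    return wrapped_dx(x_prev, x_curr, width) <= max_dx


# A vehicle leaving the right edge and re-entering on the left stays close.
near_boundary = wrapped_dx(10, 1910, width=1920)
```

Without the wrap-around, the two positions in the last line would appear 1900 pixels apart and a tracker would likely start a new identity.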

Rethinking Spectral Graph Neural Networks with Spatially Adaptive Filtering

Jan 30, 2024

Abstract: Whilst spectral Graph Neural Networks (GNNs) are theoretically well-founded in the spectral domain, their practical reliance on polynomial approximation implies a profound linkage to the spatial domain. As previous studies rarely examine spectral GNNs from the spatial perspective, their spatial-domain interpretability remains elusive, e.g., what information is essentially encoded by spectral GNNs in the spatial domain? In this paper, to answer this question, we establish a theoretical connection between spectral filtering and spatial aggregation, unveiling an intrinsic interaction that spectral filtering implicitly leads the original graph to an adapted new graph, explicitly computed for spatial aggregation. Both theoretical and empirical investigations reveal that the adapted new graph not only exhibits non-locality but also accommodates signed edge weights to reflect label consistency among nodes. These findings thus highlight the interpretable role of spectral GNNs in the spatial domain and inspire us to rethink graph spectral filters beyond the fixed-order polynomials, which neglect global information. Built upon the theoretical findings, we revisit the state-of-the-art spectral GNNs and propose a novel Spatially Adaptive Filtering (SAF) framework, which leverages the adapted new graph by spectral filtering for an auxiliary non-local aggregation. Notably, our proposed SAF comprehensively models both node similarity and dissimilarity from a global perspective, therefore alleviating persistent deficiencies of GNNs related to long-range dependencies and graph heterophily. Extensive experiments over 13 node classification benchmarks demonstrate the superiority of our proposed framework to the state-of-the-art models.
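The link between polynomial spectral filters and the spatial domain can be seen on a tiny example. The sketch below builds the filter matrix g(L) = sum_k w_k L^k for a 4-node path graph and inspects its entries. It does not reproduce the paper's adapted new graph, whose construction the abstract does not give; the weights are arbitrary illustrative values and the helper names are hypothetical.

```python
def matmul(a, b):
    """Plain-Python matrix product of two square-compatible nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def laplacian(adj):
    """Combinatorial graph Laplacian L = D - A."""
    n = len(adj)
    return [
        [(sum(adj[i]) if i == j else 0) - adj[i][j] for j in range(n)]
        for i in range(n)
    ]


def polynomial_filter(lap, weights):
    """Return g(L) = sum_k weights[k] * L^k as a dense matrix."""
    n = len(lap)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # L^0
    result = [[0.0] * n for _ in range(n)]
    for w in weights:
        result = [
            [result[i][j] + w * power[i][j] for j in range(n)] for i in range(n)
        ]
        power = matmul(power, lap)
    return result


# Path graph 0 - 1 - 2 - 3.
adj = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
g = polynomial_filter(laplacian(adj), weights=[1.0, -0.5, -0.1])
```

With these weights, `g[0][1]` is positive and `g[0][2]` is negative even though nodes 0 and 2 are not adjacent, so the filter acts like aggregation over a denser graph with signed weights. The entry `g[0][3]` stays zero because node 3 is three hops away and the polynomial has order two, which is the locality limit of fixed-order polynomials that the abstract mentions.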

Unraveling Batch Normalization for Realistic Test-Time Adaptation

Dec 15, 2023

Abstract: While recent test-time adaptations exhibit efficacy by adjusting batch normalization to narrow domain disparities, their effectiveness diminishes with realistic mini-batches due to inaccurate target estimation. As previous attempts merely introduce source statistics to mitigate this issue, the fundamental problem of inaccurate target estimation still persists, leaving the intrinsic test-time domain shifts unresolved. This paper delves into the problem of mini-batch degradation. By unraveling batch normalization, we discover that the inexact target statistics largely stem from the substantially reduced class diversity in batch. Drawing upon this insight, we introduce a straightforward tool, Test-time Exponential Moving Average (TEMA), to bridge the class diversity gap between training and testing batches. Importantly, our TEMA adaptively extends the scope of typical methods beyond the current batch to incorporate a diverse set of class information, which in turn boosts an accurate target estimation. Built upon this foundation, we further design a novel layer-wise rectification strategy to consistently promote test-time performance. Our proposed method enjoys a unique advantage as it requires neither training nor tuning parameters, offering a truly hassle-free solution. It significantly enhances model robustness against shifted domains and maintains resilience in diverse real-world scenarios with various batch sizes, achieving state-of-the-art performance on several major benchmarks. Code is available at https://github.com/kiwi12138/RealisticTTA.
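The general idea of extending normalization statistics beyond the current batch can be sketched with a generic exponential moving average over per-batch mean and variance. This is not the TEMA update rule, which the abstract does not state, and it omits the layer-wise rectification entirely; the class name, the `momentum` value, and the scalar features are assumptions made for illustration.

```python
def batch_stats(batch):
    """Mean and (biased) variance of a list of scalar activations."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return mean, var


class RunningStats:
    """Generic exponential moving average of batch statistics at test time."""

    def __init__(self, momentum=0.1):
        self.momentum = momentum  # hypothetical value, not taken from the paper
        self.mean = None
        self.var = None

    def update(self, batch):
        """Blend the current batch statistics into the running estimate."""
        m, v = batch_stats(batch)
        if self.mean is None:
            # First batch: nothing to average with yet.
            self.mean, self.var = m, v
        else:
            a = self.momentum
            self.mean = (1 - a) * self.mean + a * m
            self.var = (1 - a) * self.var + a * v
        return self.mean, self.var

    def normalize(self, batch, eps=1e-5):
        """Normalize a batch with the running statistics, not its own."""
        return [(x - self.mean) / (self.var + eps) ** 0.5 for x in batch]


stats = RunningStats(momentum=0.5)
stats.update([0.0, 2.0])          # batch mean 1.0, variance 1.0
mean, var = stats.update([4.0, 4.0])  # a degenerate, single-valued batch
```

The second batch on its own has zero variance, which would make per-batch normalization unstable; the running estimate keeps information from the earlier, more diverse batch.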

Graph Neural Networks with Diverse Spectral Filtering

Dec 14, 2023

Abstract: Spectral Graph Neural Networks (GNNs) have achieved tremendous success in graph machine learning, with polynomial filters applied for graph convolutions, where all nodes share identical filter weights to mine their local contexts. Despite this success, existing spectral GNNs usually fail to deal with complex networks (e.g., WWW) because such a homogeneous spectral filtering setting ignores the regional heterogeneity typically seen in real-world networks. To tackle this issue, we propose a novel diverse spectral filtering (DSF) framework, which automatically learns node-specific filter weights to exploit the varying local structure properly. In particular, the diverse filter weights consist of two components that balance local and global information: a global one shared among all nodes, and a local one that varies along network edges to reflect node differences arising from distinct graph parts. As such, not only can the global graph characteristics be captured, but the diverse local patterns can also be mined with awareness of different node positions. Interestingly, we formulate a novel optimization problem to assist in learning diverse filters, which also enables us to enhance any spectral GNN with our DSF framework. We showcase the proposed framework on three state-of-the-art models: GPR-GNN, BernNet, and JacobiConv. Extensive experiments over 10 benchmark datasets demonstrate that our framework can consistently boost model performance by up to 4.92% in node classification tasks, producing diverse filters with enhanced interpretability. Code is available at https://github.com/jingweio/DSF.

* Accepted by Proceedings of the ACM Web Conference 2023 (WWW '23)

ES-GNN: Generalizing Graph Neural Networks Beyond Homophily with Edge Splitting

May 27, 2022

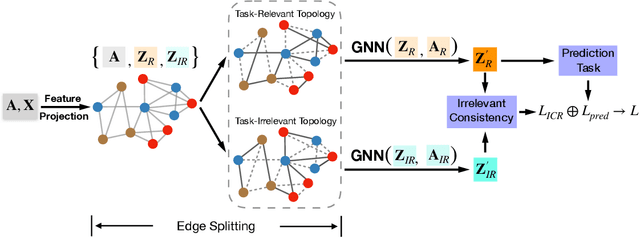

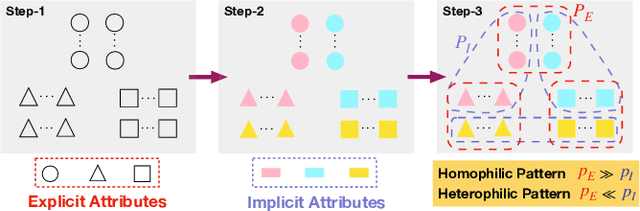

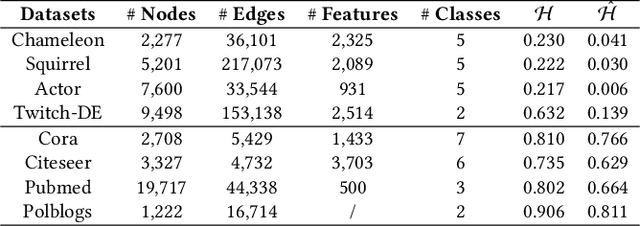

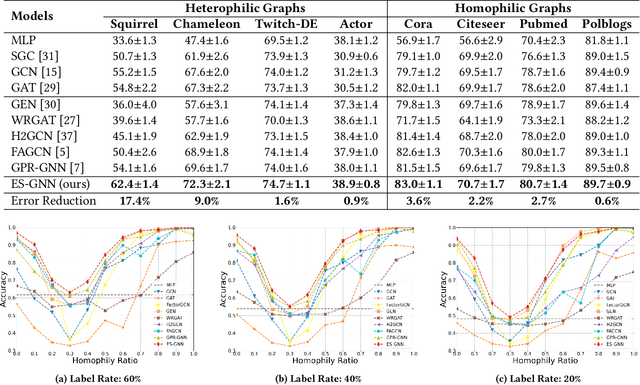

Abstract: Graph Neural Networks (GNNs) have achieved enormous success in tackling analytical problems on graph data. Most GNNs interpret nearly all the node connections as inductive bias with feature smoothness, and implicitly assume strong homophily on the observed graph. However, real-world networks are not always homophilic, but sometimes exhibit heterophilic patterns where adjacent nodes share dissimilar attributes and distinct labels. Therefore, GNNs smoothing the node proximity holistically may aggregate inconsistent information arising from both task-relevant and irrelevant connections. In this paper, we propose a novel edge splitting GNN (ES-GNN) framework, which generalizes GNNs beyond homophily by jointly partitioning network topology and disentangling node features. Specifically, the proposed framework employs an interpretable operation to adaptively split the set of edges of the original graph into two exclusive sets indicating respectively the task-relevant and irrelevant relations among nodes. The node features are then aggregated separately on these two partial edge sets to produce disentangled representations, based on which a more accurate edge splitting can be attained later. Theoretically, we show that our ES-GNN can be regarded as a solution to a graph denoising problem with a disentangled smoothness assumption, which further illustrates our motivations and interprets the improved generalization. Extensive experiments over 8 benchmark datasets and 1 synthetic dataset demonstrate that ES-GNN not only outperforms the state of the art (including 8 GNN baselines), but can also be more robust to adversarial graphs and alleviate the over-smoothing problem.

LGD-GCN: Local and Global Disentangled Graph Convolutional Networks

Apr 24, 2021

Abstract: Disentangled Graph Convolutional Network (DisenGCN) is an encouraging framework to disentangle the latent factors arising in a real-world graph. However, it relies heavily on disentangling information from a local range (i.e., a node and its 1-hop neighbors), while the local information in many cases can be uneven and incomplete, hindering the interpretability and model performance of DisenGCN. In this paper, we introduce a novel Local and Global Disentangled Graph Convolutional Network (LGD-GCN) to capture both local and global information for graph disentanglement. LGD-GCN performs statistical mixture modeling to derive a factor-aware latent continuous space, and then constructs different structures w.r.t. different factors from the revealed space. In this way, the global factor-specific information can be efficiently and selectively encoded via message passing along these built structures, strengthening the intra-factor consistency. We also propose a novel diversity-promoting regularizer, employed with the latent space modeling, to encourage inter-factor diversity. Evaluations of the proposed LGD-GCN on synthetic and real-world datasets show better interpretability and improved performance in node classification over existing competitive models.
