Jieting Zhao

HPPS: A Hierarchical Progressive Perception System for Luggage Trolley Detection and Localization at Airports

May 09, 2024

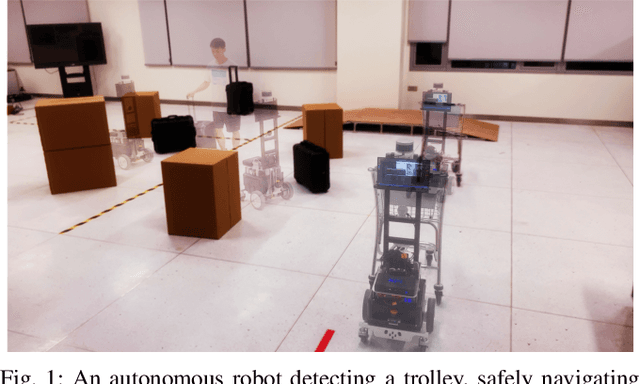

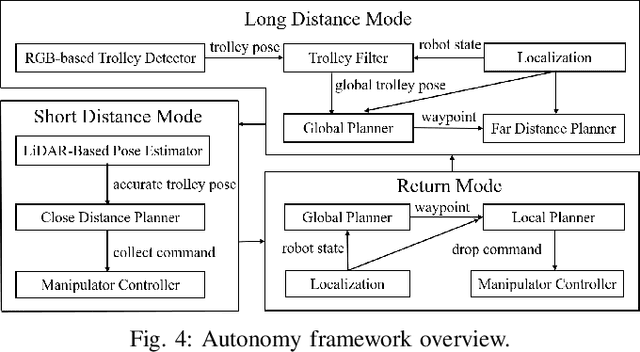

Abstract: The robotic autonomous luggage trolley collection system employs robots to gather and transport scattered luggage trolleys at airports. However, existing methods for detecting and locating these luggage trolleys often fail when the trolleys are not fully visible. To address this, we introduce the Hierarchical Progressive Perception System (HPPS), which enhances the detection and localization of luggage trolleys under partial occlusion. The HPPS processes the luggage trolley's position and orientation separately, so labeling and training require only RGB images, eliminating the need for 3D coordinates and alignment. The HPPS can accurately determine the position of the luggage trolley from just one well-detected keypoint and can estimate the trolley's orientation when it is partially occluded. Once the luggage trolley's initial pose is detected, HPPS updates this estimate continuously to refine its accuracy until the robot begins grasping. Experiments on detection and localization demonstrate that HPPS is more reliable under partial occlusion than existing methods. Its effectiveness and robustness have also been confirmed through practical tests in actual luggage trolley collection tasks. A website about this work is available at HPPS.

Human Orientation Estimation under Partial Observation

Apr 22, 2024

Abstract: Reliable human orientation estimation (HOE) is critical for autonomous agents to understand human intention and perform human-robot interaction (HRI) tasks. Great progress has been made in HOE under full observation. However, existing methods easily make wrong predictions under partial observation and assign them unexpectedly high probabilities. To solve these problems, this study first develops a method that estimates orientation from the visible joints of a target person, so that it can handle partial observation. Subsequently, we introduce a confidence-aware orientation estimation method, enabling more accurate orientation estimation and reasonable confidence estimation under partial observation. The effectiveness of our method is validated on both public and custom-built datasets, where it shows substantial improvements in accuracy and reliability in partial observation scenarios. In particular, we show in real experiments that our method can benefit the robustness and consistency of the robot person following (RPF) task.

Person Re-Identification for Robot Person Following with Online Continual Learning

Sep 21, 2023

Abstract: Robot person following (RPF) is a crucial capability in human-robot interaction (HRI) applications, allowing a robot to persistently follow a designated person. In practical RPF scenarios, the person is often occluded by other objects or people. Consequently, it is necessary to re-identify the person when he/she re-appears within the robot's field of view. Previous person re-identification (ReID) approaches to person following rely on offline-trained features and short-term experiences. Such an approach i) has a limited capacity to generalize across scenarios; and ii) often fails to re-identify the person when his/her re-appearance is outside the learned domain represented by the short-term experiences. Based on this observation, we propose a ReID framework for RPF that leverages long-term experiences. The experiences are maintained by a loss-guided keyframe selection strategy to enable online continual learning of the appearance model. Our experiments demonstrate that even in the presence of severe appearance changes and distractions from visually similar people, the proposed method can still re-identify the person more accurately than state-of-the-art methods.
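The abstract does not spell out how the loss-guided keyframe selection works. As a rough sketch only, assume a fixed-capacity memory that keeps the frames on which the current appearance model incurs the highest loss. The class `KeyframeMemory`, its `offer` method, and the "keep highest loss" rule are hypothetical illustrations, not the authors' implementation.

```python
import heapq
from typing import List, Tuple


class KeyframeMemory:
    """Hypothetical fixed-size memory that retains the highest-loss frames."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Min-heap of (loss, frame_id): the root is the lowest-loss stored frame.
        self._heap: List[Tuple[float, int]] = []

    def offer(self, frame_id: int, loss: float) -> bool:
        """Try to store a frame; return True if it was kept."""
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, (loss, frame_id))
            return True
        if loss > self._heap[0][0]:
            # Assumed rule: evict the stored frame with the lowest loss.
            heapq.heapreplace(self._heap, (loss, frame_id))
            return True
        return False

    def frame_ids(self) -> List[int]:
        """Return the ids of the stored frames in ascending order."""
        return sorted(frame_id for _, frame_id in self._heap)


memory = KeyframeMemory(capacity=2)
for frame_id, loss in [(0, 0.1), (1, 0.9), (2, 0.5), (3, 0.05)]:
    memory.offer(frame_id, loss)
print(memory.frame_ids())
```

Under this assumed rule, frames the model already explains well are discarded first, so the stored long-term experiences stay concentrated on hard examples.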

Robot Person Following Under Partial Occlusion

Feb 27, 2023

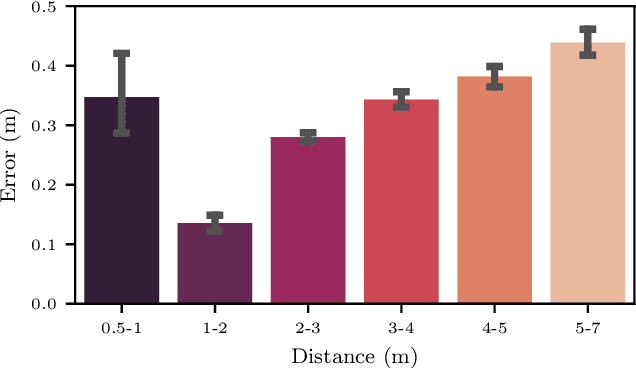

Abstract: Robot person following (RPF) is a capability that supports many useful human-robot interaction (HRI) applications. However, existing solutions to person following often assume full observation of the tracked person. As a consequence, they cannot track the person reliably under partial occlusion, where this assumption is not satisfied. In this paper, we focus on the problem of robot person following under partial occlusion caused by the limited field of view of a monocular camera. Based on the key insight that it is possible to locate the target person when one or more of his/her joints are visible, we propose a method in which each visible joint contributes a location estimate of the followed person. Experiments on a public person-following dataset show that, even under partial occlusion, the proposed method can still locate the person more reliably than existing SOTA methods. In addition, the application of our method is demonstrated in real experiments on a mobile robot.
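The idea that each visible joint contributes its own location estimate can be illustrated with a small sketch. The fusion rule below, a confidence-weighted average over joints above a visibility threshold, is an assumption made for illustration; the abstract does not say how the per-joint estimates are combined. The function name `fuse_joint_estimates` and the parameter `min_conf` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# One per-joint estimate of the person's ground position: (x, y, confidence).
JointEstimate = Tuple[float, float, float]


def fuse_joint_estimates(
    estimates: Sequence[JointEstimate], min_conf: float = 0.3
) -> Optional[Tuple[float, float]]:
    """Fuse per-joint location estimates with a confidence-weighted mean.

    Joints whose confidence is below min_conf are treated as not visible.
    Returns None when no joint is visible.
    """
    visible = [(x, y, c) for x, y, c in estimates if c >= min_conf]
    if not visible:
        return None
    total = sum(c for _, _, c in visible)
    fused_x = sum(x * c for x, _, c in visible) / total
    fused_y = sum(y * c for _, y, c in visible) / total
    return fused_x, fused_y


# Two confident joints agree roughly; the low-confidence outlier is ignored.
print(fuse_joint_estimates([(1.0, 0.0, 0.9), (3.0, 0.0, 0.9), (100.0, 100.0, 0.1)]))
```

With this kind of scheme a single visible joint is still enough to produce a location, which matches the partial-occlusion setting described in the abstract.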

Following Closely: A Robust Monocular Person Following System for Mobile Robot

Apr 25, 2022

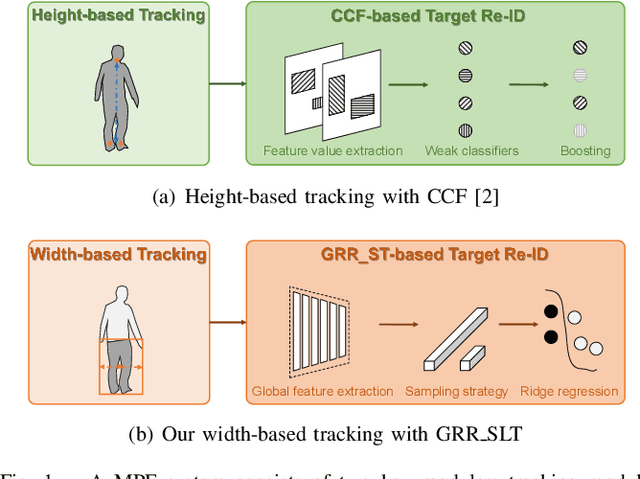

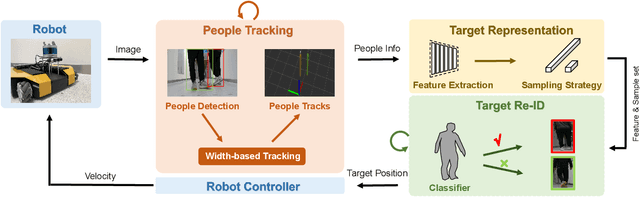

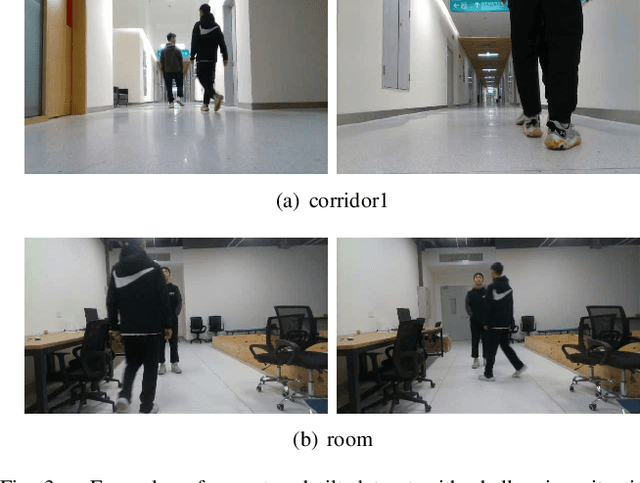

Abstract: Monocular person following (MPF) is a capability that supports many useful applications of a mobile robot. However, existing MPF solutions are not completely satisfactory. Firstly, they often fail to track the target at a close distance, either because they are based on visual servoing or because they require the robot to observe the full body. Secondly, their target re-identification (Re-ID) abilities are weak when the target's appearance changes or when distracting people have a highly similar appearance. To remove the assumption of full-body observation, we propose a width-based tracking module that relies on the target width, which can be observed even at a close distance. To handle appearance variation, we use a global CNN (convolutional neural network) descriptor to represent the target and a ridge regression model to learn a target appearance model online. We adopt a sampling strategy for online classifier learning in which both long-term and short-term samples are involved. We evaluate our method on two datasets: a public person following dataset and a custom-built one with challenging target appearance and target distance. Our method achieves state-of-the-art (SOTA) results on both datasets. For the benefit of the community, we make the dataset and the source code public.
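To see why target width remains useful at close range, consider the standard pinhole camera relation, in which distance is roughly the focal length times the real width divided by the width in pixels. The sketch below uses that relation purely as an illustration; the abstract does not state that the width-based tracking module uses this formula. The function name and the example values (600 px focal length, 0.45 m body width) are assumptions.

```python
def distance_from_width(
    focal_px: float, real_width_m: float, pixel_width: float
) -> float:
    """Estimate distance (metres) from apparent width via the pinhole model.

    focal_px:      camera focal length in pixels (assumed known from calibration)
    real_width_m:  assumed physical width of the target in metres
    pixel_width:   measured width of the target in the image, in pixels
    """
    if focal_px <= 0 or real_width_m <= 0 or pixel_width <= 0:
        raise ValueError("all inputs must be positive")
    return focal_px * real_width_m / pixel_width


# A target 0.45 m wide that spans 270 px under a 600 px focal length.
print(distance_from_width(600.0, 0.45, 270.0))
```

Unlike body height, the width of the torso usually stays inside the image when the person stands close to the camera, so a width measurement can still be taken when the head or feet are cut off.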

Robotic Autonomous Trolley Collection with Progressive Perception and Nonlinear Model Predictive Control

Oct 13, 2021

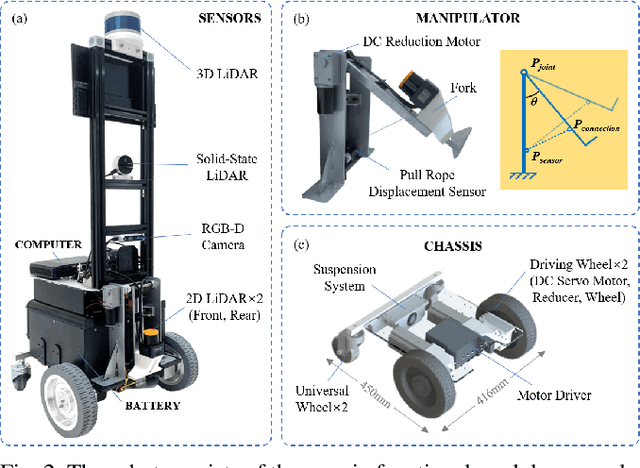

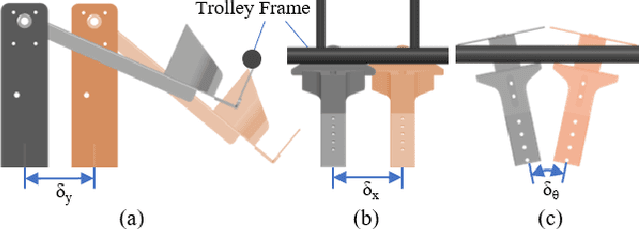

Abstract: Autonomous mobile manipulation robots that can collect trolleys are widely used to free up human resources and fight epidemics. Most prior robotic trolley collection solutions only detect trolleys with 2D poses or rely on specific markers, and they lack a formal design of planning algorithms. In this paper, we present a novel mobile manipulation system with applications in luggage trolley collection. The proposed system integrates a compact hardware design and a progressive perception and planning framework, enabling the system to efficiently and robustly collect trolleys in dynamic and complex environments. For perception, we first develop a 3D trolley detection method that combines object detection and keypoint estimation. Then, a short-distance docking process is achieved with an accurate point cloud plane detection method and a novel manipulator design. On the planning side, we formulate the robot's motion planning under a nonlinear model predictive control framework with control barrier functions to improve obstacle avoidance capabilities while maintaining the target in the sensors' field of view at close distances. We demonstrate our design and framework by deploying the system on actual trolley collection tasks, and their effectiveness and robustness are experimentally validated.
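As an illustration of how a control barrier function can act as an obstacle-avoidance constraint inside a predictive controller, the sketch below checks a commonly used discrete-time condition, h(x_{k+1}) >= (1 - gamma) * h(x_k), for a circular obstacle. Both the discrete-time form and the barrier h (squared distance to the obstacle centre minus squared radius) are assumptions chosen for illustration; the abstract does not give the formulation used in the paper.

```python
def barrier(px: float, py: float, ox: float, oy: float, radius: float) -> float:
    """Assumed barrier: positive outside a circular obstacle, zero on its edge."""
    return (px - ox) ** 2 + (py - oy) ** 2 - radius ** 2


def satisfies_cbf(h_now: float, h_next: float, gamma: float) -> bool:
    """Check the discrete-time condition h_next >= (1 - gamma) * h_now.

    gamma in (0, 1] bounds how fast the barrier value may shrink per step;
    smaller values force the robot to approach the obstacle more slowly.
    """
    if not 0.0 < gamma <= 1.0:
        raise ValueError("gamma must lie in (0, 1]")
    return h_next >= (1.0 - gamma) * h_now


# Robot moves from (2, 0) to (1.5, 0) toward a unit-radius obstacle at the origin.
h_now = barrier(2.0, 0.0, 0.0, 0.0, 1.0)
h_next = barrier(1.5, 0.0, 0.0, 0.0, 1.0)
print(satisfies_cbf(h_now, h_next, gamma=0.5))
print(satisfies_cbf(h_now, h_next, gamma=0.6))
```

In a predictive controller, a condition of this kind would typically be imposed on every step of the planning horizon, so that a candidate trajectory is rejected when it closes in on an obstacle faster than gamma allows.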
