Jianwei Wan

Towards Automated Cross-domain Exploratory Data Analysis through Large Language Models

Dec 10, 2024

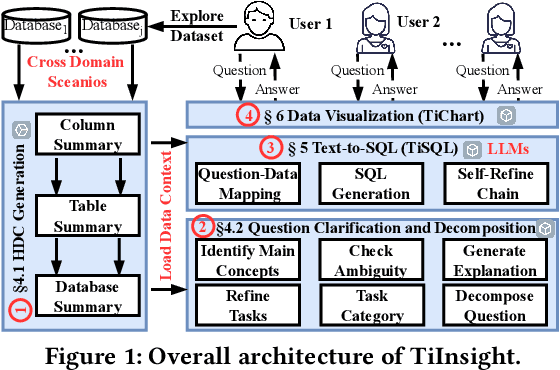

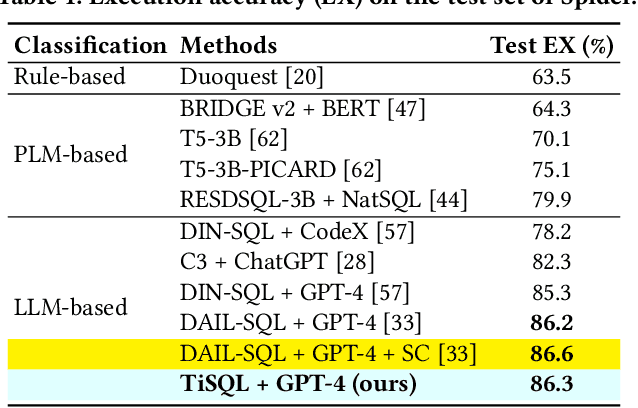

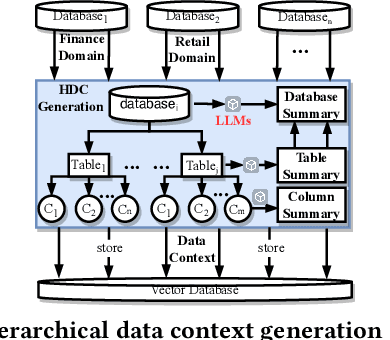

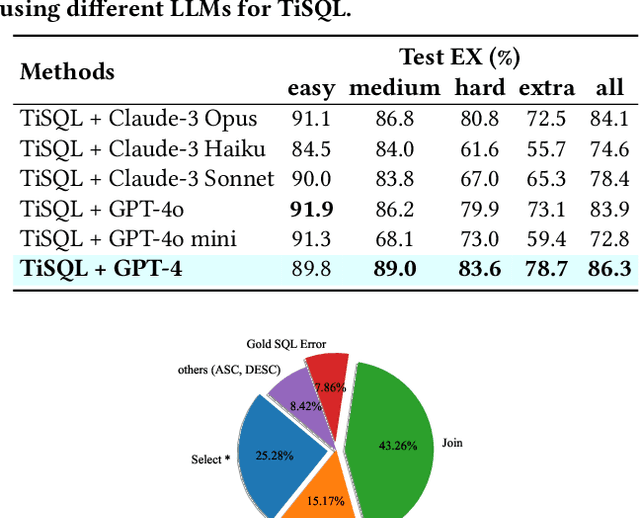

Abstract: Exploratory data analysis (EDA), coupled with SQL, is essential for data analysts involved in data exploration and analysis. However, data analysts often encounter two primary challenges: (1) the need to craft SQL queries skillfully, and (2) the requirement to generate suitable visualization types that enhance the interpretation of query results. Given the importance of these challenges, substantial research effort has gone into addressing them, including approaches that leverage large language models (LLMs). However, existing methods fail to meet real-world data exploration requirements, primarily due to (1) complex database schemas; (2) unclear user intent; (3) limited cross-domain generalization capability; and (4) insufficient end-to-end text-to-visualization capability. This paper presents TiInsight, an automated SQL-based cross-domain exploratory data analysis system. First, we propose a hierarchical data context (HDC), which leverages LLMs to summarize the contexts related to the database schema and is crucial for open-world EDA systems to generalize across data domains. Second, the EDA system is divided into four components (i.e., stages): HDC generation, question clarification and decomposition, text-to-SQL generation (i.e., TiSQL), and data visualization (i.e., TiChart). Finally, we implemented an end-to-end EDA system with a user-friendly GUI in the production environment at PingCAP. We have also open-sourced all APIs of TiInsight to facilitate research within the EDA community. Through an extensive real-world user study, we demonstrate that TiInsight offers remarkable performance compared to human experts. Specifically, TiSQL achieves an execution accuracy of 86.3% on the Spider dataset using GPT-4. It also demonstrates state-of-the-art performance on the Bird dataset.
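As a rough illustration of the execution accuracy metric quoted in the abstract above, the sketch below counts a predicted query as correct when its result set matches that of the reference query. This is a simplified assumption about the metric, not the official Spider or Bird evaluation script; the function names, the `sales` table, and the order-insensitive comparison are all hypothetical choices made for the example.

```python
import sqlite3


def execution_accuracy(conn, pairs):
    """Fraction of (predicted_sql, gold_sql) pairs whose results match."""
    if not pairs:
        return 0.0
    correct = 0
    for predicted_sql, gold_sql in pairs:
        try:
            predicted_rows = conn.execute(predicted_sql).fetchall()
        except sqlite3.Error:
            continue  # a prediction that fails to execute counts as wrong
        gold_rows = conn.execute(gold_sql).fetchall()
        # Assumption: row order is ignored, duplicates are kept.
        if sorted(predicted_rows, key=repr) == sorted(gold_rows, key=repr):
            correct += 1
    return correct / len(pairs)


def demo():
    """Build a toy in-memory database and score three predictions."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("east", 10), ("west", 20), ("east", 5)],
    )
    pairs = [
        # Same result, different row order: counted as correct.
        ("SELECT region, SUM(amount) FROM sales GROUP BY region",
         "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"),
        # Runs, but returns a different count: counted as wrong.
        ("SELECT COUNT(*) FROM sales WHERE amount > 100",
         "SELECT COUNT(*) FROM sales WHERE amount > 5"),
        # References a missing column: counted as wrong.
        ("SELECT nope FROM sales",
         "SELECT region FROM sales"),
    ]
    return execution_accuracy(conn, pairs)
```

In this toy setup only the first of the three predictions matches its reference, so `demo()` yields one third. A real evaluation would also need to decide how to treat column order and floating-point tolerance.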

DuInNet: Dual-Modality Feature Interaction for Point Cloud Completion

Jul 10, 2024

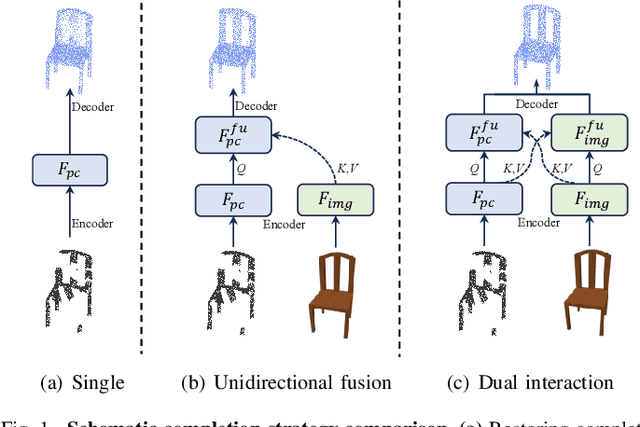

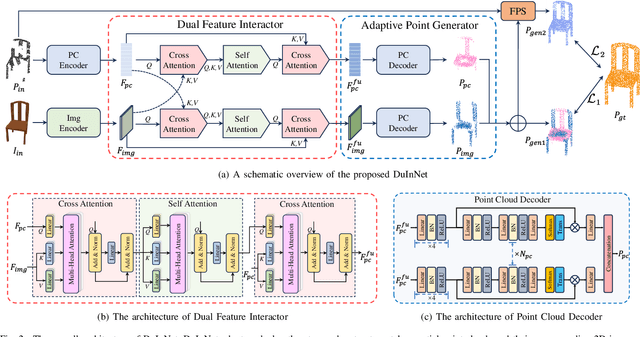

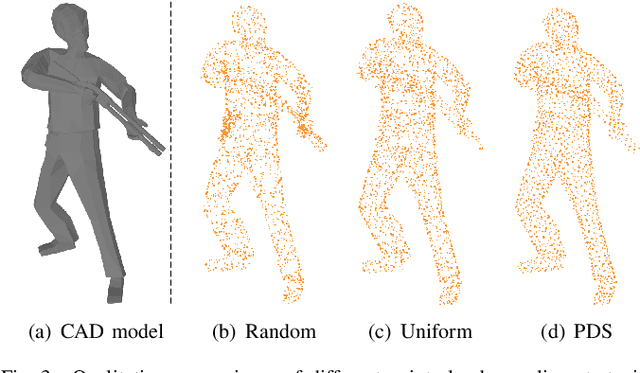

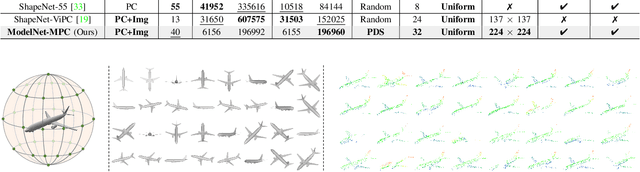

Abstract: To further promote the development of multimodal point cloud completion, we contribute a large-scale multimodal point cloud completion benchmark, ModelNet-MPC, with richer shape categories and more diverse test data. It contains nearly 400,000 pairs of high-quality point clouds and rendered images across 40 categories. Besides the fully supervised point cloud completion task, ModelNet-MPC proposes two additional tasks, denoising completion and zero-shot learning completion, to simulate real-world scenarios and to verify the robustness to noise and the cross-category transfer ability of current methods. Meanwhile, considering that existing multimodal completion pipelines usually adopt a unidirectional fusion mechanism and ignore the shape prior contained in the image modality, we propose a Dual-Modality Feature Interaction Network (DuInNet) in this paper. With its dual feature interactor, DuInNet iteratively exchanges features between point clouds and images to learn both geometric and texture characteristics of shapes. To adapt to specific tasks such as fully supervised, denoising, and zero-shot learning point cloud completion, an adaptive point generator is proposed to generate complete point clouds in blocks with different weights for the two modalities. Extensive experiments on the ShapeNet-ViPC and ModelNet-MPC benchmarks demonstrate that DuInNet exhibits superiority, robustness and transfer ability in all completion tasks over state-of-the-art methods. The code and dataset will be available soon.

Not All Points Are Equal: Learning Highly Efficient Point-based Detectors for 3D LiDAR Point Clouds

Mar 21, 2022

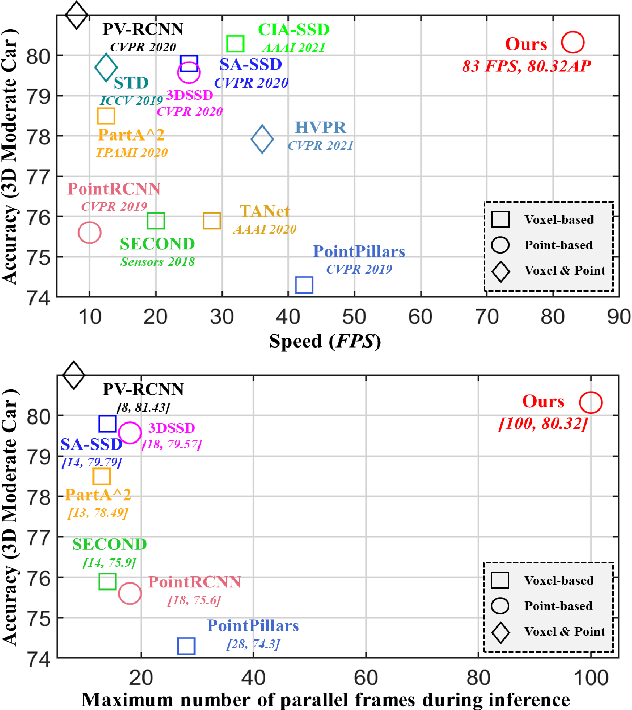

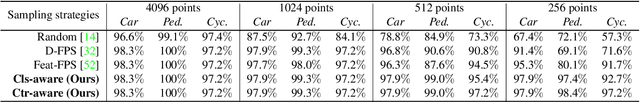

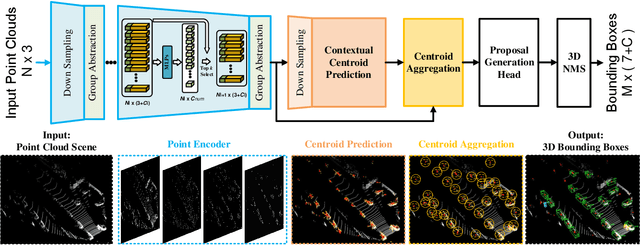

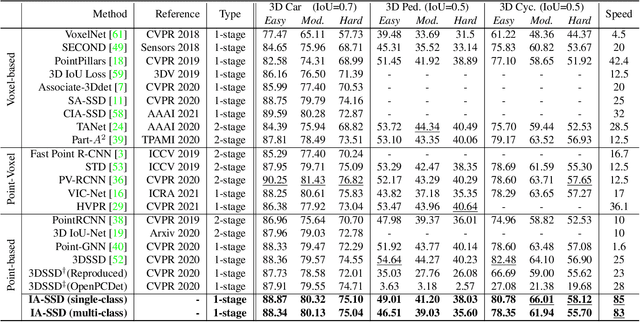

Abstract: We study the problem of efficient object detection in 3D LiDAR point clouds. To reduce the memory and computational cost, existing point-based pipelines usually adopt task-agnostic random sampling or farthest point sampling to progressively downsample input point clouds, despite the fact that not all points are equally important to the task of object detection. In particular, the foreground points are inherently more important than background points for object detectors. Motivated by this, we propose a highly efficient single-stage point-based 3D detector in this paper, termed IA-SSD. The key to our approach is to exploit two learnable, task-oriented, instance-aware downsampling strategies to hierarchically select the foreground points belonging to objects of interest. We also introduce a contextual centroid perception module to further estimate precise instance centers. Finally, we build our IA-SSD following the encoder-only architecture for efficiency. Extensive experiments conducted on several large-scale detection benchmarks demonstrate the competitive performance of our IA-SSD. Thanks to the low memory footprint and a high degree of parallelism, it achieves a superior speed of 80+ frames per second on the KITTI dataset with a single RTX2080Ti GPU. The code is available at https://github.com/yifanzhang713/IA-SSD.
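For context, the task-agnostic farthest point sampling baseline that the abstract above contrasts against can be sketched in a few lines. This is a minimal pure-Python version of the standard greedy algorithm, not code from the IA-SSD repository; the function names and the `start_index` parameter are assumptions made for the example.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def farthest_point_sampling(points, num_samples, start_index=0):
    """Greedily pick indices so each new point is farthest from those chosen."""
    num_samples = min(num_samples, len(points))
    # Distance from every point to its nearest already-selected point.
    min_dist = [float("inf")] * len(points)
    selected = []
    current = start_index
    for _ in range(num_samples):
        selected.append(current)
        for i, p in enumerate(points):
            d = squared_distance(p, points[current])
            if d < min_dist[i]:
                min_dist[i] = d
        # Next sample: the point with the largest distance to the selected set.
        current = max(range(len(points)), key=min_dist.__getitem__)
    return selected
```

Note that the selection depends only on geometry: a distant background point is as likely to be kept as a point on an object. That is the limitation the abstract points to when it argues for instance-aware downsampling.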

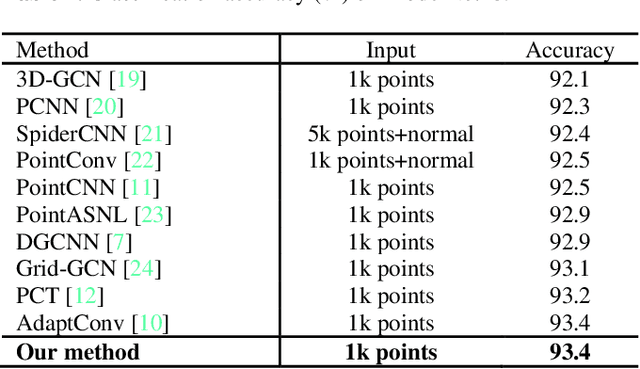

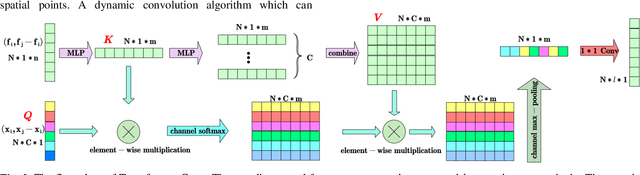

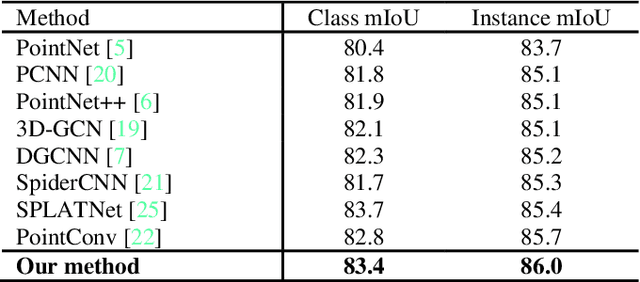

Adaptive Channel Encoding for Point Cloud Analysis

Dec 05, 2021

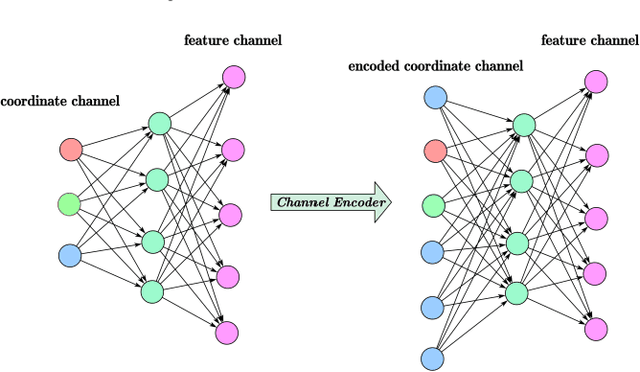

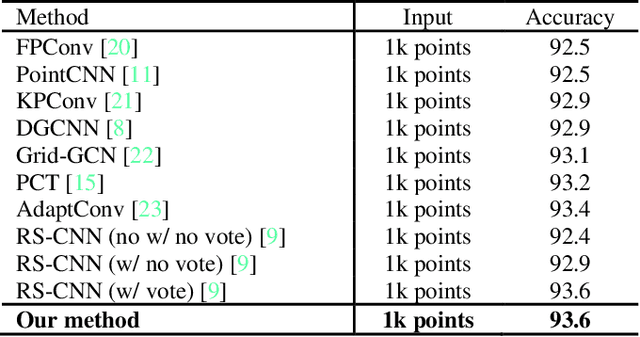

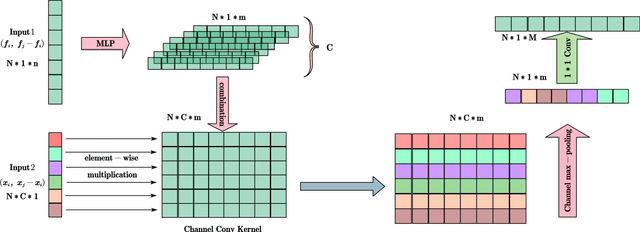

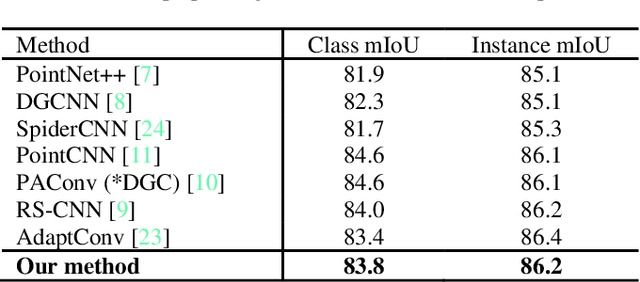

Abstract: Attention mechanisms play an increasingly important role in point cloud analysis, and channel attention is one of the most active topics. With so much channel information, it is difficult for neural networks to screen out the useful part. Thus, an adaptive channel encoding mechanism is proposed in this paper to capture channel relationships. It improves the quality of the representation generated by the network by explicitly encoding the interdependence between the channels of its features. Specifically, a channel-wise convolution (Channel-Conv) is proposed to adaptively learn the relationship between coordinates and features, so as to encode the channel. Unlike popular attention weight schemes, the Channel-Conv proposed in this paper realizes adaptability in the convolution operation itself, rather than simply assigning different weights to channels. Extensive experiments on existing benchmarks verify that our method achieves state-of-the-art performance.

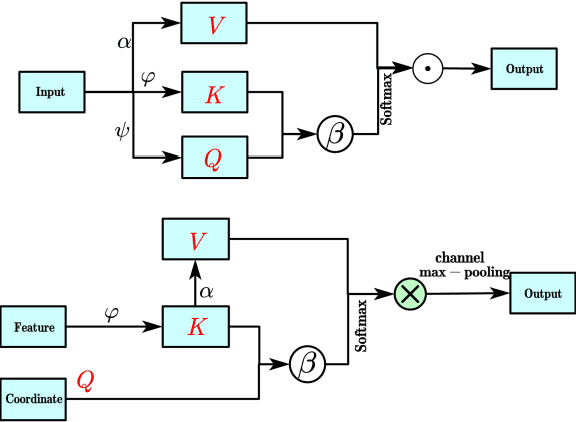

Adaptive Channel Encoding Transformer for Point Cloud Analysis

Dec 05, 2021

Abstract: Transformers play an increasingly important role in various computer vision areas, and remarkable achievements have also been made in point cloud analysis. Since existing methods mainly focus on point-wise transformers, an adaptive channel encoding transformer is proposed in this paper. Specifically, a channel convolution called Transformer-Conv is designed to encode the channel. It can encode feature channels by capturing the potential relationship between coordinates and features. Compared with simply assigning an attention weight to each channel, our method aims to encode the channel adaptively. In addition, our network adopts a neighborhood search method with low-level and high-level dual semantic receptive fields to improve performance. Extensive experiments show that our method is superior to state-of-the-art point cloud classification and segmentation methods on three benchmark datasets.

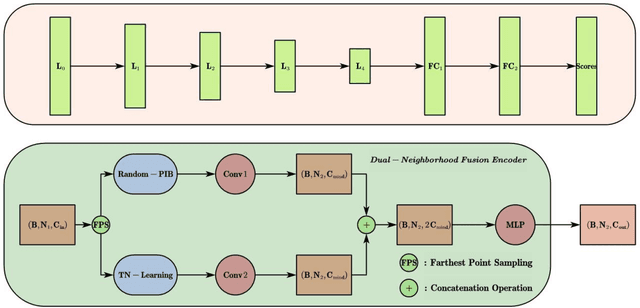

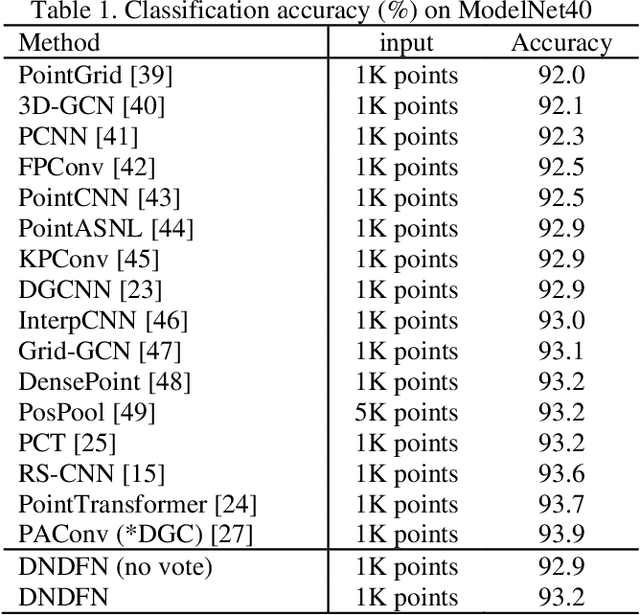

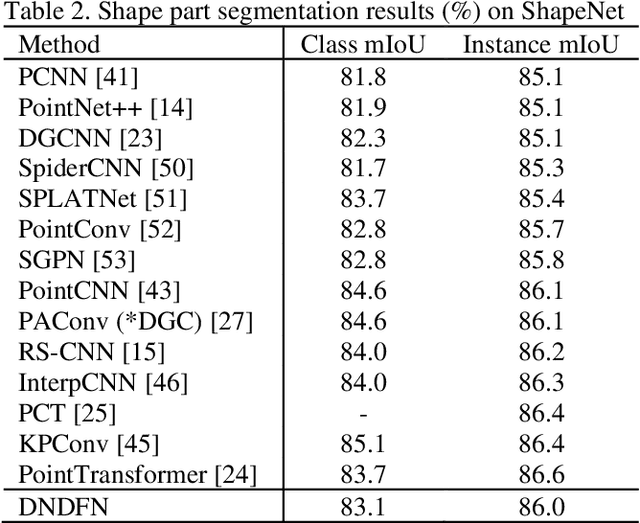

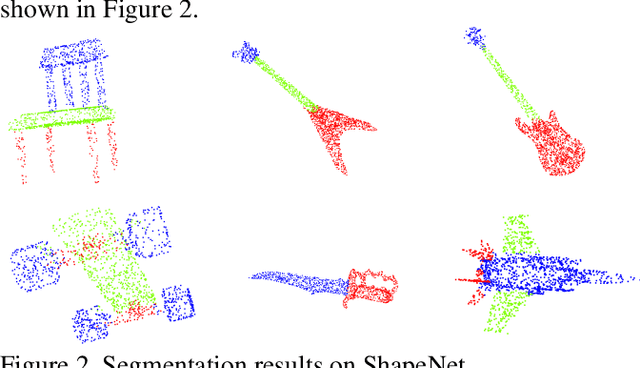

Dual-Neighborhood Deep Fusion Network for Point Cloud Analysis

Aug 20, 2021

Abstract: Convolutional neural networks have made remarkable achievements in the classification of idealized point clouds; however, non-idealized point cloud classification is still a challenging task. In this paper, DNDFN, namely the Dual-Neighborhood Deep Fusion Network, is proposed to deal with this problem. DNDFN has two key points. One is the combination of the local neighborhood and the global neighborhood. k-nearest neighbor (kNN) or ball query can capture the local neighborhood but ignores long-distance dependencies. A trainable neighborhood learning method called TN-Learning is proposed, which can capture the global neighborhood. TN-Learning is combined with them to obtain richer neighborhood information. The other is the information transfer convolution (IT-Conv), which can learn the structural information between two points and transfer features through it. Extensive experiments on idealized and non-idealized benchmarks across four tasks verify that DNDFN achieves state-of-the-art performance.
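The two local neighborhood queries named in the abstract above, kNN and ball query, can be sketched as follows. This is a brute-force illustration with hypothetical function names, not the DNDFN implementation, and it does not cover the trainable TN-Learning component.

```python
import math


def knn_indices(points, query_index, k):
    """Indices of the k points closest to points[query_index], excluding itself."""
    query = points[query_index]
    others = [i for i in range(len(points)) if i != query_index]
    others.sort(key=lambda i: math.dist(points[i], query))
    return others[:k]


def ball_query_indices(points, query_index, radius):
    """Indices of all points within `radius` of points[query_index], excluding itself."""
    query = points[query_index]
    return [
        i
        for i in range(len(points))
        if i != query_index and math.dist(points[i], query) <= radius
    ]
```

Both queries look only at spatial proximity, so a point far from the query is never returned no matter how related it is. This is the long-distance dependency gap the abstract describes.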

Rotational Projection Statistics for 3D Local Surface Description and Object Recognition

Apr 11, 2013

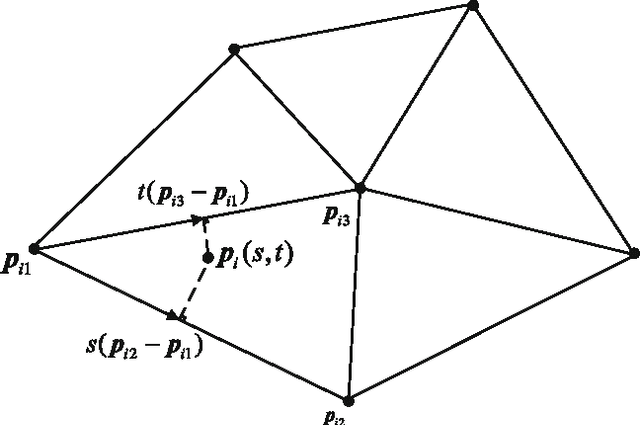

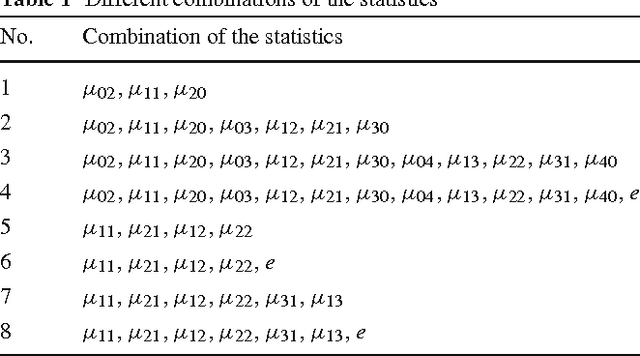

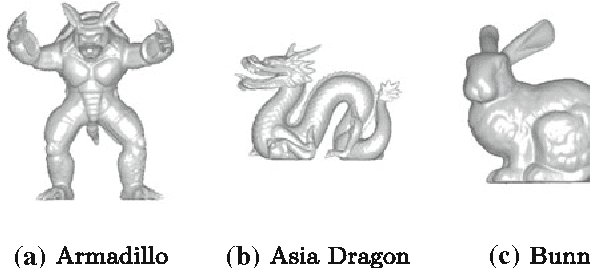

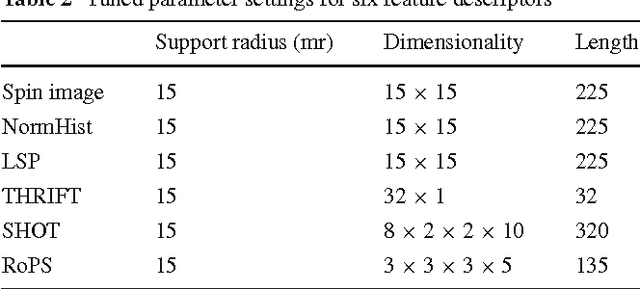

Abstract: Recognizing 3D objects in the presence of noise, varying mesh resolution, occlusion and clutter is a very challenging task. This paper presents a novel method named Rotational Projection Statistics (RoPS). It has three major modules: Local Reference Frame (LRF) definition, RoPS feature description and 3D object recognition. We propose a novel technique to define the LRF by calculating the scatter matrix of all points lying on the local surface. RoPS feature descriptors are obtained by rotationally projecting the neighboring points of a feature point onto 2D planes and calculating a set of statistics (including low-order central moments and entropy) of the distribution of these projected points. Using the proposed LRF and RoPS descriptor, we present a hierarchical 3D object recognition algorithm. The performance of the proposed LRF, RoPS descriptor and object recognition algorithm was rigorously tested on a number of popular and publicly available datasets. Our proposed techniques exhibited superior performance compared to existing techniques. We also showed that our method is robust with respect to noise and varying mesh resolution. Our RoPS based algorithm achieved recognition rates of 100%, 98.9%, 95.4% and 96.0% respectively when tested on the Bologna, UWA, Queen's and Ca' Foscari Venezia Datasets.
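The projection-and-statistics step described in the abstract above can be illustrated with a heavily simplified sketch: rotate the neighboring points about one axis, project them onto the xy-plane, bin them into a normalized 2D histogram, and compute one central moment and the Shannon entropy of that histogram. The bin count, the single rotation axis, the single projection plane, and all function names are assumptions for illustration; the LRF computation and the full descriptor layout from the paper are not reproduced here.

```python
import math


def rotate_about_x(point, angle):
    """Rotate a 3D point about the x-axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (x, y * c - z * s, y * s + z * c)


def bin_index(value, low, high, bins):
    """Map a value in [low, high] to a bin index in [0, bins - 1]."""
    if high == low:
        return 0
    return min(int((value - low) / (high - low) * bins), bins - 1)


def distribution_matrix(points_2d, bins):
    """Normalized bins x bins histogram of 2D points."""
    xs = [p[0] for p in points_2d]
    ys = [p[1] for p in points_2d]
    counts = [[0] * bins for _ in range(bins)]
    for x, y in points_2d:
        i = bin_index(x, min(xs), max(xs), bins)
        j = bin_index(y, min(ys), max(ys), bins)
        counts[i][j] += 1
    total = len(points_2d)
    return [[c / total for c in row] for row in counts]


def central_moment(dist, m, n):
    """Central moment of order (m, n) of a normalized 2D histogram."""
    i_bar = sum(i * p for i, row in enumerate(dist) for p in row)
    j_bar = sum(j * p for row in dist for j, p in enumerate(row))
    return sum(
        (i - i_bar) ** m * (j - j_bar) ** n * p
        for i, row in enumerate(dist)
        for j, p in enumerate(row)
    )


def shannon_entropy(dist):
    """Shannon entropy (natural log) of a normalized 2D histogram."""
    return -sum(p * math.log(p) for row in dist for p in row if p > 0)


def projection_statistics(points, angle, bins):
    """Rotate, project onto the xy-plane, and return (mu_11, entropy)."""
    rotated = [rotate_about_x(p, angle) for p in points]
    dist = distribution_matrix([(x, y) for x, y, _ in rotated], bins)
    return central_moment(dist, 1, 1), shannon_entropy(dist)
```

Repeating this over several rotation angles and projection planes and concatenating the resulting statistics would give a descriptor in the spirit of the one the abstract describes.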
