Jiangbei Hu

Learning Unsigned Distance Fields from Local Shape Functions for 3D Surface Reconstruction

Jul 01, 2024

Abstract:Unsigned distance fields (UDFs) provide a versatile framework for representing a diverse array of 3D shapes, encompassing both watertight and non-watertight geometries. Traditional UDF learning methods typically require extensive training on large datasets of 3D shapes, which is costly and often necessitates hyperparameter adjustments for new datasets. This paper presents a novel neural framework, LoSF-UDF, for reconstructing surfaces from 3D point clouds by leveraging local shape functions to learn UDFs. We observe that 3D shapes manifest simple patterns within localized areas, prompting us to create a training dataset of point cloud patches characterized by mathematical functions that represent a continuum from smooth surfaces to sharp edges and corners. Our approach learns features within a specific radius around each query point and utilizes an attention mechanism to focus on the crucial features for UDF estimation. This method enables efficient and robust surface reconstruction from point clouds without the need for shape-specific training. Additionally, our method exhibits enhanced resilience to noise and outliers in point clouds compared to existing methods. We present comprehensive experiments and comparisons across various datasets, including synthetic and real-scanned point clouds, to validate our method's efficacy.
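
As a rough illustration of the quantity being estimated (not the LoSF-UDF network itself), the sketch below gathers the points within a fixed radius of a query and uses the nearest one as a brute-force stand-in for the unsigned distance. The function names `local_patch` and `nearest_point_udf`, the planar test cloud, and the radius value are assumptions made for this example only.

```python
import numpy as np

def local_patch(query, points, radius):
    """Return the points of the cloud lying within `radius` of `query`."""
    dists = np.linalg.norm(points - query, axis=1)
    return points[dists <= radius]

def nearest_point_udf(query, points, radius):
    """Brute-force unsigned distance: distance to the nearest point in the local patch.

    Returns infinity when the patch is empty (query is far from the surface).
    """
    patch = local_patch(query, points, radius)
    if patch.shape[0] == 0:
        return float("inf")
    return float(np.min(np.linalg.norm(patch - query, axis=1)))

# Hypothetical test cloud: a regular grid sampled on the open surface z = 0.
xs, ys = np.meshgrid(np.linspace(-1, 1, 21), np.linspace(-1, 1, 21))
cloud = np.stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)], axis=1)

# An unsigned field has no inside/outside: both sides give the same value.
udf_above = nearest_point_udf(np.array([0.0, 0.0, 0.3]), cloud, radius=0.5)
udf_below = nearest_point_udf(np.array([0.0, 0.0, -0.3]), cloud, radius=0.5)
udf_far = nearest_point_udf(np.array([0.0, 0.0, 5.0]), cloud, radius=0.5)
```

The symmetric values on either side of the plane show why an unsigned field can describe non-watertight geometry, where a signed field would need a well-defined interior.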

GS-Octree: Octree-based 3D Gaussian Splatting for Robust Object-level 3D Reconstruction Under Strong Lighting

Jun 26, 2024

Abstract:The 3D Gaussian Splatting technique has significantly advanced the construction of radiance fields from multi-view images, enabling real-time rendering. While point-based rasterization effectively reduces computational demands for rendering, it often struggles to accurately reconstruct the geometry of the target object, especially under strong lighting. To address this challenge, we introduce a novel approach that combines octree-based implicit surface representations with Gaussian splatting. Our method consists of four stages. Initially, it reconstructs a signed distance field (SDF) and a radiance field through volume rendering, encoding them in a low-resolution octree. The initial SDF represents the coarse geometry of the target object. Subsequently, it introduces 3D Gaussians as additional degrees of freedom, which are guided by the SDF. In the third stage, the optimized Gaussians further improve the accuracy of the SDF, allowing it to recover finer geometric details compared to the initial SDF obtained in the first stage. Finally, it adopts the refined SDF to further optimize the 3D Gaussians via splatting, eliminating those that contribute little to visual appearance. Experimental results show that our method, which leverages the distribution of 3D Gaussians with SDFs, reconstructs more accurate geometry, particularly in images with specular highlights caused by strong lighting.

Topology-Aware Latent Diffusion for 3D Shape Generation

Jan 31, 2024

Abstract:We introduce a new generative model that combines latent diffusion with persistent homology to create 3D shapes with high diversity, with a special emphasis on their topological characteristics. Our method involves representing 3D shapes as implicit fields, then employing persistent homology to extract topological features, including Betti numbers and persistence diagrams. The shape generation process consists of two steps. Initially, we employ a transformer-based autoencoding module to embed the implicit representation of each 3D shape into a set of latent vectors. Subsequently, we navigate through the learned latent space via a diffusion model. By strategically incorporating topological features into the diffusion process, our generative module is able to produce a richer variety of 3D shapes with different topological structures. Furthermore, our framework is flexible, supporting generation tasks constrained by a variety of inputs, including sparse and partial point clouds, as well as sketches. By modifying the persistence diagrams, we can alter the topology of the shapes generated from these input modalities.

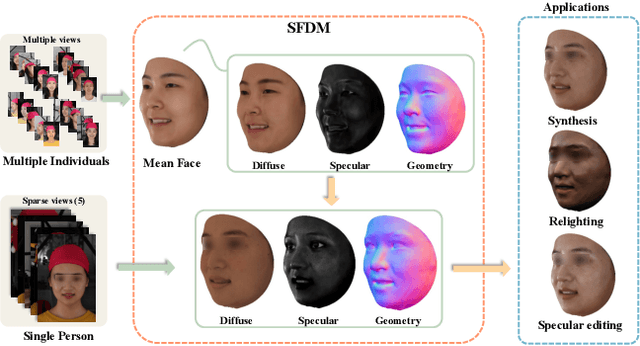

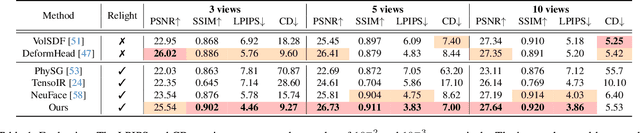

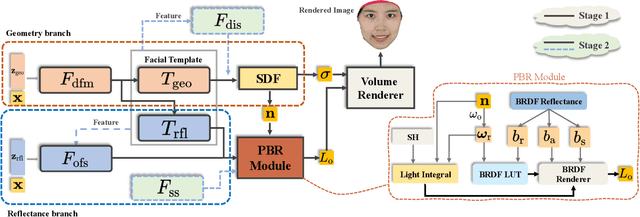

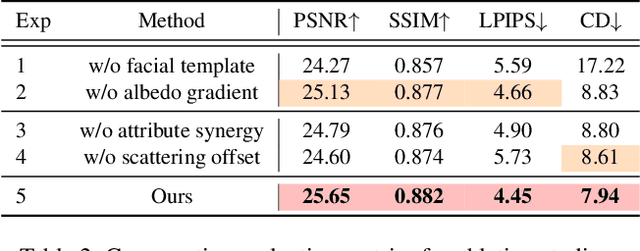

Robust Geometry and Reflectance Disentanglement for 3D Face Reconstruction from Sparse-view Images

Dec 11, 2023

Abstract:This paper presents a novel two-stage approach for reconstructing human faces from sparse-view images, a task made challenging by the unique geometry and complex skin reflectance of each individual. Our method focuses on decomposing key facial attributes, including geometry, diffuse reflectance, and specular reflectance, from ambient light. Initially, we create a general facial template from a diverse collection of individual faces, capturing essential geometric and reflectance characteristics. Guided by this template, we refine each specific face model in the second stage, which further considers the interaction between geometry and reflectance, as well as the subsurface scattering effects on facial skin. Our method enables the reconstruction of high-quality facial representations from as few as three images, offering improved geometric accuracy and reflectance detail. Through comprehensive evaluations and comparisons, our method demonstrates superiority over existing techniques. Our method effectively disentangles geometry and reflectance components, leading to enhanced quality in synthesizing new views and opening up possibilities for applications such as relighting and reflectance editing. We will make the code publicly available.

Bi-directional Deformation for Parameterization of Neural Implicit Surfaces

Oct 09, 2023

Abstract:The growing capabilities of neural rendering have increased the demand for new techniques that enable the intuitive editing of 3D objects, particularly when they are represented as neural implicit surfaces. In this paper, we present a novel neural algorithm to parameterize neural implicit surfaces to simple parametric domains, such as spheres, cubes or polycubes, where a 3D radiance field can be represented as a 2D field, thereby facilitating visualization and various editing tasks. Technically, our method computes a bi-directional deformation between 3D objects and their chosen parametric domains, eliminating the need for any prior information. We adopt a forward mapping of points on the zero level set of the 3D object to a parametric domain, followed by a backward mapping through inverse deformation. To ensure the map is bijective, we employ a cycle loss while optimizing the smoothness of both deformations. Additionally, we leverage a Laplacian regularizer to effectively control angle distortion and offer the flexibility to choose from a range of parametric domains for managing area distortion. Designed for compatibility, our framework integrates seamlessly with existing neural rendering pipelines, taking multi-view images as input to reconstruct 3D geometry and compute the corresponding texture map. We also introduce a simple yet effective technique for intrinsic radiance decomposition, facilitating both view-independent material editing and view-dependent shading editing. Our method allows for the immediate rendering of edited textures through volume rendering, without the need for network re-training. Moreover, our approach supports the co-parameterization of multiple objects and enables texture transfer between them. We demonstrate the effectiveness of our method on images of human heads and man-made objects. We will make the source code publicly available.
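
The cycle loss mentioned above can be sketched in a few lines, under the assumption that it takes the common form of a mean squared round-trip error; the abstract does not state the exact formulation. In this example the analytic maps between an ellipsoid and the unit sphere stand in for the learned forward and backward deformations, and all names (`cycle_loss`, `forward`, `exact_inverse`, `poor_inverse`) are hypothetical.

```python
import numpy as np

def cycle_loss(x, forward, backward):
    """Mean squared round-trip error ||backward(forward(x)) - x||^2 over all points."""
    x_cycle = backward(forward(x))
    return float(np.mean(np.sum((x_cycle - x) ** 2, axis=1)))

# Hypothetical surface: points on an ellipsoid with semi-axes (2, 1, 0.5).
axes = np.array([2.0, 1.0, 0.5])
rng = np.random.default_rng(0)
dirs = rng.normal(size=(256, 3))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
surface = dirs * axes

# Stand-ins for the learned deformations (assumed, not the paper's networks).
forward = lambda p: p / axes        # ellipsoid -> unit sphere (parametric domain)
exact_inverse = lambda q: q * axes  # unit sphere -> ellipsoid
poor_inverse = lambda q: q          # a backward map that ignores the deformation

loss_exact = cycle_loss(surface, forward, exact_inverse)
loss_poor = cycle_loss(surface, forward, poor_inverse)
```

A round-trip error near zero indicates that the backward map undoes the forward map on the sampled surface points, which is the property such a loss encourages during optimization.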
