Jian-Qiao Zhu

Large Language Models Can Take False First Steps at Inference-time Planning

Feb 03, 2026

Abstract: Large language models (LLMs) have been shown to acquire sequence-level planning abilities during training, yet their planning behavior exhibited at inference time often appears short-sighted and inconsistent with these capabilities. We propose a Bayesian account for this gap by grounding planning behavior in the evolving generative context: given the subtle differences between natural language and the language internalized by LLMs, accumulated self-generated context drives a planning-shift during inference and thereby creates the appearance of compromised planning behavior. We further validate the proposed model through two controlled experiments: a random-generation task demonstrating constrained planning under human prompts and increasing planning strength as self-generated context accumulates, and a Gaussian-sampling task showing reduced initial bias when conditioning on self-generated sequences. These findings provide a theoretical explanation along with empirical evidence for characterizing how LLMs plan ahead during inference.

Eliciting Trustworthiness Priors of Large Language Models via Economic Games

Jan 31, 2026

Abstract: One critical aspect of building human-centered, trustworthy artificial intelligence (AI) systems is maintaining calibrated trust: appropriate reliance on AI systems outperforms both overtrust (e.g., automation bias) and undertrust (e.g., disuse). A fundamental challenge, however, is how to characterize the level of trust exhibited by an AI system itself. Here, we propose a novel elicitation method based on iterated in-context learning (Zhu and Griffiths, 2024a) and apply it to elicit trustworthiness priors using the Trust Game from behavioral game theory. The Trust Game is particularly well suited for this purpose because it operationalizes trust as voluntary exposure to risk based on beliefs about another agent, rather than self-reported attitudes. Using our method, we elicit trustworthiness priors from several leading large language models (LLMs) and find that GPT-4.1's trustworthiness priors closely track those observed in humans. Building on this result, we further examine how GPT-4.1 responds to different player personas in the Trust Game, providing an initial characterization of how such models differentiate trust across agent characteristics. Finally, we show that variation in elicited trustworthiness can be well predicted by a stereotype-based model grounded in perceived warmth and competence.

Simulated Annealing Enhances Theory-of-Mind Reasoning in Autoregressive Language Models

Jan 18, 2026

Abstract: Autoregressive language models are next-token predictors and have been criticized for only optimizing surface plausibility (i.e., local coherence) rather than maintaining correct latent-state representations (i.e., global coherence). Because Theory of Mind (ToM) tasks crucially depend on reasoning about latent mental states of oneself and others, such models are therefore often thought to fail at ToM. While post-training methods can improve ToM performance, we show that strong ToM capability can be recovered directly from the base model without any additional weight updates or verifications. Our approach builds on recent power-sampling methods (Karan & Du, 2025) that use Markov chain Monte Carlo (MCMC) to sample from sharpened sequence-level (rather than token-level) probability distributions of autoregressive language models. We further find that incorporating annealing, where the tempered distribution is gradually shifted from high to low temperature, substantially improves ToM performance over fixed-temperature power sampling. Together, these results suggest that sampling-based optimization provides a powerful way to extract latent capabilities from language models without retraining.
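
The difference between sequence-level and token-level sharpening can be seen in a toy model small enough to enumerate. The sketch below is illustrative only and is not the paper's method: it replaces MCMC with exact enumeration, and the two-token model, its probabilities, and the names `sequence_prob` and `sharpened` are assumptions made for this example. It computes the power distribution p(x)^alpha over whole sequences along a short annealing schedule, where alpha plays the role of inverse temperature.

```python
from itertools import product

# Hypothetical toy autoregressive model over two tokens drawn from {"a", "b"}.
P_FIRST = {"a": 0.6, "b": 0.4}
P_SECOND = {"a": {"a": 0.5, "b": 0.5}, "b": {"a": 0.9, "b": 0.1}}


def sequence_prob(seq):
    """Joint probability of a two-token sequence under the toy model."""
    first, second = seq
    return P_FIRST[first] * P_SECOND[first][second]


def sharpened(alpha):
    """Sequence-level power distribution: p(x)^alpha, renormalized by enumeration."""
    weights = {seq: sequence_prob(seq) ** alpha for seq in product("ab", repeat=2)}
    z = sum(weights.values())
    return {seq: w / z for seq, w in weights.items()}


# Annealing schedule: alpha (inverse temperature) grows as the temperature drops.
for alpha in (1.0, 2.0, 4.0):
    dist = sharpened(alpha)
    best = max(dist, key=dist.get)
    print(alpha, best, round(dist[best], 3))
```

In this toy model the most probable first token is "a", yet the most probable full sequence starts with "b", so sharpening at the sequence level concentrates mass on a sequence that greedy token-level decoding would miss.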

Using Reinforcement Learning to Train Large Language Models to Explain Human Decisions

May 16, 2025

Abstract: A central goal of cognitive modeling is to develop models that not only predict human behavior but also provide insight into the underlying cognitive mechanisms. While neural network models trained on large-scale behavioral data often achieve strong predictive performance, they typically fall short in offering interpretable explanations of the cognitive processes they capture. In this work, we explore the potential of pretrained large language models (LLMs) to serve as dual-purpose cognitive models--capable of both accurate prediction and interpretable explanation in natural language. Specifically, we employ reinforcement learning with outcome-based rewards to guide LLMs toward generating explicit reasoning traces for explaining human risky choices. Our findings demonstrate that this approach produces high-quality explanations alongside strong quantitative predictions of human decisions.

Steering Risk Preferences in Large Language Models by Aligning Behavioral and Neural Representations

May 16, 2025

Abstract: Changing the behavior of large language models (LLMs) can be as straightforward as editing the Transformer's residual streams using appropriately constructed "steering vectors." These modifications to internal neural activations, a form of representation engineering, offer an effective and targeted means of influencing model behavior without retraining or fine-tuning the model. But how can such steering vectors be systematically identified? We propose a principled approach for uncovering steering vectors by aligning latent representations elicited through behavioral methods (specifically, Markov chain Monte Carlo with LLMs) with their neural counterparts. To evaluate this approach, we focus on extracting latent risk preferences from LLMs and steering their risk-related outputs using the aligned representations as steering vectors. We show that the resulting steering vectors successfully and reliably modulate LLM outputs in line with the targeted behavior.

Recovering Event Probabilities from Large Language Model Embeddings via Axiomatic Constraints

May 10, 2025

Abstract: Rational decision-making under uncertainty requires coherent degrees of belief in events. However, event probabilities generated by Large Language Models (LLMs) have been shown to exhibit incoherence, violating the axioms of probability theory. This raises the question of whether coherent event probabilities can be recovered from the embeddings used by the models. If so, those derived probabilities could be used as more accurate estimates in events involving uncertainty. To explore this question, we propose enforcing axiomatic constraints, such as the additive rule of probability theory, in the latent space learned by an extended variational autoencoder (VAE) applied to LLM embeddings. This approach enables event probabilities to naturally emerge in the latent space as the VAE learns to both reconstruct the original embeddings and predict the embeddings of semantically related events. We evaluate our method on complementary events (i.e., event A and its complement, event not-A), where the true probabilities of the two events must sum to 1. Experimental results on open-weight language models demonstrate that probabilities recovered from embeddings exhibit greater coherence than those directly reported by the corresponding models and align closely with the true probabilities.
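
The complementarity constraint used in the evaluation, P(A) + P(not-A) = 1, is simple to state in code. The sketch below is only an illustration of what "incoherence" means for a pair of judgments; it is not the VAE-based method described in the abstract. The function names, the renormalization baseline, and the example values are all assumptions introduced here.

```python
def incoherence(p_event, p_complement):
    """Absolute violation of the constraint P(A) + P(not-A) = 1."""
    return abs(p_event + p_complement - 1.0)


def renormalize(p_event, p_complement):
    """Rescale a pair of judgments to sum to 1 (a naive baseline, not the VAE method)."""
    total = p_event + p_complement
    if total <= 0:
        raise ValueError("at least one probability must be positive")
    return p_event / total, p_complement / total


# Hypothetical judgments for an event and its complement.
p_a, p_not_a = 0.7, 0.5
print("incoherence before:", incoherence(p_a, p_not_a))
fixed = renormalize(p_a, p_not_a)
print("renormalized pair:", fixed)
print("incoherence after:", incoherence(*fixed))
```

Renormalization restores coherence by construction but says nothing about accuracy, which is why the abstract also reports how closely the recovered probabilities match the true ones.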

Using the Tools of Cognitive Science to Understand Large Language Models at Different Levels of Analysis

Mar 17, 2025

Abstract: Modern artificial intelligence systems, such as large language models, are increasingly powerful but also increasingly hard to understand. Recognizing this problem as analogous to the historical difficulties in understanding the human mind, we argue that methods developed in cognitive science can be useful for understanding large language models. We propose a framework for applying these methods based on Marr's three levels of analysis. By revisiting established cognitive science techniques relevant to each level and illustrating their potential to yield insights into the behavior and internal organization of large language models, we aim to provide a toolkit for making sense of these new kinds of minds.

Capturing the Complexity of Human Strategic Decision-Making with Machine Learning

Aug 15, 2024

Abstract: Understanding how people behave in strategic settings--where they make decisions based on their expectations about the behavior of others--is a long-standing problem in the behavioral sciences. We conduct the largest study to date of strategic decision-making in the context of initial play in two-player matrix games, analyzing over 90,000 human decisions across more than 2,400 procedurally generated games that span a much wider space than previous datasets. We show that a deep neural network trained on these data predicts people's choices better than leading theories of strategic behavior, indicating that there is systematic variation that is not explained by those theories. We then modify the network to produce a new, interpretable behavioral model, revealing what the original network learned about people: their ability to optimally respond and their capacity to reason about others are dependent on the complexity of individual games. This context-dependence is critical in explaining deviations from the rational Nash equilibrium, response times, and uncertainty in strategic decisions. More broadly, our results demonstrate how machine learning can be applied beyond prediction to further help generate novel explanations of complex human behavior.

Eliciting the Priors of Large Language Models using Iterated In-Context Learning

Jun 04, 2024

Abstract: As Large Language Models (LLMs) are increasingly deployed in real-world settings, understanding the knowledge they implicitly use when making decisions is critical. One way to capture this knowledge is in the form of Bayesian prior distributions. We develop a prompt-based workflow for eliciting prior distributions from LLMs. Our approach is based on iterated learning, a Markov chain Monte Carlo method in which successive inferences are chained in a way that supports sampling from the prior distribution. We validated our method in settings where iterated learning has previously been used to estimate the priors of human participants -- causal learning, proportion estimation, and predicting everyday quantities. We found that priors elicited from GPT-4 qualitatively align with human priors in these settings. We then used the same method to elicit priors from GPT-4 for a variety of speculative events, such as the timing of the development of superhuman AI.
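
The claim that chained inferences sample from the prior can be illustrated with a toy Bayesian learner standing in for the LLM. This is a minimal sketch, not the paper's prompt-based workflow: the Beta-Binomial learner, the function name `iterated_learning`, and every parameter value are assumptions chosen for illustration. Each iteration draws data from the current hypothesis, then draws a new hypothesis from the posterior given those data; the resulting chain has the learner's prior as its stationary distribution.

```python
import random


def iterated_learning(a, b, n_obs, n_iters, seed=0):
    """Run a toy iterated-learning chain for a learner with a Beta(a, b) prior."""
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting hypothesis
    samples = []
    for _ in range(n_iters):
        # Generate data from the current hypothesis: n_obs Bernoulli(theta) trials.
        successes = sum(rng.random() < theta for _ in range(n_obs))
        # The next learner samples a hypothesis from its posterior given those data.
        theta = rng.betavariate(a + successes, b + n_obs - successes)
        samples.append(theta)
    return samples


samples = iterated_learning(a=2.0, b=5.0, n_obs=5, n_iters=20000)
kept = samples[1000:]  # discard early iterations influenced by the start value
chain_mean = sum(kept) / len(kept)
prior_mean = 2.0 / (2.0 + 5.0)
print(f"chain mean {chain_mean:.3f} vs prior mean {prior_mean:.3f}")
```

With an LLM in place of the toy learner, the posterior-sampling step would be an in-context prediction, and the distribution of hypotheses across iterations would be read as an estimate of the model's implicit prior.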

Language Models Trained to do Arithmetic Predict Human Risky and Intertemporal Choice

May 29, 2024

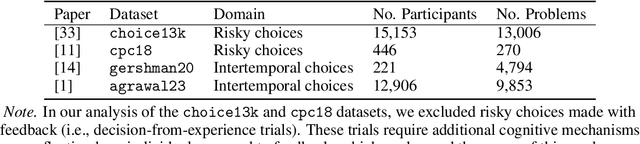

Abstract: The observed similarities in the behavior of humans and Large Language Models (LLMs) have prompted researchers to consider the potential of using LLMs as models of human cognition. However, several significant challenges must be addressed before LLMs can be legitimately regarded as cognitive models. For instance, LLMs are trained on far more data than humans typically encounter, and may have been directly trained on human data in specific cognitive tasks or aligned with human preferences. Consequently, the origins of these behavioral similarities are not well understood. In this paper, we propose a novel way to enhance the utility of LLMs as cognitive models. This approach involves (i) leveraging computationally equivalent tasks that both an LLM and a rational agent need to master for solving a cognitive problem and (ii) examining the specific task distributions required for an LLM to exhibit human-like behaviors. We apply this approach to decision-making -- specifically risky and intertemporal choice -- where the key computationally equivalent task is the arithmetic of expected value calculations. We show that an LLM pretrained on an ecologically valid arithmetic dataset, which we call Arithmetic-GPT, predicts human behavior better than many traditional cognitive models. Pretraining LLMs on ecologically valid arithmetic datasets is sufficient to produce a strong correspondence between these models and human decision-making. Our results also suggest that LLMs used as cognitive models should be carefully investigated via ablation studies of the pretraining data.
