Jiahong Wang

Neural Modes: Self-supervised Learning of Nonlinear Modal Subspaces

Apr 26, 2024

Abstract: We propose a self-supervised approach for learning physics-based subspaces for real-time simulation. Existing learning-based methods construct subspaces by approximating pre-defined simulation data in a purely geometric way. However, this approach tends to produce high-energy configurations, leads to entangled latent space dimensions, and generalizes poorly beyond the training set. To overcome these limitations, we propose a self-supervised approach that directly minimizes the system's mechanical energy during training. We show that our method leads to learned subspaces that reflect physical equilibrium constraints, resolve overfitting issues of previous methods, and offer interpretable latent space parameters.

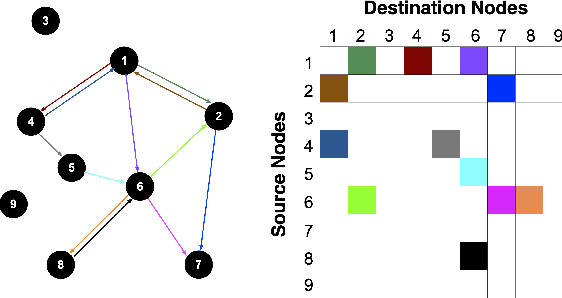

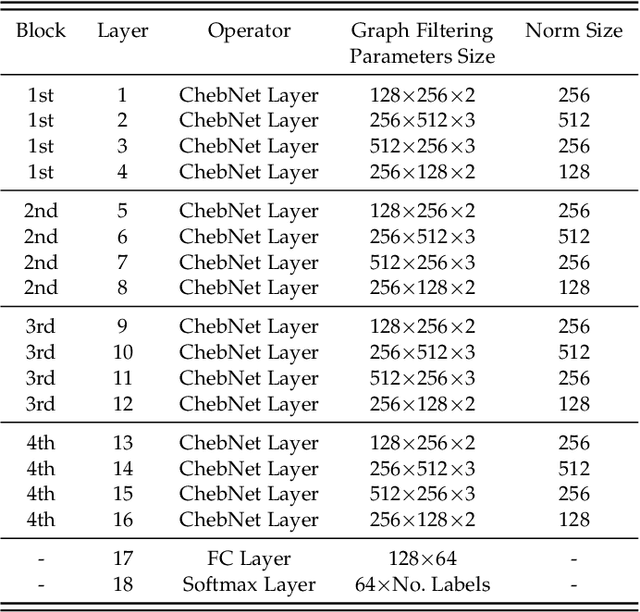

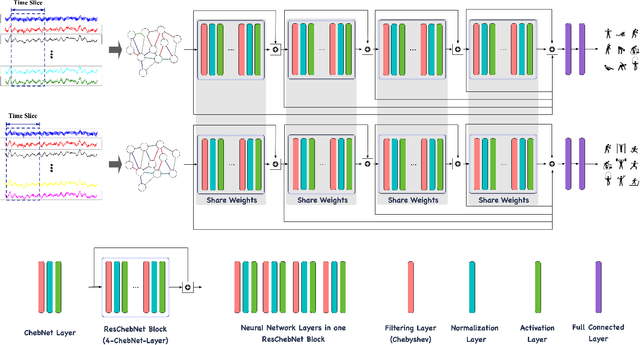

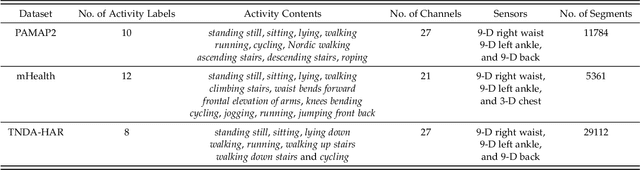

Deep Transfer Learning with Graph Neural Network for Sensor-Based Human Activity Recognition

Mar 14, 2022

Abstract: Sensor-based human activity recognition (HAR) in mobile application scenarios often faces two problems: variation in sensor modalities and a shortage of annotated data. To address them, we devise a graph-inspired deep learning approach to sensor-based HAR and use it to build a deep transfer learning model as a tentative solution to both problems. Specifically, we present a graph convolutional neural network with a multi-layer residual structure (ResGCNN) for sensor-based HAR tasks, which we call the HAR-ResGCNN approach. Experimental results on the PAMAP2 and mHealth data sets demonstrate that ResGCNN is effective at capturing the characteristics of actions, with results comparable to other sensor-based HAR models (average accuracy of 98.18% and 99.07%, respectively). More importantly, deep transfer learning experiments using the ResGCNN model show excellent transferability and few-shot learning performance. The graph-based framework shows good meta-learning ability and is expected to be a promising solution for sensor-based HAR tasks.

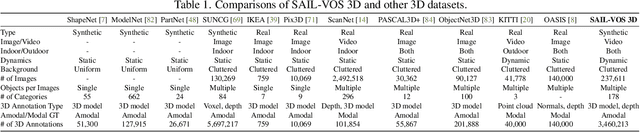

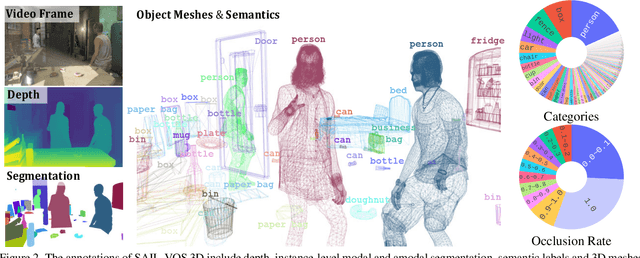

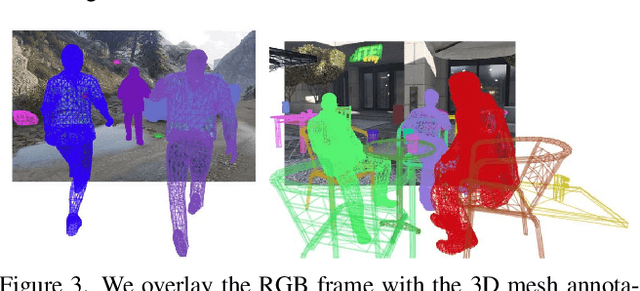

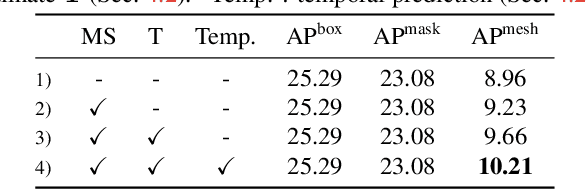

SAIL-VOS 3D: A Synthetic Dataset and Baselines for Object Detection and 3D Mesh Reconstruction from Video Data

May 18, 2021

Abstract: Extracting detailed 3D information about objects from video data is an important goal for holistic scene understanding. While recent methods have shown impressive results when reconstructing meshes of objects from a single image, results often remain ambiguous because part of the object is unobserved. Moreover, existing image-based datasets for mesh reconstruction do not permit the study of models that integrate temporal information. To alleviate both concerns, we present SAIL-VOS 3D, a synthetic video dataset with frame-by-frame mesh annotations that extends SAIL-VOS. We also develop the first baselines for reconstructing 3D meshes from video data via temporal models. We demonstrate the efficacy of the proposed baseline on SAIL-VOS 3D and Pix3D, showing that temporal information improves reconstruction quality. Resources and additional information are available at http://sailvos.web.illinois.edu.

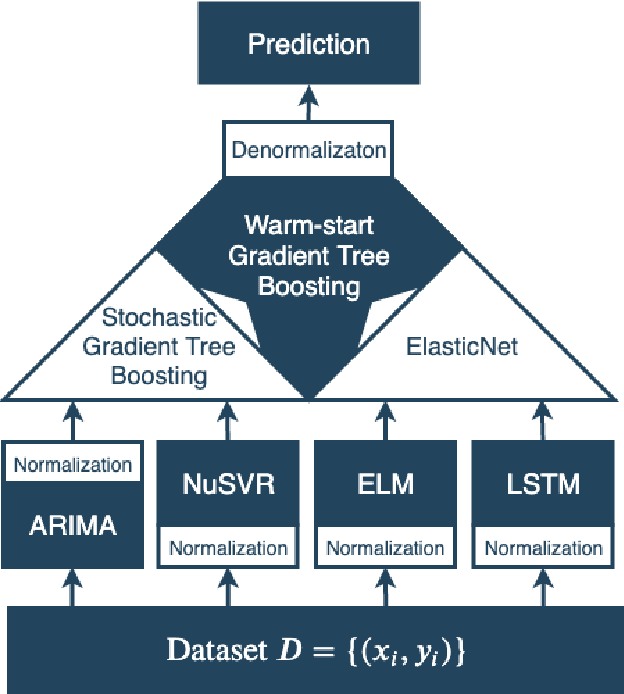

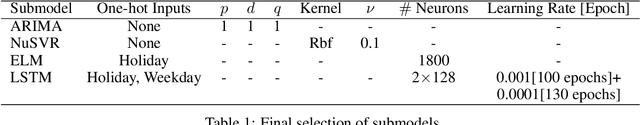

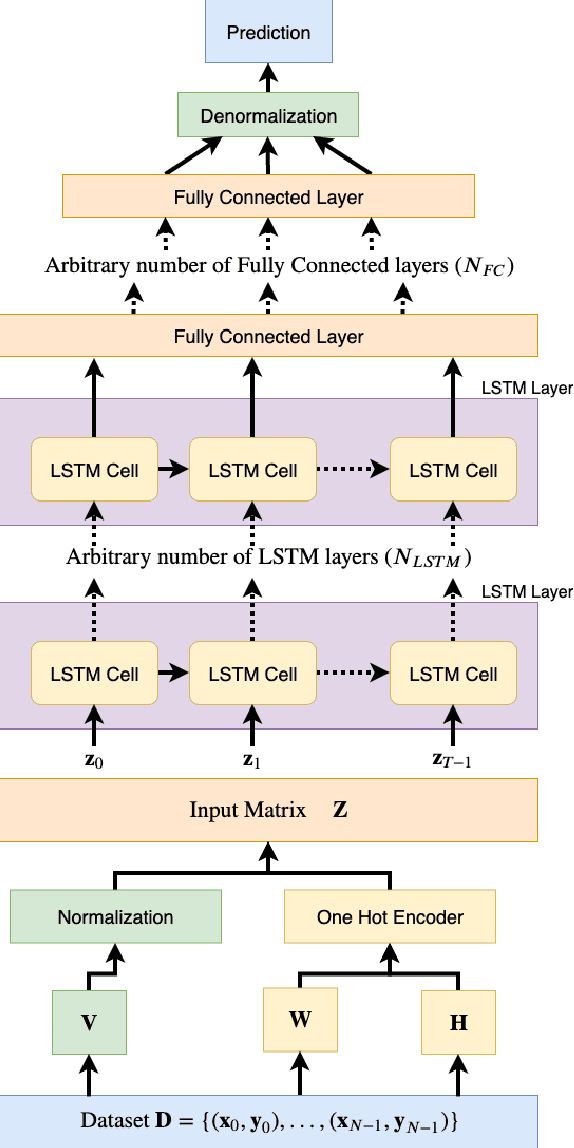

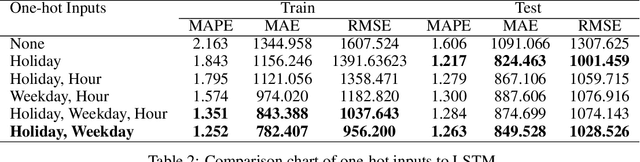

Short-term Load Forecasting Based on Hybrid Strategy Using Warm-start Gradient Tree Boosting

May 23, 2020

Abstract: A deep-learning-based hybrid strategy for short-term load forecasting is presented. The strategy introduces a novel tree-based ensemble method, Warm-start Gradient Tree Boosting (WGTB). Current strategies either ensemble submodels of a single type, which fails to take advantage of the statistical strengths of different inference models, or simply sum the outputs of completely different inference models, which does not realize the full potential of the ensemble. WGTB is therefore tailored to the great disparity among different inference models in accuracy, volatility, and linearity. The complete strategy integrates four inference models, both linear and nonlinear: auto-regressive integrated moving average, nu support vector regression, extreme learning machine, and long short-term memory neural network. WGTB then ensembles their outputs by hybridizing the linear estimator ElasticNet and the nonlinear estimator ExtraTree via a boosting algorithm. It is validated on real historical data, at hourly resolution, from a grid of the State Grid Corporation of China. The results demonstrate the effectiveness of the proposed strategy, which hybridizes the statistical strengths of both linear and nonlinear inference models.
