Tianzheng Liao

Deep Transfer Learning with Graph Neural Network for Sensor-Based Human Activity Recognition

Mar 14, 2022

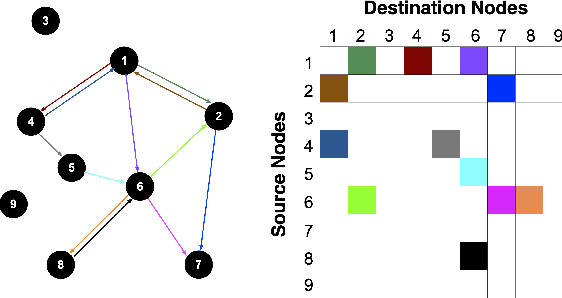

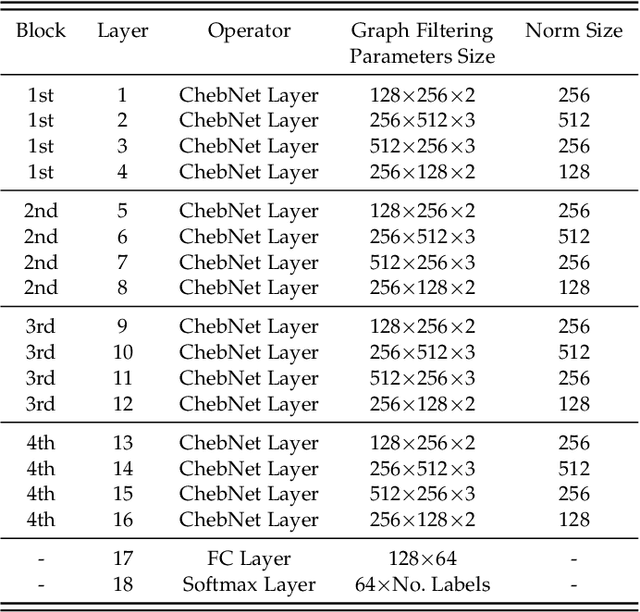

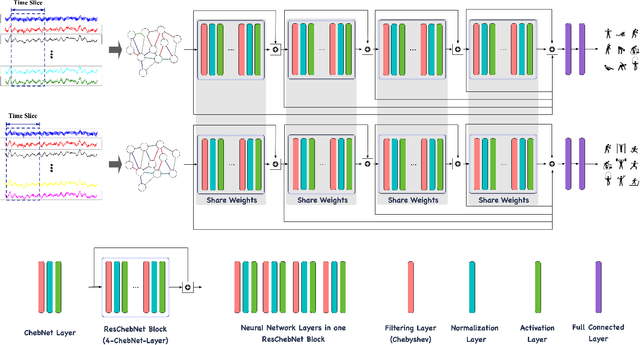

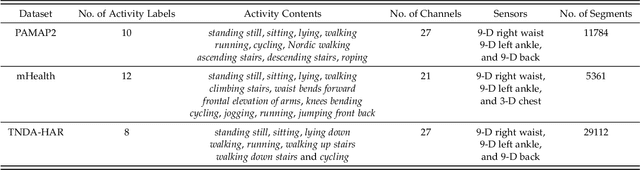

Abstract: Sensor-based human activity recognition (HAR) in mobile application scenarios often faces two challenges: variation in sensor modalities and a shortage of annotated data. To address them, we devise a graph-inspired deep learning approach to sensor-based HAR and use it to build a deep transfer learning model as a tentative solution to both problems. Specifically, we present a graph convolutional neural network with a multi-layer residual structure (ResGCNN) for sensor-based HAR tasks, which we call the HAR-ResGCNN approach. Experimental results on the PAMAP2 and mHealth data sets show that ResGCNN captures the characteristics of actions effectively and achieves results comparable to other sensor-based HAR models (average accuracy of 98.18% and 99.07%, respectively). More importantly, deep transfer learning experiments with the ResGCNN model show excellent transferability and few-shot learning performance. The graph-based framework shows good meta-learning ability and is expected to be a promising solution for sensor-based HAR tasks.
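To make the idea of a residual graph convolution concrete, here is a minimal sketch in plain Python. It is not the authors' ResGCNN: the abstract does not specify the layer form, so the symmetric adjacency normalization, the update rule H' = H + ReLU(A_norm H W), the function names, and the toy three-node graph are all assumptions chosen for illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}; self-loops keep every degree nonzero."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def res_gcn_layer(h, a_norm, w):
    """One residual graph convolution (assumed form): H' = H + ReLU(A_norm H W)."""
    z = matmul(matmul(a_norm, h), w)
    return [[hv + max(zv, 0.0) for hv, zv in zip(h_row, z_row)]
            for h_row, z_row in zip(h, z)]

def res_gcn_forward(x, adj, weights):
    """Stack residual layers; each w must be square so the skip connection adds up."""
    a_norm = normalize_adjacency(adj)
    h = x
    for w in weights:
        h = res_gcn_layer(h, a_norm, w)
    return h

# Hypothetical toy input: 3 fully connected nodes, 2 features per node.
adj = [[0.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
x = [[1.0, 2.0], [0.5, -1.0], [0.0, 3.0]]
identity_w = [[1.0, 0.0], [0.0, 1.0]]
zero_w = [[0.0, 0.0], [0.0, 0.0]]

out = res_gcn_forward(x, adj, [identity_w, identity_w])
passthrough = res_gcn_forward(x, adj, [zero_w, zero_w])
```

With all-zero weights the convolution branch contributes nothing and the input passes through unchanged, which is the property that lets residual stacks grow deeper without losing the original signal.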
