Jeroen Famaey

Cross-layer Integrated Sensing and Communication: A Joint Industrial and Academic Perspective

May 16, 2025

Abstract: Integrated sensing and communication (ISAC) enables radio systems to sense their environment and communicate simultaneously. This paper, developed within the Hexa-X-II project funded by the European Union, presents a comprehensive cross-layer vision for ISAC in 6G networks, integrating insights from physical-layer design, hardware architectures, AI-driven intelligence, and protocol-level innovations. We begin by revisiting the foundational principles of ISAC, highlighting synergies and trade-offs between sensing and communication across different integration levels. Enabling technologies, such as multiband operation, massive and distributed MIMO, non-terrestrial networks, reconfigurable intelligent surfaces, and machine learning, are analyzed in conjunction with hardware considerations including waveform design, synchronization, and full-duplex operation. To bridge implementation and system-level evaluation, we introduce a quantitative cross-layer framework linking design parameters to key performance and value indicators. By synthesizing perspectives from both academia and industry, this paper outlines how deeply integrated ISAC can transform 6G into a programmable and context-aware platform supporting applications from reliable wireless access to autonomous mobility and digital twinning.
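The abstract does not spell out which parameter-to-KPI mappings the framework contains, so the following is only an assumed, textbook example of such a link: the classical radar range resolution Delta_R = c / (2B), which ties the signal bandwidth B to the smallest range difference a sensing receiver can separate. The bandwidth values in the demo are hypothetical.

```python
# Sketch: classical radar range resolution, Delta_R = c / (2 * B).
# The bandwidths in the demo are hypothetical examples, not values from the paper.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest resolvable range difference (in metres) for a given signal bandwidth."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # e.g., a single carrier versus wider bandwidth aggregated across bands
    for bandwidth in (100e6, 400e6, 2e9):
        print(f"B = {bandwidth / 1e6:6.0f} MHz -> {range_resolution_m(bandwidth) * 100:6.1f} cm")
```

Because Delta_R scales as 1/B, aggregating bandwidth (for instance via multiband operation) sharpens range resolution, while that same spectrum must also carry the communication link; this is one concrete form of the sensing/communication trade-off the abstract refers to.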

Multi-Stream Transmission in Cell-Free MIMO Networks with Coherent AP Clustering

Apr 14, 2025

Abstract: This letter proposes a multi-stream selection framework for cell-free multiple-input multiple-output (CF-MIMO) networks. Partially coherent transmission is considered by clustering access points (APs) into phase-aligned clusters to address the challenges of phase misalignment and inter-cluster interference. A novel stream selection algorithm is developed to dynamically allocate multiple streams to each multi-antenna user equipment (UE), maximizing the system sum rate while minimizing inter-cluster and inter-stream interference. Numerical results validate the effectiveness of the proposed method in enhancing spectral efficiency and fairness in distributed CF-MIMO networks.

Generating Realistic Synthetic Head Rotation Data for Extended Reality using Deep Learning

Jan 15, 2025

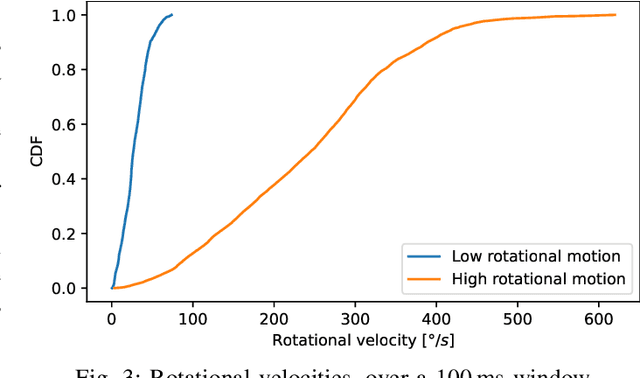

Abstract: Extended Reality is a revolutionary method of delivering multimedia content to users. A large contributor to its popularity is the sense of immersion and interactivity enabled by having real-world motion reflected in the virtual experience accurately and immediately. This user motion, mainly caused by head rotations, introduces several technical challenges. For instance, which content is generated and transmitted depends heavily on where the user is looking. Seamless systems that proactively account for user motion will therefore require accurate predictions of upcoming rotations. Training and evaluating such predictors requires vast amounts of orientation data, which is expensive to gather, as it requires human test subjects. A more feasible approach is to gather a modest dataset through test subjects and then extend it to a more sizeable set using synthetic data generation methods. In this work, we present a head rotation time series generator based on TimeGAN, an extension of the well-known Generative Adversarial Network (GAN) designed specifically for generating time series. This approach can extend a dataset of head rotations with new samples closely matching the distribution of the measured time series.

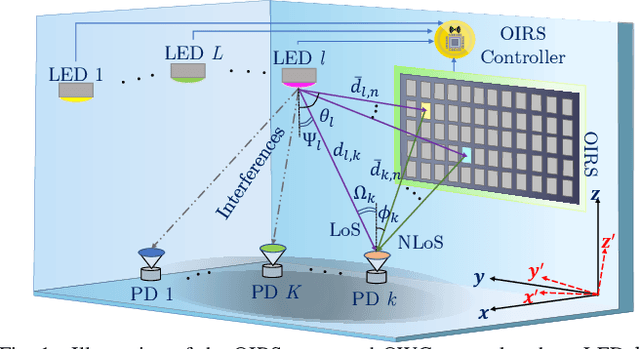

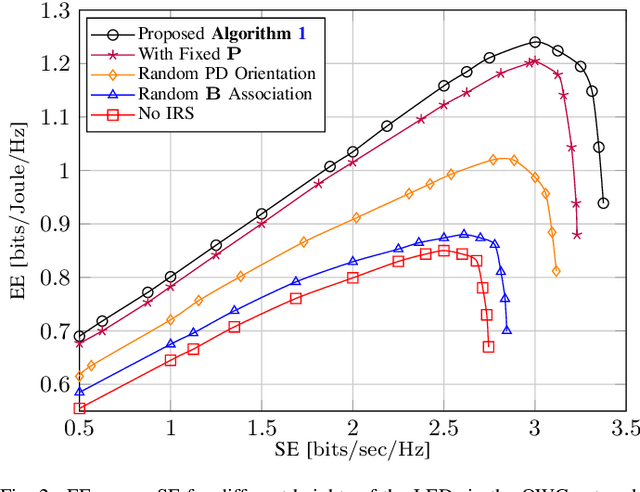

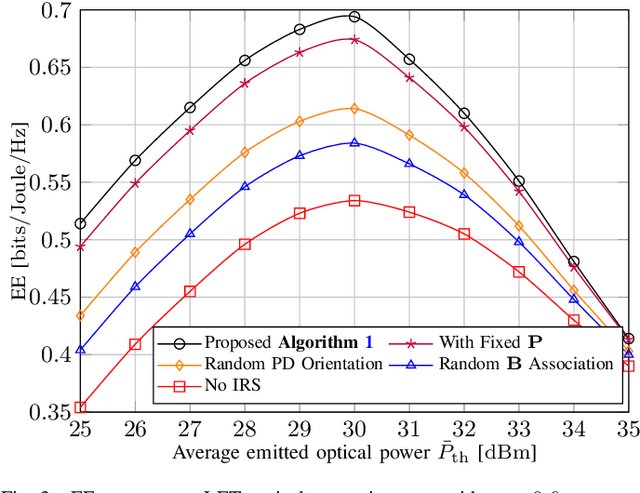

Placement, Orientation, and Resource Allocation Optimization for Cell-Free OIRS-aided OWC Network

Jan 06, 2025

Abstract: The emergence of optical intelligent reflecting surface (OIRS) technologies marks a milestone in optical wireless communication (OWC) systems, enabling enhanced control over light propagation in indoor environments. This capability allows channel conditions to be customized to achieve specific performance goals. This paper enhances downlink cell-free OWC networks by integrating OIRS. The key focus is on fine-tuning crucial parameters, including transmit power, receiver orientations, OIRS element allocation, and strategic placement. In particular, a multi-objective optimization problem (MOOP) is formulated that aims to simultaneously improve the network's spectral efficiency (SE) and energy efficiency (EE) while satisfying the network's quality of service (QoS) constraints. The problem is solved by employing the $\epsilon$-constraint method to convert the MOOP into a single-objective optimization problem, which is then solved through successive convex approximation. Simulation results show the significant impact of OIRS on SE and EE, confirming its effectiveness in improving OWC network performance.
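The epsilon-constraint method keeps one objective and turns the other into a constraint, so sweeping epsilon traces the SE-EE Pareto front. The sketch below applies it to a hypothetical single-link toy problem, SE(p) = log2(1 + g*p) and EE(p) = SE(p)/(p + P_c), and replaces the paper's successive convex approximation with a brute-force search over a power grid; the OIRS placement, orientation, and element-allocation variables are not modelled, and all numbers are assumptions.

```python
import math


# Hypothetical single-link toy model (not the paper's OIRS-aided network model).
def spectral_efficiency(p: float, g: float) -> float:
    """SE in bit/s/Hz for transmit power p and normalised channel gain g."""
    return math.log2(1.0 + g * p)


def energy_efficiency(p: float, g: float, p_circuit: float) -> float:
    """EE as SE per watt of total consumed power (transmit + circuit)."""
    return spectral_efficiency(p, g) / (p + p_circuit)


def power_grid(p_max: float, steps: int):
    """Evenly spaced transmit powers from 0 to p_max inclusive."""
    return [p_max * k / steps for k in range(steps + 1)]


def epsilon_constraint(eps, g, p_circuit, p_max, steps=10_000):
    """Maximise SE subject to EE >= eps by exhaustive search over a power grid.

    Returns (power, SE) of the best feasible point, or None if no grid point meets the EE target.
    """
    best = None
    for p in power_grid(p_max, steps):
        if energy_efficiency(p, g, p_circuit) >= eps:
            se = spectral_efficiency(p, g)
            if best is None or se > best[1]:
                best = (p, se)
    return best


if __name__ == "__main__":
    g, p_c, p_max = 10.0, 0.5, 2.0  # assumed gain, circuit power (W), power budget (W)
    ee_max = max(energy_efficiency(p, g, p_c) for p in power_grid(p_max, 10_000))
    for frac in (0.0, 0.5, 0.8, 0.95):
        p, se = epsilon_constraint(frac * ee_max, g, p_c, p_max)
        print(f"EE >= {frac * ee_max:.3f}: p = {p:.3f} W, SE = {se:.3f} bit/s/Hz")
```

Raising epsilon pushes the transmit power toward the EE-optimal point and lowers the achievable SE, which is exactly the trade-off the Pareto front records.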

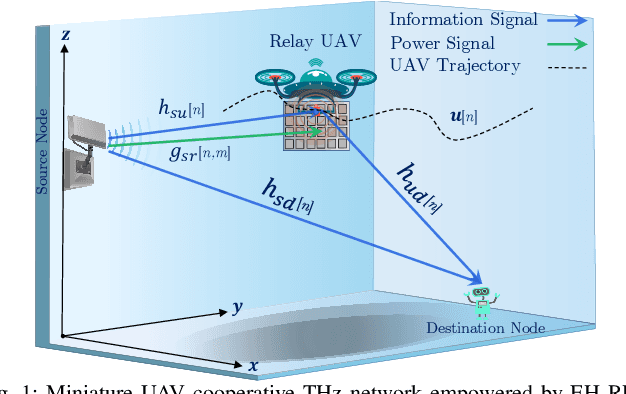

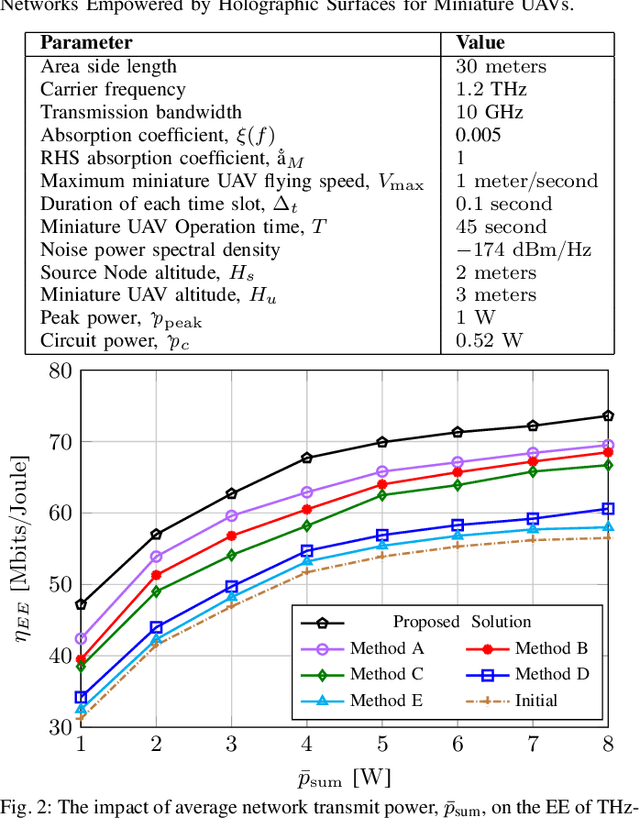

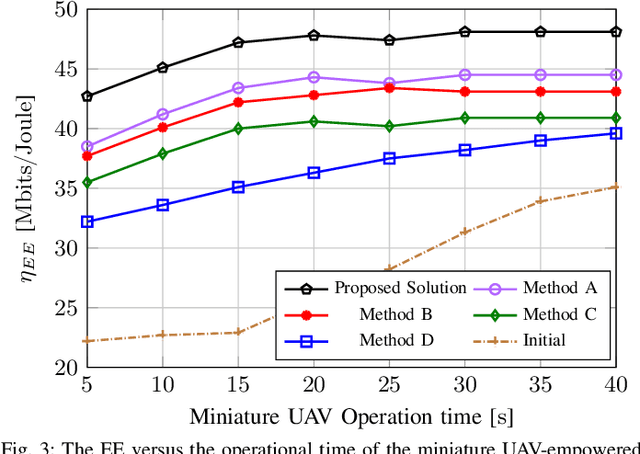

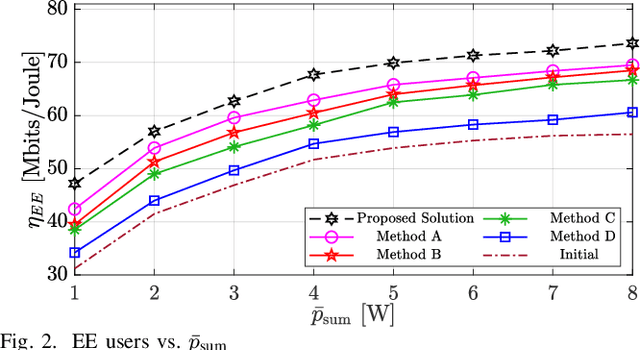

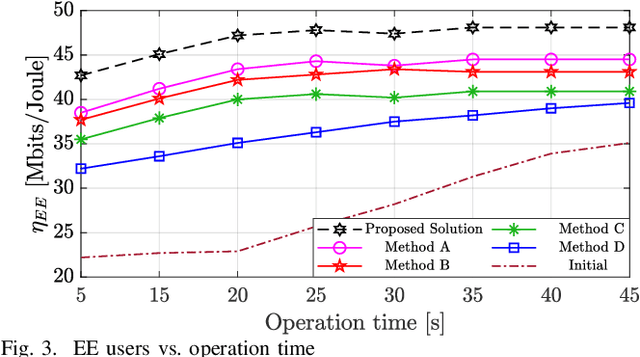

Miniature UAV Empowered Reconfigurable Energy Harvesting Holographic Surfaces in THz Cooperative Networks

Nov 27, 2024

Abstract: This paper focuses on enhancing the energy efficiency (EE) of a cooperative network featuring a 'miniature' unmanned aerial vehicle (UAV) that operates at terahertz (THz) frequencies and uses holographic surfaces to improve the network's performance. Unlike traditional reconfigurable intelligent surfaces (RIS), which are typically used as passive relays to adjust signal reflections, this work introduces a novel concept: energy harvesting (EH) using reconfigurable holographic surfaces (RHS) mounted on the miniature UAV. In this system, a source node facilitates the simultaneous reception of information and energy signals by the UAV, which uses the energy harvested through the RHS to transmit data to a specific destination. The EE optimization involves adjusting the non-orthogonal multiple access (NOMA) power coefficients and the UAV's flight path, considering the peculiarities of the THz channel. The optimization problem is solved in two steps: first, the trajectory is refined using a successive convex approximation (SCA) method; then, the NOMA power coefficients are adjusted through a quadratic transform technique. Simulations demonstrate the effectiveness of the proposed algorithm, showing superior results compared to baseline methods.
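The quadratic transform from fractional programming rewrites a ratio A(p)/B(p), with A >= 0 and B > 0, as max over y of 2*y*sqrt(A(p)) - y^2*B(p), whose optimal auxiliary variable is y = sqrt(A(p))/B(p). The sketch below shows only that mechanism on a hypothetical single-link EE ratio log2(1 + g*p)/(p + P_c); the NOMA coefficients, the RHS, and the THz channel are not modelled, and the inner maximisation is done by a simple ternary search rather than whatever solver the paper uses.

```python
import math


# Hypothetical single-link EE ratio A(p)/B(p); the paper's NOMA/RHS/THz model is not reproduced.
def rate(p: float, g: float) -> float:
    """Numerator A(p) = log2(1 + g p), concave and non-negative for p >= 0."""
    return math.log2(1.0 + g * p)


def total_power(p: float, p_circuit: float) -> float:
    """Denominator B(p) = p + P_c, affine and positive."""
    return p + p_circuit


def ternary_max(f, lo: float, hi: float, iters: int = 100) -> float:
    """Maximise a unimodal (here concave) function on [lo, hi] by ternary search."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)


def quadratic_transform_ee(g: float, p_circuit: float, p_max: float, outer_iters: int = 50):
    """Maximise A(p)/B(p) via the surrogate 2*y*sqrt(A(p)) - y**2 * B(p).

    Alternates the closed-form update y = sqrt(A(p)) / B(p) with a maximisation over p,
    which is concave here because sqrt(A) is concave and B is affine.
    """
    p = p_max
    for _ in range(outer_iters):
        y = math.sqrt(rate(p, g)) / total_power(p, p_circuit)
        p = ternary_max(
            lambda q: 2.0 * y * math.sqrt(rate(q, g)) - y * y * total_power(q, p_circuit),
            0.0,
            p_max,
        )
    return p, rate(p, g) / total_power(p, p_circuit)


if __name__ == "__main__":
    p_opt, ee_opt = quadratic_transform_ee(g=10.0, p_circuit=0.5, p_max=2.0)  # assumed values
    print(f"p* = {p_opt:.4f} W, EE* = {ee_opt:.4f} bit/s/Hz/W")
```

Each outer iteration cannot decrease the ratio, and for this single concave-over-affine ratio the fixed point is the global optimum; with several ratios (as in multi-user NOMA) the transform is applied term by term with one auxiliary variable per ratio.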

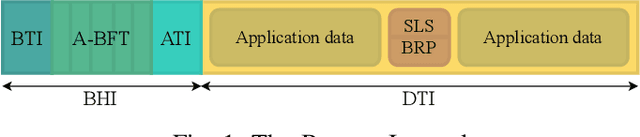

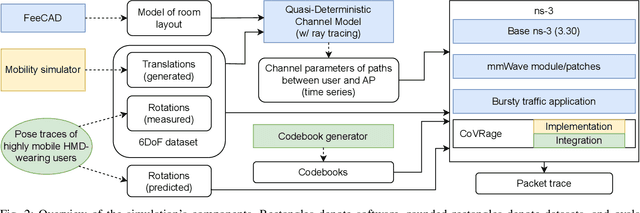

Multi-Gigabit Interactive Extended Reality over Millimeter-Wave: An End-to-End System Approach

May 24, 2024

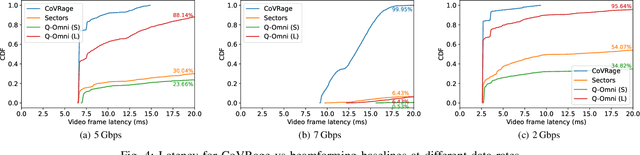

Abstract: Achieving high-quality wireless interactive Extended Reality (XR) will require multi-gigabit throughput at extremely low latency. The Millimeter-Wave (mmWave) frequency bands, between 24 and 300 GHz, can achieve such extreme performance. However, maintaining a consistently high Quality of Experience with highly mobile users is challenging, as mmWave communications are inherently directional. In this work, we present and evaluate an end-to-end approach to such a mmWave-based mobile XR system. We perform a highly realistic simulation of the system, incorporating accurate XR data traffic, detailed mmWave propagation models, and actual user motion. We evaluate the impact of the beamforming strategy and frequency on the overall performance. In addition, we provide the first system-level evaluation of the CoVRage algorithm, a proactive and spatially aware user-side beamforming approach designed specifically for highly mobile XR environments.
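In the simplest case, the frequency dependence mentioned above can be illustrated with the Friis free-space path loss, FSPL = 20*log10(4*pi*d*f/c). This ignores blockage, reflections, and antenna gains, all of which the paper's detailed mmWave propagation models account for; the 5 m distance and the carrier frequencies (chosen within the 24 to 300 GHz range) are hypothetical examples.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss in dB between isotropic antennas."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


if __name__ == "__main__":
    for f in (28e9, 60e9, 140e9):  # hypothetical carriers
        print(f"{f / 1e9:5.0f} GHz at 5 m: {fspl_db(5.0, f):.1f} dB")
```

With fixed-gain antennas, every doubling of the carrier frequency adds about 6 dB of free-space loss, which is one reason mmWave links depend on directional beamforming and, for mobile XR users, on keeping those beams aligned.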

Zero-energy Devices for 6G: Technical Enablers at a Glance

Feb 14, 2024

Abstract: Low-cost, resource-constrained, maintenance-free, and energy-harvesting (EH) Internet of Things (IoT) devices, referred to as zero-energy devices (ZEDs), are rapidly attracting attention from industry and academia due to their many applications. To date, such devices remain largely unsupported by modern IoT connectivity solutions due to their intrinsic fabrication, hardware, deployment, and operation limitations, and clarity on their key technical enablers and prospects is still lacking. Herein, we address this by discussing the main characteristics and enabling technologies of ZEDs within the next generation of mobile networks, specifically focusing on unconventional EH sources, multi-source EH, power management, energy storage solutions, manufacturing materials and practices, backscattering, and low-complexity receivers. Moreover, we highlight the need for lightweight and energy-aware computing, communication, and scheduling protocols, while discussing potential approaches related to TinyML, duty cycling, and infrastructure enablers like radio frequency wireless power transfer and wake-up protocols. Challenging aspects and open research directions are identified and discussed in all cases. Finally, we showcase an experimental ZED proof of concept related to ambient cellular backscattering.
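A basic calculation behind energy-aware duty cycling is the energy-neutral condition: a device that is active a fraction D of the time and asleep otherwise consumes D*P_on + (1 - D)*P_sleep on average, so with harvested power P_h it can sustain at most D_max = (P_h - P_sleep)/(P_on - P_sleep). The sketch below assumes constant powers, ignores storage and conversion losses, and uses hypothetical power figures.

```python
def average_power(duty: float, p_active: float, p_sleep: float) -> float:
    """Time-averaged consumption (W) of a device active for a fraction `duty` of the time."""
    return duty * p_active + (1.0 - duty) * p_sleep


def max_duty_cycle(p_harvest: float, p_active: float, p_sleep: float) -> float:
    """Largest duty cycle whose average consumption does not exceed the harvested power.

    Assumes p_active > p_sleep and ignores storage/conversion losses.
    """
    if p_harvest <= p_sleep:
        return 0.0  # harvesting cannot even cover sleep consumption
    if p_harvest >= p_active:
        return 1.0  # device could stay active continuously
    return (p_harvest - p_sleep) / (p_active - p_sleep)


if __name__ == "__main__":
    # Hypothetical numbers: 10 mW active, 1 uW sleep, 100 uW harvested.
    d = max_duty_cycle(100e-6, 10e-3, 1e-6)
    print(f"max duty cycle = {d:.4%}, average power = {average_power(d, 10e-3, 1e-6) * 1e6:.1f} uW")
```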

On the Feasibility of Battery-Less LoRaWAN Communications using Energy Harvesting

Feb 09, 2024

Abstract: From the outset, batteries have been the main power source for the Internet of Things (IoT). However, replacing and disposing of billions of dead batteries per year is costly in terms of maintenance and ecologically irresponsible. Since batteries are one of the greatest threats to a sustainable IoT, battery-less devices are the solution to this problem. These devices run on long-lived capacitors charged using various forms of energy harvesting, which results in intermittent on-off device behaviour. In this work, we model this intermittent battery-less behaviour for LoRaWAN devices. This model allows us to characterize the performance in order to determine under which conditions a LoRaWAN device can work without batteries, and how its parameters should be configured. Results show that the reliability directly depends on device configuration (i.e., capacitor size, turn-on voltage threshold), application behaviour (i.e., transmission interval, packet size), and environmental conditions (i.e., energy harvesting rate).
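A simplified piece of the energy bookkeeping such a model involves: a capacitor that switches the device on at V_on and cuts it off at V_off releases usable energy E = 0.5*C*(V_on^2 - V_off^2), and one uplink costs roughly the radio's power draw multiplied by the LoRa time on air. The time-on-air function follows the standard Semtech LoRa formula; the capacitor, voltage, radio-power, and harvesting figures are hypothetical, and leakage, receive windows, and MCU consumption are ignored, so this is a back-of-the-envelope check rather than the paper's model.

```python
import math


def lora_time_on_air(payload_bytes: int, sf: int, bw_hz: float = 125e3, cr: int = 1,
                     preamble_symbols: int = 8, explicit_header: bool = True,
                     crc: bool = True) -> float:
    """LoRa packet time on air in seconds (Semtech formula); cr=1..4 means coding rate 4/5..4/8."""
    t_sym = (2 ** sf) / bw_hz
    # Low data rate optimisation is mandated when the symbol time exceeds 16 ms.
    de = 1 if t_sym > 16e-3 else 0
    ih = 0 if explicit_header else 1
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    n_payload = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (cr + 4), 0)
    return (preamble_symbols + 4.25) * t_sym + n_payload * t_sym


def usable_energy_j(capacitance_f: float, v_on: float, v_off: float) -> float:
    """Energy released when the capacitor discharges from the turn-on to the cut-off voltage."""
    return 0.5 * capacitance_f * (v_on ** 2 - v_off ** 2)


def uplink_energy_j(payload_bytes: int, sf: int, radio_power_w: float) -> float:
    """Energy of one uplink, approximated as radio power draw times time on air."""
    return radio_power_w * lora_time_on_air(payload_bytes, sf)


if __name__ == "__main__":
    # Hypothetical device: 10 mF capacitor, on at 3.3 V, off at 2.0 V,
    # 0.13 W radio draw while transmitting, 1 mW harvested on average.
    budget = usable_energy_j(10e-3, 3.3, 2.0)
    print(f"usable energy = {budget * 1e3:.2f} mJ, recharge time = {budget / 1e-3:.1f} s")
    for sf in (7, 9, 12):
        cost = uplink_energy_j(20, sf, 0.13)
        print(f"SF{sf}: ToA = {lora_time_on_air(20, sf) * 1e3:.1f} ms, "
              f"uplinks per charge = {math.floor(budget / cost)}")
```

With these made-up numbers, one charge covers a few 20-byte SF7 uplinks but not a single SF12 uplink, which illustrates why reliability hinges jointly on capacitor size, turn-on threshold, packet size, and harvesting rate.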

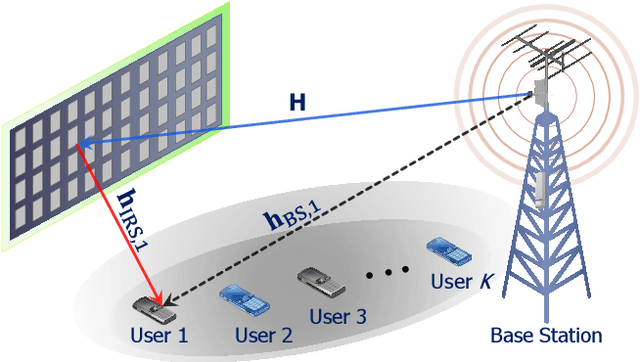

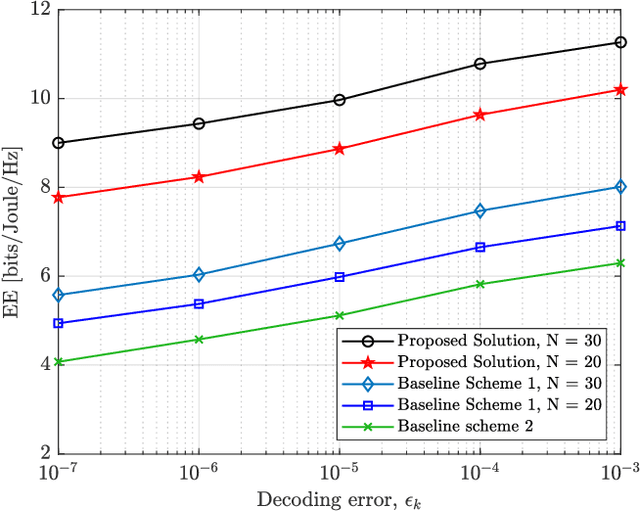

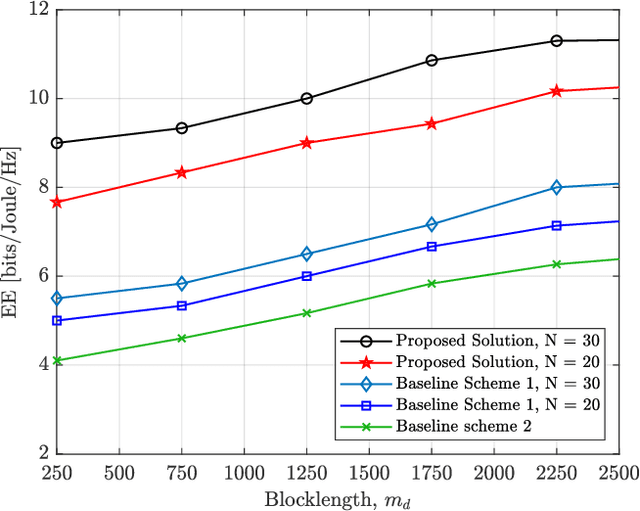

Toward Energy Efficient Multiuser IRS-Assisted URLLC Systems: A Novel Rank Relaxation Method

Sep 26, 2023

Abstract: This paper proposes an energy-efficient resource allocation algorithm for an intelligent reflecting surface (IRS)-assisted downlink ultra-reliable low-latency communication (URLLC) network. This setup features a multi-antenna base station (BS) transmitting data traffic to a group of URLLC users with short packet lengths. We maximize the network's total energy efficiency (EE) by optimizing the active beamformers at the BS and the passive beamformers (a.k.a. phase shifts) at the IRS. The main non-convex problem is divided into two sub-problems, which are solved with an alternating optimization (AO) approach. Using successive convex approximation (SCA) with a novel iterative rank relaxation method, we construct a concave-convex objective function for each sub-problem. The first sub-problem is a fractional program that is solved using the Dinkelbach method and a penalty-based approach. The second sub-problem is solved based on semi-definite programming (SDP) and the penalty-based approach. The iterative solution gradually approaches a rank-one solution for both the active beamforming and the unit-modulus IRS phase-shift sub-problems. Our results demonstrate the efficacy of the proposed solution compared to existing benchmarks.
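Dinkelbach's method maximises a ratio N(x)/D(x) by repeatedly solving the parametric problem max N(x) - lambda*D(x) and resetting lambda to the current ratio, stopping once the parametric optimum reaches zero. The paper applies it to a beamforming sub-problem; the sketch below shows only the core iteration on a hypothetical scalar problem, maximising log2(1 + g*p)/(p + P_c) over 0 <= p <= P_max, where each parametric problem happens to have a closed-form solution. The IRS, SDP, penalty, and rank-relaxation steps are not modelled, and all numbers are assumptions.

```python
import math


# Hypothetical scalar EE problem: maximise N(p)/D(p), N(p) = log2(1 + g p), D(p) = p + P_c.
def rate(p: float, g: float) -> float:
    return math.log2(1.0 + g * p)


def parametric_argmax(lam: float, g: float, p_max: float) -> float:
    """argmax over 0 <= p <= p_max of log2(1 + g p) - lam * (p + P_c).

    The constant -lam * P_c does not affect the argmax. The objective is concave, so the
    clipped stationary point p = 1 / (lam * ln 2) - 1 / g is optimal; for lam <= 0 use p_max.
    """
    if lam <= 0.0:
        return p_max
    p = 1.0 / (lam * math.log(2.0)) - 1.0 / g
    return min(max(p, 0.0), p_max)


def dinkelbach(g: float, p_circuit: float, p_max: float, tol: float = 1e-12, max_iter: int = 100):
    """Dinkelbach iteration: stop when F(lam) = max_p N(p) - lam * D(p) is (numerically) zero."""
    lam = 0.0
    p = p_max
    for _ in range(max_iter):
        p = parametric_argmax(lam, g, p_max)
        f_val = rate(p, g) - lam * (p + p_circuit)
        if f_val <= tol:
            break
        lam = rate(p, g) / (p + p_circuit)  # new lambda = current ratio
    return p, rate(p, g) / (p + p_circuit)


if __name__ == "__main__":
    p_opt, ee_opt = dinkelbach(g=10.0, p_circuit=0.5, p_max=2.0)  # assumed example values
    print(f"p* = {p_opt:.4f} W, EE* = {ee_opt:.4f} bit/s/Hz/W")
```

The sequence of lambda values is non-decreasing and converges superlinearly; in the paper's setting the parametric problem is no longer closed-form and is instead handled with SCA and the penalty-based approach.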

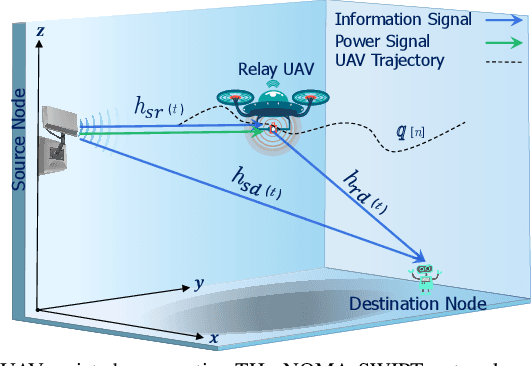

On the Energy Efficiency of THz-NOMA enhanced UAV Cooperative Network with SWIPT

Sep 25, 2023

Abstract: This paper considers the energy efficiency (EE) maximization of a simultaneous wireless information and power transfer (SWIPT)-assisted unmanned aerial vehicle (UAV) cooperative network operating at terahertz (THz) frequencies. The source performs SWIPT, enabling the UAV to receive both power and information while also transmitting the information to a designated destination node. The UAV then uses the harvested energy to relay the data to the intended destination node. Specifically, we maximize EE by optimizing the non-orthogonal multiple access (NOMA) power allocation coefficients, the SWIPT power splitting (PS) ratio, and the UAV trajectory. The main problem is decomposed into two stages and solved using an alternating optimization approach. In the first stage, the PS ratio and trajectory are optimized by employing successive convex approximation with a lower bound on the exponential factor in the THz channel model. In the second stage, the NOMA power coefficients are optimized using a quadratic transform approach. Numerical results demonstrate the effectiveness of the proposed resource allocation algorithm compared to baselines without trajectory optimization or without NOMA power or PS optimization.
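The abstract does not state the exact form of the THz channel, so the sketch assumes the common molecular-absorption factor exp(-k*d) in the channel gain, with absorption coefficient k and link distance d. Because exp(-k*d) is convex in d, its first-order Taylor expansion around the current point d0 is a global lower bound that is tight at d0, which is the property that lets SCA replace the factor with a tractable surrogate at each iteration. In a full SCA step d would itself be expressed through the UAV trajectory variables; that part is omitted, and k and d0 are hypothetical values.

```python
import math


def absorption_factor(d: float, k: float) -> float:
    """Assumed molecular-absorption term exp(-k d); convex in the distance d for k > 0."""
    return math.exp(-k * d)


def taylor_lower_bound(d: float, d0: float, k: float) -> float:
    """First-order expansion of exp(-k d) around d0.

    Convexity guarantees exp(-k d) >= this value for every d, with equality at d = d0,
    so it can serve as a surrogate in a successive convex approximation step.
    """
    return math.exp(-k * d0) * (1.0 - k * (d - d0))


if __name__ == "__main__":
    k, d0 = 0.05, 20.0  # hypothetical absorption coefficient (1/m) and current distance (m)
    for d in (5.0, 20.0, 40.0, 80.0):
        print(f"d = {d:5.1f} m: exact = {absorption_factor(d, k):.4f}, "
              f"bound = {taylor_lower_bound(d, d0, k):.4f}")
```

The bound becomes loose, and even negative, far from d0, which is why SCA re-linearises around each new iterate instead of using a single fixed expansion point.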
