Jakob Struye

Generating Realistic Synthetic Head Rotation Data for Extended Reality using Deep Learning

Jan 15, 2025

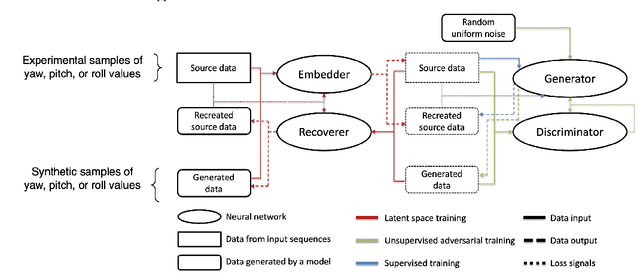

Abstract: Extended Reality is a revolutionary method of delivering multimedia content to users. A large contributor to its popularity is the sense of immersion and interactivity enabled by having real-world motion reflected in the virtual experience accurately and immediately. This user motion, mainly caused by head rotations, induces several technical challenges. For instance, which content is generated and transmitted depends heavily on where the user is looking. Seamless systems, taking user motion into account proactively, will therefore require accurate predictions of upcoming rotations. Training and evaluating such predictors requires vast amounts of orientational input data, which is expensive to gather, as it requires human test subjects. A more feasible approach is to gather a modest dataset through test subjects, and then extend it to a more sizeable set using synthetic data generation methods. In this work, we present a head rotation time series generator based on TimeGAN, an extension of the well-known Generative Adversarial Network, designed specifically for generating time series. This approach is able to extend a dataset of head rotations with new samples closely matching the distribution of the measured time series.

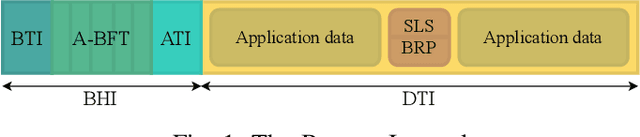

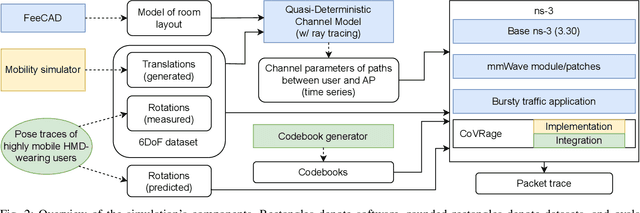

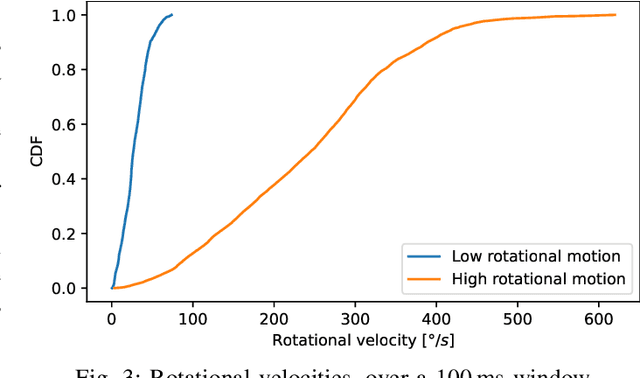

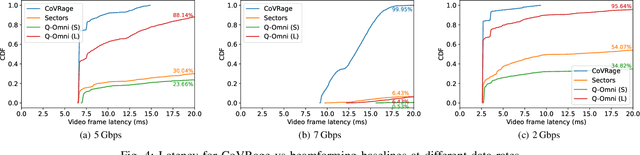

Multi-Gigabit Interactive Extended Reality over Millimeter-Wave: An End-to-End System Approach

May 24, 2024

Abstract: Achieving high-quality wireless interactive Extended Reality (XR) will require multi-gigabit throughput at extremely low latency. The Millimeter-Wave (mmWave) frequency bands, between 24 and 300 GHz, can achieve such extreme performance. However, maintaining a consistently high Quality of Experience with highly mobile users is challenging, as mmWave communications are inherently directional. In this work, we present and evaluate an end-to-end approach to such a mmWave-based mobile XR system. We perform a highly realistic simulation of the system, incorporating accurate XR data traffic, detailed mmWave propagation models and actual user motion. We evaluate the impact of the beamforming strategy and frequency on the overall performance. In addition, we provide the first system-level evaluation of the CoVRage algorithm, a proactive and spatially aware user-side beamforming approach designed specifically for highly mobile XR environments.
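To give a feel for why carrier frequency matters across the 24 to 300 GHz range, the sketch below evaluates the standard Friis free-space path loss between isotropic antennas. It is only an illustration: it ignores atmospheric absorption, blockage and antenna gain, and it is not the propagation model used in the paper. The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Friis free-space path loss in dB between two isotropic antennas."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    return 20.0 * math.log10(ratio)


def extra_loss_db(low_frequency_hz: float, high_frequency_hz: float) -> float:
    """Additional free-space loss when moving to a higher carrier frequency.

    The distance term cancels, so the result depends only on the frequency ratio.
    """
    return 20.0 * math.log10(high_frequency_hz / low_frequency_hz)


if __name__ == "__main__":
    # Compare the two edges of the mmWave range at an assumed 5 m link distance.
    for frequency_ghz in (24, 60, 300):
        loss = free_space_path_loss_db(5.0, frequency_ghz * 1e9)
        print(f"{frequency_ghz:>3} GHz at 5 m: {loss:.1f} dB")
    print(f"300 GHz vs 24 GHz: {extra_loss_db(24e9, 300e9):.1f} dB extra")
```

Under these assumptions, the gap between the lower and upper edge of the band is a fixed number of decibels regardless of distance, which is one reason directional gain from beamforming becomes more important at higher frequencies.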

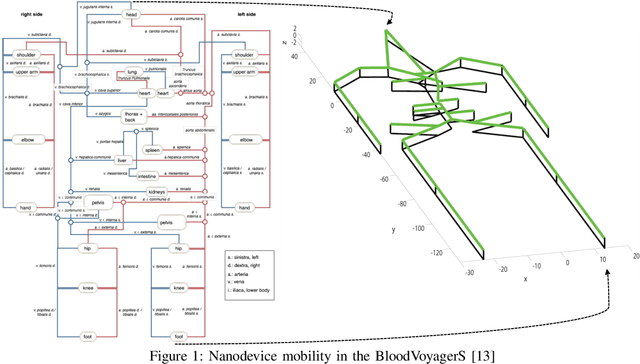

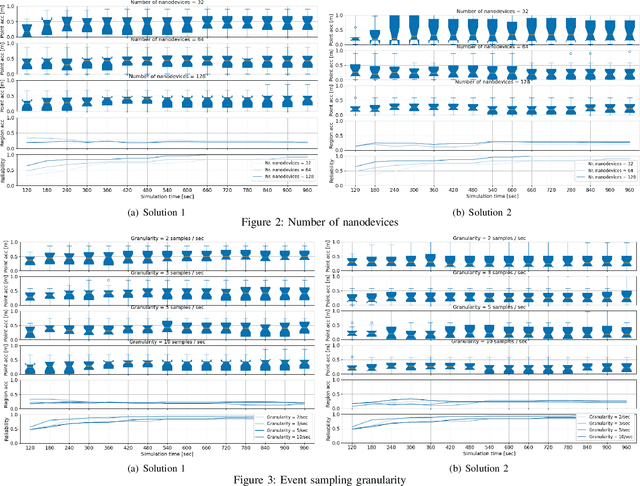

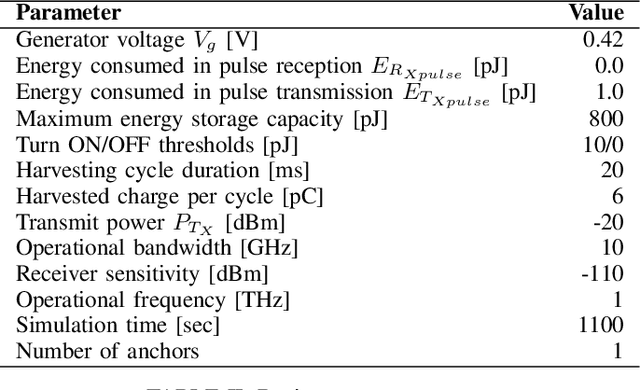

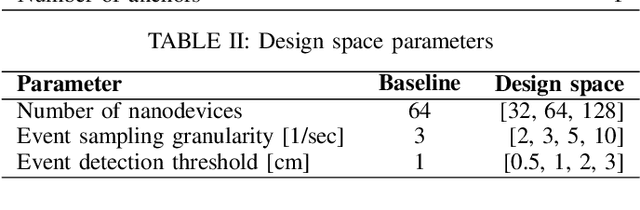

Insights from the Design Space Exploration of Flow-Guided Nanoscale Localization

May 29, 2023

Abstract: Nanodevices with Terahertz (THz)-based wireless communication capabilities are paving the way for flow-guided localization within the human bloodstream. Such localization allows sensed events to be associated with the locations at which they occurred, benefiting precision medicine through early and precise diagnostics and reduced costs and invasiveness. Flow-guided localization is still in a rudimentary phase, with only a handful of works targeting the problem. Nonetheless, the performance assessments of the proposed solutions are already carried out in a non-standardized way, usually along a single performance metric, and ignoring various aspects that are relevant at such a scale (e.g., nanodevices' limited energy) and for such a challenging environment (e.g., extreme attenuation of in-body THz propagation). As such, these assessments feature low levels of realism and cannot be compared in an objective way. Toward addressing this issue, we account for the environmental and scale-related peculiarities of the scenario and assess the performance of two state-of-the-art flow-guided localization approaches along a set of heterogeneous performance metrics such as the accuracy and reliability of localization.

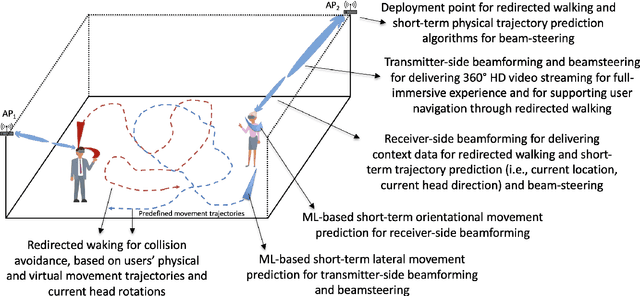

Predictive Context-Awareness for Full-Immersive Multiuser Virtual Reality with Redirected Walking

Apr 06, 2023

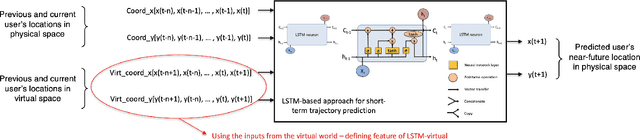

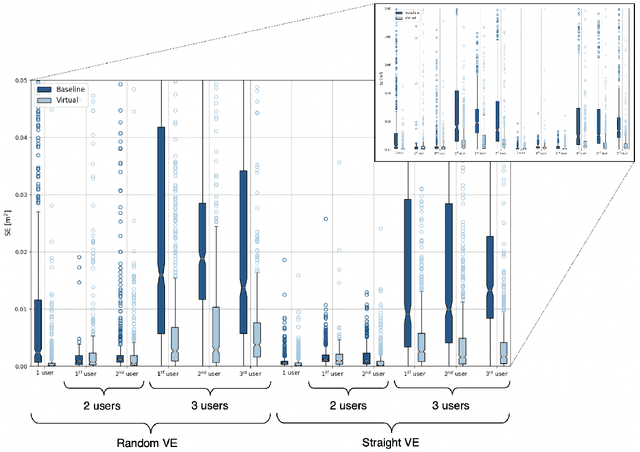

Abstract: The advancement of Virtual Reality (VR) technology is focused on improving its immersiveness, supporting multiuser Virtual Experiences (VEs), and enabling the users to move freely within their VEs while still being confined within specialized VR setups through Redirected Walking (RDW). To meet their extreme data-rate and latency requirements, future VR systems will require supporting wireless networking infrastructures operating in millimeter Wave (mmWave) frequencies that leverage highly directional communication in both transmission and reception through beamforming and beamsteering. We propose the use of predictive context-awareness to optimize transmitter and receiver-side beamforming and beamsteering. By predicting users' short-term lateral movements in multiuser VR setups with RDW, transmitter-side beamforming and beamsteering can be optimized through Line-of-Sight (LoS) "tracking" in the users' directions. At the same time, predictions of short-term orientational movements can be utilized for receiver-side beamforming for coverage flexibility enhancements. We target two open problems in predicting these two context information instances: i) predicting lateral movements in multiuser VR settings with RDW, and ii) generating synthetic head rotation datasets for training orientational movement predictors. Our experimental results demonstrate that Long Short-Term Memory (LSTM) networks feature promising accuracy in predicting lateral movements, and context-awareness stemming from VEs further enhances this accuracy. Additionally, we show that a TimeGAN-based approach for orientational data generation can create synthetic samples that closely match experimentally obtained ones.

CoVRage: Millimeter-Wave Beamforming for Mobile Interactive Virtual Reality

Dec 12, 2022

Abstract: Contemporary Virtual Reality (VR) setups often include an external source delivering content to a Head-Mounted Display (HMD). "Cutting the wire" in such setups and going truly wireless will require a wireless network capable of delivering enormous amounts of video data at an extremely low latency. The massive bandwidth of higher frequencies, such as the millimeter-wave (mmWave) band, can meet these requirements. Due to high attenuation and path loss in the mmWave frequencies, beamforming is essential. In wireless VR, where the antenna is integrated into the HMD, any head rotation also changes the antenna's orientation. As such, beamforming must adapt, in real time, to the user's head rotations. An HMD's built-in sensors providing accurate orientation estimates may facilitate such rapid beamforming. In this work, we present coVRage, a receive-side beamforming solution tailored for VR HMDs. Using built-in orientation prediction present on modern HMDs, the algorithm estimates how the Angle of Arrival (AoA) at the HMD will change in the near future, and covers this AoA trajectory with a dynamically shaped oblong beam, synthesized using sub-arrays. We show that this solution can cover these trajectories with consistently high gain, even in light of temporally or spatially inaccurate orientational data.
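The relationship between head rotation and AoA described above can be illustrated with a deliberately simplified, yaw-only sketch. It assumes a fixed access point direction in the world frame and a list of predicted head yaw angles, and computes the AoA trajectory in the HMD's frame together with the arc a beam would have to span to cover it. This is not the coVRage algorithm itself, which works with full 3D orientations and sub-array beam synthesis; all names below are hypothetical.

```python
from typing import List, Tuple


def wrap_deg(angle_deg: float) -> float:
    """Wrap an angle to the interval [-180, 180) degrees."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def aoa_trajectory_deg(ap_azimuth_deg: float,
                       predicted_yaws_deg: List[float]) -> List[float]:
    """AoA azimuth in the HMD frame for each predicted head yaw.

    Assumption: the antenna is rigidly attached to the HMD, so turning the
    head by some yaw shifts the apparent AoA by the opposite amount.
    """
    return [wrap_deg(ap_azimuth_deg - yaw) for yaw in predicted_yaws_deg]


def covering_arc_deg(aoas_deg: List[float]) -> Tuple[float, float]:
    """Return (center, width) of the arc spanning a continuous AoA trajectory."""
    if not aoas_deg:
        raise ValueError("trajectory must not be empty")
    # Unwrap so that a trajectory crossing the +/-180 degree seam stays contiguous.
    unwrapped = [aoas_deg[0]]
    for aoa in aoas_deg[1:]:
        unwrapped.append(unwrapped[-1] + wrap_deg(aoa - unwrapped[-1]))
    low, high = min(unwrapped), max(unwrapped)
    return wrap_deg((low + high) / 2.0), high - low


if __name__ == "__main__":
    # Access point 30 degrees to the left; the head is predicted to turn 20 degrees.
    trajectory = aoa_trajectory_deg(30.0, [0.0, 10.0, 20.0])
    center, width = covering_arc_deg(trajectory)
    print(trajectory, center, width)
```

The unwrapping step matters because a trajectory that crosses the seam at 180 degrees would otherwise appear to span nearly the full circle instead of a narrow arc.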

Short-Term Trajectory Prediction for Full-Immersive Multiuser Virtual Reality with Redirected Walking

Jul 15, 2022

Abstract: Full-immersive multiuser Virtual Reality (VR) envisions supporting unconstrained mobility of the users in the virtual worlds, while at the same time constraining their physical movements inside VR setups through redirected walking. For enabling delivery of high data rate video content in real time, the supporting wireless networks will leverage highly directional communication links that will "track" the users for maintaining the Line-of-Sight (LoS) connectivity. Recurrent Neural Networks (RNNs) and in particular Long Short-Term Memory (LSTM) networks have historically presented themselves as a suitable candidate for near-term movement trajectory prediction for natural human mobility, and have also recently been shown as applicable in predicting VR users' mobility under the constraints of redirected walking. In this work, we extend these initial findings by showing that Gated Recurrent Unit (GRU) networks, another candidate from the RNN family, generally outperform the traditionally utilized LSTMs. Second, we show that context from a virtual world can enhance the accuracy of the prediction if used as an additional input feature in comparison to the more traditional utilization of solely the historical physical movements of the VR users. Finally, we show that the prediction system trained on a static number of coexisting VR users can be scaled to a multi-user system without significant accuracy degradation.

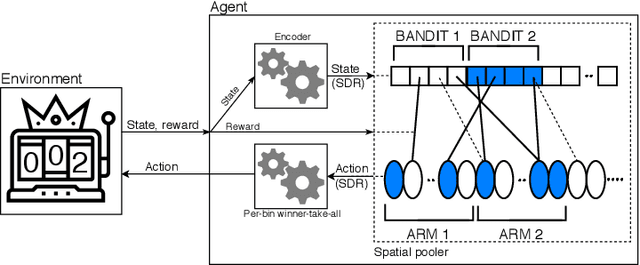

HTMRL: Biologically Plausible Reinforcement Learning with Hierarchical Temporal Memory

Sep 18, 2020

Abstract: Building Reinforcement Learning (RL) algorithms which are able to adapt to continuously evolving tasks is an open research challenge. One technology that is known to inherently handle such non-stationary input patterns well is Hierarchical Temporal Memory (HTM), a general and biologically plausible computational model for the human neocortex. As the RL paradigm is inspired by human learning, HTM is a natural framework for an RL algorithm supporting non-stationary environments. In this paper, we present HTMRL, the first strictly HTM-based RL algorithm. We empirically and statistically show that HTMRL scales to many states and actions, and demonstrate that HTM's ability for adapting to changing patterns extends to RL. Specifically, HTMRL performs well on a 10-armed bandit after 750 steps, but only needs a third of that to adapt to the bandit suddenly shuffling its arms. HTMRL is the first iteration of a novel RL approach, with the potential of extending to a capable algorithm for Meta-RL.
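The non-stationary setting mentioned above, a 10-armed bandit whose arms are suddenly shuffled, can be sketched as follows. The agent here is a plain epsilon-greedy learner with a constant step size, used only as a stand-in baseline; it is not HTMRL. The Gaussian reward model, class and function names, and all parameter values are assumptions made for illustration.

```python
import random
from typing import List


class ShufflingBandit:
    """Multi-armed bandit with Gaussian rewards whose arms can be permuted."""

    def __init__(self, n_arms: int = 10, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.means = [self.rng.gauss(0.0, 1.0) for _ in range(n_arms)]

    def pull(self, arm: int) -> float:
        """Sample a noisy reward from the chosen arm."""
        return self.rng.gauss(self.means[arm], 1.0)

    def shuffle_arms(self) -> None:
        """Permute the arm means in place, making the task non-stationary."""
        self.rng.shuffle(self.means)

    def best_arm(self) -> int:
        return max(range(len(self.means)), key=self.means.__getitem__)


def run_epsilon_greedy(bandit: ShufflingBandit, steps: int, shuffle_at: int,
                       epsilon: float = 0.1, step_size: float = 0.1,
                       seed: int = 1) -> List[bool]:
    """Run a baseline agent and record whether each pull chose the best arm."""
    rng = random.Random(seed)
    n_arms = len(bandit.means)
    estimates = [0.0] * n_arms
    chose_best = []
    for t in range(steps):
        if t == shuffle_at:
            bandit.shuffle_arms()
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore
        else:
            arm = max(range(n_arms), key=estimates.__getitem__)  # exploit
        reward = bandit.pull(arm)
        # A constant step size keeps weighting recent rewards, which helps after a shuffle.
        estimates[arm] += step_size * (reward - estimates[arm])
        chose_best.append(arm == bandit.best_arm())
    return chose_best


if __name__ == "__main__":
    history = run_epsilon_greedy(ShufflingBandit(), steps=1000, shuffle_at=750)
    print("optimal-arm rate before shuffle:", sum(history[:750]) / 750)
    print("optimal-arm rate after shuffle:", sum(history[750:]) / 250)
```

Comparing the optimal-arm rate before and after the shuffle gives a rough way to measure how quickly any agent plugged into this loop recovers from the change.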
