Natasha Devroye

Miniature UAV Empowered Reconfigurable Energy Harvesting Holographic Surfaces in THz Cooperative Networks

Nov 27, 2024

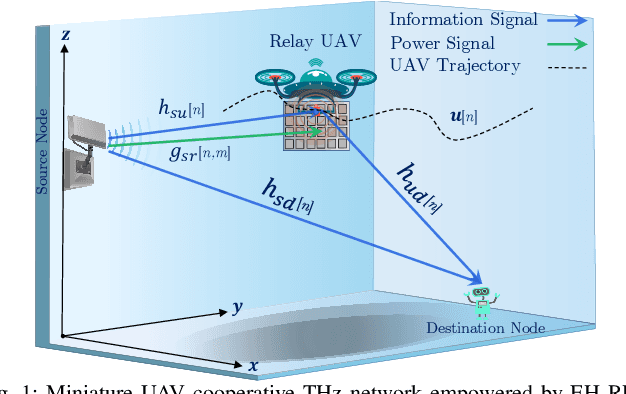

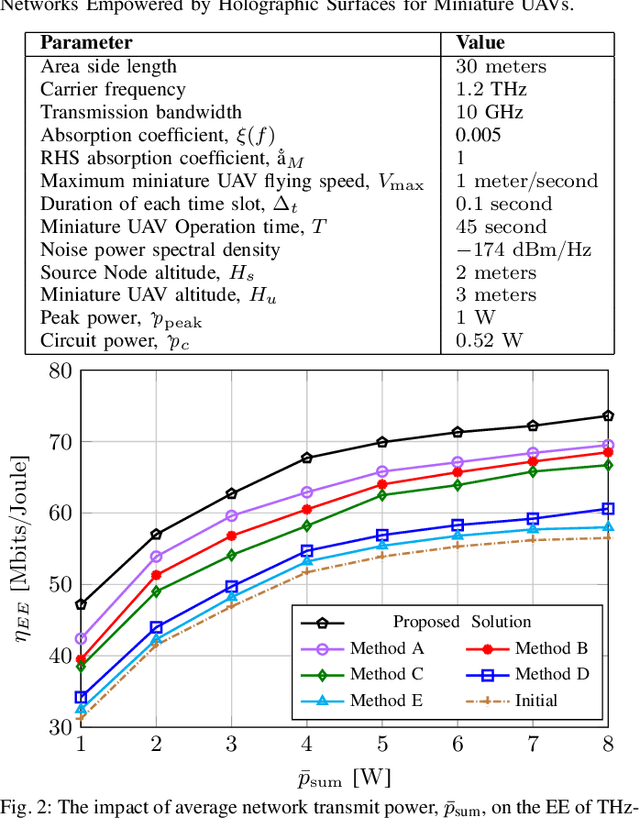

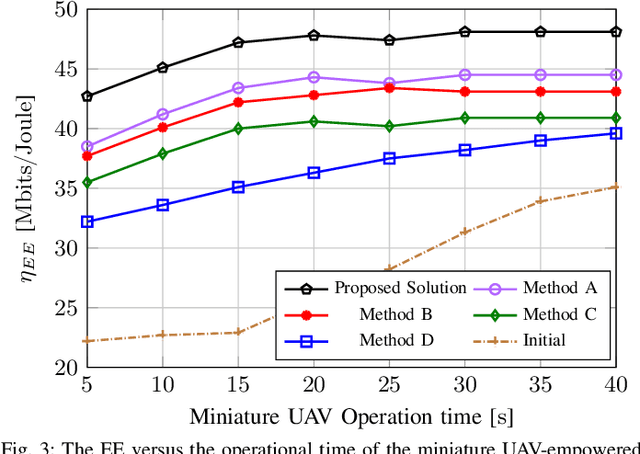

Abstract: This paper focuses on enhancing the energy efficiency (EE) of a cooperative network featuring a "miniature" unmanned aerial vehicle (UAV) that operates at terahertz (THz) frequencies, utilizing holographic surfaces to improve the network's performance. Unlike traditional reconfigurable intelligent surfaces (RIS), which are typically used as passive relays to adjust signal reflections, this work introduces a novel concept: energy harvesting (EH) using reconfigurable holographic surfaces (RHS) mounted on the miniature UAV. In this system, a source node facilitates the simultaneous reception of information and energy signals by the UAV, and the energy harvested by the RHS is used by the UAV to transmit data to a specific destination. The EE optimization involves adjusting non-orthogonal multiple access (NOMA) power coefficients and the UAV's flight path, considering the peculiarities of the THz channel. The optimization problem is solved in two steps: first, the trajectory is refined using a successive convex approximation (SCA) method; then, the NOMA power coefficients are adjusted through a quadratic transform technique. The effectiveness of the proposed algorithm is demonstrated through simulations, showing superior results when compared to baseline methods.
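As a rough illustration of the quadratic transform step, the block below shows the generic single-ratio form of the technique from fractional programming. The abstract does not give the paper's actual objective, so the symbols $R(\mathbf{a})$ (achievable rate), $P(\mathbf{a})$ (consumed power), $\mathbf{a}$ (NOMA power coefficients), and the auxiliary variable $y$ are assumptions introduced here for illustration only.

```latex
% Generic single-ratio quadratic transform (symbols are illustrative assumptions,
% not the paper's notation). Requires R(a) >= 0 and P(a) > 0.
\begin{align}
  \max_{\mathbf{a}} \; \frac{R(\mathbf{a})}{P(\mathbf{a})}
  \quad &\Longleftrightarrow \quad
  \max_{\mathbf{a},\, y} \; 2y\sqrt{R(\mathbf{a})} - y^{2} P(\mathbf{a}), \\
  y^{\star} &= \frac{\sqrt{R(\mathbf{a})}}{P(\mathbf{a})}
  \quad \text{(optimal $y$ for fixed $\mathbf{a}$).}
\end{align}
```

Substituting $y^{\star}$ back recovers the original ratio, which is why alternating between updating $y$ in closed form and optimizing over $\mathbf{a}$ is a common way to handle an EE-type objective.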

Interpreting Deepcode, a learned feedback code

Apr 26, 2024

Abstract: Deep learning methods have recently been used to construct non-linear codes for the additive white Gaussian noise (AWGN) channel with feedback. However, there is limited understanding of how these black-box-like codes with many learned parameters use feedback. This study aims to uncover the fundamental principles underlying the first deep-learned feedback code, known as Deepcode, which is based on an RNN architecture. Our interpretable model based on Deepcode is built by analyzing the influence length of inputs and approximating the non-linear dynamics of the original black-box RNN encoder. Numerical experiments demonstrate that our interpretable model, which includes both an encoder and a decoder, achieves comparable performance to Deepcode while offering an interpretation of how it employs feedback for error correction.

Active learning for fast and slow modeling attacks on Arbiter PUFs

Aug 25, 2023

Abstract: Modeling attacks, in which an adversary uses machine learning techniques to model a hardware-based Physically Unclonable Function (PUF), pose a great threat to the viability of these hardware security primitives. In most modeling attacks, a random subset of challenge-response pairs (CRPs) is used as the labeled data for the machine learning algorithm. Here, for the arbiter PUF, a delay-based PUF which may be viewed as a linear threshold function with random weights (due to manufacturing imperfections), we investigate the role of active learning in Support Vector Machine (SVM) learning. We focus on challenge selection to help the SVM algorithm learn "fast" and learn "slow". Our methods construct challenges rather than relying on a sample pool of challenges as in prior work. Using active learning to learn "fast" (fewer CRPs revealed, higher accuracies) may help manufacturers learn the manufactured PUFs more efficiently, or may form a more powerful attack when the attacker may query the PUF for CRPs at will. Using active learning to select challenges from which learning is "slow" (low accuracy despite a large number of revealed CRPs) may provide a basis for slowing down attackers who are limited to overhearing CRPs.
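The view of an arbiter PUF as a linear threshold function with random weights can be sketched as follows. This is a minimal simulation under the commonly used additive delay model with parity features, not the paper's code: the function names, the Gaussian weight distribution, and the random (non-active) challenge sampling are all assumptions made here for illustration.

```python
import random


def parity_features(challenge):
    """Map a 0/1 challenge to +/-1 parity features plus a constant bias term.

    Feature i is the product of (1 - 2*c_j) over all stages j >= i, the
    transform under which the arbiter PUF response becomes linear.
    """
    features = []
    prod = 1
    for bit in reversed(challenge):
        prod *= 1 - 2 * bit  # bit 0 -> +1, bit 1 -> -1
        features.append(prod)
    features.reverse()
    return features + [1]


def make_arbiter_puf(n_stages, rng):
    """Return (weights, respond) for a simulated PUF (hypothetical helper).

    Weights are drawn i.i.d. Gaussian as a stand-in for manufacturing
    variation; this distribution is an assumption.
    """
    weights = [rng.gauss(0.0, 1.0) for _ in range(n_stages + 1)]

    def respond(challenge):
        total = sum(w * x for w, x in zip(weights, parity_features(challenge)))
        return 1 if total > 0 else 0  # linear threshold on the delay difference

    return weights, respond


def random_crps(respond, n_stages, count, rng):
    """Collect CRPs from uniformly random challenges (the passive baseline)."""
    crps = []
    for _ in range(count):
        challenge = [rng.randint(0, 1) for _ in range(n_stages)]
        crps.append((challenge, respond(challenge)))
    return crps


if __name__ == "__main__":
    rng = random.Random(0)
    weights, respond = make_arbiter_puf(64, rng)
    crps = random_crps(respond, 64, 5, rng)
    for challenge, response in crps:
        print("".join(map(str, challenge)), "->", response)
```

In this picture, the labeled pairs returned by `random_crps` are what a conventional modeling attack would feed to a linear classifier such as an SVM; an active-learning approach would instead construct each challenge deliberately rather than sampling it at random.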
