Jason Hughes

A Multi-Robot Platform for Robotic Triage Combining Onboard Sensing and Foundation Models

Dec 09, 2025

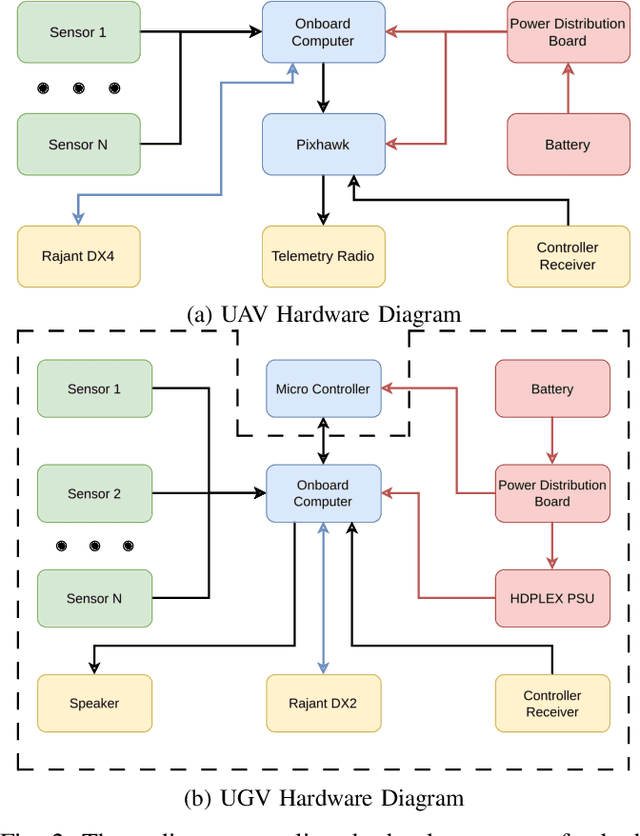

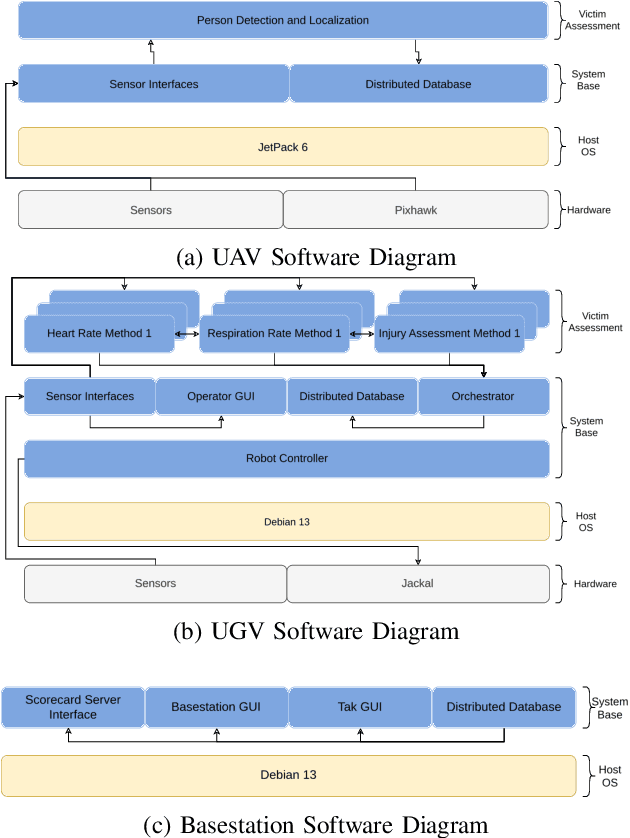

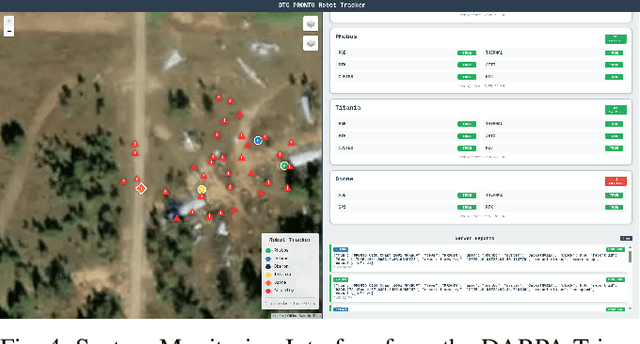

Abstract:This report presents a heterogeneous robotic system designed for remote primary triage in mass-casualty incidents (MCIs). The system employs a coordinated air-ground team of unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) to locate victims, assess their injuries, and prioritize medical assistance without risking the lives of first responders. The UAV identify and provide overhead views of casualties, while UGVs equipped with specialized sensors measure vital signs and detect and localize physical injuries. Unlike previous work that focused on exploration or limited medical evaluation, this system addresses the complete triage process: victim localization, vital sign measurement, injury severity classification, mental status assessment, and data consolidation for first responders. Developed as part of the DARPA Triage Challenge, this approach demonstrates how multi-robot systems can augment human capabilities in disaster response scenarios to maximize lives saved.

Heterogeneous Robot Collaboration in Unstructured Environments with Grounded Generative Intelligence

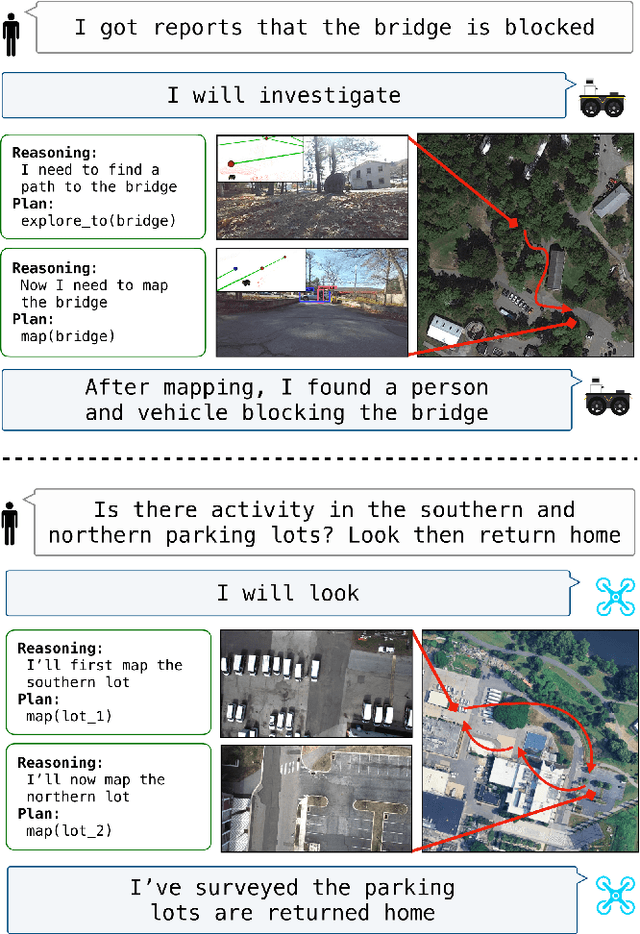

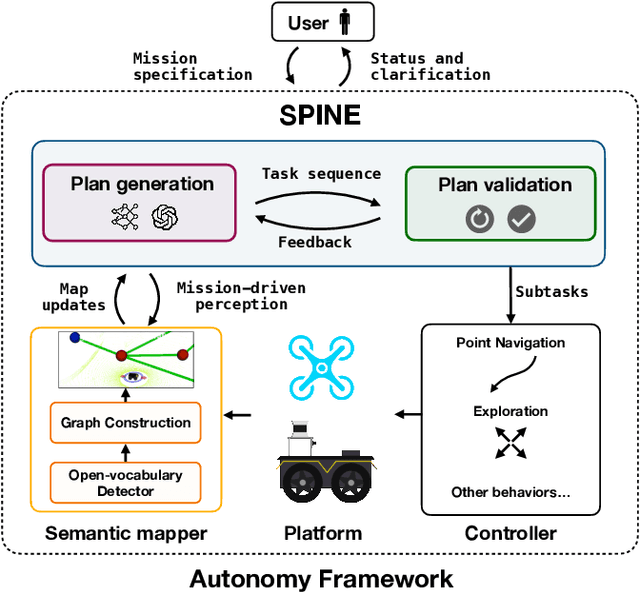

Oct 30, 2025Abstract:Heterogeneous robot teams operating in realistic settings often must accomplish complex missions requiring collaboration and adaptation to information acquired online. Because robot teams frequently operate in unstructured environments -- uncertain, open-world settings without prior maps -- subtasks must be grounded in robot capabilities and the physical world. While heterogeneous teams have typically been designed for fixed specifications, generative intelligence opens the possibility of teams that can accomplish a wide range of missions described in natural language. However, current large language model (LLM)-enabled teaming methods typically assume well-structured and known environments, limiting deployment in unstructured environments. We present SPINE-HT, a framework that addresses these limitations by grounding the reasoning abilities of LLMs in the context of a heterogeneous robot team through a three-stage process. Given language specifications describing mission goals and team capabilities, an LLM generates grounded subtasks which are validated for feasibility. Subtasks are then assigned to robots based on capabilities such as traversability or perception and refined given feedback collected during online operation. In simulation experiments with closed-loop perception and control, our framework achieves nearly twice the success rate compared to prior LLM-enabled heterogeneous teaming approaches. In real-world experiments with a Clearpath Jackal, a Clearpath Husky, a Boston Dynamics Spot, and a high-altitude UAV, our method achieves an 87\% success rate in missions requiring reasoning about robot capabilities and refining subtasks with online feedback. More information is provided at https://zacravichandran.github.io/SPINE-HT.

Deploying Foundation Model-Enabled Air and Ground Robots in the Field: Challenges and Opportunities

May 14, 2025

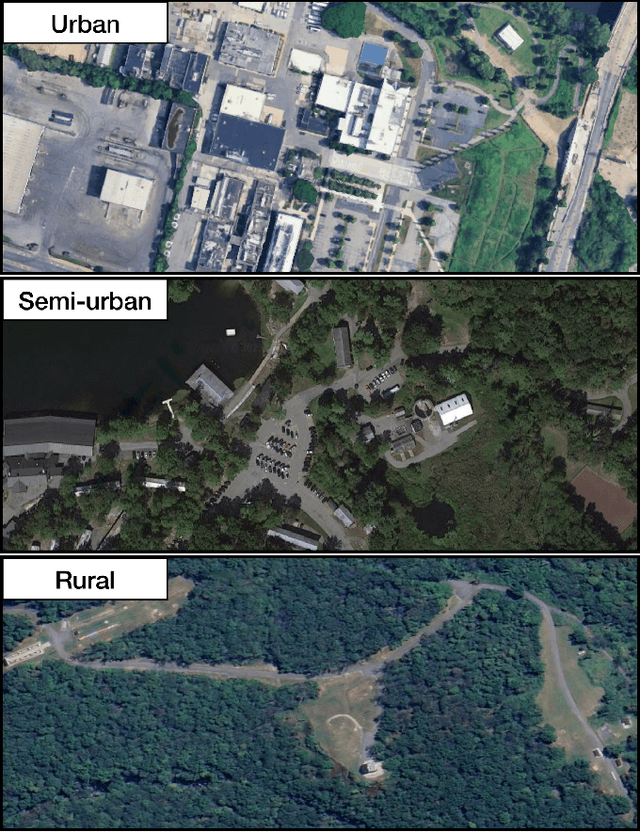

Abstract:The integration of foundation models (FMs) into robotics has enabled robots to understand natural language and reason about the semantics in their environments. However, existing FM-enabled robots primary operate in closed-world settings, where the robot is given a full prior map or has a full view of its workspace. This paper addresses the deployment of FM-enabled robots in the field, where missions often require a robot to operate in large-scale and unstructured environments. To effectively accomplish these missions, robots must actively explore their environments, navigate obstacle-cluttered terrain, handle unexpected sensor inputs, and operate with compute constraints. We discuss recent deployments of SPINE, our LLM-enabled autonomy framework, in field robotic settings. To the best of our knowledge, we present the first demonstration of large-scale LLM-enabled robot planning in unstructured environments with several kilometers of missions. SPINE is agnostic to a particular LLM, which allows us to distill small language models capable of running onboard size, weight and power (SWaP) limited platforms. Via preliminary model distillation work, we then present the first language-driven UAV planner using on-device language models. We conclude our paper by proposing several promising directions for future research.

Air-Ground Collaboration for Language-Specified Missions in Unknown Environments

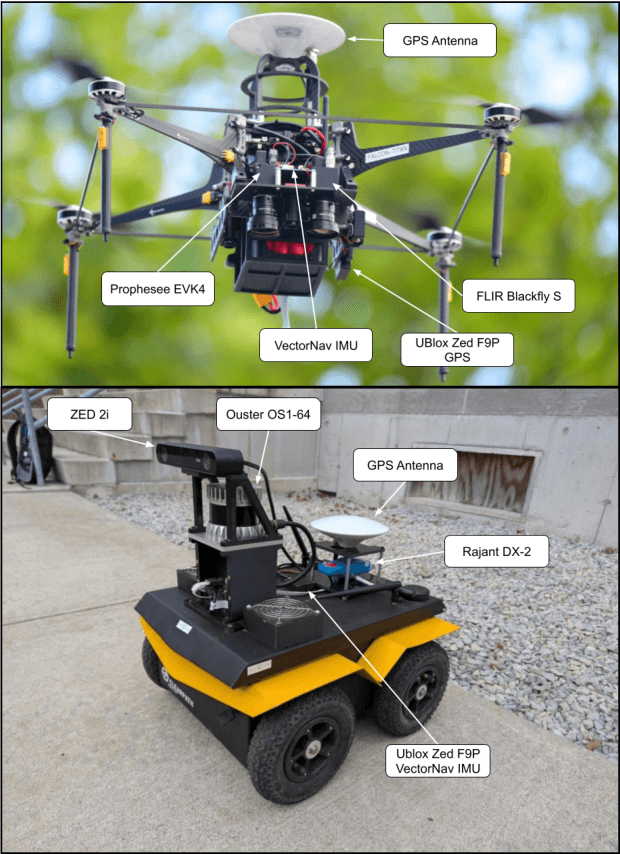

May 14, 2025Abstract:As autonomous robotic systems become increasingly mature, users will want to specify missions at the level of intent rather than in low-level detail. Language is an expressive and intuitive medium for such mission specification. However, realizing language-guided robotic teams requires overcoming significant technical hurdles. Interpreting and realizing language-specified missions requires advanced semantic reasoning. Successful heterogeneous robots must effectively coordinate actions and share information across varying viewpoints. Additionally, communication between robots is typically intermittent, necessitating robust strategies that leverage communication opportunities to maintain coordination and achieve mission objectives. In this work, we present a first-of-its-kind system where an unmanned aerial vehicle (UAV) and an unmanned ground vehicle (UGV) are able to collaboratively accomplish missions specified in natural language while reacting to changes in specification on the fly. We leverage a Large Language Model (LLM)-enabled planner to reason over semantic-metric maps that are built online and opportunistically shared between an aerial and a ground robot. We consider task-driven navigation in urban and rural areas. Our system must infer mission-relevant semantics and actively acquire information via semantic mapping. In both ground and air-ground teaming experiments, we demonstrate our system on seven different natural-language specifications at up to kilometer-scale navigation.

Challenges and Opportunities for Large-Scale Exploration with Air-Ground Teams using Semantics

May 12, 2024

Abstract:One common and desirable application of robots is exploring potentially hazardous and unstructured environments. Air-ground collaboration offers a synergistic approach to addressing such exploration challenges. In this paper, we demonstrate a system for large-scale exploration using a team of aerial and ground robots. Our system uses semantics as lingua franca, and relies on fully opportunistic communications. We highlight the unique challenges from this approach, explain our system architecture and showcase lessons learned during our experiments. All our code is open-source, encouraging researchers to use it and build upon.

Dynamic and Distributed Optimization for the Allocation of Aerial Swarm Vehicles

Nov 30, 2022

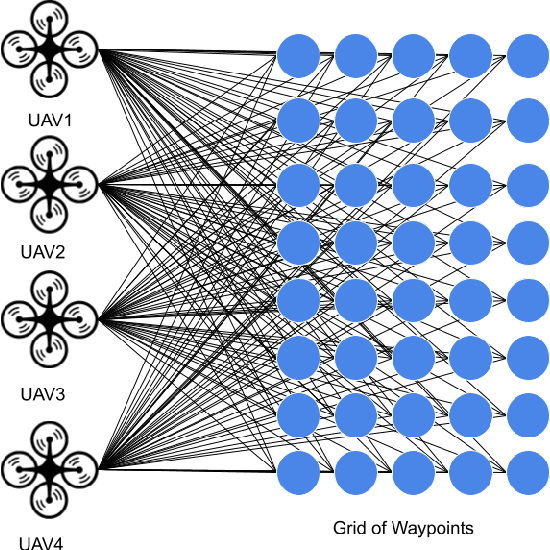

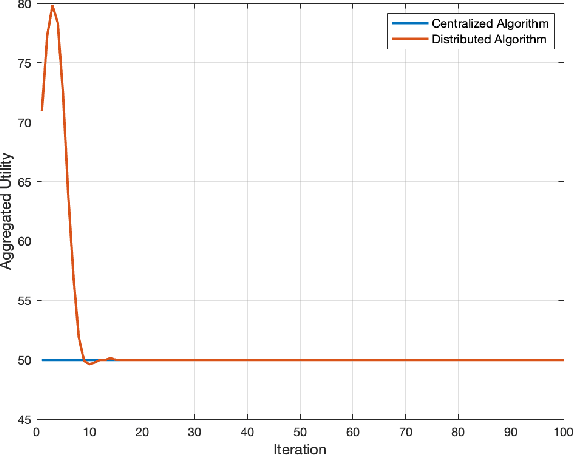

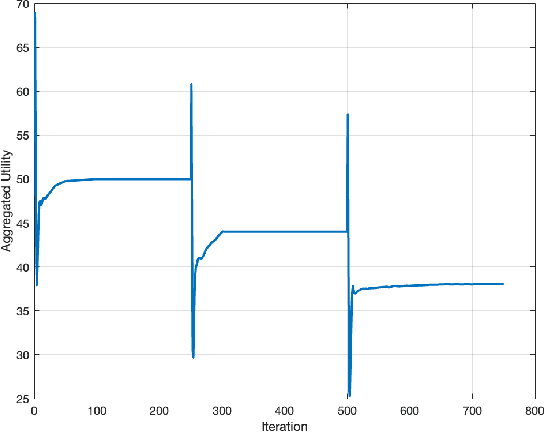

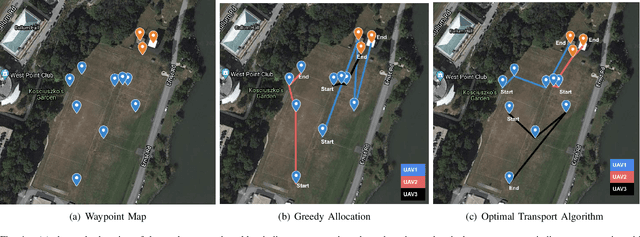

Abstract:Optimal transport (OT) is a framework that can guide the design of efficient resource allocation strategies in a network of multiple sources and targets. This paper applies discrete OT to a swarm of UAVs in a novel way to achieve appropriate task allocation and execution. Drone swarm deployments already operate in multiple domains where sensors are used to gain knowledge of an environment [1]. Use cases such as, chemical and radiation detection, and thermal and RGB imaging create a specific need for an algorithm that considers parameters on both the UAV and waypoint side and allows for updating the matching scheme as the swarm gains information from the environment. Additionally, the need for a centralized planner can be removed by using a distributed algorithm that can dynamically update based on changes in the swarm network or parameters. To this end, we develop a dynamic and distributed OT algorithm that matches a UAV to the optimal waypoint based on one parameter at the UAV and another parameter at the waypoint. We show the convergence and allocation of the algorithm through a case study and test the algorithm's effectiveness against a greedy assignment algorithm in simulation.

Wall Detection Via IMU Data Classification In Autonomous Quadcopters

Mar 29, 2021

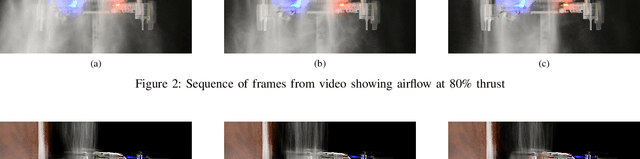

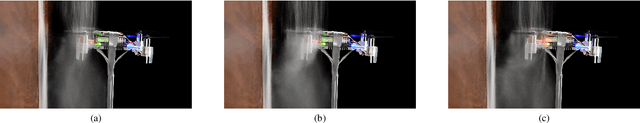

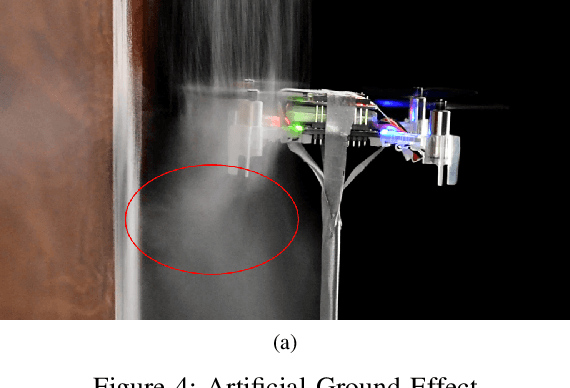

Abstract:An autonomous drone flying near obstacles needs to be able to detect and avoid the obstacles or it will collide with them. In prior work, drones can detect and avoid walls using data from camera, ultrasonic or laser sensors mounted either on the drone or in the environment. It is not always possible to instrument the environment, and sensors added to the drone consume payload and power - both of which are constrained for drones. This paper studies how data mining classification techniques can be used to predict where an obstacle is in relation to the drone based only on monitoring air-disturbance. We modeled the airflow of the rotors physically to deduce higher level features for classification. Data was collected from the drone's IMU while it was flying with a wall to its direct left, front and right, as well as with no walls present. In total 18 higher level features were produced from the raw data. We used an 80%, 20% train-test scheme with the RandomForest (RF), K-Nearest Neighbor (KNN) and GradientBoosting (GB) classifiers. Our results show that with the RF classifier and with 90% accuracy it can predict which direction a wall is in relation to the drone.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge