Damian Lyons

Adaptive Thresholding for Visual Place Recognition using Negative Gaussian Mixture Statistics

Dec 09, 2025

Abstract:Visual place recognition (VPR) is an important component technology for camera-based mapping and navigation applications. This is a challenging problem because images of the same place may appear quite different for reasons including seasonal changes, weather, illumination, structural changes to the environment, and transient pedestrian or vehicle traffic. Papers focusing on generating image descriptors for VPR report their results using metrics such as recall@K and ROC curves. However, for a robot implementation, determining which matches are sufficiently good is often reduced to a manually set threshold, and it is difficult to manually select a threshold that works across a variety of visual scenarios. This paper addresses the problem of automatically selecting a threshold for VPR by looking at the 'negative' Gaussian mixture statistics for a place: image statistics indicating 'not this place'. We show that this approach can be used to select thresholds that work well for a variety of image databases and image descriptors.
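As a rough illustration of the idea of thresholding from 'negative' statistics, the sketch below sets a match threshold from the similarity scores of images known not to show the place. It is a deliberately simplified single-Gaussian stand-in, not the paper's Gaussian mixture method; the function names, the `k` multiplier, and the assumption that higher scores mean more similar are all hypothetical choices made for this example.

```python
from statistics import mean, stdev


def negative_threshold(negative_scores, k=3.0):
    """Return mean + k * sample std of scores from known non-matching images.

    Assumption: scores are similarities (higher = more alike), and a single
    Gaussian is an adequate summary of the negatives. The abstract describes
    Gaussian mixture statistics; this is only a one-component simplification.
    """
    if len(negative_scores) < 2:
        raise ValueError("need at least two negative scores")
    return mean(negative_scores) + k * stdev(negative_scores)


def accept_matches(candidate_scores, threshold):
    """Return indices of candidates whose score exceeds the threshold."""
    return [i for i, score in enumerate(candidate_scores) if score > threshold]
```

Because the threshold is derived per place from its own negatives, it adapts to descriptors and scenes whose score ranges differ, which is the motivation the abstract gives for avoiding a single hand-set value.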

Improving Classification of Occluded Objects through Scene Context

Oct 30, 2025

Abstract:The presence of occlusions poses substantial challenges to otherwise powerful object recognition algorithms. Additional sources of information can be extremely valuable in reducing errors caused by occlusions. Scene context is known to aid object recognition in biological vision. In this work, we attempt to add robustness to existing Region Proposal Network-Deep Convolutional Neural Network (RPN-DCNN) object detection networks through two distinct scene-based information fusion techniques. We present one algorithm under each methodology: the first operates prior to prediction, selecting a custom object network to use based on the identified background scene, and the second operates after detection, fusing scene knowledge into initial object scores output by the RPN. We demonstrate our algorithms on challenging datasets featuring partial occlusions, showing overall improvement in both recall and precision over baseline methods. In addition, our experiments contrast multiple training methodologies for occlusion handling, finding that training on a combination of occluded and unoccluded images outperforms the alternatives. Our method is interpretable and can easily be adapted to other datasets, offering many future directions for research and practical applications.

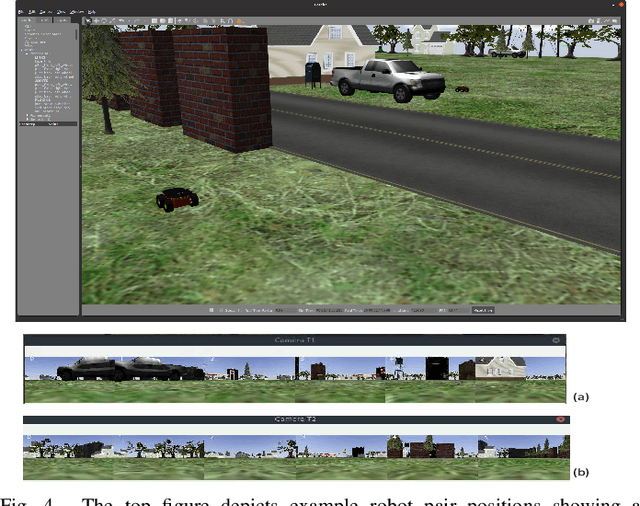

A Decentralized Cooperative Navigation Approach for Visual Homing Networks

Oct 02, 2023

Abstract:Visual homing is a lightweight approach to visual navigation. Given the stored information of an initial 'home' location, navigation back to this location is achieved from any other location by comparing the stored home information to the current image and extracting a motion vector. A challenge that constrains the applicability of visual homing is that the home location must be within the robot's field of view to initiate the homing process. Thus, we propose a blockchain approach to visual navigation for a heterogeneous robot team operating over a wide area. Because it does not require map data structures, the approach is useful for robot platforms with a small computational footprint, and because it leverages current visual information, it supports resilient and adaptive path selection. Further, we present a lightweight Proof-of-Work (PoW) mechanism for reaching consensus in the untrustworthy visual homing network.

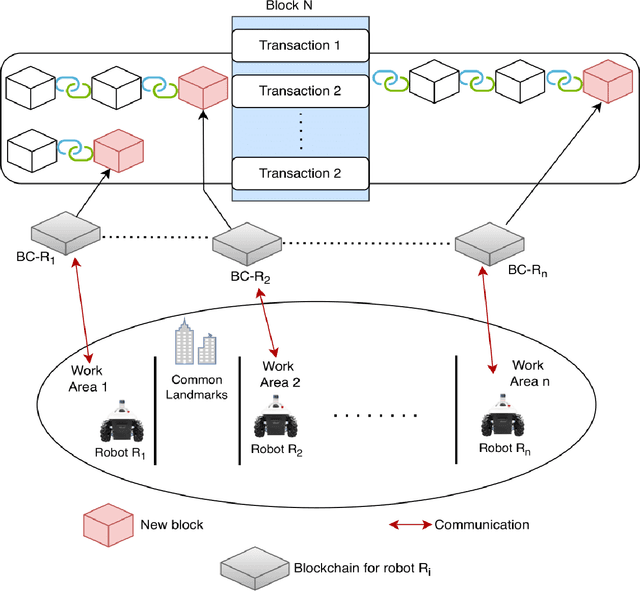

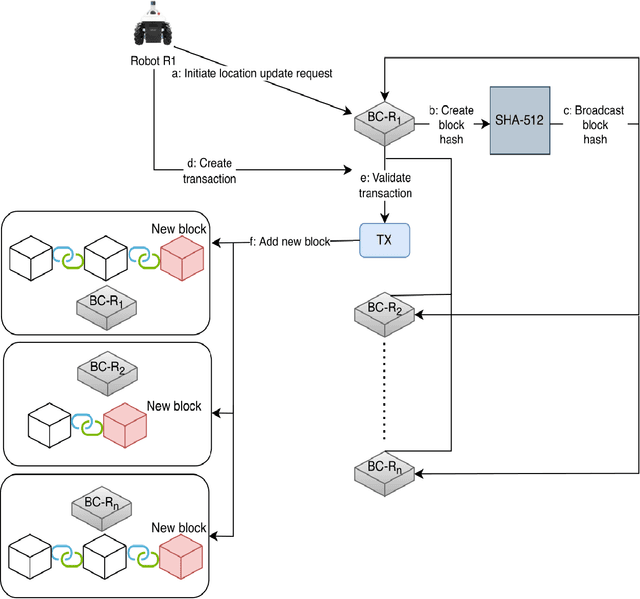

VRChain: A Blockchain-Enabled Framework for Visual Homing and Navigation Robots

Jun 08, 2022

Abstract:Visual homing is a lightweight approach to robot visual navigation. Based upon stored visual information of a home location, the navigation back to this location can be accomplished from any other location in which this location is visible by comparing home to the current image. However, a key challenge of visual homing is that the target home location must be within the robot's field of view (FOV) to start homing. Therefore, this work addresses such a challenge by integrating blockchain technology into the visual homing navigation system. Based on the decentralized feature of blockchain, the proposed solution enables visual homing robots to share their visual homing information and synchronously access the stored data (visual homing information) in the decentralized ledger to establish the navigation path. The navigation path represents a per-robot sequence of views stored in the ledger. If the home location is not in the FOV, the proposed solution permits a robot to find another robot that can see the home location and travel towards that desired location. The evaluation results demonstrate the efficiency of the proposed framework in terms of end-to-end latency, throughput, and scalability.

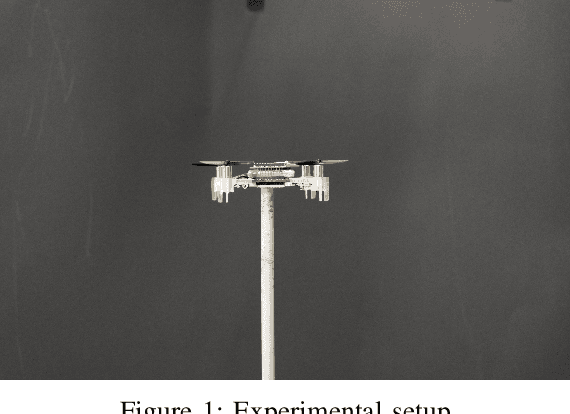

Wall Detection Via IMU Data Classification In Autonomous Quadcopters

Mar 29, 2021

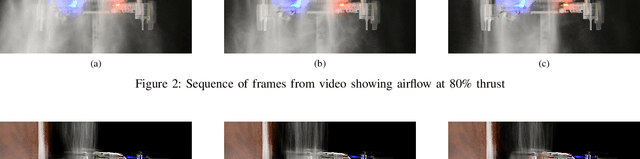

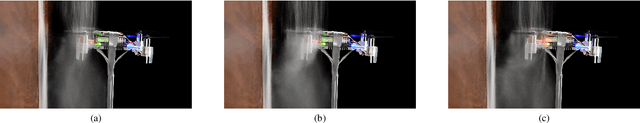

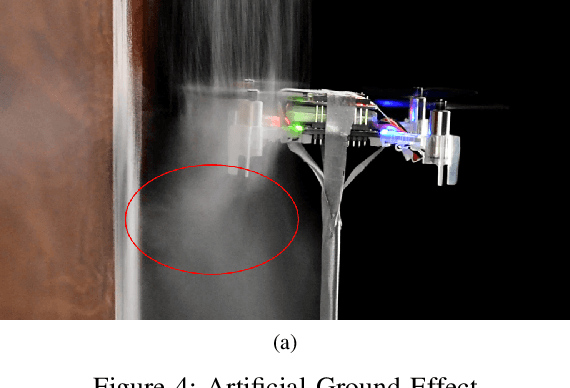

Abstract:An autonomous drone flying near obstacles needs to be able to detect and avoid the obstacles or it will collide with them. In prior work, drones detect and avoid walls using data from camera, ultrasonic or laser sensors mounted either on the drone or in the environment. It is not always possible to instrument the environment, and sensors added to the drone consume payload and power - both of which are constrained for drones. This paper studies how data mining classification techniques can be used to predict where an obstacle is in relation to the drone based only on monitoring air disturbance. We modeled the airflow of the rotors physically to deduce higher level features for classification. Data was collected from the drone's IMU while it was flying with a wall to its direct left, front and right, as well as with no walls present. In total, 18 higher level features were produced from the raw data. We used an 80%/20% train-test split with the RandomForest (RF), K-Nearest Neighbor (KNN) and GradientBoosting (GB) classifiers. Our results show that the RF classifier can predict, with 90% accuracy, the direction of a wall in relation to the drone.
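The evaluation scheme mentioned in this abstract, an 80%/20% train-test split followed by classifier accuracy, can be sketched in a few lines. The example below is a dependency-free illustration only: it uses a 1-nearest-neighbor rule as a stand-in for the classifiers named above, and every function name, the `(features, label)` row layout, and the fixed seed are assumptions made for this sketch rather than details from the paper.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of rows and return (train, test) with the given test share."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def predict_1nn(train, features):
    """Return the label of the training row closest to features (squared Euclidean)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    nearest = min(train, key=lambda row: sq_dist(row[0], features))
    return nearest[1]


def accuracy(train, test):
    """Fraction of test rows whose predicted label equals the true label."""
    correct = sum(predict_1nn(train, feats) == label for feats, label in test)
    return correct / len(test)
```

Each row is a `(features, label)` pair, where the label would be a wall direction such as left, front, right, or none. Holding out 20% of the rows gives an accuracy estimate on data the classifier has not seen during training.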

Evaluating the Potential of Drone Swarms in Nonverbal HRI Communication

Jul 11, 2020

Abstract:Human-to-human communications are enriched with affects and emotions, conveyed and perceived through both verbal and nonverbal communication. It is our thesis that drone swarms can be used to communicate information enriched with affects via nonverbal channels: guiding, generally interacting with, or warning a human audience via their pattern of motions or behavior. Furthermore, this approach has unique advantages, such as flexibility and mobility, over other forms of user interface. In this paper, we present a user study to understand how human participants perceived and interpreted swarm behaviors of micro-drone Crazyflie quadcopters flying three different flight formations, in order to bridge the psychological gap between front-end technologies (drones) and the human observers' emotional perceptions. We ask whether a human observer would in fact consider a swarm of drones in their immediate vicinity to be nonthreatening enough to be a vehicle for communication, and whether a human would intuit some communication from the swarm behavior despite the lack of verbal or written language. Our results show that there is statistically significant support for the thesis that a human participant is open to interpreting the motion of drones as having intent and to potentially interpreting their motion as communication. This supports the potential use of drone swarms as a communication resource in emergency guidance situations, policing of public events, tour guidance, etc.

A Monte Carlo Approach to Closing the Reality Gap

May 08, 2020

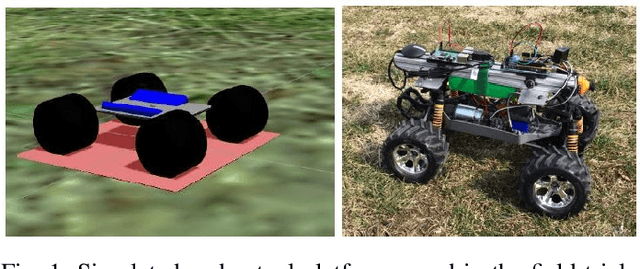

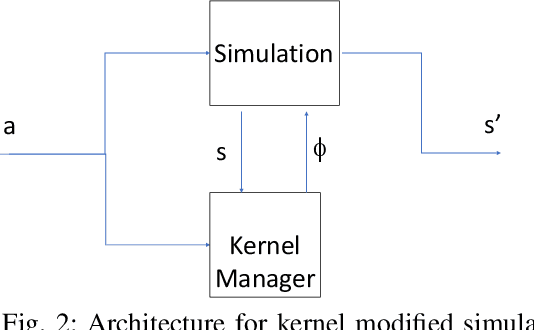

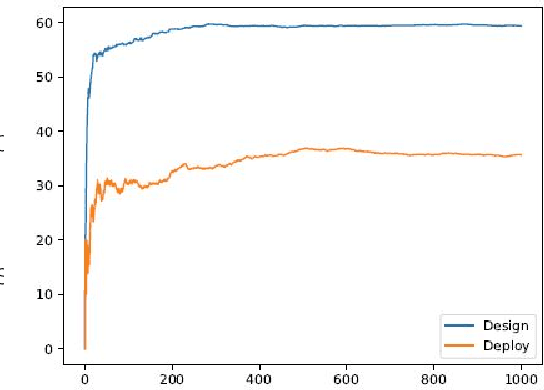

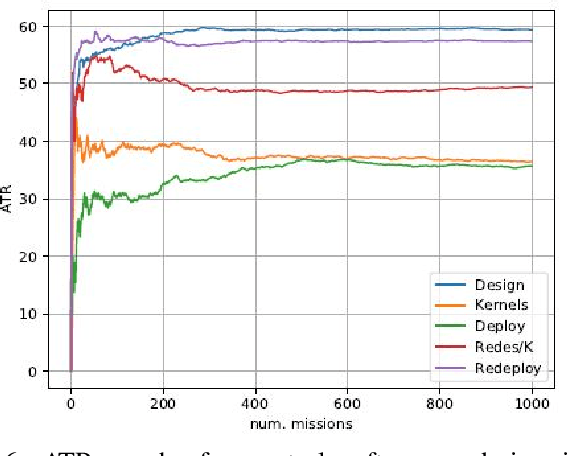

Abstract:We propose a novel approach to the 'reality gap' problem, i.e., modifying a robot simulation so that its performance becomes more similar to observed real world phenomena. This problem arises whether the simulation is being used by human designers or in an automated policy development mechanism. We expect that the program/policy is developed using simulation, and subsequently deployed on a real system. We further assume that the program includes a monitor procedure with scalar output to determine when it is achieving its performance objectives. The proposed approach collects simulation and real world observations and builds conditional probability functions. These functions are used to generate paired roll-outs that identify points of divergence in behavior. The divergence points are in turn used to generate state-space kernels that coerce the simulation into behaving more like observed reality. The method was evaluated using ROS/Gazebo for simulation and a heavily modified Traxxas platform in outdoor deployment. The results support not just that the kernel approach can force the simulation to behave more like reality, but that the modification is such that an improved control policy tested in the modified simulation also performs better in the real world.

Constructionist Steps Towards an Autonomously Empathetic System

Aug 02, 2018

Abstract:Prior efforts to create an autonomous computer system capable of predicting what a human being is thinking or feeling from facial expression data have been largely based on outdated, inaccurate models of how emotions work that rely on many scientifically questionable assumptions. In our research, we are creating an empathetic system that incorporates the latest provable scientific understanding of emotions: that they are constructs of the human mind, rather than universal expressions of distinct internal states. Thus, our system uses a user-dependent method of analysis and relies heavily on contextual information to make predictions about what subjects are experiencing. Our system's accuracy and therefore usefulness are built on provable ground truths that prohibit the drawing of inaccurate conclusions that other systems could too easily make.

Towards Affective Drone Swarms: A Preliminary Crowd-Sourced Study

May 31, 2018

Abstract:Drone swarms are teams of autonomous unmanned aerial vehicles that act as a collective entity. We are interested in humanizing drone swarms, equipping them with the ability to emotionally affect human users through their non-verbal motions. Inspired by recent findings on how observers are emotionally touched by watching dance moves, we investigate whether and how coordinated drone swarms' motions can achieve emotive impacts on a general audience. Our preliminary study on Amazon Mechanical Turk led to a number of interesting findings, including both promising results and challenges.
