Truong-Huy D. Nguyen

Towards Affective Drone Swarms: A Preliminary Crowd-Sourced Study

May 31, 2018

Abstract: Drone swarms are teams of autonomous unmanned aerial vehicles that act as a collective entity. We are interested in humanizing drone swarms by equipping them with the ability to emotionally affect human users through their non-verbal motions. Inspired by recent findings on how observers are emotionally touched by watching dance moves, we investigate whether and how the coordinated motions of drone swarms can have an emotive impact on a general audience. Our preliminary study on Amazon Mechanical Turk led to a number of interesting findings, including both promising results and challenges.

Modeling Game Avatar Synergy and Opposition through Embedding in Multiplayer Online Battle Arena Games

Mar 28, 2018

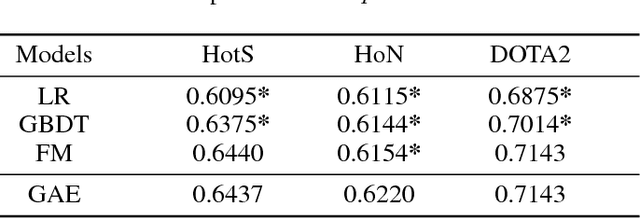

Abstract: Multiplayer Online Battle Arena (MOBA) games have recently gained popularity worldwide. In such games, players compete in teams against each other by controlling selected game avatars, each of which is designed with different strengths and weaknesses. Intuitively, putting together game avatars that complement each other (synergy) and suppress those of opponents (opposition) would result in a stronger team. An in-depth understanding of synergy and opposition relationships among game avatars helps players make decisions during avatar drafting and better predict match events. However, due to the intricate design of game avatars and the complex interactions between them, a thorough understanding of their relationships is not a trivial task. In this paper, we propose a latent variable model, namely Game Avatar Embedding (GAE), to learn numerical representations of avatars that encode synergy and opposition relationships between pairs of avatars. The merits of our model are twofold: (1) the captured synergy and opposition relationships are consistent with the perception of experienced human players; (2) the learned numerical representations of game avatars enable many important downstream tasks, such as similar avatar search, match outcome prediction, and avatar pick recommendation. To the best of our knowledge, no previous model is able to support both features simultaneously. Our quantitative and qualitative evaluations on real match data from three commercial MOBA games illustrate the benefits of our model.
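To make the idea of encoding pairwise synergy and opposition in avatar vectors concrete, here is a minimal sketch. It is not the GAE formulation from the paper, which the abstract does not specify; the dot-product scoring, the sigmoid link, the avatar names, and every vector below are assumptions chosen purely for illustration.

```python
import math
from itertools import combinations

# Hypothetical 2-dimensional embeddings; a real model would learn these from match data.
SYNERGY = {"tank": [0.9, 0.1], "mage": [0.8, 0.3], "archer": [0.2, 0.7], "healer": [0.1, 0.9]}
ATTACK = {"tank": [0.2, 0.5], "mage": [0.9, 0.1], "archer": [0.6, 0.4], "healer": [0.1, 0.1]}
DEFEND = {"tank": [0.1, 0.2], "mage": [0.3, 0.8], "archer": [0.7, 0.3], "healer": [0.5, 0.5]}


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def team_score(team, enemy):
    """Sum of pairwise synergy within `team` plus pairwise opposition against `enemy`."""
    synergy = sum(dot(SYNERGY[a], SYNERGY[b]) for a, b in combinations(team, 2))
    opposition = sum(dot(ATTACK[a], DEFEND[e]) for a in team for e in enemy)
    return synergy + opposition


def win_probability(team_a, team_b):
    """Map the score margin between the two teams to a probability that team_a wins."""
    margin = team_score(team_a, team_b) - team_score(team_b, team_a)
    return 1.0 / (1.0 + math.exp(-margin))


if __name__ == "__main__":
    print(win_probability(["tank", "mage"], ["archer", "healer"]))
```

Because the margin is antisymmetric, the two teams' win probabilities always sum to one, and a mirror match yields exactly 0.5. The same vectors could in principle support the other downstream tasks the abstract lists, for example ranking candidate picks by the win probability they produce.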

Hand Gesture Controlled Drones: An Open Source Library

Mar 27, 2018

Abstract: Drones are conventionally controlled using joysticks, remote controllers, mobile applications, and embedded computers. Two significant issues with these approaches are that drone control is limited by the range of electromagnetic radiation and is susceptible to interference noise. In this study, we propose the use of hand gestures as a method to control drones. We investigate the use of computer vision methods to develop an intuitive way of agent-less communication between a drone and its operator. Computer vision-based methods rely on the ability of a drone's camera to capture images of its surroundings and use pattern recognition to translate those images into meaningful and/or actionable information. The proposed framework involves three key steps leading to the action taken: image segregation from the video stream of the front camera, robust and reliable image recognition based on the segregated images, and finally conversion of classified gestures into actionable drone movements, such as takeoff, landing, hovering, and so forth. A set of five gestures is studied in this work. A Haar feature-based AdaBoost classifier is employed for gesture recognition. We also consider the safety of the operator and of the drone's actions by calculating distance using computer vision. A series of experiments is conducted to measure gesture recognition accuracy under the major sources of scene variability: illumination, background, and distance. Classification accuracies show that gestures made in well-lit conditions, against a clear background, and within 3 ft are recognized correctly over 90% of the time. Limitations of the current framework and feasible solutions for better gesture recognition are also discussed. The software library we developed and the hand gesture data sets are open-sourced at the project website.
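The last step of the framework, turning a classified gesture into a drone action, can be sketched as follows. This is not the API of the open-source library, which the abstract does not describe; the gesture labels, the function name, the confidence threshold, and the fall-back-to-hover policy are all hypothetical. Only the action names (takeoff, landing, hovering) and the 3 ft range come from the abstract.

```python
# Hypothetical gesture labels; the abstract says five gestures were studied but does not name them.
GESTURE_TO_COMMAND = {
    "palm": "takeoff",
    "fist": "land",
    "peace": "hover",
}

# The abstract reports over 90% accuracy for gestures within 3 ft under good conditions.
MAX_RELIABLE_DISTANCE_FT = 3.0


def gesture_to_command(label, distance_ft, confidence, min_confidence=0.9):
    """Return a drone command for a classified gesture, or "hover" when unsure.

    Hovering as the safe default is an assumption of this sketch, not a
    behavior documented in the source.
    """
    if label not in GESTURE_TO_COMMAND:
        return "hover"  # unknown gesture: do nothing risky
    if distance_ft > MAX_RELIABLE_DISTANCE_FT or confidence < min_confidence:
        return "hover"  # too far away or too uncertain to act on
    return GESTURE_TO_COMMAND[label]


if __name__ == "__main__":
    print(gesture_to_command("palm", distance_ft=2.0, confidence=0.95))
```

Keeping the mapping in a plain dictionary separates recognition from control, so the gesture set can change without touching the dispatch logic.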

Player Skill Decomposition in Multiplayer Online Battle Arenas

Feb 21, 2017

Abstract: Successful analysis of player skills in video games has an important impact on enhancing player experience without undermining players' continuous skill development. Moreover, player skill analysis becomes more intriguing in team-based video games because such studies can help discover useful factors in effective team formation. In this paper, we consider the problem of skill decomposition in MOBA (Multiplayer Online Battle Arena) games, with the goal of understanding which player skill factors are essential to the outcome of a game match. To understand the construct of MOBA player skills, we utilize various skill-based predictive models to decompose player skills into interpretable parts, the impact of which is assessed in statistical terms. We apply this analysis approach to two widely known MOBAs, namely League of Legends (LoL) and Defense of the Ancients 2 (DOTA2). We find that the base skills of in-game avatars, the base skills of players, and players' champion-specific skills are three prominent skill components influencing LoL's match outcomes, while DOTA2's match outcomes are mainly affected by in-game avatars' base skills and much less by the other two.
