Jacob Lambert

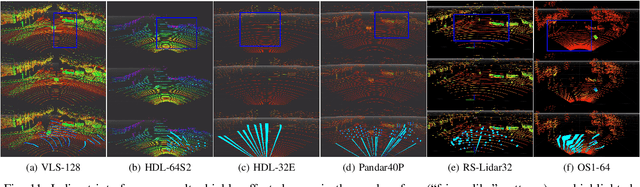

Characterization of Multiple 3D LiDARs for Localization and Mapping using Normal Distributions Transform

Apr 03, 2020

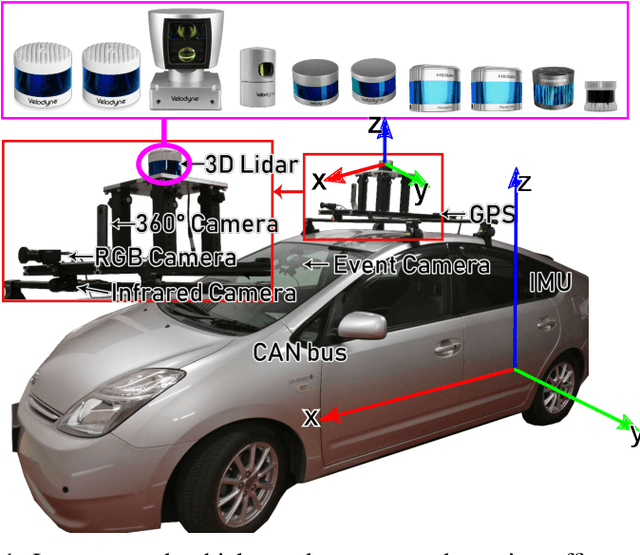

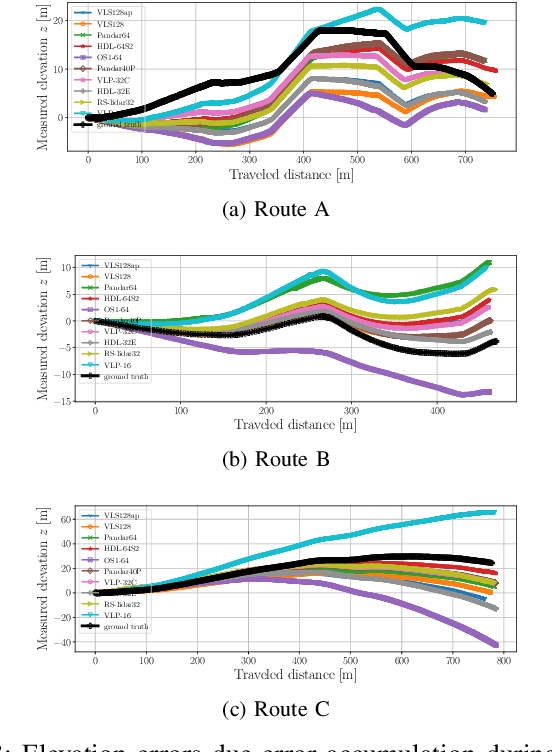

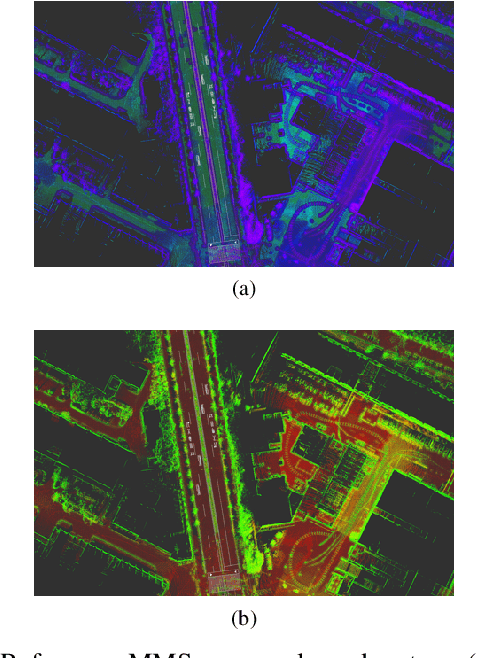

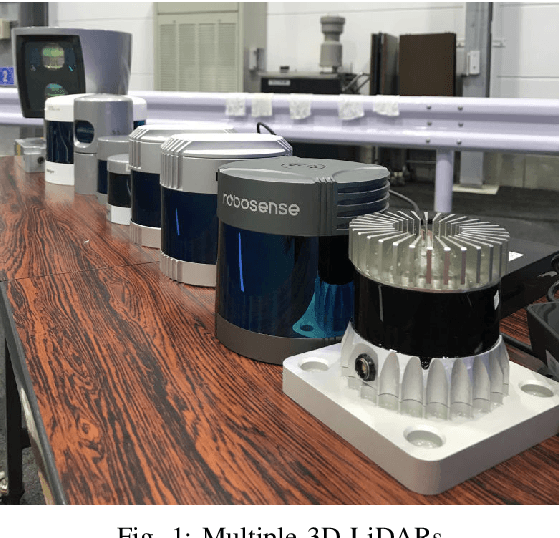

Abstract: In this work, we present a detailed comparison of ten different 3D LiDAR sensors, covering a range of manufacturers, models, and laser configurations, for the tasks of mapping and vehicle localization, using as a common reference the Normal Distributions Transform (NDT) algorithm implemented in the open-source self-driving platform Autoware. The LiDAR data used in this study is a subset of our LiDAR Benchmarking and Reference (LIBRE) dataset, captured independently from each sensor, from a vehicle driven on public urban roads multiple times, at different times of the day. In this study, we analyze the performance and characteristics of each LiDAR for the tasks of (1) 3D mapping, including an assessment of map quality based on mean map entropy, and (2) 6-DOF localization using a ground truth reference map.
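The abstract names mean map entropy as its map-quality measure without spelling it out. As a rough illustration, the sketch below follows the commonly used definition: fit a Gaussian to the neighbors of each map point, take its differential entropy, and average over the map, so that sharper maps score lower. The function name `mean_map_entropy`, the `radius` and `min_neighbors` parameters, and the brute-force neighbor search are assumptions made for this example; they are not taken from the paper or from Autoware.

```python
import numpy as np


def mean_map_entropy(points, radius=0.3, min_neighbors=5):
    """Average per-point entropy of local Gaussian fits (lower means crisper).

    Hypothetical helper for illustration; parameter values are assumptions.
    """
    points = np.asarray(points, dtype=float)
    entropies = []
    for p in points:
        # Brute-force radius search; a k-d tree would be preferable for real maps.
        dists = np.linalg.norm(points - p, axis=1)
        neighbors = points[dists <= radius]
        if len(neighbors) < min_neighbors:
            continue  # too few points for a meaningful covariance
        cov = np.cov(neighbors, rowvar=False)
        # Differential entropy of a Gaussian: 0.5 * ln(det(2 * pi * e * cov)).
        sign, logdet = np.linalg.slogdet(2.0 * np.pi * np.e * cov)
        if sign <= 0:
            continue  # degenerate (e.g. perfectly flat) neighborhood
        entropies.append(0.5 * logdet)
    if not entropies:
        raise ValueError("no point had a usable neighborhood")
    return float(np.mean(entropies))
```

Under these assumptions, a noisy scan of a flat surface should yield a higher value than a clean scan of the same surface, which is what makes the measure usable for comparing maps built from different sensors.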

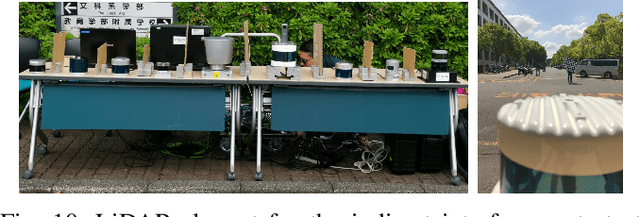

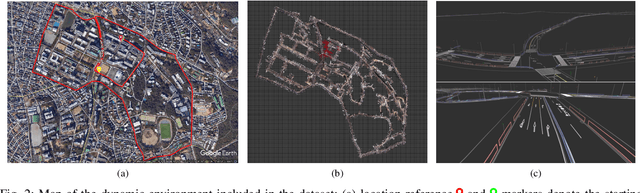

LIBRE: The Multiple 3D LiDAR Dataset

Mar 13, 2020

Abstract: In this work, we present LIBRE: LiDAR Benchmarking and Reference, a first-of-its-kind dataset featuring 12 different LiDAR sensors, covering a range of manufacturers, models, and laser configurations. Data captured independently from each sensor includes four different environments and configurations: static obstacles placed at known distances and measured from a fixed position within a controlled environment; static obstacles measured from a moving vehicle, captured in a weather chamber where LiDARs were exposed to different conditions (fog, rain, strong light); dynamic objects actively measured from a fixed position by multiple LiDARs mounted side-by-side simultaneously, creating indirect interference conditions; and dynamic traffic objects captured from a vehicle driven on public urban roads multiple times at different times of the day, including data from supporting sensors such as cameras, infrared imaging, and odometry devices. LIBRE will contribute to the research community by (1) providing a means for a fair comparison of currently available LiDARs, and (2) facilitating the improvement of existing self-driving vehicles and robotics-related software, in terms of development and tuning of LiDAR-based perception algorithms.

A Survey of Autonomous Driving: Common Practices and Emerging Technologies

Jun 12, 2019

Abstract: Automated driving systems (ADSs) promise a safe, comfortable and efficient driving experience. However, fatalities involving vehicles equipped with ADSs are on the rise. The full potential of ADSs cannot be realized unless the robustness of the state of the art is improved further. This paper discusses unsolved problems and surveys the technical aspects of automated driving. Studies regarding present challenges, high-level system architectures, emerging methodologies, and core functions (localization, mapping, perception, planning, and human-machine interface) were thoroughly reviewed. Furthermore, the state of the art was implemented on our own platform and various algorithms were compared in a real-world driving setting. The paper concludes with an overview of available datasets and tools for ADS development.

Risky Action Recognition in Lane Change Video Clips using Deep Spatiotemporal Networks with Segmentation Mask Transfer

Jun 07, 2019

Abstract: Advanced driver assistance and automated driving systems rely on risk estimation modules to predict and avoid dangerous situations. Current methods use expensive sensor setups and complex processing pipelines, limiting their availability and robustness. To address these issues, we introduce a novel deep-learning-based action recognition framework for classifying dangerous lane change behavior in short video clips captured by a monocular camera. We designed a deep spatiotemporal classification network that uses the pre-trained state-of-the-art instance segmentation network Mask R-CNN as its spatial feature extractor for this task. The Long Short-Term Memory (LSTM) and shallower final classification layers of the proposed method were trained on a semi-naturalistic lane change dataset with annotated risk labels. A comprehensive comparison of state-of-the-art feature extractors was carried out to find the best network layout and training strategy. The best result, with a 0.937 AUC score, was obtained with the proposed network. Our code and trained models are available as open source.

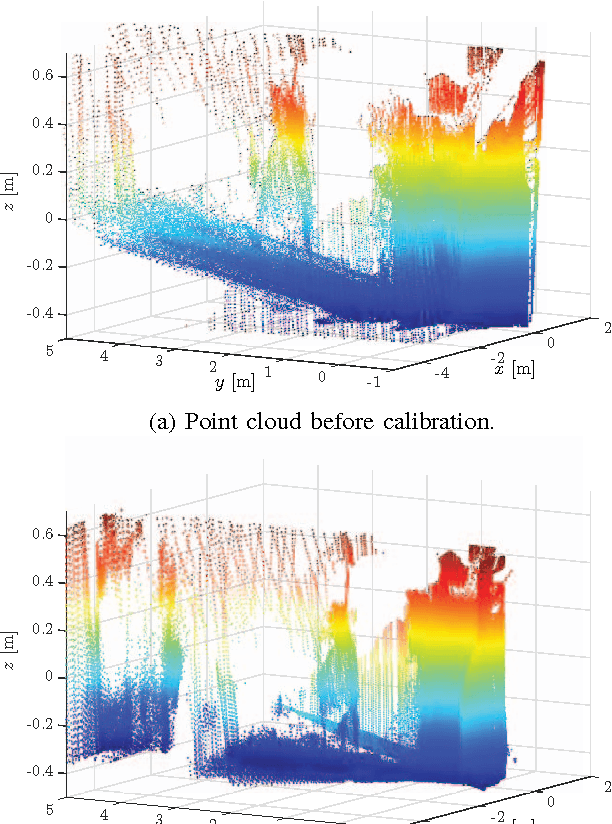

Entropy-Based $Sim(3)$ Calibration of 2D Lidars to Egomotion Sensors

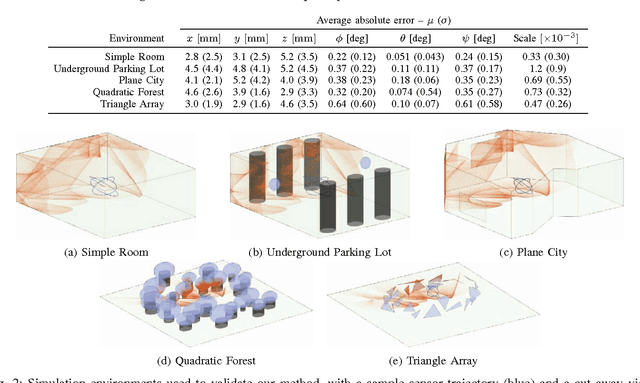

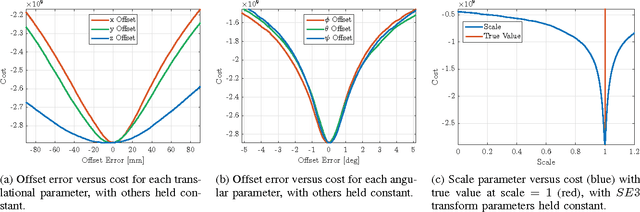

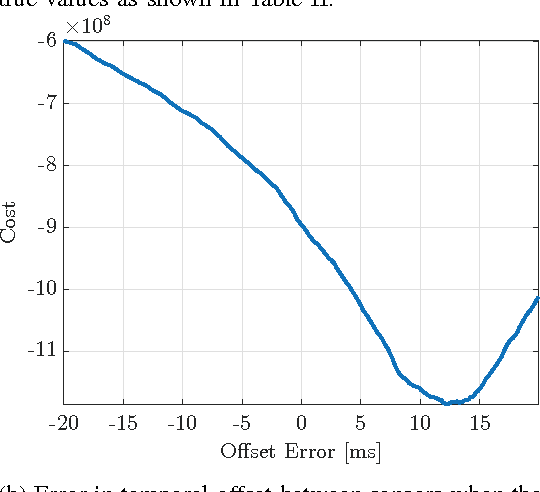

Jul 13, 2018

Abstract: This paper explores the use of an entropy-based technique for point cloud reconstruction with the goal of calibrating a lidar to a sensor capable of providing egomotion information. We extend recent work in this area to the problem of recovering the $Sim(3)$ transformation between a 2D lidar and a rigidly attached monocular camera, where the scale of the camera trajectory is not known a priori. We demonstrate the robustness of our approach on realistic simulations in multiple environments, as well as on data collected from a hand-held sensor rig. Given a non-degenerate trajectory and a sufficient number of lidar measurements, our calibration procedure achieves millimetre-scale and sub-degree accuracy. Moreover, our method relaxes the need for specific scene geometry, fiducial markers, or overlapping sensor fields of view, which had previously limited similar techniques.
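For readers unfamiliar with the notation, a $Sim(3)$ transformation is a rigid-body transform with an added uniform scale, mapping a point $p$ to $s R p + t$. The extra scale parameter is what accounts for the unknown scale of a monocular camera trajectory. The sketch below only shows how such a transform acts on points and how it is inverted; it is not the calibration procedure from the paper, and the function names `sim3_apply` and `sim3_inverse` are hypothetical.

```python
import numpy as np


def sim3_apply(scale, rotation, translation, points):
    """Map an (N, 3) array of points through p -> scale * R @ p + t."""
    points = np.asarray(points, dtype=float)
    # Row-vector form: (R @ p)^T equals p^T @ R^T.
    return scale * points @ np.asarray(rotation).T + np.asarray(translation)


def sim3_inverse(scale, rotation, translation):
    """Return (scale, R, t) of the inverse similarity transform."""
    inv_scale = 1.0 / scale
    inv_rotation = np.asarray(rotation).T
    # Solving p' = s R p + t for p gives p = (1/s) R^T p' - (1/s) R^T t.
    inv_translation = -inv_scale * inv_rotation @ np.asarray(translation)
    return inv_scale, inv_rotation, inv_translation
```

Applying a transform and then its inverse should return the original points, which is a quick sanity check when experimenting with scale ambiguity.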
