Ibrahim Sobh

Adversarial Attacks on Multi-task Visual Perception for Autonomous Driving

Jul 15, 2021

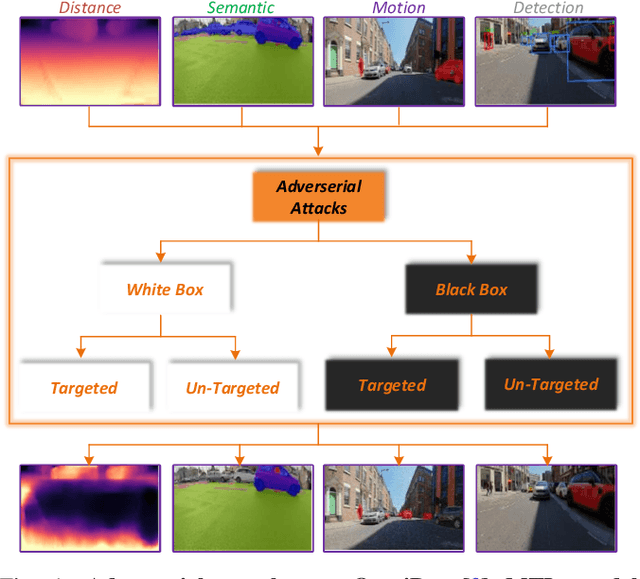

Abstract: Deep neural networks (DNNs) have achieved impressive success in recent years in various applications, including autonomous driving perception tasks. However, current deep neural networks are easily fooled by adversarial attacks. This vulnerability raises significant concerns, particularly in safety-critical applications. As a result, research into attacking and defending DNNs has attracted considerable attention. In this work, detailed adversarial attacks are applied to a diverse multi-task visual perception deep network across distance estimation, semantic segmentation, motion detection, and object detection. The experiments consider both white-box and black-box attacks for targeted and un-targeted cases, attacking one task and inspecting the effect on all the others, as well as the effect of applying a simple defense method. We conclude this paper by comparing and discussing the experimental results, proposing insights and future work. The visualizations of the attacks are available at https://youtu.be/R3JUV41aiPY.
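The abstract does not name the specific attacks used, so the following is only an illustrative sketch of the targeted versus un-targeted distinction. It assumes a fast-gradient-sign style white-box step on a toy logistic model; the names `predict`, `loss_gradient_wrt_input`, and `fgsm_step` are hypothetical and not taken from the paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model (a stand-in for a DNN)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss_gradient_wrt_input(w, b, x, y):
    """Gradient of binary cross-entropy with respect to the input x.

    For a logistic model this has the closed form (p - y) * w.
    """
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_step(w, b, x, y, eps, targeted=False):
    """One gradient-sign step (assumed attack, for illustration only).

    Un-targeted: y is the true label and the step increases the loss.
    Targeted: y is the attacker's desired label and the step decreases the loss.
    White-box access is assumed, since the gradient uses the model weights.
    """
    grad = loss_gradient_wrt_input(w, b, x, y)
    direction = -1.0 if targeted else 1.0
    return [xi + direction * eps * sign(g) for xi, g in zip(x, grad)]

# Toy example: the clean input is classified as class 1.
w, b = [2.0, -1.0], 0.0
x_clean = [0.5, 0.2]
x_untargeted = fgsm_step(w, b, x_clean, y=1, eps=0.3)
x_targeted = fgsm_step(w, b, x_clean, y=0, eps=0.3, targeted=True)
```

In this two-class toy case, pushing away from the true label and pulling toward the other label produce the same perturbation. With more classes the two cases differ, because a targeted attack must reach one chosen class rather than any wrong one.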

Deep Reinforcement Learning for Autonomous Driving: A Survey

Feb 02, 2020

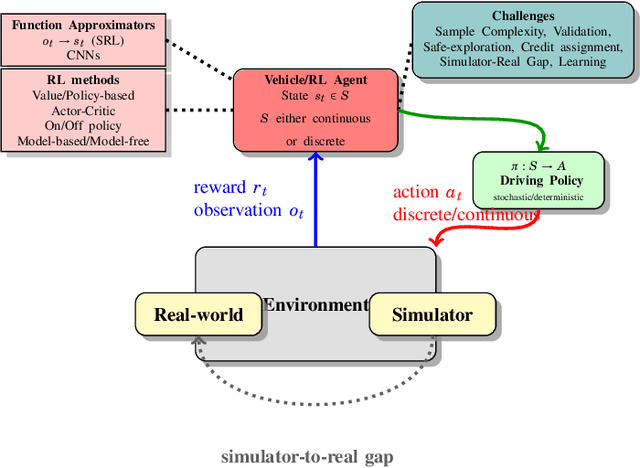

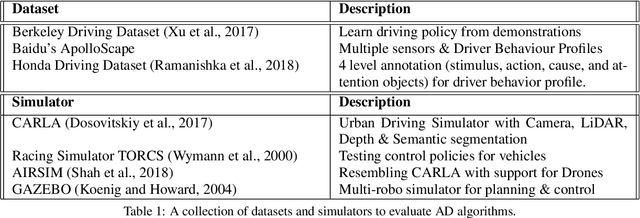

Abstract: With the development of deep representation learning, the domain of reinforcement learning (RL) has become a powerful learning framework, now capable of learning complex policies in high-dimensional environments. This review summarises deep reinforcement learning (DRL) algorithms, provides a taxonomy of automated driving tasks where (D)RL methods have been employed, and highlights the key challenges, both algorithmic and in terms of deploying real-world autonomous driving agents. It also covers the role of simulators in training agents and, finally, methods to evaluate, test, and robustify existing solutions in RL and imitation learning.

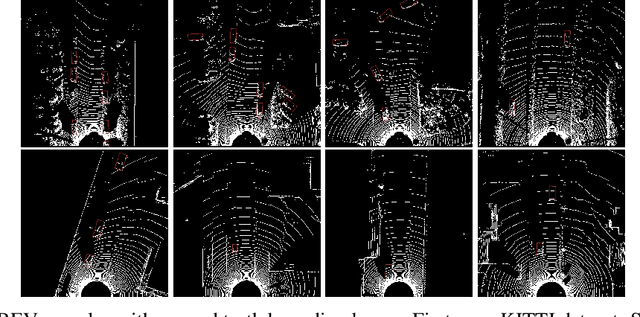

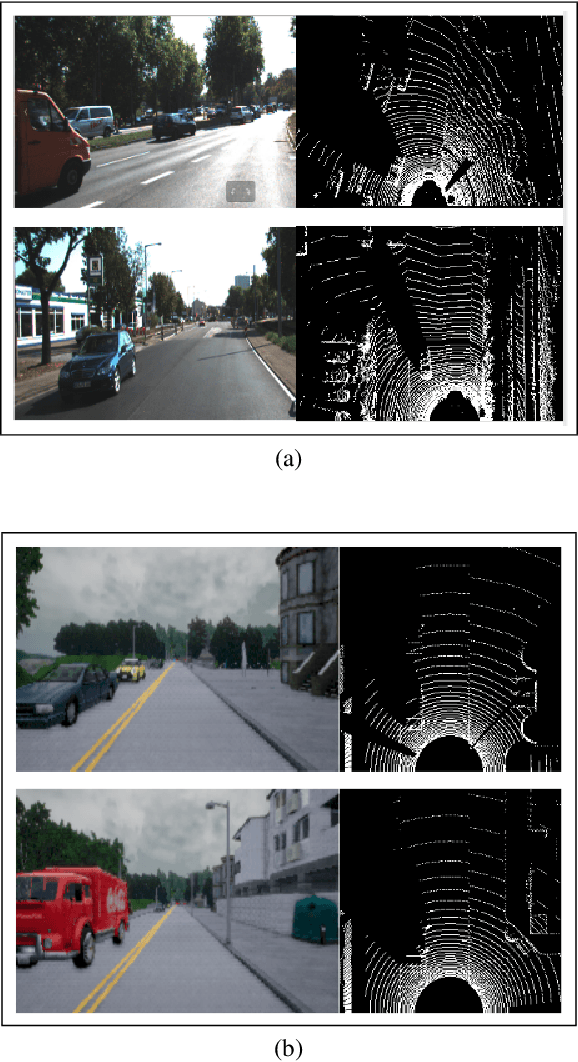

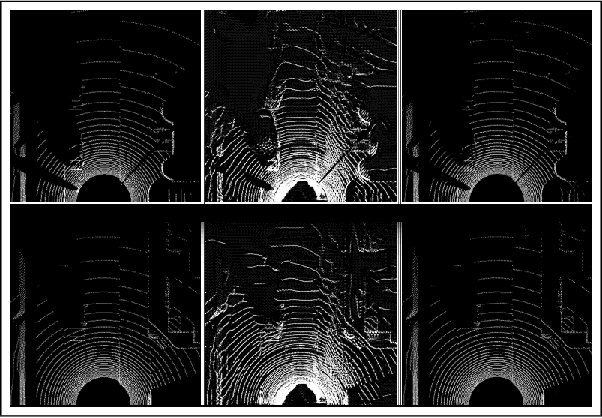

Unsupervised Neural Sensor Models for Synthetic LiDAR Data Augmentation

Nov 24, 2019

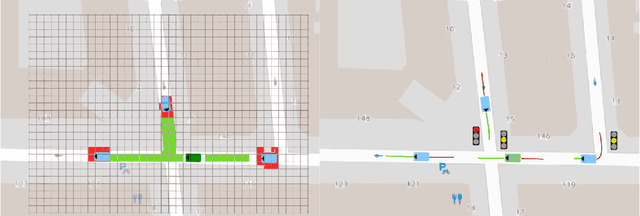

Abstract: Data scarcity is a bottleneck for machine learning-based perception modules, usually tackled by augmenting real data with synthetic data from simulators. Realistic models of the vehicle perception sensors are hard to formulate in closed form, and at the same time, they require paired data to be learned. In this work, we propose two unsupervised neural sensor models based on unpaired domain translation with CycleGANs and Neural Style Transfer techniques. We employ CARLA as the simulation environment to obtain simulated LiDAR point clouds, together with their annotations, for data augmentation, and we use the KITTI dataset as the real LiDAR dataset from which we learn the realistic sensor model mapping. Moreover, we provide a framework for data augmentation and evaluation of the developed sensor models through extrinsic evaluation on an object detection task, using a YOLO network adapted to provide oriented bounding boxes for LiDAR point clouds projected to Bird's-Eye View. Evaluation is performed on unseen real LiDAR frames from the KITTI dataset, with different amounts of simulated data augmentation using the two proposed approaches. Augmenting with LiDAR point clouds adapted by the proposed neural sensor models improves object detection by 6% mAP over augmenting with raw simulated LiDAR.

End-to-End 3D-PointCloud Semantic Segmentation for Autonomous Driving

Jun 26, 2019

Abstract: 3D semantic scene labeling is a fundamental task for autonomous driving. Recent work shows the capability of deep neural networks in labeling 3D point sets provided by sensors such as LiDAR and radar. Imbalanced distribution of classes in the dataset is one of the challenges facing the 3D semantic scene labeling task. It leads to misclassification of the non-dominant classes, which suffer from two main problems: a) rare appearance in the dataset, and b) few sensor points reflected from a single object of these classes. This paper proposes Weighted Self-Incremental Transfer Learning as a generalized methodology that addresses the problems of imbalanced training datasets. It re-weights the components of the loss function computed from individual classes based on their frequencies in the training dataset, and applies Self-Incremental Transfer Learning by running the neural network model on non-dominant classes first and then adding the dominant classes one by one. The experimental results introduce a new 3D point cloud semantic segmentation benchmark for the KITTI dataset.
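The abstract says the loss components are re-weighted by class frequency but does not give the exact formula. As a rough illustration only, the sketch below assumes inverse-frequency weights normalized to a mean of 1 across classes; the function names and the toy labels are hypothetical.

```python
import math
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by 1 / frequency, scaled so the mean class weight is 1.

    This is an assumed weighting scheme; the paper may use a different one.
    """
    counts = Counter(labels)
    total = len(labels)
    raw = {cls: total / n for cls, n in counts.items()}
    mean_raw = sum(raw.values()) / len(raw)
    return {cls: w / mean_raw for cls, w in raw.items()}

def weighted_cross_entropy(probs, labels, weights):
    """Weighted average of per-point negative log-likelihoods.

    probs:  one dict per point, mapping class name to predicted probability
    labels: the true class name of each point
    """
    total, norm = 0.0, 0.0
    for p, y in zip(probs, labels):
        w = weights[y]
        total += -w * math.log(max(p[y], 1e-12))  # clamp to avoid log(0)
        norm += w
    return total / norm

# Toy imbalanced point labels: 8 dominant points, 2 rare ones.
labels = ["road"] * 8 + ["pedestrian"] * 2
weights = inverse_frequency_weights(labels)
```

With these weights, an error on a rare-class point contributes more to the loss than an error on a dominant-class point, which is the effect the re-weighting step is meant to achieve.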

LiDAR Sensor modeling and Data augmentation with GANs for Autonomous driving

May 17, 2019

Abstract: In the autonomous driving domain, data collection and annotation from real vehicles are expensive and sometimes unsafe. Simulators are often used for data augmentation, which requires realistic sensor models that are hard to formulate in closed form. Instead, sensor models can be learned from real data. The main challenge is the absence of paired data sets, which makes traditional supervised learning techniques unsuitable. In this work, we formulate the problem as image translation from unpaired data and employ CycleGANs to solve the sensor modeling problem for LiDAR, producing realistic LiDAR from simulated LiDAR (sim2real). Further, we generate high-resolution, realistic LiDAR from lower-resolution LiDAR (real2real). The LiDAR 3D point cloud is processed in Bird's-Eye View and Polar 2D representations. The experimental results show the high potential of the proposed approach.
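To make the two 2D representations concrete, here is a minimal sketch of how a single LiDAR point could be mapped to a Bird's-Eye View grid cell and to polar coordinates. The grid extents, cell size, and function names are assumptions chosen for illustration, not values from the paper.

```python
import math

def to_bev_cell(x, y, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Map a point's (x, y) in meters to a (row, col) cell of a top-down grid.

    Returns None when the point falls outside the assumed grid extents.
    The height z is dropped here; a fuller encoding might keep it as a channel.
    """
    if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
        return None
    row = int((x - x_range[0]) / cell)
    col = int((y - y_range[0]) / cell)
    return row, col

def to_polar(x, y, z):
    """Convert a point to (range in meters, azimuth in degrees, elevation in degrees)."""
    rng = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.asin(z / rng)) if rng > 0 else 0.0
    return rng, azimuth, elevation

def bev_occupancy(points, **grid_kwargs):
    """Collect the set of occupied BEV cells for a list of (x, y, z) points."""
    cells = set()
    for x, y, _z in points:
        cell_index = to_bev_cell(x, y, **grid_kwargs)
        if cell_index is not None:
            cells.add(cell_index)
    return cells

# Toy cloud: two nearby points share a cell, one point is out of range.
cloud = [(10.0, 0.0, -1.5), (10.2, 0.1, -1.4), (50.0, 0.0, 0.0)]
occupied = bev_occupancy(cloud)
```

Either projection turns the unordered point cloud into an image-like array, which is what lets image-to-image translation models such as CycleGANs be applied to LiDAR data.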

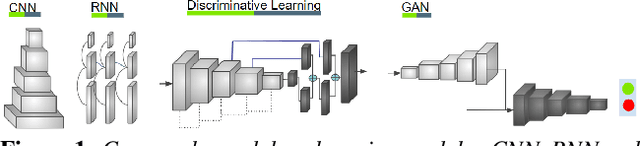

Yes, we GAN: Applying Adversarial Techniques for Autonomous Driving

Feb 09, 2019

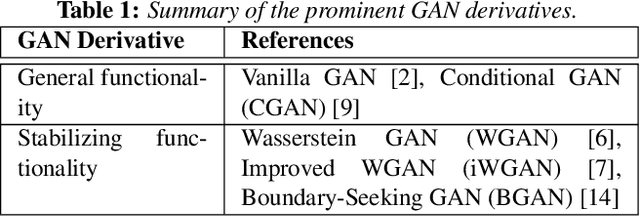

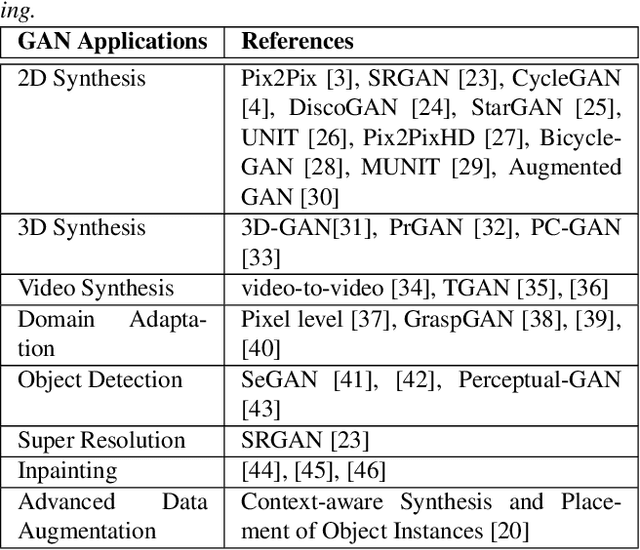

Abstract: Generative Adversarial Networks (GANs) have gained a lot of popularity since their introduction in 2014. Research on GANs is growing rapidly, and there are many variants of the original GAN focusing on various aspects of deep learning. GANs are perceived as the most impactful direction of machine learning in the last decade. This paper focuses on the application of GANs in autonomous driving, including topics such as advanced data augmentation, loss function learning, and semi-supervised learning. We formalize and review key applications of adversarial techniques and discuss challenges and open problems to be addressed.

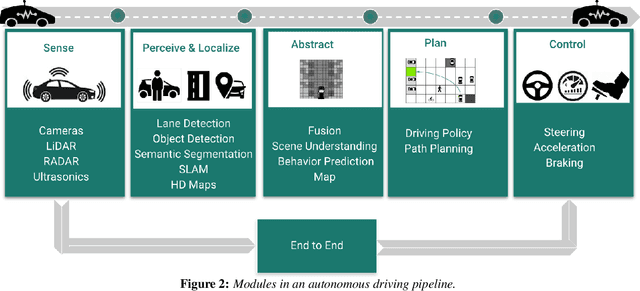

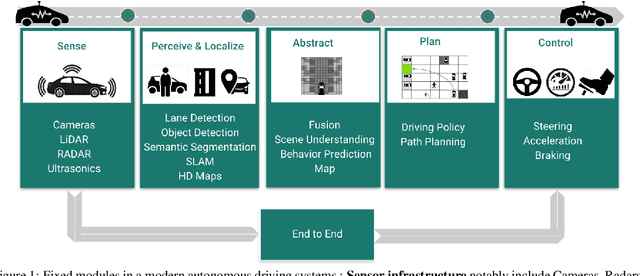

Exploring applications of deep reinforcement learning for real-world autonomous driving systems

Jan 16, 2019

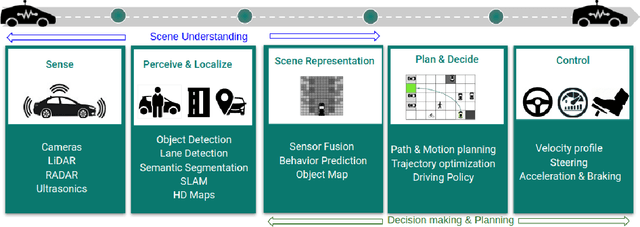

Abstract: Deep Reinforcement Learning (DRL) has become increasingly powerful in recent years, with notable achievements such as DeepMind's AlphaGo. It has been successfully deployed in commercial vehicles, as in Mobileye's path planning system. However, the vast majority of work on DRL is focused on toy examples in controlled synthetic car simulator environments such as TORCS and CARLA. In general, DRL is still in its infancy in terms of usability in real-world applications. Our goal in this paper is to encourage real-world deployment of DRL in various autonomous driving (AD) applications. We first provide an overview of the tasks in autonomous driving systems, reinforcement learning algorithms, and applications of DRL to AD systems. We then discuss the challenges that must be addressed to enable further progress towards real-world deployment.