Mohammed Abdou

End-to-End 3D-PointCloud Semantic Segmentation for Autonomous Driving

Jun 26, 2019

Abstract: 3D semantic scene labeling is a fundamental task for Autonomous Driving. Recent work shows the capability of Deep Neural Networks in labeling 3D point sets provided by sensors such as LiDAR and Radar. An imbalanced distribution of classes in the dataset is one of the challenges facing the 3D semantic scene labeling task. It leads to misclassification of the non-dominant classes, which suffer from two main problems: a) rare appearance in the dataset, and b) few sensor points reflected from a single object of these classes. This paper proposes Weighted Self-Incremental Transfer Learning as a generalized methodology that addresses the imbalanced training dataset problem. It re-weights the components of the loss function computed from individual classes based on their frequencies in the training dataset, and applies Self-Incremental Transfer Learning by first running the Neural Network model on the non-dominant classes and then adding the dominant classes one by one. The experimental results introduce a new 3D point cloud semantic segmentation benchmark for the KITTI dataset.
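The frequency-based re-weighting of per-class loss terms mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not give the weighting formula, so the inverse-frequency scheme below is an assumption, and the function names and the toy labels are hypothetical. The Self-Incremental Transfer Learning stage is not covered here.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Assumed scheme: weight = total / (num_classes * class_count).

    Rare classes receive weights above 1, frequent classes below 1.
    """
    counts = Counter(labels)
    total = len(labels)
    num_classes = len(counts)
    return {cls: total / (num_classes * n) for cls, n in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Mean per-point negative log-likelihood, scaled by the class weight.

    probs: one dict per point, mapping class name -> predicted probability.
    """
    eps = 1e-12  # guards against log(0)
    losses = [
        -weights[y] * math.log(max(p[y], eps))
        for p, y in zip(probs, labels)
    ]
    return sum(losses) / len(losses)


# Toy point labels: 8 "road" points and 2 "pedestrian" points.
labels = ["road"] * 8 + ["pedestrian"] * 2
weights = inverse_frequency_weights(labels)

# A classifier that is equally unsure about every point.
probs = [{"road": 0.5, "pedestrian": 0.5} for _ in labels]
loss = weighted_cross_entropy(probs, labels, weights)
```

With these toy labels, an error on a "pedestrian" point contributes four times as much to the loss as the same error on a "road" point, which is the intended effect of re-weighting by class frequency.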

Meta learning Framework for Automated Driving

Jun 11, 2017

Abstract: The success of automated driving deployment depends heavily on the ability to develop an efficient and safe driving policy. The problem is well formulated under the framework of optimal control as a cost optimization problem. Model-based solutions using traditional planning are efficient, but require knowledge of the environment model. On the other hand, model-free solutions suffer from sample inefficiency and require too many interactions with the environment, which is infeasible in practice. Methods under the Reinforcement Learning framework usually require the notion of a reward function, which is not available in the real world. Imitation learning helps improve sample efficiency by introducing prior knowledge obtained from the demonstrated behavior, at the risk of exact behavior cloning without generalizing to unseen environments. In this paper we propose a Meta learning framework, based on dataset aggregation, to improve the generalization of imitation learning algorithms. Under the proposed framework, we propose MetaDAgger, a novel algorithm which tackles the generalization issues in traditional imitation learning. We use The Open Racing Car Simulator (TORCS) to test our algorithm. Results on unseen test tracks show significant improvement over traditional imitation learning algorithms, improving the learning time and sample efficiency at the same time. The results are also supported by visualization of the learnt features to demonstrate generalization of the captured details.
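The dataset aggregation idea that this abstract builds on can be sketched on a toy problem. This is plain DAgger-style aggregation, not the MetaDAgger algorithm, whose details the abstract does not give. The one-dimensional "lane offset" environment, the expert, and the nearest-neighbor learner are all hypothetical stand-ins.

```python
import random


def expert_action(state):
    """Hypothetical expert: steer toward the lane center at 0."""
    return -1 if state > 0 else 1


def nearest_neighbor_policy(dataset):
    """Learner: copy the action of the closest labeled state."""
    def policy(state):
        _, action = min(dataset, key=lambda pair: abs(pair[0] - state))
        return action
    return policy


def rollout(policy, start, steps=20):
    """Run a policy in the toy environment and return the visited states."""
    state, visited = start, []
    for _ in range(steps):
        visited.append(state)
        state += 0.5 * policy(state)
    return visited


def dagger(iterations=5, seed=0):
    rng = random.Random(seed)
    # Start from one expert demonstration.
    dataset = [(s, expert_action(s)) for s in rollout(expert_action, 3.0)]
    policy = nearest_neighbor_policy(dataset)
    for _ in range(iterations):
        # Visit states under the learner's own policy.
        states = rollout(policy, rng.uniform(-5, 5))
        # Ask the expert to label those states, then aggregate and refit.
        dataset += [(s, expert_action(s)) for s in states]
        policy = nearest_neighbor_policy(dataset)
    return policy, dataset


policy, dataset = dagger()
```

The point of the loop is that training data comes from states the learner itself reaches, so the expert's corrections cover situations a single demonstration would never visit.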

Deep Reinforcement Learning framework for Autonomous Driving

Apr 08, 2017

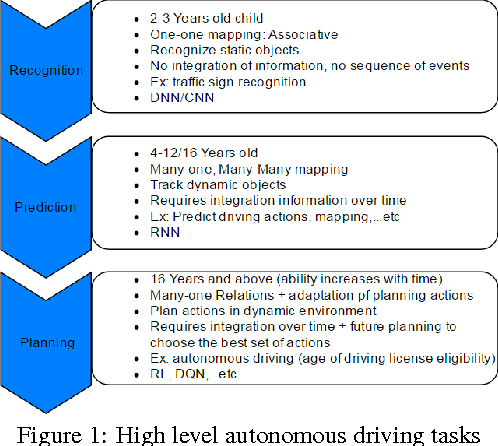

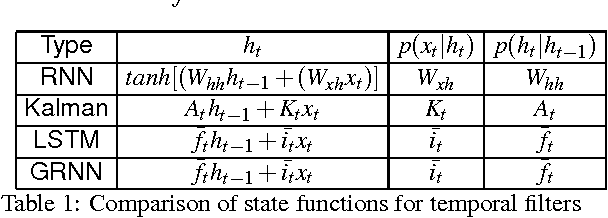

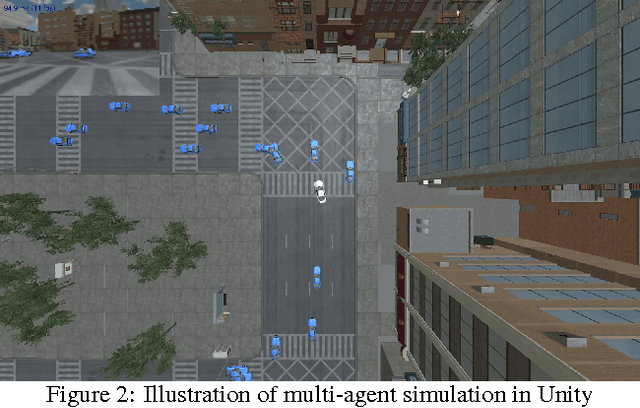

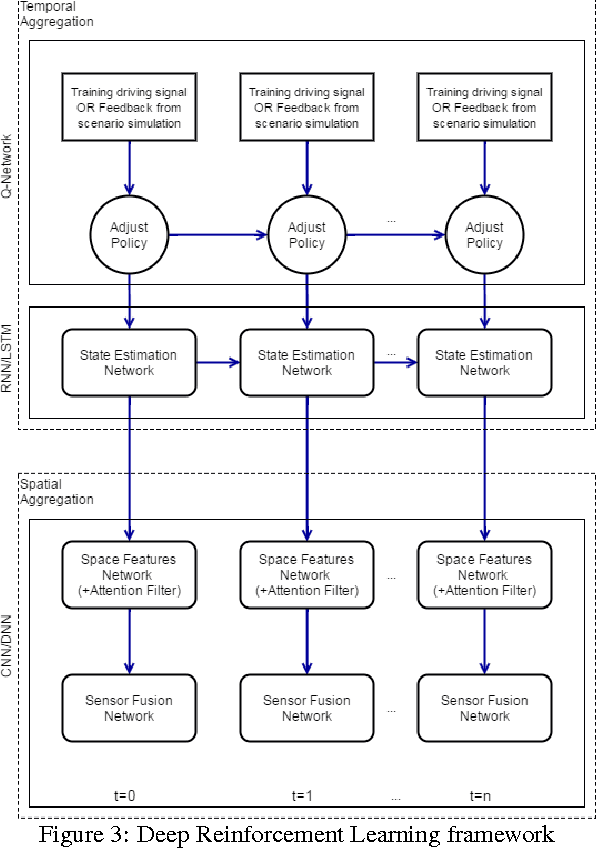

Abstract: Reinforcement learning is considered to be a strong AI paradigm which can be used to teach machines through interaction with the environment and learning from their mistakes. Despite its perceived utility, it has not yet been successfully applied in automotive applications. Motivated by the successful demonstrations of learning of Atari games and Go by Google DeepMind, we propose a framework for autonomous driving using deep reinforcement learning. This is of particular relevance as it is difficult to pose autonomous driving as a supervised learning problem due to strong interactions with the environment including other vehicles, pedestrians and roadworks. As it is a relatively new area of research for autonomous driving, we provide a short overview of deep reinforcement learning and then describe our proposed framework. It incorporates Recurrent Neural Networks for information integration, enabling the car to handle partially observable scenarios. It also integrates the recent work on attention models to focus on relevant information, thereby reducing the computational complexity for deployment on embedded hardware. The framework was tested in an open source 3D car racing simulator called TORCS. Our simulation results demonstrate learning of autonomous maneuvering in a scenario of complex road curvatures and simple interaction with other vehicles.

* Reprinted with permission of IS&T: The Society for Imaging Science and Technology, sole copyright owners of Electronic Imaging, Autonomous Vehicles and Machines 2017

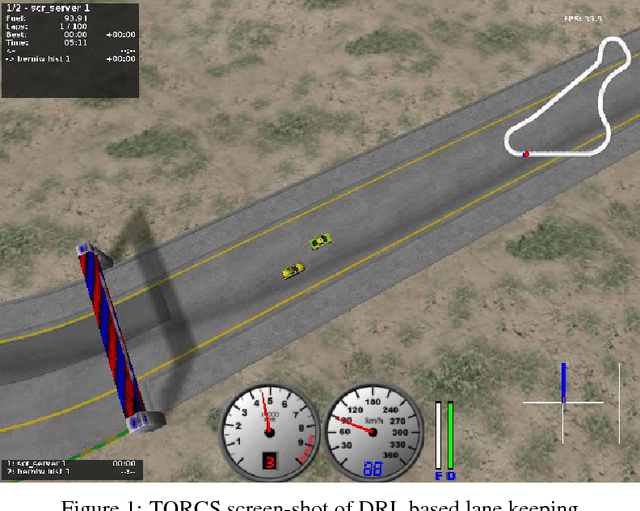

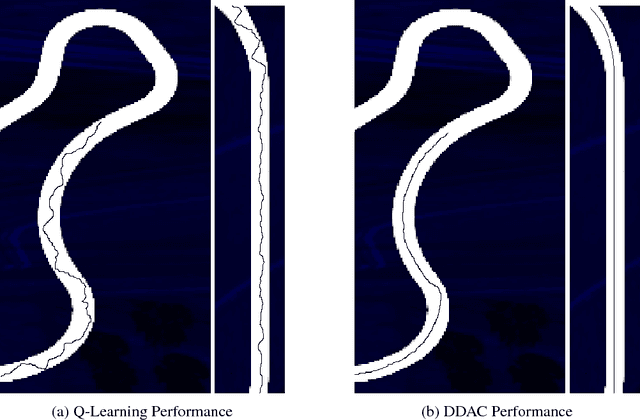

End-to-End Deep Reinforcement Learning for Lane Keeping Assist

Dec 13, 2016

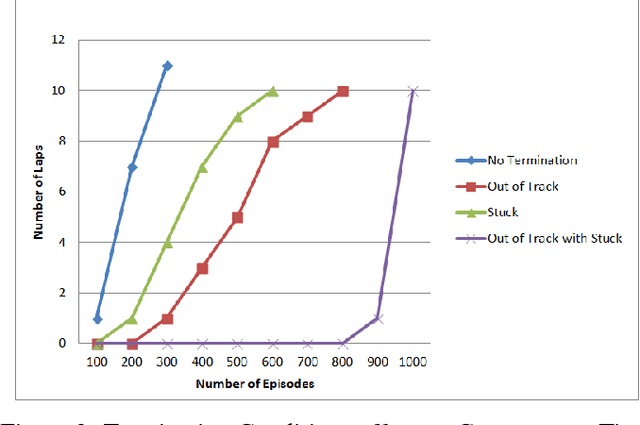

Abstract: Reinforcement learning is considered to be a strong AI paradigm which can be used to teach machines through interaction with the environment and learning from their mistakes, but it has not yet been successfully used for automotive applications. There has recently been a revival of interest in the topic, however, driven by the ability of deep learning algorithms to learn good representations of the environment. Motivated by Google DeepMind's successful demonstrations of learning for games from Breakout to Go, we propose different methods for autonomous driving using deep reinforcement learning. This is of particular interest as it is difficult to pose autonomous driving as a supervised learning problem, since it involves strong interaction with the environment including other vehicles, pedestrians and roadworks. As this is a relatively new area of research for autonomous driving, we formulate two main categories of algorithms: 1) the discrete actions category, and 2) the continuous actions category. For the discrete actions category, we use the Deep Q-Network (DQN) algorithm, while for the continuous actions category, we use the Deep Deterministic Actor Critic (DDAC) algorithm. In addition, we evaluate the performance of these two categories on an open source car racing simulator called TORCS (The Open Racing Car Simulator). Our simulation results demonstrate learning of autonomous maneuvering in a scenario of complex road curvatures and simple interaction with other vehicles. Finally, we explain how some restricted conditions, imposed on the car during the learning phase, affect the time the learning takes to converge.
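The difference between the two categories shows up in how the bootstrapped learning target is formed. The abstract gives no equations, so the following uses the standard textbook forms as an assumption. The symbols are not from the source: $r$ is the reward, $\gamma$ the discount factor, $Q$ the critic with parameters $\theta$, $\mu$ a deterministic actor with parameters $\phi$, and $\theta^{-}$, $\phi^{-}$ slowly updated target copies. The paper's DDAC variant may differ in detail.

```latex
\begin{align}
  % Discrete actions (DQN): maximize over a finite action set
  y^{\text{discrete}}   &= r + \gamma \max_{a'} Q(s', a'; \theta^{-}) \\
  % Continuous actions (deterministic actor-critic): the actor supplies the action
  y^{\text{continuous}} &= r + \gamma \, Q\bigl(s', \mu(s'; \phi^{-}); \theta^{-}\bigr) \\
  % In both cases the critic is fit by squared error against its target
  L(\theta) &= \mathbb{E}\Bigl[\bigl(y - Q(s, a; \theta)\bigr)^{2}\Bigr]
\end{align}
```

The maximum over $a'$ is only tractable when the action set is finite, which is why a continuous steering command is typically handled by letting an actor network output the action directly.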
