Hyunwoo Park

Michael Pokorny

System-Level Comparison of Multimodal and In-Band mmWave Sensing for Beam Prediction in 6G ISAC

Jan 03, 2026

Abstract:Integrated sensing and communication (ISAC) can reduce beam-training overhead in mmWave vehicle-to-infrastructure (V2I) links by enabling in-band sensing-based beam prediction, while exteroceptive sensors can further enhance prediction accuracy. This work develops a system-level framework that evaluates camera, LiDAR, radar, GPS, and in-band mmWave power, both individually and in multimodal fusion, using the DeepSense-6G Scenario-33 dataset. A latency-aware neural network composed of lightweight convolutional (CNN) and multilayer-perceptron (MLP) encoders predicts a 64-beam index. We assess performance using Top-k accuracy alongside spectral-efficiency (SE) gap, signal-to-noise-ratio (SNR) gap, rate loss, and end-to-end latency. Results show that the mmWave power vector is a strong standalone predictor, and fusing exteroceptive sensors with it preserves high performance: mmWave alone and mmWave+LiDAR/GPS/Radar achieve 98% Top-5 accuracy, while mmWave+camera achieves 94% Top-5 accuracy. The proposed framework establishes calibrated baselines for 6G ISAC-assisted beam prediction in V2I systems.
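The Top-k accuracy metric named in the abstract can be illustrated with a minimal sketch. It is not the authors' evaluation code: the function name `top_k_accuracy`, the toy scores, and the use of 4 beams instead of 64 are assumptions chosen for readability.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true beam index is among the k highest scores."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and equally long")
    hits = 0
    for row, label in zip(scores, labels):
        # Rank beam indices from highest to lowest predicted score.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy predictions over 4 beams (hypothetical values, not from the dataset).
scores = [
    [0.10, 0.70, 0.15, 0.05],
    [0.40, 0.30, 0.20, 0.10],
    [0.05, 0.06, 0.10, 0.79],
]
labels = [1, 1, 0]

for k in (1, 2, 4):
    print(f"Top-{k} accuracy: {top_k_accuracy(scores, labels, k):.3f}")
```

A prediction counts as correct at Top-k when the ground-truth beam appears anywhere in the k best-scored candidates, which is why the metric is non-decreasing in k.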

Spatio-Temporal Graphs Beyond Grids: Benchmark for Maritime Anomaly Detection

Dec 23, 2025

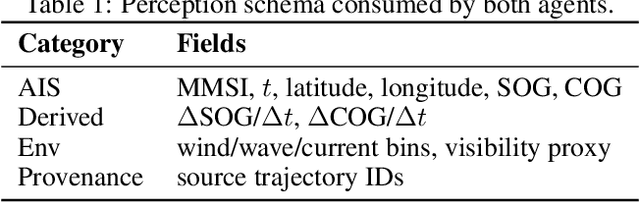

Abstract:Spatio-temporal graph neural networks (ST-GNNs) have achieved notable success in structured domains such as road traffic and public transportation, where spatial entities can be naturally represented as fixed nodes. In contrast, many real-world systems, including maritime traffic, lack such fixed anchors, making the construction of spatio-temporal graphs a fundamental challenge. Anomaly detection in these non-grid environments is particularly difficult due to the absence of canonical reference points, the sparsity and irregularity of trajectories, and the fact that anomalies may manifest at multiple granularities. In this work, we introduce a novel benchmark dataset for anomaly detection in the maritime domain, extending the Open Maritime Traffic Analysis Dataset (OMTAD) into a benchmark tailored for graph-based anomaly detection. Our dataset enables systematic evaluation across three different granularities: node-level, edge-level, and graph-level anomalies. We plan to employ two specialized LLM-based agents, Trajectory Synthesizer and Anomaly Injector, to construct richer interaction contexts and generate semantically meaningful anomalies. We expect this benchmark to promote reproducibility and to foster methodological advances in anomaly detection for non-grid spatio-temporal systems.

Mobility-Aware Localization in mmWave Channel: Adaptive Hybrid Filtering Approach

Oct 08, 2025

Abstract:Precise user localization and tracking enhance energy-efficient and ultra-reliable low-latency applications in next-generation wireless networks. In addition to the computational complexity and data-association challenges of Kalman-filter localization techniques, estimation errors tend to grow as the user's speed along the trajectory increases. By exploiting mmWave signals for joint sensing and communication, our approach dispenses with the additional sensors adopted in most techniques while retaining high-resolution spatial cues. We present a hybrid mobility-aware adaptive framework that selects the Extended Kalman filter at pedestrian speed and the Unscented Kalman filter at vehicular speed. The scheme mitigates the data-association problem and estimation errors through adaptive noise scaling, chi-square gating, and Rauch-Tung-Striebel smoothing. Evaluations using the Absolute Trajectory Error, Relative Pose Error, Normalized Estimation Error Squared, and Root Mean Square Error metrics demonstrate roughly 30-60% improvement in their respective regimes, indicating a clear advantage over existing approaches tailored to either indoor or static settings.
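Two of the ingredients named above, speed-based filter selection and chi-square gating, can be sketched as follows. This is a hedged illustration rather than the paper's implementation: the 3 m/s switching threshold, the 0.99 gate probability, and the function names `select_filter` and `gate_2d` are assumptions, and the gate is written for a 2-D measurement only.

```python
import math
from typing import Sequence, Tuple


def select_filter(speed_mps: float, threshold_mps: float = 3.0) -> str:
    """Pick the filter type by speed. The 3 m/s threshold is a hypothetical value."""
    return "EKF" if speed_mps < threshold_mps else "UKF"


def gate_2d(innovation: Sequence[float],
            S: Sequence[Sequence[float]],
            prob: float = 0.99) -> Tuple[bool, float]:
    """Chi-square gate for a 2-D innovation v with innovation covariance S.

    Accepts the measurement when the squared Mahalanobis distance
    d2 = v^T S^{-1} v does not exceed the chi-square quantile for 2 degrees
    of freedom, which has the closed form -2 * ln(1 - prob).
    """
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0.0:
        raise ValueError("innovation covariance must be positive definite")
    vx, vy = innovation
    # Uses the explicit 2x2 inverse: S^{-1} = (1 / det) * [[d, -b], [-c, a]].
    d2 = (vx * (d * vx - b * vy) + vy * (-c * vx + a * vy)) / det
    threshold = -2.0 * math.log(1.0 - prob)
    return d2 <= threshold, d2


identity = [[1.0, 0.0], [0.0, 1.0]]
print(select_filter(1.4), select_filter(15.0))
print(gate_2d([1.0, 1.0], identity))   # small residual, inside the gate
print(gate_2d([4.0, 0.0], identity))   # large residual, outside the gate
```

Measurements rejected by the gate would simply be skipped in the update step, which is one common way gating limits the effect of wrong data associations.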

DropGaussian: Structural Regularization for Sparse-view Gaussian Splatting

Apr 01, 2025

Abstract:Recently, 3D Gaussian splatting (3DGS) has gained considerable attention in the field of novel view synthesis due to its fast performance and excellent image quality. However, 3DGS in sparse-view settings (e.g., three-view inputs) often suffers from overfitting to the training views, which significantly degrades the visual quality of novel view images. Many existing approaches have tackled this issue by using strong priors, such as 2D generative contextual information and external depth signals. In contrast, this paper introduces a prior-free method, called DropGaussian, that requires only simple changes to 3D Gaussian splatting. Specifically, we randomly remove Gaussians during the training process in a manner similar to dropout, which allows the non-excluded Gaussians to have larger gradients while improving their visibility. This makes the remaining Gaussians contribute more to the optimization process for rendering with sparse input views. This simple operation effectively alleviates the overfitting problem and enhances the quality of novel view synthesis. By simply applying DropGaussian to the original 3DGS framework, we achieve performance competitive with existing prior-based 3DGS methods in sparse-view settings of benchmark datasets without any additional complexity. The code and model are publicly available at: https://github.com/DCVL-3D/DropGaussian release.
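The dropout-style removal described above can be sketched on a plain list of per-Gaussian opacities. This is an illustrative assumption, not the released code: the function `drop_gaussians`, the drop rate, and the optional `1 / (1 - drop_rate)` rescaling (borrowed from standard inverted dropout) are hypothetical choices, and a real pipeline would apply the mask inside the renderer at each training iteration.

```python
import random
from typing import List, Sequence


def drop_gaussians(opacities: Sequence[float], drop_rate: float,
                   rng: random.Random, rescale: bool = True) -> List[float]:
    """Zero out a random subset of Gaussians for one training iteration.

    Dropped Gaussians get opacity 0 so they do not contribute to rendering.
    With rescale=True, survivors are scaled by 1 / (1 - drop_rate), as in
    inverted dropout (an assumption made for this sketch).
    """
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    scale = 1.0 / (1.0 - drop_rate) if rescale else 1.0
    masked = []
    for opacity in opacities:
        if rng.random() < drop_rate:
            masked.append(0.0)              # Gaussian removed this iteration
        else:
            masked.append(opacity * scale)  # Gaussian kept
    return masked


rng = random.Random(0)
opacities = [0.2, 0.4, 0.1, 0.3, 0.25, 0.35]
print(drop_gaussians(opacities, drop_rate=0.5, rng=rng))
```

At test time no Gaussians would be dropped, mirroring how dropout is disabled during inference.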

SPECTra: Scalable Multi-Agent Reinforcement Learning with Permutation-Free Networks

Mar 14, 2025

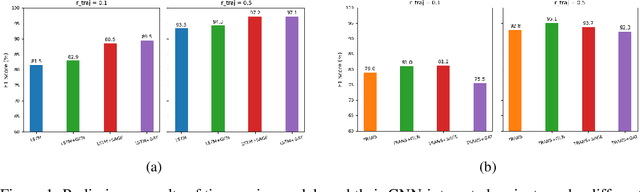

Abstract:In cooperative multi-agent reinforcement learning (MARL), the permutation problem, in which the state space grows exponentially with the number of agents, reduces sample efficiency. Additionally, many existing architectures struggle with scalability because they rely on a fixed structure tied to a specific number of agents, which limits their applicability to environments with a variable number of entities. While approaches such as graph neural networks (GNNs) and self-attention mechanisms have made progress in addressing these challenges, they have significant limitations, as dense GNNs and self-attention mechanisms incur high computational costs. To overcome these limitations, we propose a novel agent network and a non-linear mixing network that ensure permutation-equivariance and scalability, allowing them to generalize to environments with various numbers of agents. Our agent network significantly reduces computational complexity, and our scalable hypernetwork enables efficient weight generation for non-linear mixing. Additionally, we introduce curriculum learning to improve training efficiency. Experiments on SMACv2 and Google Research Football (GRF) demonstrate that our approach achieves superior learning performance compared to existing methods. By addressing both permutation-invariance and scalability in MARL, our work provides a more efficient and adaptable framework for cooperative MARL. Our code is available at https://github.com/funny-rl/SPECTra.

Adaptive Sparsified Graph Learning Framework for Vessel Behavior Anomalies

Feb 20, 2025

Abstract:Graph neural networks have emerged as a powerful tool for learning spatiotemporal interactions. However, conventional approaches often rely on predefined graphs, which may obscure the precise relationships being modeled. Additionally, existing methods typically define nodes based on fixed spatial locations, a strategy that is ill-suited to dynamic settings such as maritime traffic. Our method introduces a graph representation in which timestamps are modeled as distinct nodes, allowing temporal dependencies to be explicitly captured through graph edges. This setup is extended to construct a multi-ship graph that effectively captures spatial interactions while preserving graph sparsity. The graph is processed using Graph Convolutional Network layers to capture spatiotemporal patterns, with a forecasting layer for feature prediction and a Variational Graph Autoencoder for reconstruction, enabling robust anomaly detection.
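One way to picture the timestamp-as-node representation is the sketch below. It is an assumption-laden illustration, not the paper's construction: here each (ship, timestamp) observation becomes a node, temporal edges link consecutive observations of the same ship, and spatial edges link different ships observed at the same timestamp within a hypothetical `radius`, which keeps the graph sparse.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Observation = Tuple[int, float, float]  # (timestamp, x, y)


def build_graph(tracks: Dict[str, List[Observation]], radius: float):
    """Return (nodes, temporal_edges, spatial_edges) for time-sorted tracks."""
    nodes: List[Tuple[str, int, float, float]] = []
    index: Dict[Tuple[str, int], int] = {}
    for ship, observations in tracks.items():
        for t, x, y in observations:
            index[(ship, t)] = len(nodes)
            nodes.append((ship, t, x, y))

    # Temporal edges: consecutive timestamps of the same ship.
    temporal = []
    for ship, observations in tracks.items():
        for (t0, _, _), (t1, _, _) in zip(observations, observations[1:]):
            temporal.append((index[(ship, t0)], index[(ship, t1)]))

    # Spatial edges: different ships at the same timestamp and close enough.
    by_time: Dict[int, List[int]] = {}
    for node_id, (_, t, _, _) in enumerate(nodes):
        by_time.setdefault(t, []).append(node_id)
    spatial = []
    for node_ids in by_time.values():
        for i, j in combinations(node_ids, 2):
            _, _, xi, yi = nodes[i]
            _, _, xj, yj = nodes[j]
            if math.hypot(xi - xj, yi - yj) <= radius:
                spatial.append((i, j))
    return nodes, temporal, spatial


# Two hypothetical ship tracks with made-up coordinates.
tracks = {
    "A": [(0, 0.0, 0.0), (1, 1.0, 0.0), (2, 2.0, 0.0)],
    "B": [(0, 0.0, 1.0), (1, 5.0, 5.0), (2, 2.0, 0.5)],
}
nodes, temporal_edges, spatial_edges = build_graph(tracks, radius=1.5)
print(len(nodes), temporal_edges, spatial_edges)
```

The resulting edge lists could then be fed to graph convolution layers; only ship pairs that are actually near each other at a given time receive a spatial edge.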

Humanity's Last Exam

Jan 24, 2025

Abstract:Benchmarks are important tools for tracking the rapid advancements in large language model (LLM) capabilities. However, benchmarks are not keeping pace in difficulty: LLMs now achieve over 90% accuracy on popular benchmarks like MMLU, limiting informed measurement of state-of-the-art LLM capabilities. In response, we introduce Humanity's Last Exam (HLE), a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. HLE consists of 3,000 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading. Each question has a known solution that is unambiguous and easily verifiable, but cannot be quickly answered via internet retrieval. State-of-the-art LLMs demonstrate low accuracy and calibration on HLE, highlighting a significant gap between current LLM capabilities and the expert human frontier on closed-ended academic questions. To ground research and policymaking in a clear understanding of model capabilities, we publicly release HLE at https://lastexam.ai.

Quantum-MUSIC: Multiple Signal Classification for Quantum Wireless Sensing

Dec 31, 2024

Abstract:This paper proposes Quantum-MUSIC, the first multiple signal classification (MUSIC) algorithm for multi-user quantum wireless sensing. Since an atomic receiver for quantum wireless sensing can only measure the magnitude of a received signal, the sensing performance of traditional antenna-based signal processing algorithms inevitably degrades. To overcome this limitation, the proposed algorithm recovers the channel information and incorporates the traditional MUSIC algorithm, enabling multi-user sensing with magnitude-only measurements. Simulation results show that the proposed algorithm outperforms the existing MUSIC algorithm, validating the superior potential of quantum wireless sensing.

IMPaCT GNN: Imposing invariance with Message Passing in Chronological split Temporal Graphs

Nov 17, 2024

Abstract:This paper addresses domain adaptation challenges in graph data resulting from chronological splits. In a transductive graph learning setting, where each node is associated with a timestamp, we focus on the task of Semi-Supervised Node Classification (SSNC), aiming to classify recent nodes using labels of past nodes. Temporal dependencies in node connections create domain shifts, causing significant performance degradation when models trained on historical data are applied to recent data. Given the practical relevance of this scenario, addressing domain adaptation in chronological split data is crucial, yet underexplored. We propose Imposing invariance with Message Passing in Chronological split Temporal Graphs (IMPaCT), a method that imposes invariant properties based on realistic assumptions derived from temporal graph structures. Unlike traditional domain adaptation approaches, which rely on unverifiable assumptions, IMPaCT explicitly accounts for the characteristics of chronological splits. IMPaCT is further supported by rigorous mathematical analysis, including a derivation of an upper bound on the generalization error. Experimentally, IMPaCT achieves a 3.8% performance improvement over the current SOTA method on the ogbn-mag graph dataset. Additionally, we introduce the Temporal Stochastic Block Model (TSBM), which replicates temporal graphs under varying conditions, demonstrating the applicability of our methods to general spatial GNNs.

Trajectory Planning for Autonomous Vehicle Using Iterative Reward Prediction in Reinforcement Learning

Apr 18, 2024

Abstract:Traditional trajectory planning methods for autonomous vehicles have several limitations. Because they rely on heuristics and simple explicit rules, the resulting trajectories lack generality and cannot express complex motion. One approach to overcoming these limitations is trajectory planning using reinforcement learning. However, reinforcement learning suffers from unstable learning, and prior work on trajectory planning using reinforcement learning did not consider uncertainties. In this paper, we propose a trajectory planning method for autonomous vehicles using reinforcement learning. The proposed method includes an iterative reward prediction method that stabilizes the learning process and an uncertainty propagation method that makes the reinforcement learning agent aware of uncertainties. The proposed method is evaluated in the CARLA simulator. Compared to the baseline method, it reduces the collision rate by 60.17% and increases the average reward to 30.82 times that of the baseline.
