Daehyeok Kwon

Autonomous Algorithm for Training Autonomous Vehicles with Minimal Human Intervention

May 22, 2024

Abstract: Reinforcement learning (RL) provides a compelling framework for enabling autonomous vehicles to continually learn and improve diverse driving behaviors on their own. However, training real-world autonomous vehicles with current RL algorithms presents several challenges. One critical challenge, often overlooked by these algorithms, is the need to reset the driving environment between episodes. While resetting an environment after each episode is trivial in simulation, it demands significant human intervention in the real world. In this paper, we introduce a novel autonomous algorithm that allows off-the-shelf RL algorithms to train an autonomous vehicle with minimal human intervention. Our algorithm takes into account the learning progress of the autonomous vehicle to determine when to abort an episode, before the vehicle enters an unsafe state, and where to reset the vehicle for the subsequent episode in order to gather informative transitions. The learning progress is estimated from the novelty of both current and future states. We also take advantage of rule-based autonomous driving algorithms to safely reset the autonomous vehicle to an initial state. We evaluate our algorithm against baselines on diverse urban driving tasks. The experimental results show that our algorithm is task-agnostic and achieves better driving performance with fewer manual resets than the baselines.
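The abstract does not specify how novelty is measured or how the abort and reset decisions are made. As a rough illustration only, the sketch below assumes a count-based novelty measure over a discretized state grid (a swapped-in technique, not necessarily the authors'), averages it over the current state and a list of predicted future states as a proxy for learning progress, and applies two hypothetical rules: abort when that proxy falls below a threshold, and reset at the most novel candidate state. The names `CountNovelty`, `learning_progress`, `should_abort`, and `choose_reset`, and the `threshold` and `cell_size` parameters, are illustrative assumptions; safety checks and the rule-based reset controller are omitted.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

State = Tuple[float, ...]


class CountNovelty:
    """Hypothetical count-based novelty over a discretized state grid."""

    def __init__(self, cell_size: float = 1.0) -> None:
        self.cell_size = cell_size
        self.counts: Dict[Tuple[int, ...], int] = defaultdict(int)

    def _cell(self, state: State) -> Tuple[int, ...]:
        # Map a continuous state to an integer grid cell.
        return tuple(math.floor(x / self.cell_size) for x in state)

    def visit(self, state: State) -> None:
        # Record one visit to the cell containing `state`.
        self.counts[self._cell(state)] += 1

    def novelty(self, state: State) -> float:
        # 1 / sqrt(N + 1): equals 1.0 for unseen cells and shrinks with visits.
        return 1.0 / math.sqrt(self.counts.get(self._cell(state), 0) + 1)


def learning_progress(est: CountNovelty, current: State,
                      predicted: Sequence[State]) -> float:
    # Assumed proxy: mean novelty of the current and predicted future states.
    states: List[State] = [current, *predicted]
    return sum(est.novelty(s) for s in states) / len(states)


def should_abort(est: CountNovelty, current: State,
                 predicted: Sequence[State], threshold: float = 0.5) -> bool:
    # Hypothetical rule: stop the episode once little remains to be learned here.
    return learning_progress(est, current, predicted) < threshold


def choose_reset(est: CountNovelty, candidates: Sequence[State]) -> State:
    # Hypothetical rule: reset to the least-visited (most novel) candidate.
    return max(candidates, key=est.novelty)


if __name__ == "__main__":
    est = CountNovelty(cell_size=1.0)
    for _ in range(15):
        est.visit((0.2, 0.3))
    print(should_abort(est, (0.2, 0.3), [(0.5, 0.5)]))  # True
    print(choose_reset(est, [(0.1, 0.1), (5.0, 5.0)]))   # (5.0, 5.0)
```

In this toy setup, after 15 visits to one grid cell, both the current and predicted states score a novelty of 0.25, so the episode is aborted, and the unvisited candidate is chosen as the next reset point. A learned density or prediction-error model could replace the counts without changing the two decision rules.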

Safe and Efficient Trajectory Optimization for Autonomous Vehicles using B-spline with Incremental Path Flattening

Nov 13, 2023

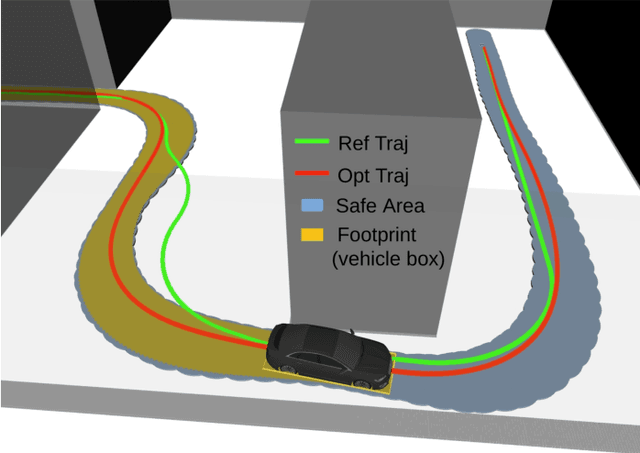

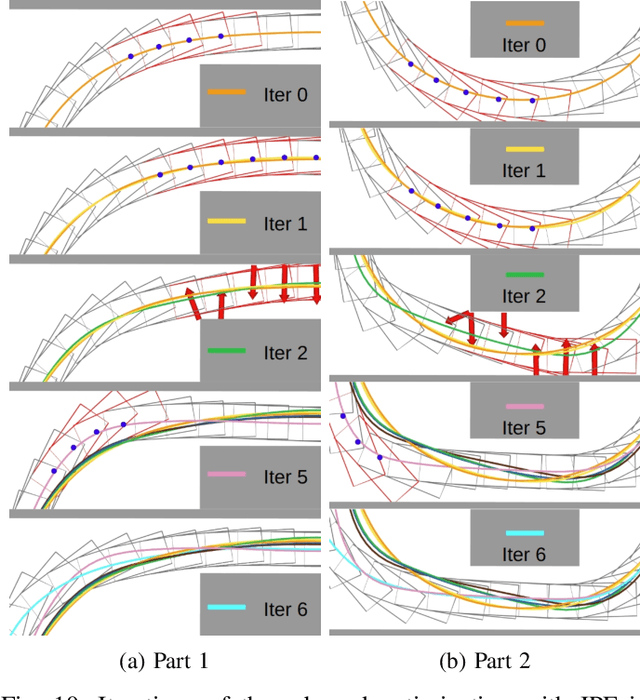

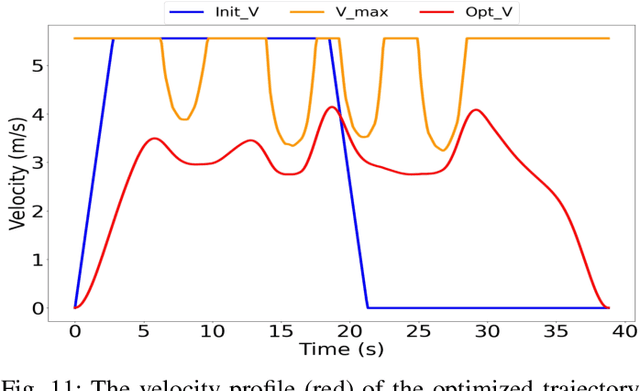

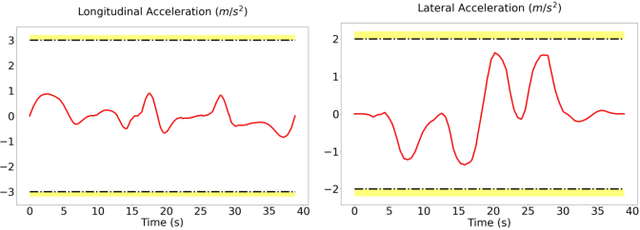

Abstract: B-spline-based trajectory optimization has been widely used in robot navigation, especially for quadrotor-like vehicles, which can readily exploit the advantages of a B-spline curve (e.g., computational efficiency) and its convex hull property for trajectory optimization. Nevertheless, using B-spline-based optimization to generate collision-free trajectories for autonomous vehicles remains challenging because their complex kinematics make it difficult to use the convex hull property. In this paper, we propose a novel trajectory optimization algorithm that brings the advantages of a B-spline curve to autonomous vehicles by incorporating vehicle kinematics through two methods. First, incremental path flattening is a new method that iteratively increases the path curvature weight around vehicle collision points, finding a collision-free path by reducing the swept volume. Second, a new swept volume estimation method reduces over-approximation of the swept volume, allowing the vehicle to pass through narrow corridors without compromising safety. Furthermore, a clamped B-spline curvature constraint, which simplifies the B-spline curvature constraint, is added alongside other feasibility constraints (e.g., longitudinal and lateral velocity and acceleration) to enforce the vehicle's kinodynamic constraints. Our experimental results demonstrate that our method outperforms state-of-the-art baselines in various simulated environments, and we verify its tracking performance with an autonomous vehicle in a real-world scenario.
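The abstract describes incremental path flattening only at a high level. The sketch below is a hypothetical illustration, not the authors' formulation: it evaluates a uniform cubic B-spline (whose nonnegative basis weights sum to one, which is what gives the convex hull property mentioned above), computes signed curvature from the curve's derivatives, and models one flattening step as multiplying a per-control-point curvature-penalty weight near detected collision points. The helper names, the second-difference penalty, and the `radius` and `growth` parameters are assumptions; swept-volume estimation, the clamped curvature constraint, and the optimizer itself are omitted.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def basis(t: float) -> Tuple[float, float, float, float]:
    # Uniform cubic B-spline weights on one segment, t in [0, 1].
    # They are nonnegative and sum to 1, which gives the convex hull property.
    return ((1 - t) ** 3 / 6,
            (3 * t**3 - 6 * t**2 + 4) / 6,
            (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6,
            t**3 / 6)


def basis_d1(t: float) -> Tuple[float, float, float, float]:
    # First derivative of the weights with respect to t.
    return (-(1 - t) ** 2 / 2, (3 * t**2 - 4 * t) / 2,
            (-3 * t**2 + 2 * t + 1) / 2, t**2 / 2)


def basis_d2(t: float) -> Tuple[float, float, float, float]:
    # Second derivative of the weights with respect to t.
    return (1 - t, 3 * t - 2, 1 - 3 * t, t)


def _combine(w: Sequence[float], pts: Sequence[Point]) -> Point:
    # Weighted sum of 2-D points.
    return (sum(a * p[0] for a, p in zip(w, pts)),
            sum(a * p[1] for a, p in zip(w, pts)))


def evaluate(ctrl: Sequence[Point], seg: int, t: float) -> Point:
    # Point on segment `seg`, controlled by ctrl[seg : seg + 4].
    return _combine(basis(t), ctrl[seg:seg + 4])


def curvature(ctrl: Sequence[Point], seg: int, t: float) -> float:
    # Signed curvature (x'y'' - y'x'') / (x'^2 + y'^2)^(3/2); assumes nonzero speed.
    dx, dy = _combine(basis_d1(t), ctrl[seg:seg + 4])
    ddx, ddy = _combine(basis_d2(t), ctrl[seg:seg + 4])
    return (dx * ddy - dy * ddx) / math.hypot(dx, dy) ** 3


def curvature_cost(ctrl: Sequence[Point], weights: Sequence[float]) -> float:
    # Weighted second-difference penalty on control points (a common curvature proxy).
    cost = 0.0
    for i in range(1, len(ctrl) - 1):
        ax = ctrl[i - 1][0] - 2 * ctrl[i][0] + ctrl[i + 1][0]
        ay = ctrl[i - 1][1] - 2 * ctrl[i][1] + ctrl[i + 1][1]
        cost += weights[i] * (ax * ax + ay * ay)
    return cost


def flatten_weights(weights: Sequence[float], ctrl: Sequence[Point],
                    collisions: Sequence[Point], radius: float = 1.0,
                    growth: float = 2.0) -> List[float]:
    # Hypothetical flattening step: raise the curvature weight of control
    # points within `radius` of a detected collision point. An optimizer
    # (not shown) would re-solve with the new weights, repeating until
    # the path is collision-free.
    return [w * growth if any(math.dist(c, p) <= radius for p in collisions) else w
            for w, c in zip(weights, ctrl)]


if __name__ == "__main__":
    ctrl = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0), (3.0, 0.0), (4.0, 0.0)]
    w = flatten_weights([1.0] * 5, ctrl, collisions=[(2.0, 1.5)])
    print(w)  # [1.0, 1.0, 2.0, 1.0, 1.0]
    print(curvature_cost(ctrl, [1.0] * 5), curvature_cost(ctrl, w))  # 6.0 10.0
```

Raising the weight on the control point nearest the collision makes bending there costlier, so a subsequent optimization would tend to straighten that part of the path, which is the intuition the abstract gives for shrinking the swept volume.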
