Hussein Abbass

University of New South Wales Canberra

Graph Attention Neural Network for Botnet Detection: Evaluating Autoencoder, VAE and PCA-Based Dimension Reduction

May 23, 2025

Abstract: With the rise of IoT-based botnet attacks, researchers have explored various learning models for detection, including traditional machine learning, deep learning, and hybrid approaches. A key advancement involves deploying attention mechanisms to capture long-term dependencies among features, significantly improving detection accuracy. However, most models treat attack instances independently, overlooking inter-instance relationships. Graph Neural Networks (GNNs) address this limitation by learning an embedding space via iterative message passing, where similar instances are placed closer together based on node features and relationships, enhancing classification performance. To further improve detection, attention mechanisms have been embedded within GNNs, leveraging both long-range dependencies and inter-instance connections. However, transforming high-dimensional IoT attack datasets into graph-structured datasets poses challenges, such as large graph structures leading to computational overhead. To mitigate this, this paper proposes a framework that first reduces the dimensionality of the NetFlow-based IoT attack dataset before transforming it into a graph dataset. We evaluate three dimension reduction techniques, namely Variational Autoencoder (VAE-encoder), classical autoencoder (AE-encoder), and Principal Component Analysis (PCA), and compare their effects on a Graph Attention neural network (GAT) model for botnet attack detection.
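As a rough illustration of the "reduce first, then build a graph" idea, the sketch below links each already-reduced instance to its nearest neighbours. The abstract does not state how the graph is constructed, so the k-nearest-neighbour rule, the function name `knn_edges`, and the toy 2-D points are all assumptions made for this example, not the paper's method.

```python
import math

def knn_edges(features, k):
    """Return directed edges (i, j) linking each row to its k nearest rows.

    Assumption: Euclidean distance in the reduced feature space decides
    which instances become neighbours in the graph.
    """
    edges = []
    for i, row in enumerate(features):
        # Distance from instance i to every other instance.
        dists = [(math.dist(row, other), j)
                 for j, other in enumerate(features) if j != i]
        dists.sort()
        edges.extend((i, j) for _, j in dists[:k])
    return edges

# Toy stand-in for reduced flow records: two tight clusters in 2-D.
reduced = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
           (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
edges = knn_edges(reduced, k=2)
```

With fewer dimensions per node, the pairwise distance step is cheaper, which is one way a reduction stage could ease the overhead the abstract mentions.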

Are GNNs Worth the Effort for IoT Botnet Detection? A Comparative Study of VAE-GNN vs. ViT-MLP and VAE-MLP Approaches

May 23, 2025

Abstract: Due to the exponential rise in IoT-based botnet attacks, researchers have explored various advanced techniques for both dimensionality reduction and attack detection to enhance IoT security. Among these, Variational Autoencoders (VAE), Vision Transformers (ViT), and Graph Neural Networks (GNN), including Graph Convolutional Networks (GCN) and Graph Attention Networks (GAT), have garnered significant research attention in the domain of attack detection. This study evaluates the effectiveness of four state-of-the-art deep learning architectures for IoT botnet detection: a VAE encoder with a Multi-Layer Perceptron (MLP), a VAE encoder with a GCN, a VAE encoder with a GAT, and a ViT encoder with an MLP. The evaluation is conducted on a widely studied IoT benchmark dataset, the N-BaIoT dataset, for both binary and multiclass tasks. For the binary classification task, all models achieved over 99.93% in accuracy, recall, precision, and F1-score, with no notable differences in performance. In contrast, for the multiclass classification task, GNN-based models showed significantly lower performance compared to VAE-MLP and ViT-MLP, with accuracies of 86.42%, 89.46%, 99.72%, and 98.38% for VAE-GCN, VAE-GAT, VAE-MLP, and ViT-MLP, respectively.

IoT Botnet Detection: Application of Vision Transformer to Classification of Network Flow Traffic

Apr 26, 2025

Abstract: Despite the demonstrated effectiveness of transformer models in NLP and in image and video classification, the available tools for extracting features from captured IoT network flow packets fail to capture sequential patterns, and the extracted features lack spatial patterns, which limits the application of transformer models. This work introduces a novel preprocessing method to adapt transformer models, the vision transformer (ViT) in particular, for IoT botnet attack detection using network flow packets. The approach involves feature extraction from .pcap files and transforming each instance into a 1-channel 2D image shape, enabling ViT-based classification. Also, the ViT model was enhanced to allow the use of any classifier besides the Multilayer Perceptron (MLP) that was deployed in the initial ViT paper. Models including the conventional feed forward Deep Neural Network (DNN), LSTM and Bidirectional-LSTM (BLSTM) demonstrated competitive performance in terms of precision, recall, and F1-score for multiclass-based attack detection when evaluated on two IoT attack datasets.
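One plausible way to turn a flat feature vector into a 1-channel 2D image shape is to pad it to the next square and fold it row by row. The abstract does not describe the exact layout, so the zero padding, the square shape, and the helper name `to_image` are assumptions for illustration only.

```python
import math

def to_image(features, pad_value=0.0):
    """Fold a flat feature vector into a square 2D grid (one channel).

    Assumption: the vector is padded with `pad_value` up to the smallest
    square that can hold it, then filled in row-major order.
    """
    n = len(features)
    side = math.isqrt(n)
    if side * side < n:
        side += 1
    padded = list(features) + [pad_value] * (side * side - n)
    return [padded[r * side:(r + 1) * side] for r in range(side)]

# Ten hypothetical flow features become a 4x4 image with six padded cells.
image = to_image([float(v) for v in range(1, 11)])
```

The resulting grid can then be split into patches in the usual ViT fashion.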

Enhancing IoT-Botnet Detection using Variational Auto-encoder and Cost-Sensitive Learning: A Deep Learning Approach for Imbalanced Datasets

Apr 26, 2025

Abstract: The Internet of Things (IoT) technology has rapidly gained popularity, with applications widespread across a variety of industries. However, IoT devices have recently served as a porous layer for many malicious attacks on both personal and enterprise information systems, the most famous being botnet-related attacks. The work in this study leveraged Variational Auto-encoder (VAE) and cost-sensitive learning to develop lightweight, yet effective, models for IoT-botnet detection. The aim is to enhance the detection of minority class attack traffic instances, which are often missed by machine learning models. The proposed approach is evaluated on a multi-class problem setting for the detection of traffic categories on highly imbalanced datasets. The performance of two deep learning models, the standard feed forward deep neural network (DNN) and Bidirectional-LSTM (BLSTM), was evaluated, and both recorded commendable results in terms of accuracy, precision, recall and F1-score for all traffic classes.
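Cost-sensitive learning is often realised by weighting each class's loss inversely to its frequency. The sketch below uses the common "balanced" heuristic, weight = N / (K * n_c); the abstract does not say which weighting scheme was used, and the class names and counts here are made up for the example.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: N / (K * n_c) for each class c."""
    counts = Counter(labels)
    total, num_classes = len(labels), len(counts)
    return {c: total / (num_classes * n) for c, n in counts.items()}

def weighted_cross_entropy(probs, label, weights):
    """Cross-entropy for one sample, scaled by the true class's weight."""
    return -weights[label] * math.log(probs[label])

# Hypothetical, highly imbalanced traffic labels.
labels = ["benign"] * 90 + ["scan"] * 9 + ["ddos"] * 1
weights = balanced_class_weights(labels)

# The same predicted probability costs far more on the rare class.
loss_rare = weighted_cross_entropy({"ddos": 0.5}, "ddos", weights)
loss_common = weighted_cross_entropy({"benign": 0.5}, "benign", weights)
```

Scaling the loss this way pushes the model to pay more attention to minority-class mistakes without changing the network itself.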

Planning-assisted autonomous swarm shepherding with collision avoidance

Jan 25, 2023

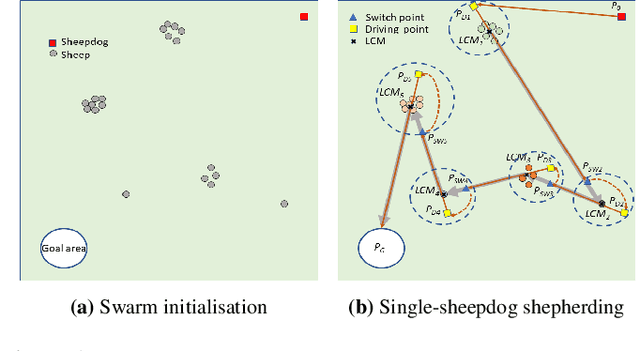

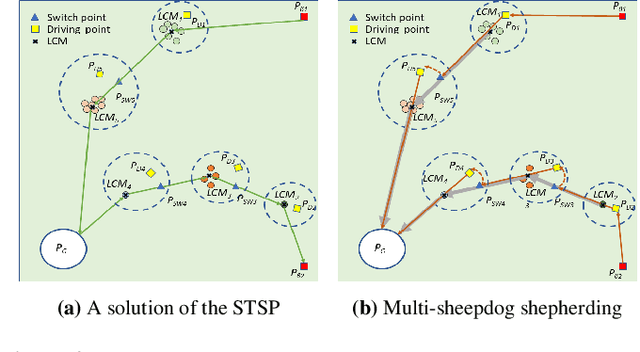

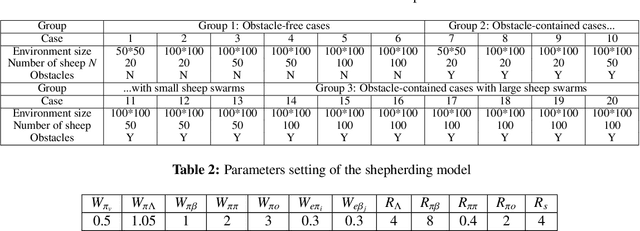

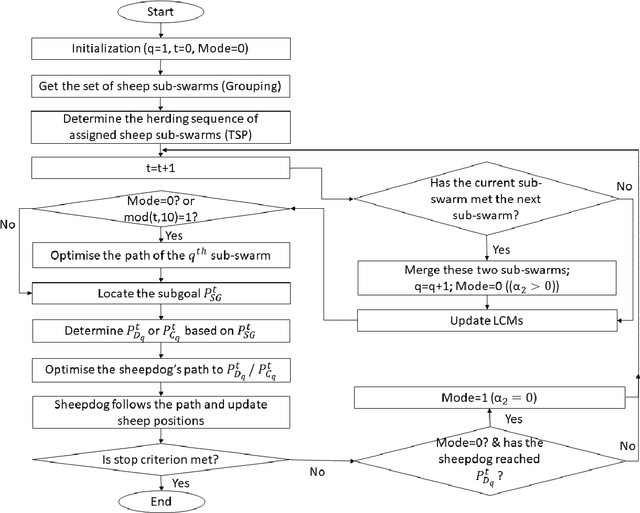

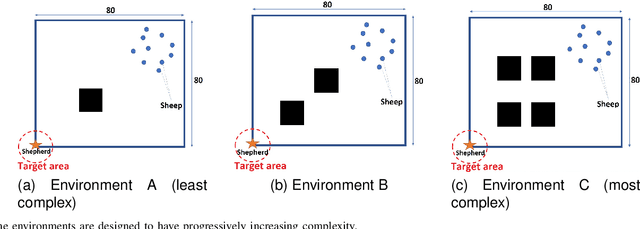

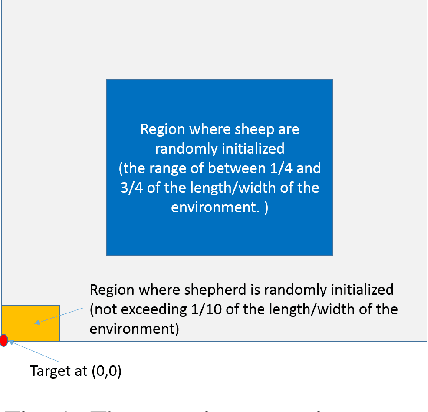

Abstract: Robotic shepherding is a bio-inspired approach to autonomously guiding a swarm of agents towards a desired location and has earned increasing research interest recently. However, shepherding a highly dispersed swarm in an obstructive environment remains challenging for the existing methods. To improve the shepherding efficacy in complex environments with obstacles and dispersed sheep, this paper proposes a planning-assisted autonomous shepherding framework with collision avoidance. The proposed approach transforms the swarm shepherding problem into a single Travelling Salesman Problem (TSP), with the sheepdog moving mode classified into non-interaction and interaction modes. Additionally, an adaptive switching approach is integrated into the framework to guide real-time path planning for avoiding collisions with obstacles and, in some cases, with the sheep swarm. Then the overarching hierarchical mission planning system is presented, which consists of a grouping approach to obtain sheep sub-swarms, a general TSP solver for determining the optimal push sequence of sub-swarms, and an online path planner for calculating optimal paths for both sheepdogs and sheep. The experiments on a range of environments, both with and without obstacles, quantitatively demonstrate the effectiveness of the proposed shepherding framework and planning approaches.
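To make the "push sequence as a TSP" idea concrete, the sketch below orders a handful of sub-swarm centroids so that the sheepdog's total travel distance is minimal. It is a brute-force search over an open path starting at the dog, which is only feasible for a few sub-swarms; the cost function, the function names, and the coordinates are assumptions for illustration and do not reproduce the paper's solver.

```python
import math
from itertools import permutations

def path_length(start, points, order):
    """Length of the open path: start -> points[order[0]] -> ..."""
    length, current = 0.0, start
    for idx in order:
        length += math.dist(current, points[idx])
        current = points[idx]
    return length

def best_push_sequence(start, centroids):
    """Exhaustively pick the visiting order with the shortest path."""
    orders = permutations(range(len(centroids)))
    return min(orders, key=lambda o: path_length(start, centroids, o))

# Hypothetical sheepdog position and sub-swarm centroids.
dog = (0.0, 0.0)
centroids = [(5.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
order = best_push_sequence(dog, centroids)
length = path_length(dog, centroids, order)
```

For larger numbers of sub-swarms, a heuristic or dedicated TSP solver would replace the exhaustive search.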

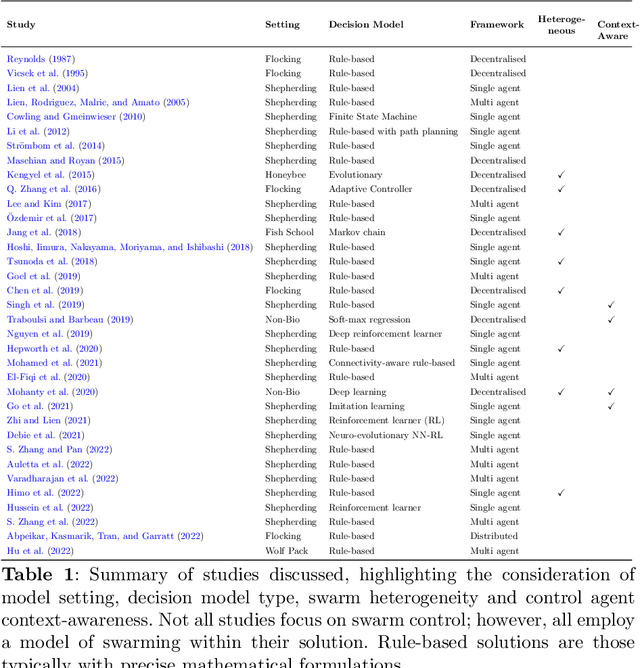

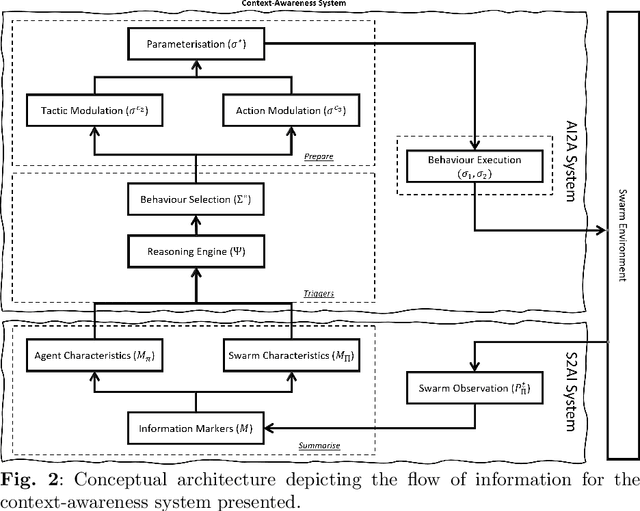

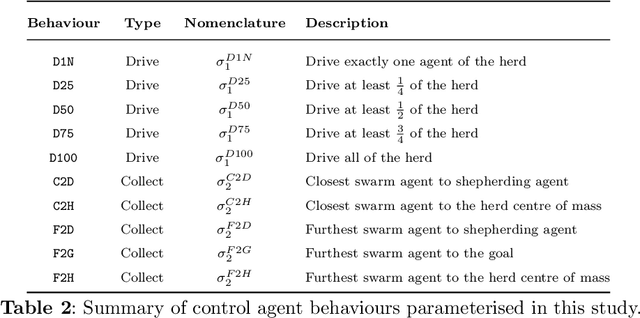

Contextually Aware Intelligent Control Agents for Heterogeneous Swarms

Nov 22, 2022

Abstract: An emerging challenge in swarm shepherding research is to design effective and efficient artificial intelligence algorithms that maintain a low-computational ceiling while increasing the swarm's abilities to operate in diverse contexts. We propose a methodology to design a context-aware swarm-control intelligent agent. The intelligent control agent (shepherd) first uses swarm metrics to recognise the type of swarm it interacts with, and then selects a suitable parameterisation from its behavioural library for that particular swarm type. The design principle of our methodology is to increase the situation awareness (i.e. information contents) of the control agent without sacrificing the low-computational cost necessary for efficient swarm control. We demonstrate successful shepherding in both homogeneous and heterogeneous swarms.

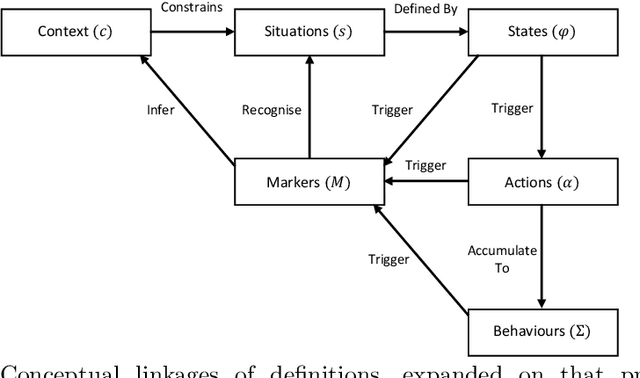

Swarm Analytics: Designing Information Markers to Characterise Swarm Systems in Shepherding Contexts

Aug 26, 2022

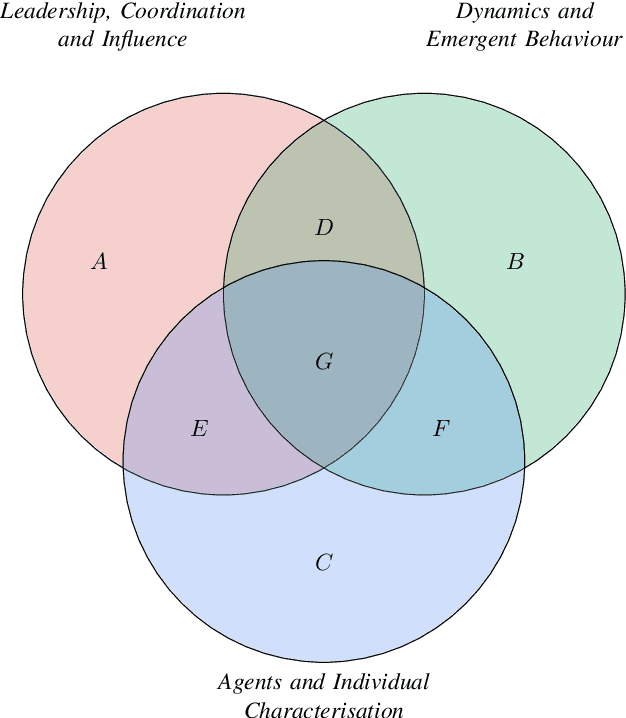

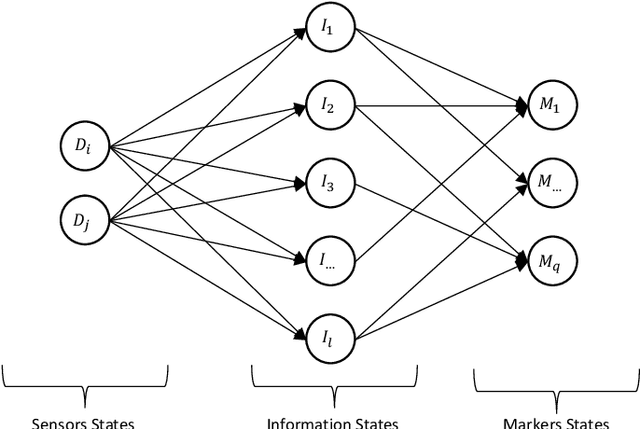

Abstract: Contemporary swarm indicators are often used in isolation, focused on extracting information at the individual or collective levels. These are seldom integrated to infer a top-level operating picture of the swarm, its individual members, and its overall collective dynamics. The primary contribution of this paper is to organise a suite of indicators about swarms into an ontologically-arranged collection of information markers to characterise the swarm from the perspective of an external observer, a recognition agent. Our contribution shows the foundations for a new area of research that we title swarm analytics, whose primary concern is the design and organisation of collections of swarm markers to understand, detect, recognise, track, and learn a particular insight about a swarm system. Our designed framework of information markers presents a new avenue for swarm research, especially for heterogeneous and cognitive swarms that may require more advanced capabilities to detect agencies and categorise agent influences and responses.

Fusing Interpretable Knowledge of Neural Network Learning Agents For Swarm-Guidance

Apr 01, 2022

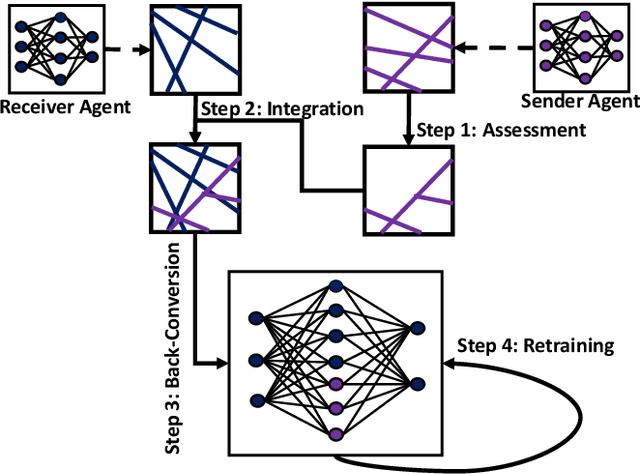

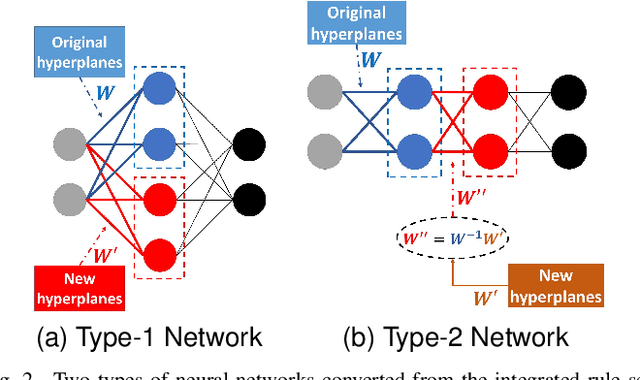

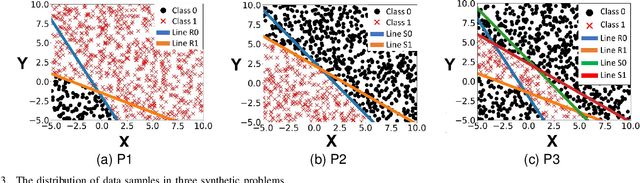

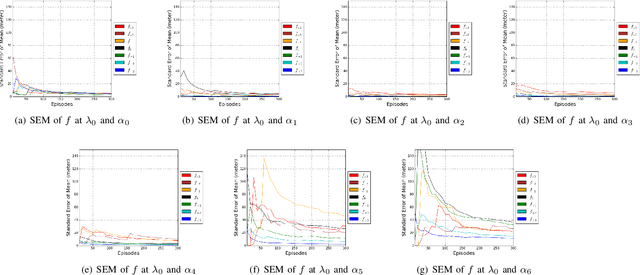

Abstract: Neural-based learning agents make decisions using internal artificial neural networks. In certain situations, it becomes pertinent that this knowledge is re-interpreted in a form that is friendly to both the human and the machine. These situations include: when agents are required to communicate the knowledge they learn to each other in a transparent way in the presence of an external human observer, in human-machine teaming settings where humans and machines need to collaborate on a task, or where there is a requirement to verify the knowledge exchanged between the agents. We propose an interpretable knowledge fusion framework suited for neural-based learning agents, and propose a Priority on Weak State Areas (PoWSA) retraining technique. We first test the proposed framework on a synthetic binary classification task before evaluating it on a shepherding-based multi-agent swarm guidance task. Results demonstrate that the proposed framework increases the success rate on the swarm-guidance environment by 11% and provides better stability, in return for a modest increase in computational cost of 14.5% to achieve interpretability. Moreover, the framework presents the knowledge learnt by an agent in a human-friendly representation, leading to a better descriptive visual representation of an agent's knowledge.

Agile, Antifragile, Artificial-Intelligence-Enabled, Command and Control

Sep 14, 2021

Abstract: Artificial Intelligence (AI) is rapidly becoming integrated into military Command and Control (C2) systems as a strategic priority for many defence forces. The successful implementation of AI is promising to herald a significant leap in C2 agility through automation. However, realistic expectations need to be set on what AI can achieve in the foreseeable future. This paper will argue that AI could lead to a fragility trap, whereby the delegation of C2 functions to an AI could increase the fragility of C2, resulting in catastrophic strategic failures. This calls for a new framework for AI in C2 to avoid this trap. We will argue that antifragility along with agility should form the core design principles for AI-enabled C2 systems. This duality is termed Agile, Antifragile, AI-Enabled Command and Control (A3IC2). An A3IC2 system continuously improves its capacity to perform in the face of shocks and surprises through overcompensation from feedback during the C2 decision-making cycle. An A3IC2 system will not only be able to survive within a complex operational environment, it will also thrive, benefiting from the inevitable shocks and volatility of war.

Disturbances in Influence of a Shepherding Agent is More Impactful than Sensorial Noise During Swarm Guidance

Oct 03, 2020

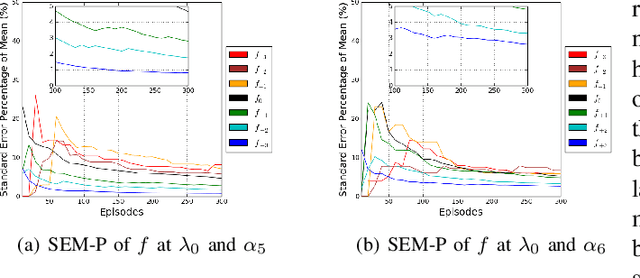

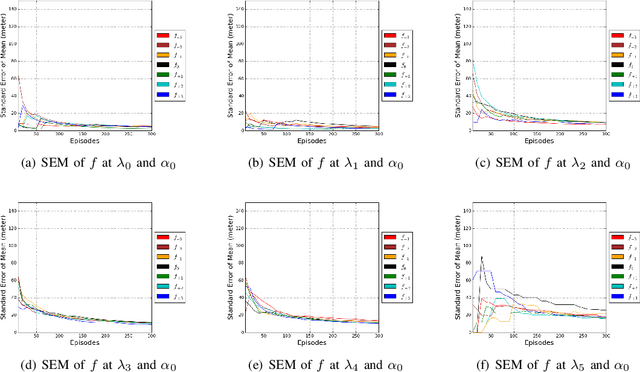

Abstract: The guidance of a large swarm is a challenging control problem. Shepherding offers one approach to guide a large swarm using a few shepherding agents (sheepdogs). While noise is an inherent characteristic in many real-world problems, the impact of noise on shepherding is not a well-studied problem. We study two forms of noise. First, we evaluate noise in the sensorial information received by the shepherd about the location of sheep. Second, we evaluate noise in the ability of the sheepdog to influence sheep due to disturbance forces occurring during actuation. We study both types of noise in this paper, and investigate the performance of Strömbom's approach under these actuation and perception noises. To ensure that the parameterisation of the algorithm creates a stable performance, we need to run a large number of simulations, while increasing the number of random episodes until stability is achieved. We then systematically study the impact of sensorial and actuation noise on performance. Strömbom's approach is found to be more sensitive to actuation noise than perception noise. This implies that it is more important for the shepherding agent to influence the sheep more accurately by reducing actuation noise than attempting to reduce noise in its sensors. Moreover, different levels of noise required different parameterisation for the shepherding agent, where the threshold needed by an agent to decide whether or not to collect astray sheep is different for different noise levels.
