Darryn Reid

Contextually Aware Intelligent Control Agents for Heterogeneous Swarms

Nov 22, 2022

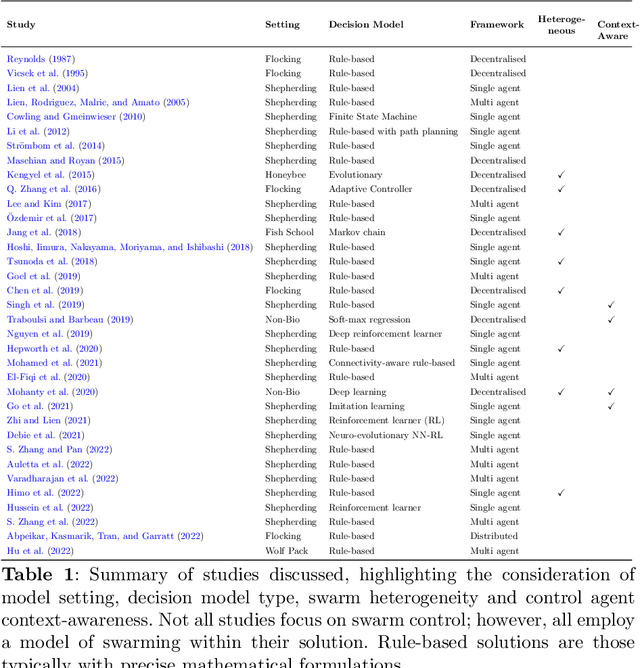

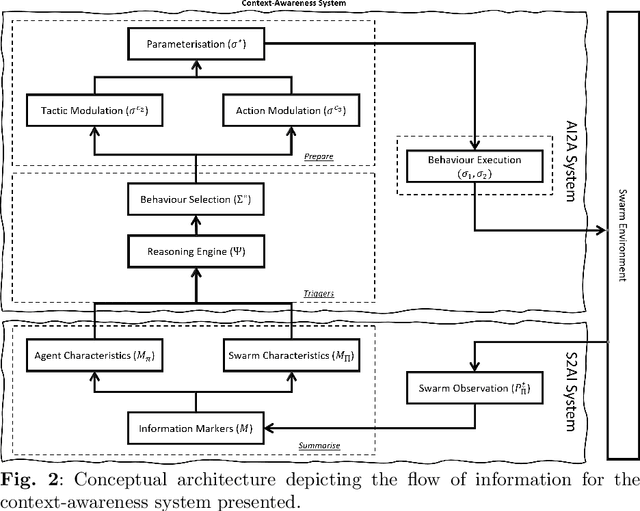

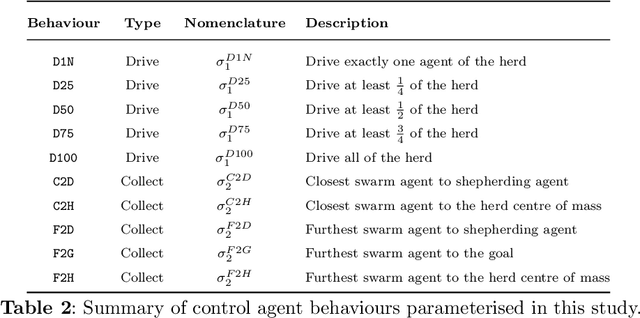

Abstract: An emerging challenge in swarm shepherding research is to design effective and efficient artificial intelligence algorithms that maintain a low computational ceiling while increasing the swarm's ability to operate in diverse contexts. We propose a methodology for designing a context-aware swarm-control intelligent agent. The intelligent control agent (shepherd) first uses swarm metrics to recognise the type of swarm it is interacting with, and then selects a suitable parameterisation from its behavioural library for that swarm type. The design principle of our methodology is to increase the situation awareness (i.e. the information content) of the control agent without sacrificing the low computational cost necessary for efficient swarm control. We demonstrate successful shepherding in both homogeneous and heterogeneous swarms.
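The recognise-then-select loop described in this abstract can be sketched as nearest-centroid classification over swarm metrics, followed by a lookup in a behavioural library. This is a minimal sketch under stated assumptions, not the authors' method: the two metrics (polarisation and normalised spread), the swarm type names, the centroid values, and the `ShepherdParams` fields are all hypothetical placeholders chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ShepherdParams:
    # Hypothetical shepherding parameters; not taken from the paper.
    driving_offset: float
    collecting_offset: float


# Hypothetical behavioural library: one parameterisation per swarm type.
BEHAVIOUR_LIBRARY = {
    "cohesive": ShepherdParams(driving_offset=2.0, collecting_offset=1.0),
    "dispersed": ShepherdParams(driving_offset=4.0, collecting_offset=2.5),
}

# Hypothetical metric centroids per swarm type: (polarisation, normalised spread).
TYPE_CENTROIDS = {
    "cohesive": (0.8, 0.2),
    "dispersed": (0.3, 0.7),
}


def polarisation(headings):
    """Magnitude of the mean unit heading vector, in [0, 1]."""
    sx = sum(math.cos(h) for h in headings)
    sy = sum(math.sin(h) for h in headings)
    return math.hypot(sx, sy) / len(headings)


def spread(positions, scale):
    """Mean distance to the centre of mass, divided by a reference scale, capped at 1."""
    cx = sum(x for x, _ in positions) / len(positions)
    cy = sum(y for _, y in positions) / len(positions)
    mean_d = sum(math.hypot(x - cx, y - cy) for x, y in positions) / len(positions)
    return min(mean_d / scale, 1.0)


def recognise_swarm_type(positions, headings, scale=10.0):
    """Classify the swarm by the nearest type centroid in metric space."""
    metrics = (polarisation(headings), spread(positions, scale))
    return min(TYPE_CENTROIDS, key=lambda t: math.dist(metrics, TYPE_CENTROIDS[t]))


def select_parameters(positions, headings):
    """Recognise the swarm type, then look up its parameterisation."""
    return BEHAVIOUR_LIBRARY[recognise_swarm_type(positions, headings)]
```

Both metrics are cheap (linear in the number of agents), which is in the spirit of the low-computational-cost principle stated in the abstract; a real system would calibrate the centroids from observed swarms rather than hard-code them.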

Swarm Analytics: Designing Information Markers to Characterise Swarm Systems in Shepherding Contexts

Aug 26, 2022

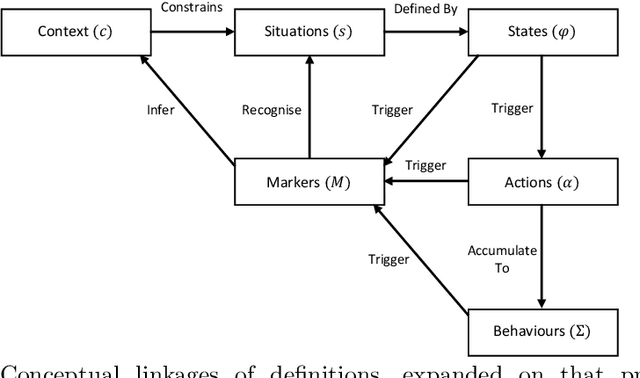

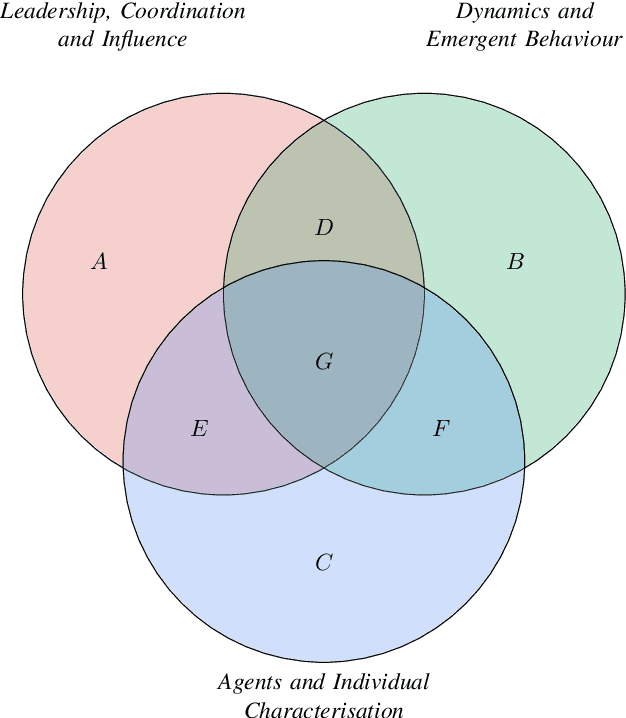

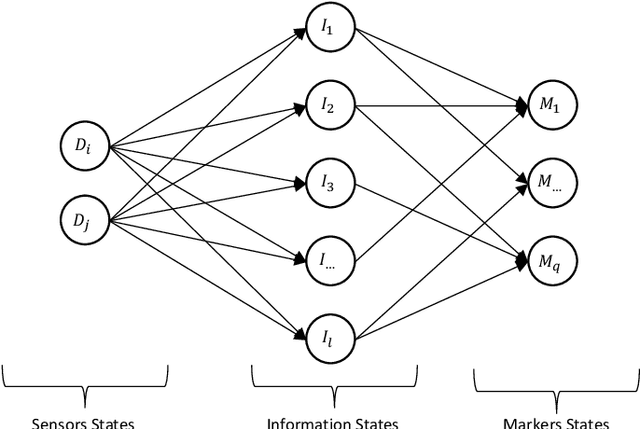

Abstract: Contemporary swarm indicators are often used in isolation, focused on extracting information at either the individual or the collective level. They are seldom integrated to infer a top-level operating picture of the swarm, its individual members, and its overall collective dynamics. The primary contribution of this paper is to organise a suite of swarm indicators into an ontologically arranged collection of information markers that characterise the swarm from the perspective of an external observer, namely a recognition agent. Our contribution lays the foundations for a new area of research that we term swarm analytics, whose primary concern is the design and organisation of collections of swarm markers to understand, detect, recognise, track, and learn particular insights about a swarm system. Our framework of information markers opens a new avenue for swarm research, especially for heterogeneous and cognitive swarms that may require more advanced capabilities to detect agencies and categorise agent influences and responses.
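A minimal sketch of how information markers might be organised by level and evaluated together by an external observer, assuming the observer sees a snapshot of agent positions and velocities. The two-level ontology (individual and collective), the marker names, and the snapshot format are hypothetical illustrations; the paper's actual ontology is not reproduced here.

```python
import math
from statistics import mean


def speeds(snapshot):
    """Individual-level marker: each agent's speed."""
    return [math.hypot(vx, vy) for vx, vy in snapshot["velocities"]]


def centre_of_mass(snapshot):
    """Collective-level marker: mean position of the swarm."""
    xs, ys = zip(*snapshot["positions"])
    return (mean(xs), mean(ys))


def polarisation(snapshot):
    """Collective-level marker: alignment of non-zero velocities, in [0, 1]."""
    units = []
    for vx, vy in snapshot["velocities"]:
        s = math.hypot(vx, vy)
        if s > 0:  # stationary agents carry no heading information
            units.append((vx / s, vy / s))
    if not units:
        return 0.0
    sx = sum(u for u, _ in units)
    sy = sum(v for _, v in units)
    return math.hypot(sx, sy) / len(units)


# Hypothetical ontology: level -> marker name -> marker function.
MARKER_ONTOLOGY = {
    "individual": {"speed": speeds},
    "collective": {"centre_of_mass": centre_of_mass, "polarisation": polarisation},
}


def characterise(snapshot, ontology=MARKER_ONTOLOGY):
    """Evaluate every marker and return the results grouped by level."""
    return {
        level: {name: marker(snapshot) for name, marker in markers.items()}
        for level, markers in ontology.items()
    }
```

Keeping the markers in one nested mapping, rather than as isolated indicators, is what lets a recognition agent read individual and collective information side by side; adding a level or marker only means adding an entry.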

Collaborative Human-Agent Planning for Resilience

Apr 29, 2021

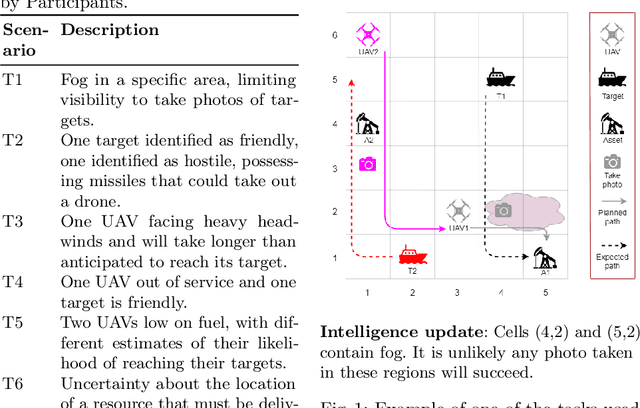

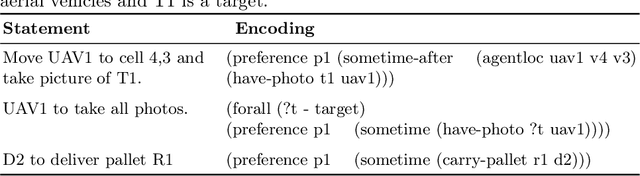

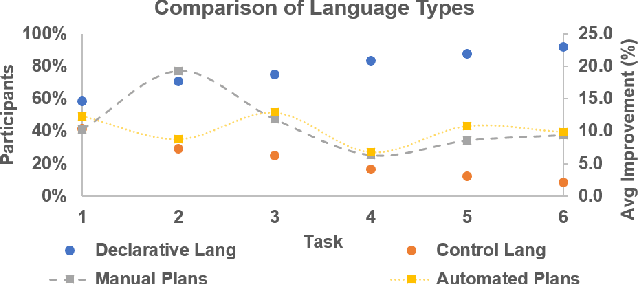

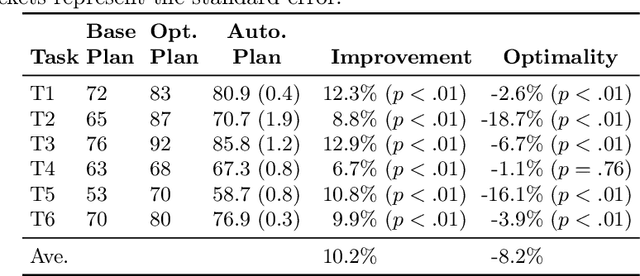

Abstract: Intelligent agents powered by AI planning assist people in complex scenarios, such as managing teams of semi-autonomous vehicles. However, AI planning models may be incomplete, leading to plans that do not adequately meet the stated objectives, especially in unpredicted situations. Humans, who are apt at identifying and adapting to unusual situations, may be able to assist planning agents in these situations by encoding their knowledge into a planner at run time. We investigate whether people can collaborate with agents by providing their knowledge to an agent using linear temporal logic (LTL) at run time, without changing the agent's domain model. We presented 24 participants with baseline plans for situations in which a planner had limitations, and asked them for workarounds for these limitations. We encoded these workarounds as LTL constraints. Results show that participants' constraints improved the expected return of the plans by 10% (p < 0.05) relative to the baseline plans, demonstrating that human insight can be used in collaborative planning for resilience. However, over time participants used more declarative than control constraints, and declarative constraints produced plans less similar to the participants' expectations, which could lead to trust issues.
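To illustrate what an LTL workaround constrains, the sketch below checks a finished plan, represented as a finite, non-empty sequence of states (each a set of true propositions), against LTL formulas under finite-trace semantics. It does not show how the authors supplied constraints to their planner; the tuple encoding of formulas, the proposition names, and the example plan are hypothetical.

```python
def holds(formula, trace, i=0):
    """Evaluate an LTL formula at position i of a finite, non-empty trace."""
    op = formula[0]
    if op == "atom":
        return formula[1] in trace[i]
    if op == "not":
        return not holds(formula[1], trace, i)
    if op == "and":
        return holds(formula[1], trace, i) and holds(formula[2], trace, i)
    if op == "X":  # strong next: a successor state must exist
        return i + 1 < len(trace) and holds(formula[1], trace, i + 1)
    if op == "F":  # eventually
        return any(holds(formula[1], trace, j) for j in range(i, len(trace)))
    if op == "G":  # always
        return all(holds(formula[1], trace, j) for j in range(i, len(trace)))
    if op == "U":  # formula[1] holds until formula[2] becomes true
        return any(
            holds(formula[2], trace, j)
            and all(holds(formula[1], trace, k) for k in range(i, j))
            for j in range(i, len(trace))
        )
    raise ValueError(f"unknown operator {op!r}")


# Hypothetical plan for one vehicle: the set of true propositions per step.
plan = [{"at_base"}, {"in_zone_a"}, {"refuelled"}, {"at_target"}]

# Hypothetical participant workarounds expressed as LTL constraints.
avoid_zone_a = ("G", ("not", ("atom", "in_zone_a")))
refuel_before_target = ("U", ("not", ("atom", "at_target")), ("atom", "refuelled"))
reach_target = ("F", ("atom", "at_target"))
```

Here `holds(avoid_zone_a, plan)` is false because the plan passes through zone A, while `refuel_before_target` and `reach_target` are both satisfied. The recursive evaluator is quadratic in the worst case, which is acceptable for the short traces of a sketch.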