Hooman Esfandiari

Domain adaptation strategies for 3D reconstruction of the lumbar spine using real fluoroscopy data

Jan 29, 2024

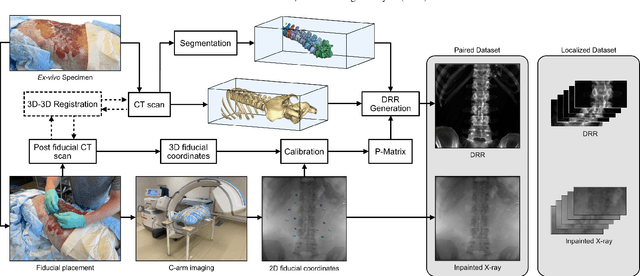

Abstract:This study tackles key obstacles in adopting surgical navigation in orthopedic surgeries, including time, cost, radiation, and workflow integration challenges. Recently, our work X23D showed an approach for generating 3D anatomical models of the spine from only a few intraoperative fluoroscopic images. This negates the need for conventional registration-based surgical navigation by creating a direct intraoperative 3D reconstruction of the anatomy. Despite these strides, the practical application of X23D has been limited by a domain gap between synthetic training data and real intraoperative images. In response, we devised a novel data collection protocol for a paired dataset consisting of synthetic and real fluoroscopic images from the same perspectives. Utilizing this dataset, we refined our deep learning model via transfer learning, effectively bridging the domain gap between synthetic and real X-ray data. A novel style transfer mechanism also allows us to convert real X-rays to mirror the synthetic domain, enabling our in-silico-trained X23D model to achieve high accuracy in real-world settings. Our results demonstrated that the refined model can rapidly generate accurate 3D reconstructions of the entire lumbar spine from as few as three intraoperative fluoroscopic shots. It achieved an 84% F1 score, matching the accuracy of our previous synthetic data-based research. Additionally, with a computational time of only 81.1 ms, our approach provides real-time capabilities essential for surgery integration. Through examining ideal imaging setups and view angle dependencies, we've further confirmed our system's practicality and dependability in clinical settings. Our research marks a significant step forward in intraoperative 3D reconstruction, offering enhancements to surgical planning, navigation, and robotics.

Automatic registration with continuous pose updates for marker-less surgical navigation in spine surgery

Aug 05, 2023Abstract:Established surgical navigation systems for pedicle screw placement have been proven to be accurate, but still reveal limitations in registration or surgical guidance. Registration of preoperative data to the intraoperative anatomy remains a time-consuming, error-prone task that includes exposure to harmful radiation. Surgical guidance through conventional displays has well-known drawbacks, as information cannot be presented in-situ and from the surgeon's perspective. Consequently, radiation-free and more automatic registration methods with subsequent surgeon-centric navigation feedback are desirable. In this work, we present an approach that automatically solves the registration problem for lumbar spinal fusion surgery in a radiation-free manner. A deep neural network was trained to segment the lumbar spine and simultaneously predict its orientation, yielding an initial pose for preoperative models, which then is refined for each vertebra individually and updated in real-time with GPU acceleration while handling surgeon occlusions. An intuitive surgical guidance is provided thanks to the integration into an augmented reality based navigation system. The registration method was verified on a public dataset with a mean of 96\% successful registrations, a target registration error of 2.73 mm, a screw trajectory error of 1.79{\deg} and a screw entry point error of 2.43 mm. Additionally, the whole pipeline was validated in an ex-vivo surgery, yielding a 100\% screw accuracy and a registration accuracy of 1.20 mm. Our results meet clinical demands and emphasize the potential of RGB-D data for fully automatic registration approaches in combination with augmented reality guidance.

Safe Deep RL for Intraoperative Planning of Pedicle Screw Placement

May 10, 2023

Abstract:Spinal fusion surgery requires highly accurate implantation of pedicle screw implants, which must be conducted in critical proximity to vital structures with a limited view of anatomy. Robotic surgery systems have been proposed to improve placement accuracy, however, state-of-the-art systems suffer from the limitations of open-loop approaches, as they follow traditional concepts of preoperative planning and intraoperative registration, without real-time recalculation of the surgical plan. In this paper, we propose an intraoperative planning approach for robotic spine surgery that leverages real-time observation for drill path planning based on Safe Deep Reinforcement Learning (DRL). The main contributions of our method are (1) the capability to guarantee safe actions by introducing an uncertainty-aware distance-based safety filter; and (2) the ability to compensate for incomplete intraoperative anatomical information, by encoding a-priori knowledge about anatomical structures with a network pre-trained on high-fidelity anatomical models. Planning quality was assessed by quantitative comparison with the gold standard (GS) drill planning. In experiments with 5 models derived from real magnetic resonance imaging (MRI) data, our approach was capable of achieving 90% bone penetration with respect to the GS while satisfying safety requirements, even under observation and motion uncertainty. To the best of our knowledge, our approach is the first safe DRL approach focusing on orthopedic surgeries.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge