Florentin Liebmann

SurgPointTransformer: Vertebrae Shape Completion with RGB-D Data

Oct 02, 2024

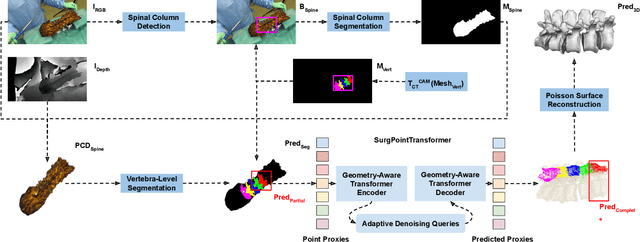

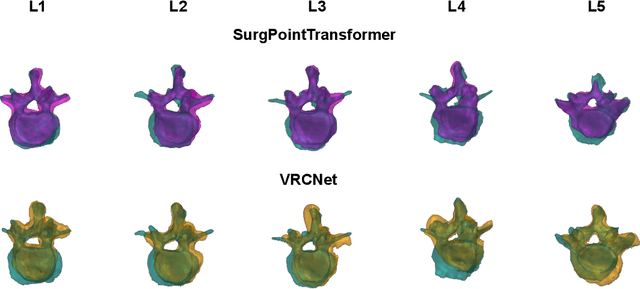

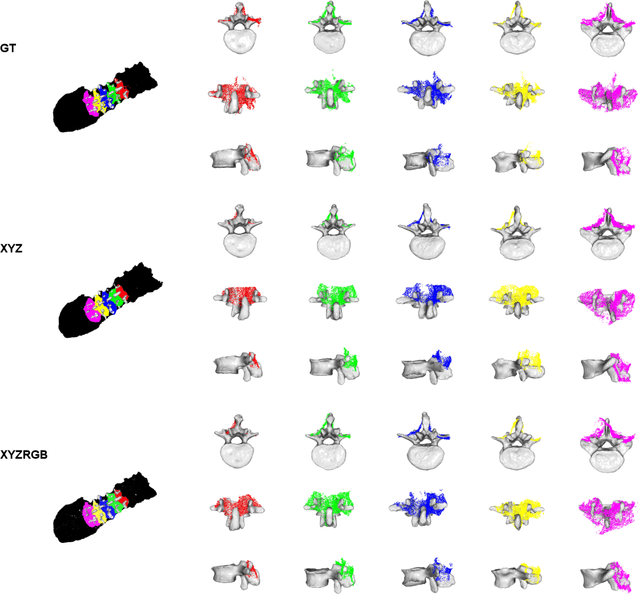

Abstract: State-of-the-art computer- and robot-assisted surgery systems depend heavily on intraoperative imaging technologies such as CT and fluoroscopy to generate detailed 3D visualizations of the patient's anatomy. While these imaging techniques are highly accurate, they rely on ionizing radiation, to which both patients and clinicians are exposed. This study introduces an alternative, radiation-free approach for reconstructing the 3D spine anatomy using RGB-D data. Drawing inspiration from the 3D "mental map" that surgeons form during surgery, we introduce SurgPointTransformer, a shape completion approach for surgical applications that can accurately reconstruct the unexposed spine regions from sparse observations of the exposed surface. Our method involves two main steps: segmentation and shape completion. The segmentation step includes spinal column localization and segmentation, followed by vertebra-wise segmentation. The segmented vertebra point clouds are then passed to SurgPointTransformer, which leverages an attention mechanism to learn patterns between visible surface features and the underlying anatomy. For evaluation, we utilize an ex-vivo dataset of nine specimens. Their CT data is used to establish the ground truth against which the outputs of our method are compared. Our method significantly outperforms the state-of-the-art baselines, achieving an average Chamfer Distance of 5.39, an F-Score of 0.85, an Earth Mover's Distance of 0.011, and a Signal-to-Noise Ratio of 22.90 dB. This study demonstrates the potential of our reconstruction method for 3D vertebral shape completion. It enables 3D reconstruction of the entire lumbar spine and surgical guidance without ionizing radiation or invasive imaging. Our work contributes to computer-aided and robot-assisted surgery, advancing the perception and intelligence of these systems.
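To make two of the reported metrics concrete, the following is a minimal sketch of a Chamfer Distance and an F-Score between a completed point cloud and a ground-truth point cloud. The abstract does not state which variant of either metric was used (squared or unsquared distances, sum or mean, distance threshold), so the choices below (unsquared Euclidean distances, mean over points, a caller-supplied `threshold`) and all function names are assumptions made for illustration only.

```python
import numpy as np

def pairwise_distances(a, b):
    """Euclidean distances between every point in a (N, 3) and b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def chamfer_distance(pred, gt):
    """Symmetric Chamfer Distance (assumed variant: mean of unsquared
    nearest-neighbour distances, summed over both directions)."""
    d = pairwise_distances(pred, gt)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def f_score(pred, gt, threshold):
    """F-Score at a distance threshold (the threshold is an assumption;
    the abstract does not report one)."""
    d = pairwise_distances(pred, gt)
    precision = (d.min(axis=1) < threshold).mean()  # predicted points near gt
    recall = (d.min(axis=0) < threshold).mean()     # gt points near prediction
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

if __name__ == "__main__":
    gt = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
    pred = gt + np.array([1.0, 0.0, 0.0])  # prediction shifted by one unit
    print(chamfer_distance(pred, gt))
    print(f_score(pred, gt, threshold=2.0))
```

The brute-force distance matrix keeps the sketch short; for dense vertebra point clouds a spatial index such as a k-d tree would usually be preferable.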

Automatic registration with continuous pose updates for marker-less surgical navigation in spine surgery

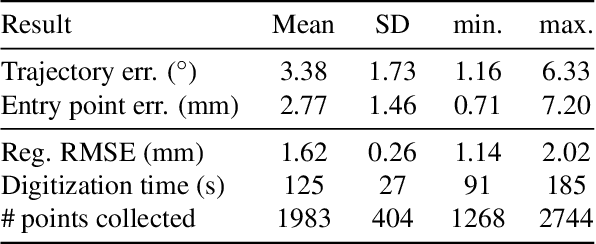

Aug 05, 2023

Abstract: Established surgical navigation systems for pedicle screw placement have been proven to be accurate, but still reveal limitations in registration or surgical guidance. Registration of preoperative data to the intraoperative anatomy remains a time-consuming, error-prone task that includes exposure to harmful radiation. Surgical guidance through conventional displays has well-known drawbacks, as information cannot be presented in-situ and from the surgeon's perspective. Consequently, radiation-free and more automatic registration methods with subsequent surgeon-centric navigation feedback are desirable. In this work, we present an approach that automatically solves the registration problem for lumbar spinal fusion surgery in a radiation-free manner. A deep neural network was trained to segment the lumbar spine and simultaneously predict its orientation, yielding an initial pose for preoperative models, which is then refined for each vertebra individually and updated in real-time with GPU acceleration while handling surgeon occlusions. Intuitive surgical guidance is provided through integration into an augmented reality based navigation system. The registration method was verified on a public dataset with a mean of 96% successful registrations, a target registration error of 2.73 mm, a screw trajectory error of 1.79° and a screw entry point error of 2.43 mm. Additionally, the whole pipeline was validated in an ex-vivo surgery, yielding a 100% screw accuracy and a registration accuracy of 1.20 mm. Our results meet clinical demands and emphasize the potential of RGB-D data for fully automatic registration approaches in combination with augmented reality guidance.
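The error measures reported above can be illustrated with a short sketch. It assumes that a registration is represented as a 4x4 homogeneous rigid transform, that the target registration error is the mean distance between target points mapped by the estimated and by the ground-truth transform, and that the screw trajectory error is the angle between two direction vectors. The abstract does not spell out these definitions, so both the formulas and the function names are illustrative assumptions.

```python
import numpy as np

def apply_transform(T, points):
    """Apply a 4x4 homogeneous rigid transform to (N, 3) points."""
    return points @ T[:3, :3].T + T[:3, 3]

def target_registration_error(T_est, T_gt, targets):
    """Mean distance between targets mapped by the estimated and the
    ground-truth transform (assumed definition)."""
    diff = apply_transform(T_est, targets) - apply_transform(T_gt, targets)
    return np.linalg.norm(diff, axis=1).mean()

def trajectory_angle_error_deg(dir_est, dir_gt):
    """Angle in degrees between two screw trajectory direction vectors."""
    cos = np.dot(dir_est, dir_gt) / (np.linalg.norm(dir_est) * np.linalg.norm(dir_gt))
    # Clip guards against values marginally outside [-1, 1] from rounding.
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

if __name__ == "__main__":
    T_gt = np.eye(4)
    T_est = np.eye(4)
    T_est[:3, 3] = [3.0, 4.0, 0.0]  # estimate is off by a pure translation
    targets = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]])
    print(target_registration_error(T_est, T_gt, targets))
    print(trajectory_angle_error_deg(np.array([1.0, 0.0, 0.0]),
                                     np.array([0.0, 1.0, 0.0])))
```

Under the same assumptions, an entry point error would be the distance between the planned entry point mapped by the two transforms, which is the single-point case of `target_registration_error`.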

Registration made easy -- standalone orthopedic navigation with HoloLens

Jan 17, 2020

Abstract: In surgical navigation, finding the correspondence between the preoperative plan and the intraoperative anatomy, the so-called registration task, is imperative. One promising approach is to intraoperatively digitize the anatomy and register it with the preoperative plan. State-of-the-art commercial navigation systems implement such approaches for pedicle screw placement in spinal fusion surgery. Although these systems improve surgical accuracy, they are not the gold standard in clinical practice. Besides economic reasons, this may be due to their difficult integration into clinical workflows and unintuitive navigation feedback. Augmented Reality has the potential to overcome these limitations. Consequently, we propose a surgical navigation approach comprising intraoperative surface digitization for registration and intuitive holographic navigation for pedicle screw placement that runs entirely on the Microsoft HoloLens. Preliminary results from phantom experiments suggest that the method may meet clinical accuracy requirements.
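As an illustration of what registering digitized anatomy with a preoperative plan can involve, the sketch below computes a least-squares rigid transform between two point sets with the Kabsch algorithm. The abstract does not say which registration algorithm is used, so this is a swapped-in standard technique and not the authors' method. It also assumes known point-to-point correspondences, which surface digitization does not provide directly (surface-based methods such as ICP estimate them iteratively). The name `rigid_fit` is hypothetical.

```python
import numpy as np

def rigid_fit(source, target):
    """Least-squares rigid transform (R, t) mapping source onto target,
    assuming row i of source corresponds to row i of target (Kabsch)."""
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Correct a possible reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t

if __name__ == "__main__":
    angle = np.radians(30.0)
    R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                       [np.sin(angle),  np.cos(angle), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([5.0, -2.0, 1.0])
    plan = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    digitized = plan @ R_true.T + t_true  # simulated intraoperative points
    R, t = rigid_fit(plan, digitized)
    print(np.allclose(R, R_true), np.allclose(t, t_true))
```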
