Haoqi Wu

CAPE: Context-Aware Prompt Perturbation Mechanism with Differential Privacy

May 09, 2025

Abstract: Large Language Models (LLMs) have gained significant popularity due to their remarkable capabilities in text understanding and generation. However, despite their widespread deployment in inference services such as ChatGPT, concerns about the potential leakage of sensitive user data have arisen. Existing solutions primarily rely on privacy-enhancing technologies to mitigate such risks and face a trade-off among efficiency, privacy, and utility. To narrow this gap, we propose Cape, a context-aware prompt perturbation mechanism based on differential privacy, to enable efficient inference with an improved privacy-utility trade-off. Concretely, we introduce a hybrid utility function that better captures token similarity. Additionally, we propose a bucketized sampling mechanism to handle the large sampling space, which might lead to long-tail phenomena. Extensive experiments across multiple datasets, along with ablation studies, demonstrate that Cape achieves a better privacy-utility trade-off than prior state-of-the-art works.
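
To make the idea of sampling over buckets rather than individual tokens concrete, here is a minimal sketch of a generic exponential mechanism combined with equal-width utility buckets. It is not Cape's actual algorithm: the abstract does not specify the hybrid utility function, the bucketing rule, or the privacy accounting, so the function names, the `sensitivity` default, and the equal-width bucketing are all assumptions made for illustration.

```python
import math
import random


def exponential_mechanism(utilities, epsilon, sensitivity=1.0, rng=random):
    """Sample an index with probability proportional to
    exp(epsilon * utility / (2 * sensitivity))."""
    top = max(utilities)  # subtract the max so the exponent never overflows
    weights = [math.exp(epsilon * (u - top) / (2.0 * sensitivity))
               for u in utilities]
    return rng.choices(range(len(utilities)), weights=weights, k=1)[0]


def bucketized_sample(utilities, epsilon, num_buckets, rng=random):
    """Hypothetical two-stage sampler: choose a utility bucket with the
    exponential mechanism, then choose a token uniformly inside it."""
    lo, hi = min(utilities), max(utilities)
    width = (hi - lo) / num_buckets or 1.0  # all-equal utilities: one bucket
    buckets = [[] for _ in range(num_buckets)]
    for idx, u in enumerate(utilities):
        b = min(int((u - lo) / width), num_buckets - 1)
        buckets[b].append(idx)
    non_empty = [b for b in buckets if b]
    # Assumption: a bucket is scored by the mean utility of its members.
    bucket_scores = [sum(utilities[i] for i in b) / len(b) for b in non_empty]
    chosen = non_empty[exponential_mechanism(bucket_scores, epsilon, rng=rng)]
    return rng.choice(chosen)


# Toy utilities for four candidate replacement tokens (made-up values).
toy_utilities = [0.0, 0.1, 0.9, 1.0]
picked = bucketized_sample(toy_utilities, epsilon=1000.0, num_buckets=2,
                           rng=random.Random(0))
```

With a very large `epsilon` the sampler almost always lands in the high-utility bucket; smaller values spread probability across buckets. Grouping many low-utility tokens into a few buckets is one way to keep a long tail of unlikely candidates from dominating the total probability mass, though this sketch makes no claim about the resulting privacy guarantee.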

Nimbus: Secure and Efficient Two-Party Inference for Transformers

Nov 24, 2024

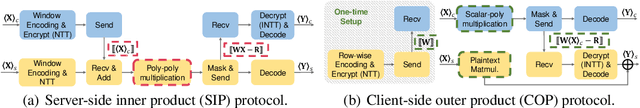

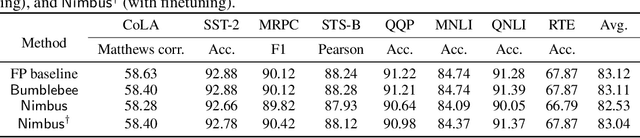

Abstract: Transformer models have gained significant attention due to their power in machine learning tasks. Their extensive deployment has raised concerns about the potential leakage of sensitive information during inference. However, when applied to Transformers, existing approaches based on secure two-party computation (2PC) suffer from efficiency limitations in two respects: (1) resource-intensive matrix multiplications in linear layers, and (2) complex non-linear activation functions like $\mathsf{GELU}$ and $\mathsf{Softmax}$. This work presents a new two-party inference framework $\mathsf{Nimbus}$ for Transformer models. For the linear layer, we propose a new 2PC paradigm along with an encoding approach to securely compute matrix multiplications based on an outer-product insight, which achieves $2.9\times \sim 12.5\times$ performance improvements compared to the state-of-the-art (SOTA) protocol. For the non-linear layer, through a new observation of utilizing the input distribution, we propose an approach of low-degree polynomial approximation for $\mathsf{GELU}$ and $\mathsf{Softmax}$, which improves the performance of the SOTA polynomial approximation by $2.9\times \sim 4.0\times$, where the average accuracy loss of our approach is 0.08% compared to the non-2PC inference without privacy. Compared with the SOTA two-party inference, $\mathsf{Nimbus}$ improves the end-to-end performance of BERT inference by $2.7\times \sim 4.7\times$ across different network settings.
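
The notion of fitting a low-degree polynomial to the inputs a layer actually sees can be illustrated in plaintext with ordinary least squares. The sketch below is not the Nimbus procedure, which the abstract does not detail. It assumes, purely for illustration, that GELU inputs are roughly standard normal and clipped to $[-3, 3]$, and fits a degree-4 polynomial to samples from that distribution; all function names are hypothetical.

```python
import math
import random


def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination
    with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_poly(xs, ys, degree):
    """Least-squares coefficients c[0..degree] of p(x) = sum_k c[k] * x**k,
    obtained from the normal equations."""
    n = degree + 1
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    return solve(ata, aty)


def eval_poly(coeffs, x):
    """Evaluate the polynomial with Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


rng = random.Random(0)
# Assumption: inputs are approximately N(0, 1), clipped to [-3, 3].
samples = [max(-3.0, min(3.0, rng.gauss(0.0, 1.0))) for _ in range(2000)]
coeffs = fit_poly(samples, [gelu(x) for x in samples], degree=4)
mse = sum((eval_poly(coeffs, x) - gelu(x)) ** 2 for x in samples) / len(samples)
```

Because the samples concentrate near zero, the fit spends its accuracy where inputs are common and tolerates larger error in the rarely visited tails. A polynomial matters in the 2PC setting because it needs only additions and multiplications, which are far cheaper to evaluate securely than the exact activation.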

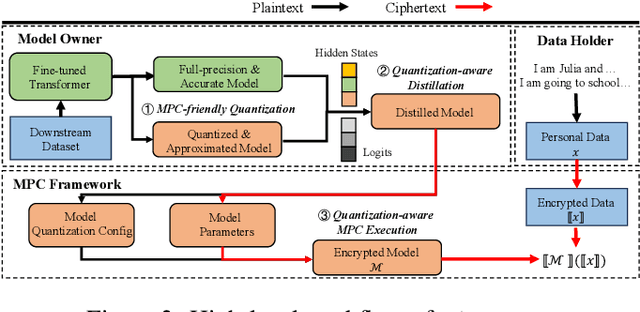

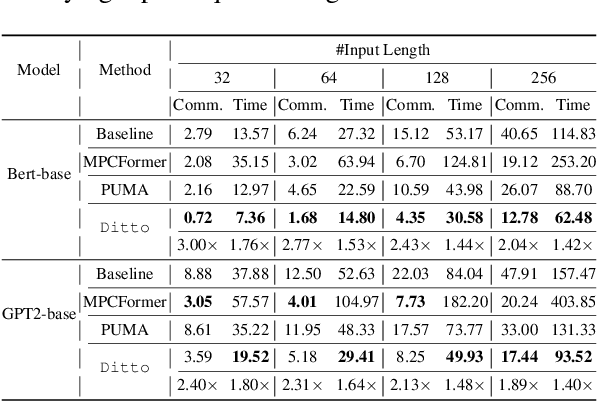

Ditto: Quantization-aware Secure Inference of Transformers upon MPC

May 09, 2024

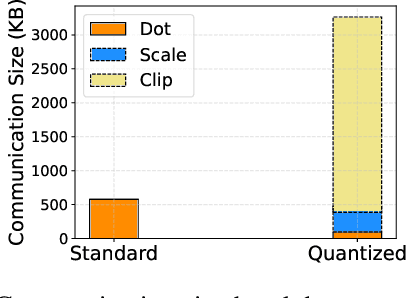

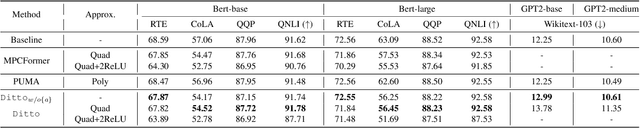

Abstract: Due to rising privacy concerns over sensitive client data and trained models like Transformers, secure multi-party computation (MPC) techniques are employed to enable secure inference despite the attendant overhead. Existing works attempt to reduce the overhead using more MPC-friendly non-linear function approximations. However, how to integrate quantization, which is widely used in plaintext inference, into the MPC domain remains unclear. To bridge this gap, we propose a framework named Ditto to enable more efficient quantization-aware secure Transformer inference. Concretely, we first incorporate an MPC-friendly quantization into Transformer inference and employ a quantization-aware distillation procedure to maintain model utility. Then, we propose novel MPC primitives to support the type conversions that are essential in quantization and implement the quantization-aware MPC execution of secure quantized inference. This approach significantly decreases both computation and communication overhead, leading to improvements in overall efficiency. We conduct extensive experiments on BERT and GPT2 models to evaluate the performance of Ditto. The results demonstrate that Ditto is about $3.14\sim 4.40\times$ faster than MPCFormer (ICLR 2023) and $1.44\sim 2.35\times$ faster than the state-of-the-art work PUMA with negligible utility degradation.
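
As a plaintext illustration of what a type conversion between fixed-point formats involves, the following sketch encodes reals into rings of two different bit-widths and casts between them. It operates on cleartext integers rather than secret shares, and the bit-widths (32-bit with 8 fractional bits, 64-bit with 18 fractional bits) and function names are assumptions chosen for the example, not values taken from Ditto.

```python
def encode(x, frac_bits, total_bits):
    """Encode a real as a two's-complement fixed-point element of Z_{2^total_bits}."""
    return round(x * (1 << frac_bits)) % (1 << total_bits)


def to_signed(v, total_bits):
    """Interpret a ring element as a signed integer."""
    return v - (1 << total_bits) if v >= 1 << (total_bits - 1) else v


def decode(v, frac_bits, total_bits):
    """Map a ring element back to a real number."""
    return to_signed(v, total_bits) / (1 << frac_bits)


def upcast(v, from_frac, from_bits, to_frac, to_bits):
    """Move to a wider ring with more fractional bits (left shift)."""
    return (to_signed(v, from_bits) << (to_frac - from_frac)) % (1 << to_bits)


def downcast(v, from_frac, from_bits, to_frac, to_bits):
    """Move to a narrower ring with fewer fractional bits
    (arithmetic right shift, which discards low-order precision)."""
    return (to_signed(v, from_bits) >> (from_frac - to_frac)) % (1 << to_bits)


low = encode(-1.5, frac_bits=8, total_bits=32)
high = upcast(low, 8, 32, 18, 64)
back = downcast(high, 18, 64, 8, 32)
```

In cleartext these casts are a sign interpretation followed by a shift. Over secret shares neither step is free, since the sign and the carried bits are not locally visible to any party, which is why dedicated MPC primitives for such conversions are needed.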

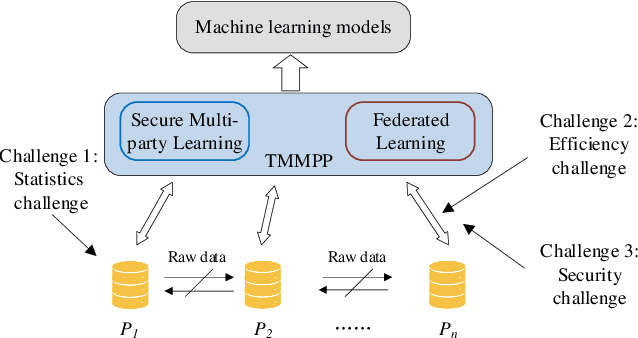

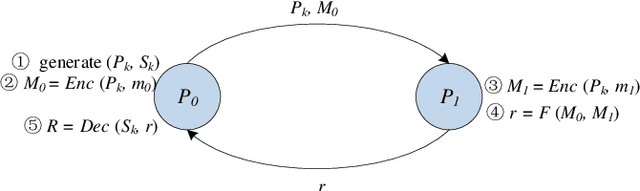

SoK: Training Machine Learning Models over Multiple Sources with Privacy Preservation

Dec 06, 2020

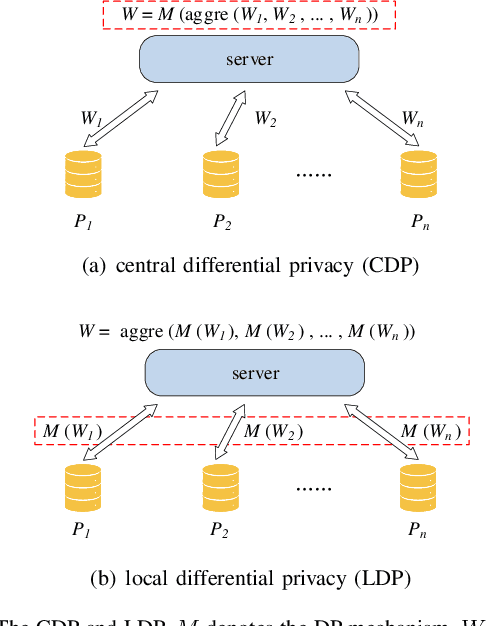

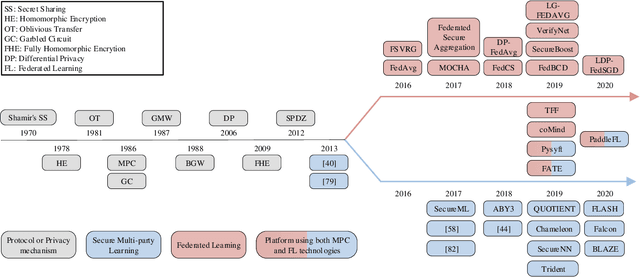

Abstract: Gathering high-quality training data from multiple data controllers while preserving privacy is a key challenge in training high-quality machine learning models. Potential solutions could break down the barriers among isolated data corpora and consequently enlarge the range of data available for processing. To this end, both academic researchers and industrial vendors have recently been strongly motivated to propose two mainstream families of solutions: 1) Secure Multi-party Learning (MPL for short); and 2) Federated Learning (FL for short). These two solutions each have advantages and limitations when evaluated in terms of privacy preservation, ways of communication, communication overhead, format of data, accuracy of trained models, and application scenarios. To present the research progress and discuss insights on future directions, we thoroughly investigate the protocols and frameworks of both MPL and FL. First, we define the problem of training machine learning models over multiple data sources with privacy preservation (TMMPP for short). Then, we compare recent studies of TMMPP in terms of technical routes, parties supported, data partitioning, threat model, and supported machine learning models, to show their advantages and limitations. Next, we introduce the state-of-the-art platforms that support online training over multiple data sources. Finally, we discuss potential directions for resolving the problem of TMMPP.