Han-Lim Choi

Aircraft Trajectory Segmentation-based Contrastive Coding: A Framework for Self-supervised Trajectory Representation

Jul 29, 2024

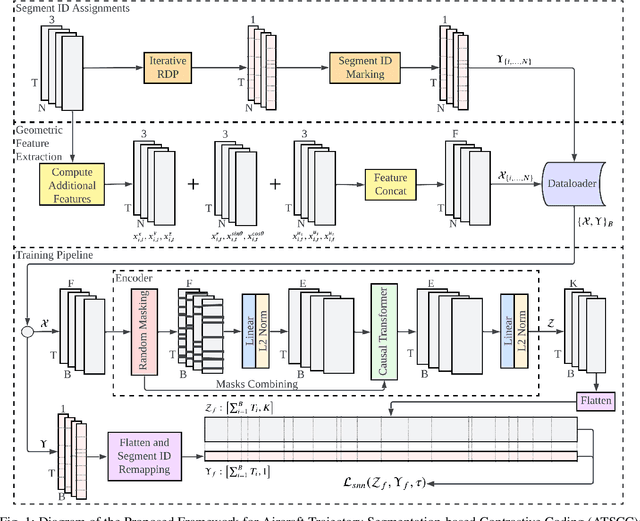

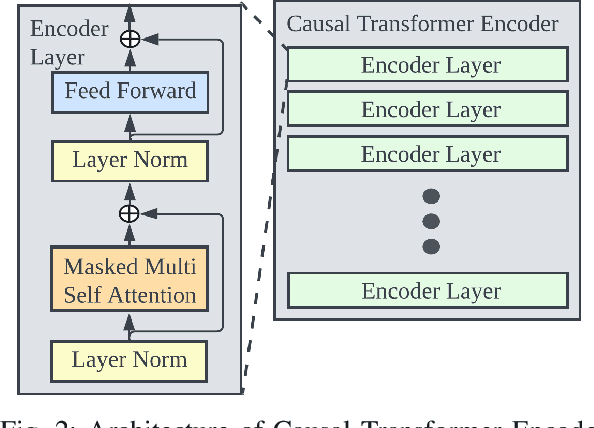

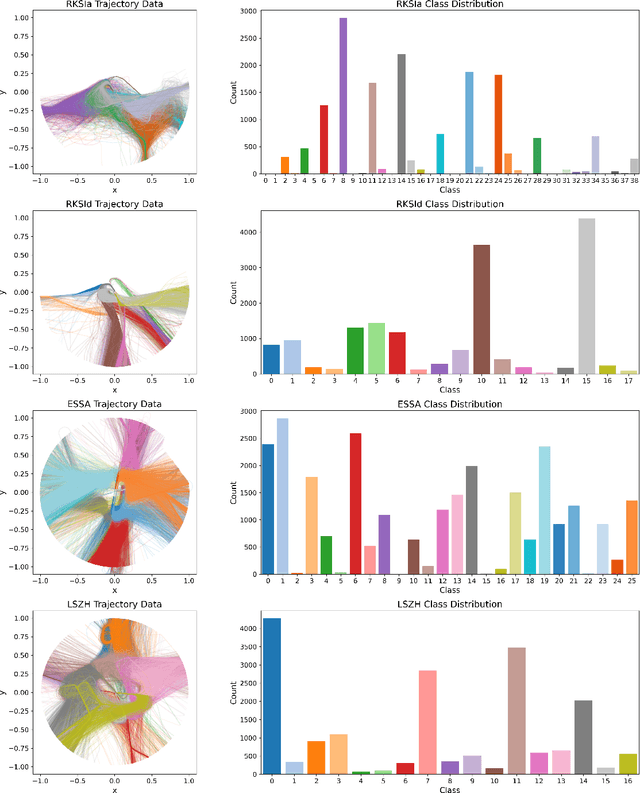

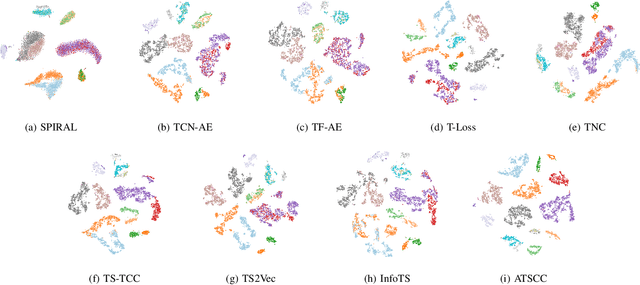

Abstract: Air traffic trajectory recognition has gained significant interest within the air traffic management community, particularly for fundamental tasks such as classification and clustering. This paper introduces Aircraft Trajectory Segmentation-based Contrastive Coding (ATSCC), a novel self-supervised time series representation learning framework designed to capture semantic information in air traffic trajectory data. The framework leverages the segmentable characteristic of trajectories and ensures consistency within the self-assigned segments. Extensive experiments were conducted on four datasets from three different airports, comparing the learned representations on downstream classification and clustering against other state-of-the-art representation learning techniques. The results show that ATSCC outperforms these methods by aligning with the labels defined by aeronautical procedures. ATSCC is adaptable to various airport configurations and scalable to incomplete trajectories. This research expands upon existing capabilities, achieving these improvements without predefined inputs such as airport configurations, maneuvering procedures, or labeled data.

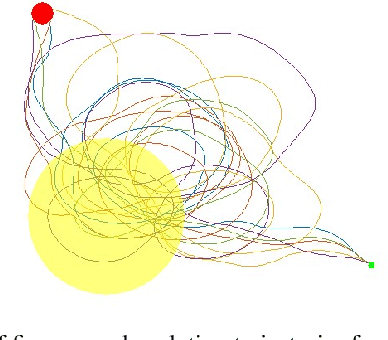

LiCS: Navigation using Learned-imitation on Cluttered Space

Jun 21, 2024

Abstract: In this letter, we propose a robust and fast navigation system for an unmanned ground vehicle (UGV) in narrow indoor environments using 2D LiDAR and odometry. We use behavior cloning with a Transformer neural network to learn the optimization-based baseline algorithm. We inject Gaussian noise during expert demonstration to increase the robustness of the learned policy. We evaluate the performance of LiCS in both simulation and hardware experiments. It outperforms all other baselines in terms of navigation performance and maintains robust performance even in highly cluttered environments. In the hardware experiments, LiCS maintains safe navigation at a maximum speed of $1.5\ \mathrm{m/s}$.
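The noise-injection idea in this abstract can be illustrated with a toy sketch. It assumes a one-dimensional state and a hypothetical proportional-controller expert (`expert_action`); neither comes from the paper, which uses 2D LiDAR, odometry, and an optimization-based expert. The sketch shows one common way to inject noise: the noisy action is executed so that the rollout visits off-nominal states, while the clean expert action is stored as the training label.

```python
import random


def expert_action(position, goal=0.0, gain=0.5):
    """Hypothetical expert: a proportional controller steering toward the goal."""
    return gain * (goal - position)


def collect_noisy_demonstrations(n_steps, noise_std, seed=0):
    """Roll out the expert with Gaussian noise added to the executed action.

    The stored label is the clean expert action, so a cloned policy sees
    how the expert recovers from perturbed states.
    """
    rng = random.Random(seed)
    position = 5.0  # assumed start state for this toy example
    dataset = []
    for _ in range(n_steps):
        label = expert_action(position)
        dataset.append((position, label))
        # Execute a perturbed action; the perturbation is not recorded as a label.
        executed = label + rng.gauss(0.0, noise_std)
        position += executed
    return dataset


demos = collect_noisy_demonstrations(n_steps=50, noise_std=0.2)
```

A behavior-cloning model would then be fit to the `(state, label)` pairs in `demos`; the Transformer policy described in the abstract is outside the scope of this sketch.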

Distilling Privileged Information for Dubins Traveling Salesman Problems with Neighborhoods

Apr 25, 2024

Abstract: This paper presents a novel learning approach for Dubins Traveling Salesman Problems (DTSP) with Neighborhoods (DTSPN) to quickly produce a tour of a non-holonomic vehicle passing through neighborhoods of given task points. The method involves two learning phases: initially, a model-free reinforcement learning approach leverages privileged information to distill knowledge from expert trajectories generated by the Lin-Kernighan heuristic (LKH) algorithm. Subsequently, a supervised learning phase trains an adaptation network to solve problems independently of privileged information. A parameter initialization technique using the demonstration data, applied before the first learning phase, was also devised to enhance training efficiency. The proposed learning method produces a solution about 50 times faster than LKH and substantially outperforms other imitation learning and RL with demonstration schemes, most of which fail to sense all the task points.

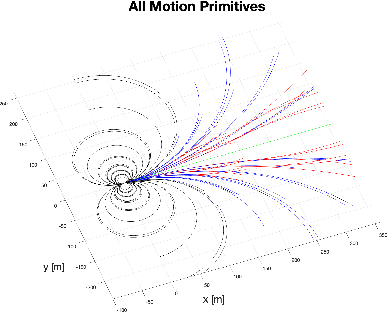

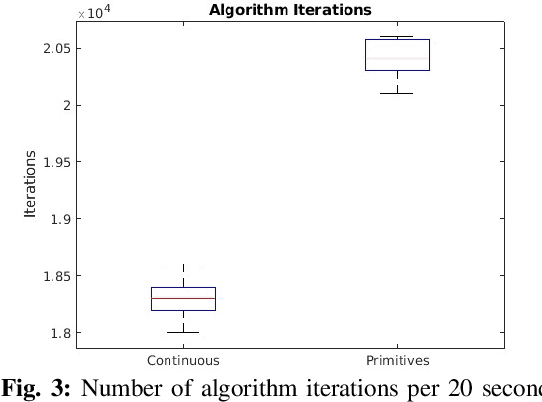

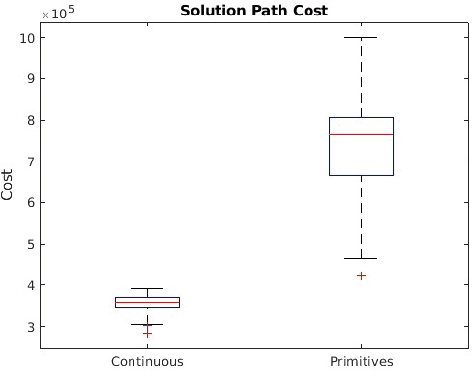

Path Planning in 3D with Motion Primitives for Wind Energy-Harvesting Fixed-Wing Aircraft

Nov 17, 2023

Abstract: In this work, a set of motion primitives is defined for use in an energy-aware motion planning problem. The motion primitives are defined as sequences of control inputs to a simplified four-DOF dynamics model and are used to replace the traditional continuous control space used in many sampling-based motion planners. The primitives are implemented in a Stable Sparse Rapidly Exploring Random Tree (SST) motion planner and compared to an identical planner using a continuous control space. The planner using primitives was found to run 11.0% faster but yielded solution paths that were on average worse with higher variance. Also, the solution path travel time is improved by about 50%. Using motion primitives for sampling spaces in SST can effectively reduce the run time of the algorithm, although at the cost of solution quality.

Computing Forward Reachable Sets for Nonlinear Adaptive Multirotor Controllers

Sep 16, 2022

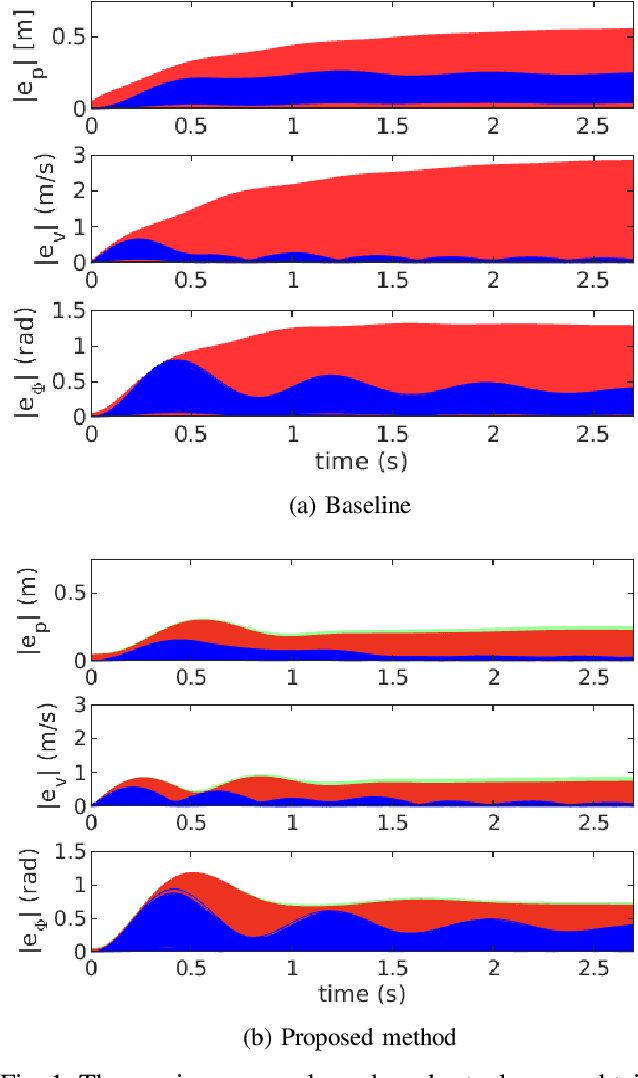

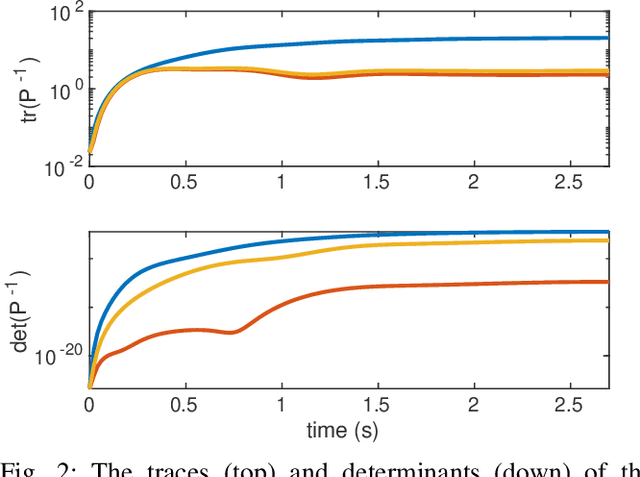

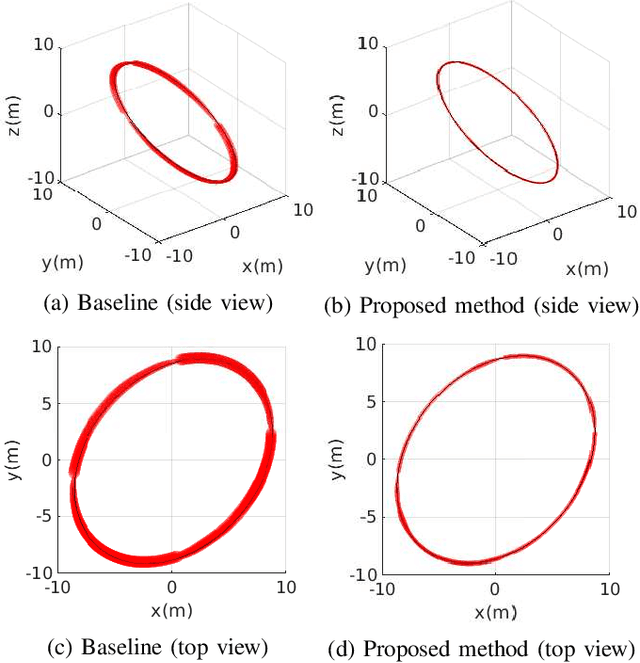

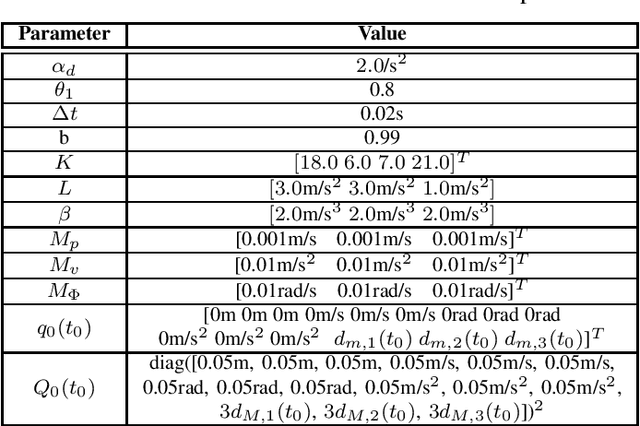

Abstract: In multirotor systems, guaranteeing safety while considering unknown disturbances is essential for robust trajectory planning. Computing the forward reachable set (FRS), the set of all possible states with bounded disturbances, can be a viable solution to find robust and collision-free trajectories. However, in many cases, the FRS is not calculated in real time and is too conservative to be used in actual applications. In this paper, we mitigate these problems by applying a nonlinear disturbance observer (NDOB) and an adaptive controller to the multirotor system. We formulate the FRS of the closed-loop system combined with the adaptive controller in augmented state space by exploiting the Hamilton-Jacobi reachability analysis and then present the ellipsoidal approximation in a closed-form expression to compute the small FRS in real time. Moreover, tighter disturbance bounds in the prediction horizon are inferred from the NDOB so that a much smaller FRS can be generated. Numerical examples validate the computational efficiency and the smaller scale of the proposed FRS compared to the baseline.

DS-K3DOM: 3-D Dynamic Occupancy Mapping with Kernel Inference and Dempster-Shafer Evidential Theory

Sep 16, 2022

Abstract: Occupancy mapping has been widely utilized to represent the surroundings for autonomous robots to perform tasks such as navigation and manipulation. While occupancy mapping in 2-D environments has been well studied, there have been few approaches suitable for 3-D dynamic occupancy mapping, which is essential for aerial robots. This paper presents a novel 3-D dynamic occupancy mapping algorithm called DS-K3DOM. We first establish a Bayesian method to sequentially update occupancy maps for a stream of measurements based on random finite set theory. Then, we approximate it with particles in the Dempster-Shafer domain to enable real-time computation. Moreover, the algorithm applies kernel-based inference with Dirichlet basic belief assignment to enable dense mapping from sparse measurements. The efficacy of the proposed algorithm is demonstrated through simulations and real experiments.
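As background on the Dempster-Shafer domain mentioned in this abstract, the following is a minimal sketch of Dempster's rule of combination for a single map cell. It is not the DS-K3DOM update, which the abstract describes only at a high level (particles, kernel inference, Dirichlet basic belief assignment). The two-hypothesis frame `{occupied, free}` and all mass values are assumptions chosen for illustration.

```python
from itertools import product


def combine(m1, m2):
    """Dempster's rule of combination for two mass functions.

    Each mass function maps a frozenset of hypotheses to its mass.
    Mass falling on empty intersections is treated as conflict and
    removed by renormalization.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    norm = 1.0 - conflict
    return {k: v / norm for k, v in combined.items()}


OCC = frozenset({"occupied"})
FREE = frozenset({"free"})
UNKNOWN = OCC | FREE  # mass on the whole frame expresses ignorance

# Assumed example masses for one cell: a weak prior and one measurement.
prior = {OCC: 0.2, FREE: 0.1, UNKNOWN: 0.7}
measurement = {OCC: 0.6, FREE: 0.0, UNKNOWN: 0.4}
posterior = combine(prior, measurement)
```

Unlike a single occupancy probability, the mass on `UNKNOWN` lets a cell distinguish "no evidence yet" from "conflicting evidence", which is the usual motivation for evidential maps.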

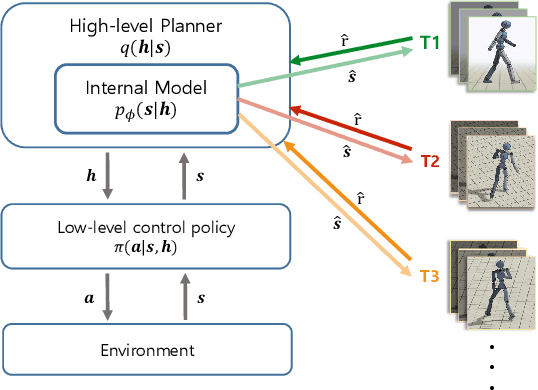

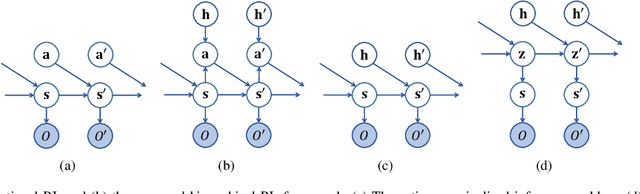

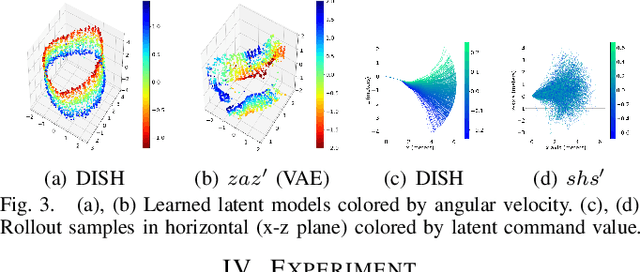

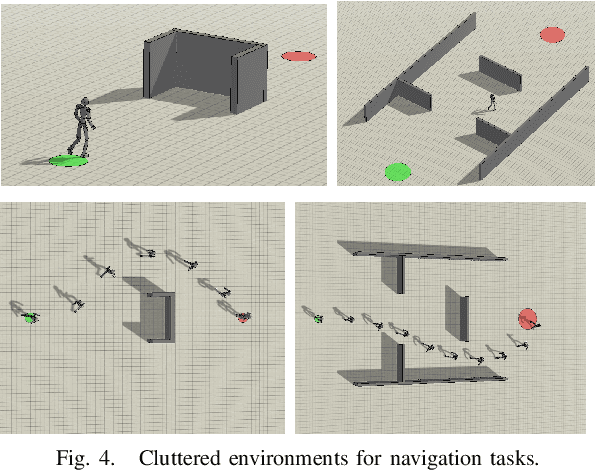

Distilling a Hierarchical Policy for Planning and Control via Representation and Reinforcement Learning

Nov 16, 2020

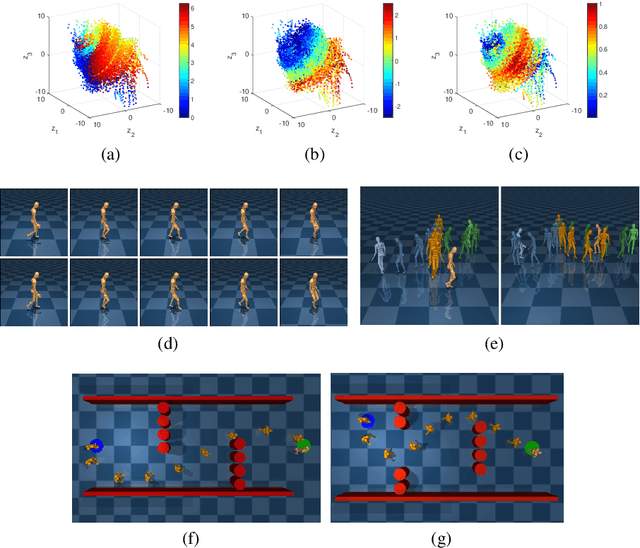

Abstract: We present a hierarchical planning and control framework that enables an agent to perform various tasks and adapt to a new task flexibly. Rather than learning an individual policy for each particular task, the proposed framework, DISH, distills a hierarchical policy from a set of tasks by representation and reinforcement learning. The framework is based on the idea of latent variable models that represent high-dimensional observations using low-dimensional latent variables. The resulting policy consists of two levels of hierarchy: (i) a planning module that reasons about a sequence of latent intentions that would lead to an optimistic future and (ii) a feedback control policy, shared across the tasks, that executes the inferred intention. Because the planning is performed in low-dimensional latent space, the learned policy can immediately be used to solve or adapt to new tasks without additional training. We demonstrate that the proposed framework can learn compact representations (3- and 1-dimensional latent states and commands for a humanoid with 197- and 36-dimensional state features and actions) while solving a small number of imitation tasks, and the resulting policy is directly applicable to other types of tasks, i.e., navigation in cluttered environments.

Online Gaussian Process State-Space Models: Learning and Planning for Partially Observable Dynamical Systems

Mar 14, 2019

Abstract: The Gaussian process state-space model (GPSSM) is a probabilistic dynamical system that represents unknown transition and/or measurement models as Gaussian processes (GPs). The majority of approaches to learning GPSSMs focus on handling a given batch of time series data. However, in most dynamical systems, data required for model learning arrives sequentially and accumulates over time. Storing all the data requires large amounts of memory, and using it for model learning can be computationally infeasible. To overcome this challenge, this paper develops an online inference method for learning the GPSSM (onlineGPSSM) that fuses stochastic variational inference (VI) and online VI. The proposed method can mitigate the computation time issue without catastrophic forgetting and supports adaptation to changes in a system and/or a real environment. Furthermore, we propose a GPSSM-based reinforcement learning (RL) framework for partially observable dynamical systems by combining onlineGPSSM with Bayesian filtering and trajectory optimization algorithms. Numerical examples are presented to demonstrate the applicability of the proposed method.

A Distributed ADMM Approach to Informative Trajectory Planning for Multi-Target Tracking

Jan 09, 2019

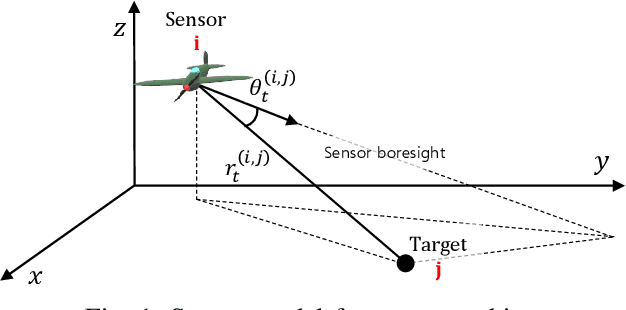

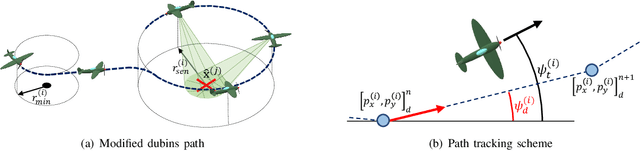

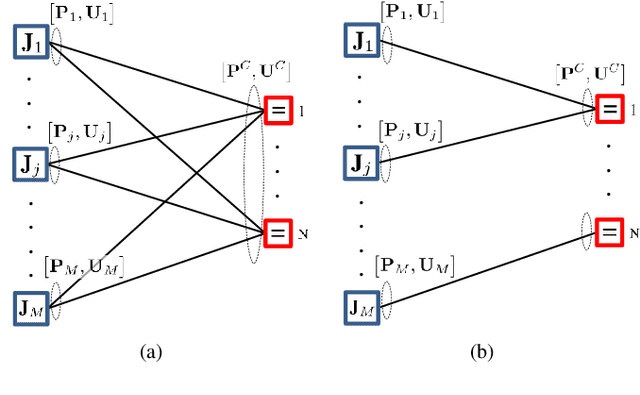

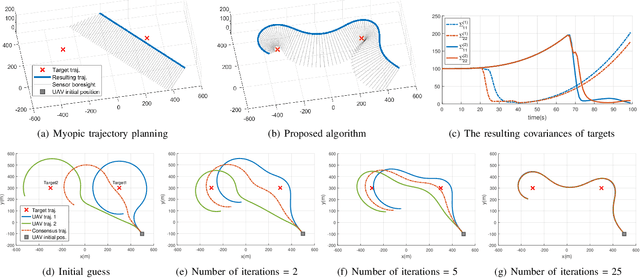

Abstract: This paper presents a distributed optimization method for informative trajectory planning in multi-target tracking problems. The purpose of such problems is to optimize a sequence of waypoints/control inputs of mobile sensors over a certain future time step to minimize the uncertainty of targets. The planning problem is reformulated as a distributed optimization problem that can be expressed in the form of a subproblem for each target. The subproblems are coupled using the distributed Alternating Direction Method of Multipliers (ADMM). This coupling not only enables the results of each subproblem to be reflected in the optimization process of the other subproblems, but also guides the results of the subproblems to converge to the same solution. In contrast to the existing approaches performing trajectory optimization after assigning tasks, the proposed algorithm does not require the design of a heuristic cost function for task assignment, and it can handle both trajectory optimization and task assignment in multiple target tracking problems simultaneously. In order to reduce the computation time of the algorithm, an edge-cutting method suitable for multiple-target tracking problems is proposed, as is a receding horizon control scheme for real-time implementation, which considers the computation time. Numerical examples are presented to demonstrate the applicability of the algorithm.
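The coupling described in this abstract, where per-target subproblems are driven to the same solution, can be illustrated with the textbook global-consensus form of ADMM. This sketch is an assumption-laden toy, not the paper's algorithm: each subproblem is a scalar quadratic $\tfrac{1}{2}(x_i - a_i)^2$ with made-up targets `a_i`, the averaging step is centralized rather than distributed over a graph, and no trajectory or task-assignment structure is modeled.

```python
def consensus_admm(targets, rho=1.0, iterations=100):
    """Global-consensus ADMM for minimizing sum_i 0.5 * (x - a_i)^2.

    Each subproblem i keeps a local copy x_i and a scaled dual u_i;
    the shared variable z pulls all local copies toward agreement.
    """
    n = len(targets)
    x = [0.0] * n
    u = [0.0] * n
    z = 0.0
    for _ in range(iterations):
        # Local step: closed-form minimizer of
        # 0.5 * (x_i - a_i)^2 + (rho / 2) * (x_i - z + u_i)^2.
        x = [(a + rho * (z - ui)) / (1.0 + rho) for a, ui in zip(targets, u)]
        # Consensus step: average of the local copies plus scaled duals.
        z = sum(xi + ui for xi, ui in zip(x, u)) / n
        # Dual step: accumulate each subproblem's disagreement with z.
        u = [ui + xi - z for ui, xi in zip(u, x)]
    return x, z


local_copies, agreed = consensus_admm([1.0, 4.0, 7.0])
```

For this toy objective the agreed value converges to the mean of the targets, and every local copy converges to the same value, which mirrors the "converge to the same solution" behavior the abstract attributes to the coupling.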

Adaptive Path-Integral Autoencoder: Representation Learning and Planning for Dynamical Systems

Jan 03, 2019

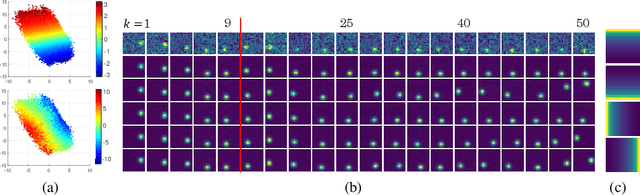

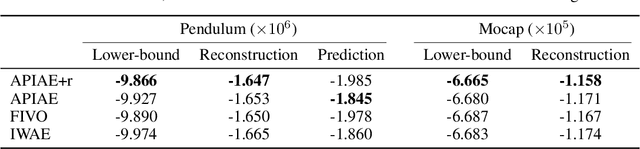

Abstract: We present a representation learning algorithm that learns a low-dimensional latent dynamical system from high-dimensional *sequential* raw data, e.g., video. The framework builds upon recent advances in amortized inference methods that use both an inference network and a refinement procedure to output samples from a variational distribution given an observation sequence, and takes advantage of the duality between control and inference to approximately solve the intractable inference problem using the path integral control approach. The learned dynamical model can be used to predict and plan future states; we also present an efficient planning method that exploits the learned low-dimensional latent dynamics. Numerical experiments show that the proposed path-integral control based variational inference method leads to tighter lower bounds in statistical model learning of sequential data. Supplementary video: https://youtu.be/xCp35crUoLQ
