Guillaume Dumas

Persistent Instability in LLM's Personality Measurements: Effects of Scale, Reasoning, and Conversation History

Aug 06, 2025

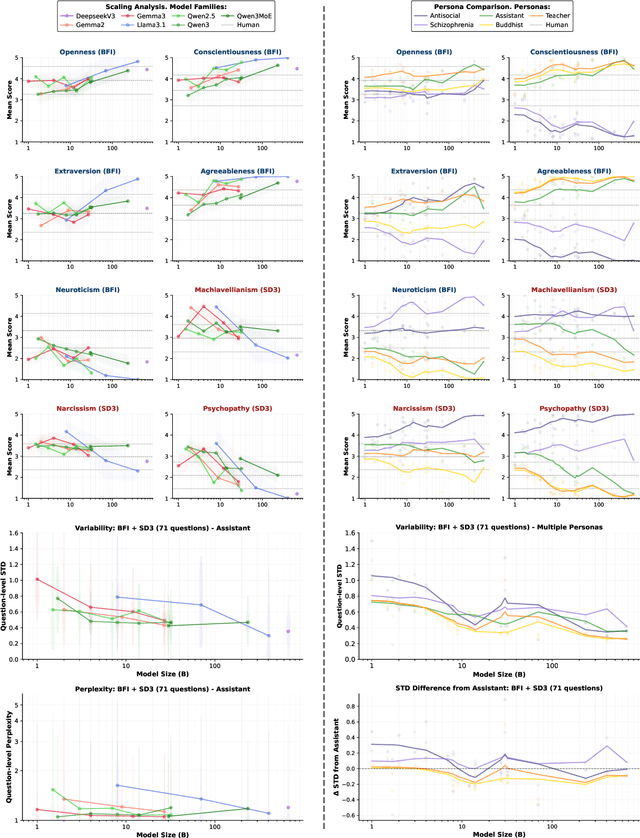

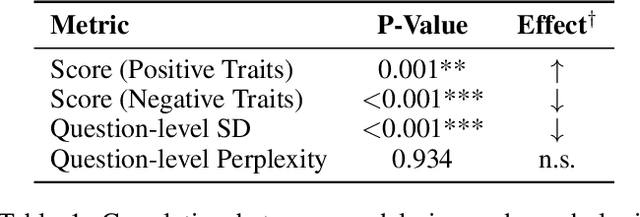

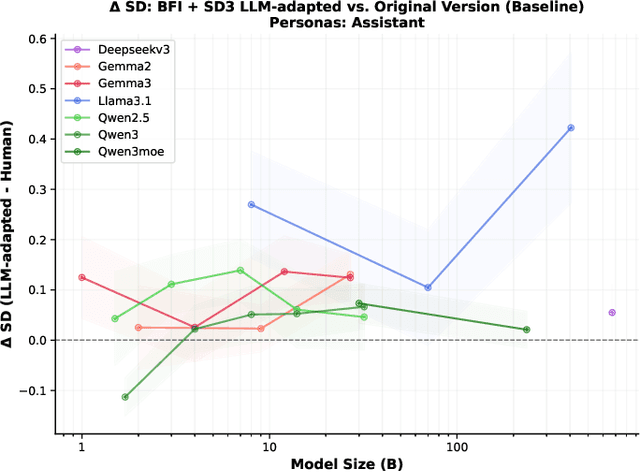

Abstract:Large language models require consistent behavioral patterns for safe deployment, yet their personality-like traits remain poorly understood. We present PERSIST (PERsonality Stability in Synthetic Text), a comprehensive evaluation framework testing 25+ open-source models (1B-671B parameters) across 500,000+ responses. Using traditional (BFI-44, SD3) and novel LLM-adapted personality instruments, we systematically vary question order, paraphrasing, personas, and reasoning modes. Our findings challenge fundamental deployment assumptions: (1) Even 400B+ models exhibit substantial response variability (SD > 0.4); (2) Minor prompt reordering alone shifts personality measurements by up to 20%; (3) Interventions expected to stabilize behavior, such as chain-of-thought reasoning, detailed persona instructions, and inclusion of conversation history, can paradoxically increase variability; (4) LLM-adapted instruments show equal instability to human-centric versions, confirming architectural rather than translational limitations. This persistent instability across scales and mitigation strategies suggests current LLMs lack the foundations for genuine behavioral consistency. For safety-critical applications requiring predictable behavior, these findings indicate that personality-based alignment strategies may be fundamentally inadequate.
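The abstract reports variability as a standard deviation over repeated measurements under varied question order. As a rough illustration only, the sketch below shuffles questionnaire items, collects ratings, and computes the SD of the mean score across orderings. The function name, the `ask` callable, and the default of 20 orderings are assumptions made for this example and are not taken from the PERSIST framework.

```python
import random
import statistics


def trait_sd_across_orderings(items, ask, n_orders=20, seed=0):
    """Standard deviation of a mean trait score over shuffled item orders.

    `ask` is a hypothetical callable: it receives the items in presentation
    order and returns a dict mapping each item to a numeric Likert rating.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(n_orders):
        order = list(items)
        rng.shuffle(order)  # vary only the presentation order
        ratings = ask(order)
        # Score each run as the mean rating over the fixed item set.
        scores.append(statistics.mean(ratings[item] for item in items))
    return statistics.stdev(scores)
```

A responder whose ratings ignore presentation order yields an SD of zero under this measure, so any non-zero value reflects order sensitivity (or sampling noise, if the responder is stochastic).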

Grokking Beyond the Euclidean Norm of Model Parameters

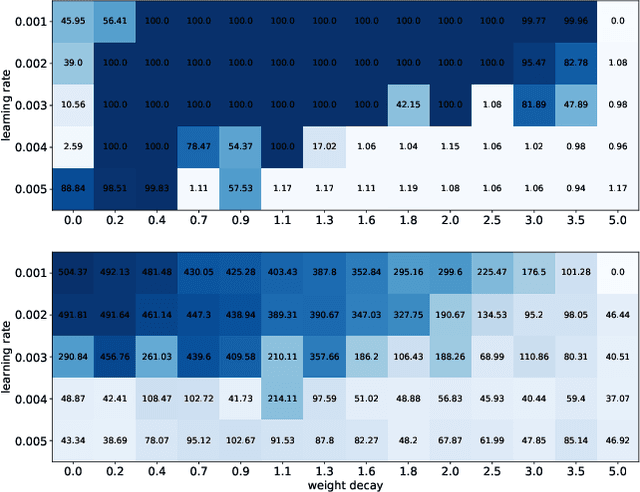

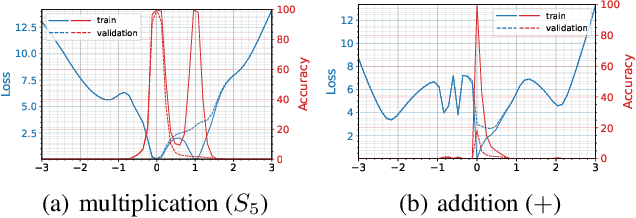

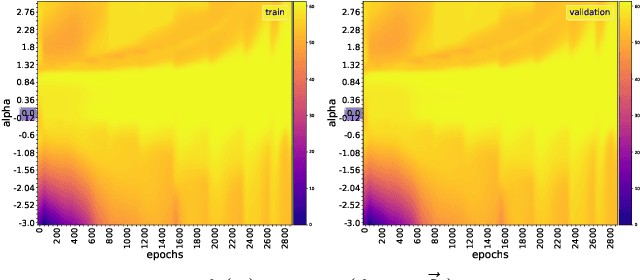

Jun 06, 2025

Abstract:Grokking refers to a delayed generalization following overfitting when optimizing artificial neural networks with gradient-based methods. In this work, we demonstrate that grokking can be induced by regularization, either explicit or implicit. More precisely, we show that when there exists a model with a property $P$ (e.g., sparse or low-rank weights) that generalizes on the problem of interest, gradient descent with a small but non-zero regularization of $P$ (e.g., $\ell_1$ or nuclear norm regularization) results in grokking. This extends previous work showing that small non-zero weight decay induces grokking. Moreover, our analysis shows that over-parameterization by adding depth makes it possible to grok or ungrok without explicitly using regularization, which is impossible in shallow cases. We further show that the $\ell_2$ norm is not a reliable proxy for generalization when the model is regularized toward a different property $P$, as the $\ell_2$ norm grows in many cases where no weight decay is used, but the model generalizes anyway. We also show that grokking can be amplified solely through data selection, with all other hyperparameters fixed.
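To make "gradient descent with a small but non-zero regularization of $P$" concrete, here is a minimal sketch of one subgradient step on a least-squares loss with an $\ell_1$ penalty (the sparsity case). It only illustrates the form of the update; it does not reproduce grokking. The function name, the linear model, and the default values of `lr` and `lam` are assumptions chosen for this example.

```python
def l1_regularized_step(w, xs, ys, lr=0.1, lam=1e-3):
    """One (sub)gradient step on mean squared error + lam * ||w||_1."""
    n = len(xs)
    grad = [0.0] * len(w)
    for x, y in zip(xs, ys):
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for j, xj in enumerate(x):
            grad[j] += 2.0 * err * xj / n  # gradient of the data-fit term
    # Subgradient of |w_j| is sign(w_j), taken as 0 at w_j == 0.
    sign = lambda v: (v > 0) - (v < 0)
    return [wi - lr * (g + lam * sign(wi)) for wi, g in zip(w, grad)]
```

With a small `lam`, the penalty barely changes early updates, which are dominated by the data-fit gradient; its effect accumulates only over many steps.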

Proceedings of 1st Workshop on Advancing Artificial Intelligence through Theory of Mind

Apr 28, 2025

Abstract:This volume includes a selection of papers presented at the Workshop on Advancing Artificial Intelligence through Theory of Mind, held at AAAI 2025 in Philadelphia, US, on 3 March 2025. The purpose of this volume is to provide an open-access, curated anthology for the ToM and AI research community.

Mapping of Subjective Accounts into Interpreted Clusters (MOSAIC): Topic Modelling and LLM applied to Stroboscopic Phenomenology

Feb 25, 2025

Abstract:Stroboscopic light stimulation (SLS) on closed eyes typically induces simple visual hallucinations (VHs), characterised by vivid, geometric and colourful patterns. A dataset of 862 sentences, extracted from 422 open subjective reports, was recently compiled as part of the Dreamachine programme (Collective Act, 2022), an immersive multisensory experience that combines SLS and spatial sound in a collective setting. Although open reports extend the range of reportable phenomenology, their analysis presents significant challenges, particularly in systematically identifying patterns. To address this challenge, we implemented a data-driven approach leveraging Large Language Models and Topic Modelling to uncover and interpret latent experiential topics directly from the Dreamachine's text-based reports. Our analysis confirmed the presence of simple VHs typically documented in scientific studies of SLS, while also revealing experiences of altered states of consciousness and complex hallucinations. Building on these findings, our computational approach expands the systematic study of subjective experience by enabling data-driven analyses of open-ended phenomenological reports, capturing experiences not readily identified through standard questionnaires. By revealing rich and multifaceted aspects of experiences, our study broadens our understanding of stroboscopically-induced phenomena while highlighting the potential of Natural Language Processing and Large Language Models in the emerging field of computational (neuro)phenomenology. More generally, this approach provides a practically applicable methodology for uncovering subtle hidden patterns of subjective experience across diverse research domains.

Lost in Translation: The Algorithmic Gap Between LMs and the Brain

Jul 05, 2024

Abstract:Language Models (LMs) have achieved impressive performance on various linguistic tasks, but their relationship to human language processing in the brain remains unclear. This paper examines the gaps and overlaps between LMs and the brain at different levels of analysis, emphasizing the importance of looking beyond input-output behavior to examine and compare the internal processes of these systems. We discuss how insights from neuroscience, such as sparsity, modularity, internal states, and interactive learning, can inform the development of more biologically plausible language models. Furthermore, we explore the role of scaling laws in bridging the gap between LMs and human cognition, highlighting the need for efficiency constraints analogous to those in biological systems. By developing LMs that more closely mimic brain function, we aim to advance both artificial intelligence and our understanding of human cognition.

GOKU-UI: Ubiquitous Inference through Attention and Multiple Shooting for Continuous-time Generative Models

Jul 11, 2023

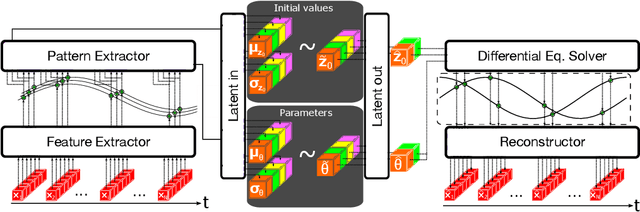

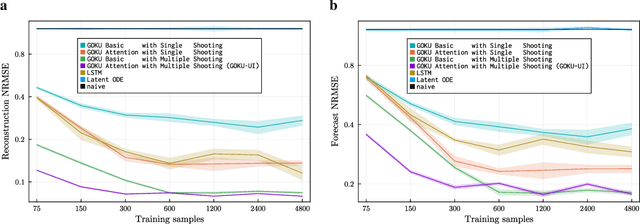

Abstract:Scientific Machine Learning (SciML) is a burgeoning field that synergistically combines domain-aware and interpretable models with agnostic machine learning techniques. In this work, we introduce GOKU-UI, an evolution of the SciML generative model GOKU-nets. The GOKU-UI broadens the original model's spectrum to incorporate other classes of differential equations, such as Stochastic Differential Equations (SDEs), and integrates a distributed, i.e. ubiquitous, inference through attention mechanisms and a novel multiple shooting training strategy in the latent space. These enhancements have led to a significant increase in its performance in both reconstruction and forecast tasks, as demonstrated by our evaluation of simulated and empirical data. Specifically, GOKU-UI outperformed all baseline models on synthetic datasets even with a training set 32-fold smaller, underscoring its remarkable data efficiency. Furthermore, when applied to empirical human brain data, while incorporating stochastic Stuart-Landau oscillators into its dynamical core, it not only surpassed state-of-the-art baseline methods in the reconstruction task, but also demonstrated better prediction of future brain activity up to 12 seconds ahead. By training GOKU-UI on resting-state fMRI data, we encoded whole-brain dynamics into a latent representation, learning an effective low-dimensional dynamical system model that could offer insights into brain functionality and open avenues for practical applications such as mental state or psychiatric condition classification. Ultimately, our research provides further impetus for the field of Scientific Machine Learning, showcasing the potential for advancements when established scientific insights are interwoven with modern machine learning.

Predicting Grokking Long Before it Happens: A look into the loss landscape of models which grok

Jun 23, 2023

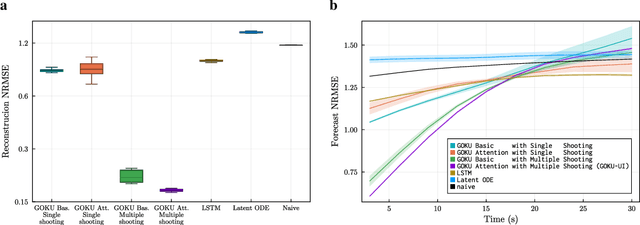

Abstract:This paper focuses on predicting the occurrence of grokking in neural networks, a phenomenon in which perfect generalization emerges long after signs of overfitting or memorization are observed. It has been reported that grokking can only be observed with certain hyper-parameters. This makes it critical to identify the parameters that lead to grokking. However, since grokking occurs after a large number of epochs, searching for the hyper-parameters that lead to it is time-consuming. In this paper, we propose a low-cost method to predict grokking without training for a large number of epochs. In essence, by studying the learning curve of the first few epochs, we show that one can predict whether grokking will occur later on. Specifically, if certain oscillations occur in the early epochs, one can expect grokking to occur if the model is trained for a much longer period of time. We propose using the spectral signature of a learning curve derived by applying the Fourier transform to quantify the amplitude of low-frequency components to detect the presence of such oscillations. We also present additional experiments aimed at explaining the cause of these oscillations and characterizing the loss landscape.
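The spectral-signature idea can be sketched as follows: take the Fourier transform of an early learning curve and measure how much of the amplitude sits in the lowest frequencies. This is an illustration under stated assumptions, not the paper's exact procedure. The function name, the cutoff `n_low`, and the choice to normalize by total non-DC amplitude are all hypothetical. A naive DFT is used to keep the example dependency-free.

```python
import cmath
import math


def low_frequency_fraction(curve, n_low=5):
    """Share of non-DC spectral amplitude held by the n_low lowest frequencies."""
    n = len(curve)
    mean = sum(curve) / n
    centered = [x - mean for x in curve]  # remove the constant (DC) component
    amps = []
    for k in range(1, n // 2 + 1):  # positive frequencies only
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        amps.append(abs(coeff))
    total = sum(amps)
    if total == 0:
        return 0.0  # flat curve: no oscillation at any frequency
    return sum(amps[:n_low]) / total
```

A value near 1 indicates that slow oscillations dominate the curve; any threshold for flagging a run as likely to grok would have to be calibrated empirically.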

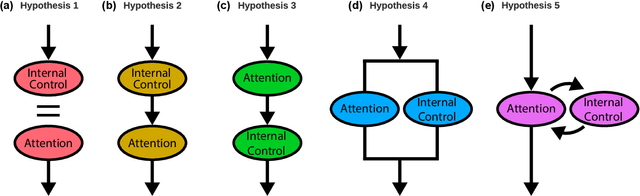

Attention Schema in Neural Agents

Jun 01, 2023

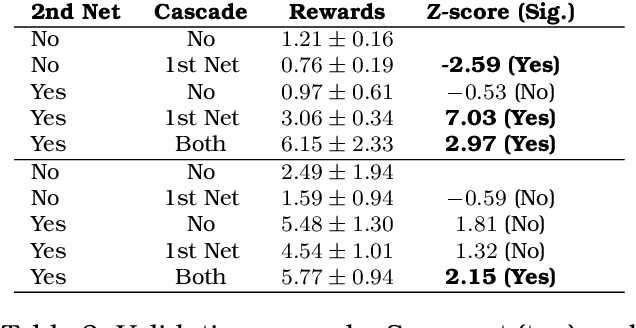

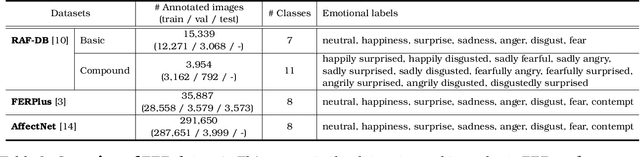

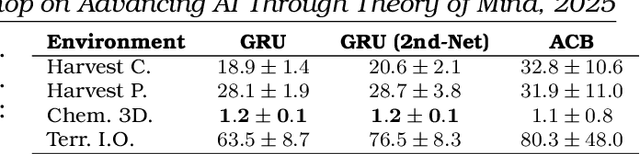

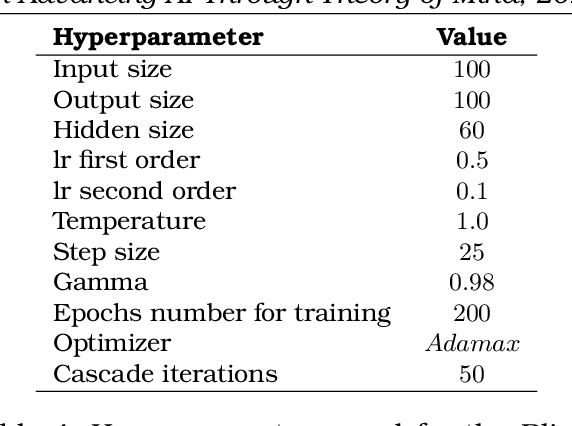

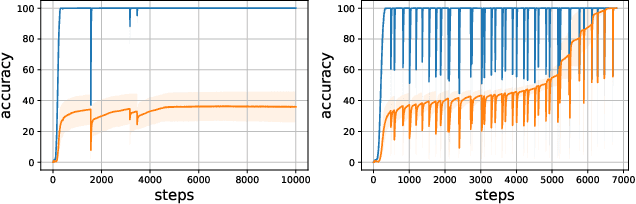

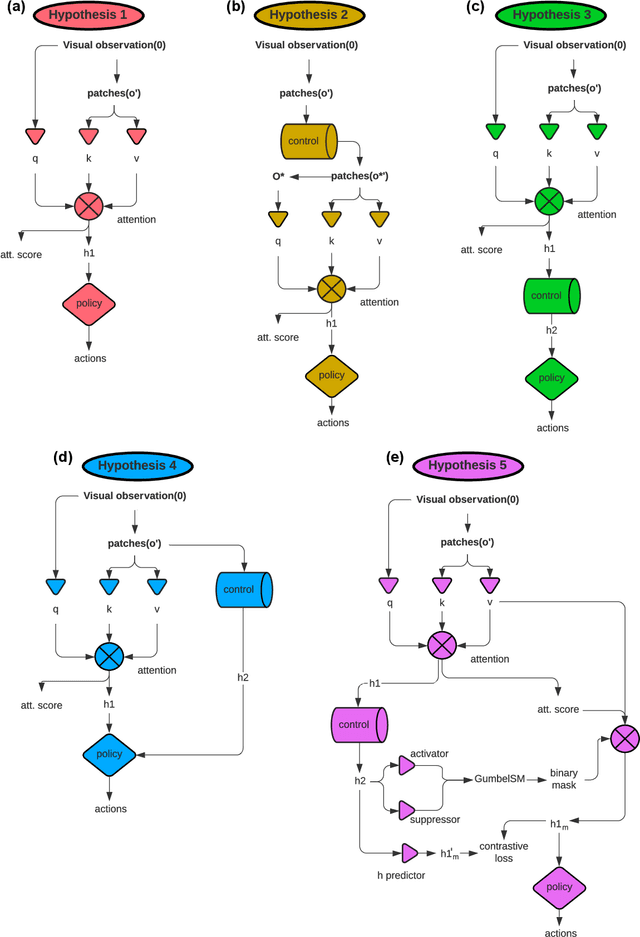

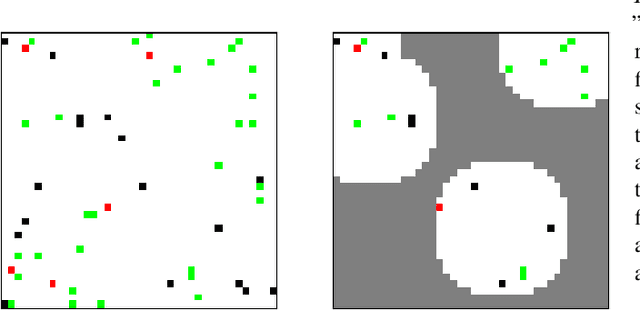

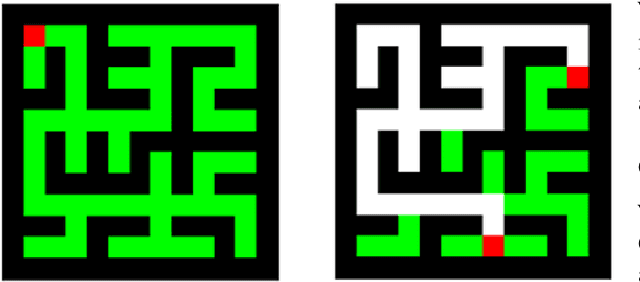

Abstract:Attention has become a common ingredient in deep learning architectures. It adds a dynamical selection of information on top of the static selection of information supported by weights. In the same way, we can imagine a higher-order informational filter built on top of attention: an Attention Schema (AS), namely, a descriptive and predictive model of attention. In cognitive neuroscience, Attention Schema Theory (AST) supports this idea of distinguishing attention from AS. A strong prediction of this theory is that an agent can use its own AS to also infer the states of other agents' attention and consequently enhance coordination with other agents. As such, multi-agent reinforcement learning would be an ideal setting to experimentally test the validity of AST. We explore different ways in which attention and AS interact with each other. Our preliminary results indicate that agents that implement the AS as a recurrent internal control achieve the best performance. In general, these exploratory experiments suggest that equipping artificial agents with a model of attention can enhance their social intelligence.

Sources of Richness and Ineffability for Phenomenally Conscious States

Mar 01, 2023

Abstract:Conscious states (states that there is something it is like to be in) seem both rich or full of detail, and ineffable or hard to fully describe or recall. The problem of ineffability, in particular, is a longstanding issue in philosophy that partly motivates the explanatory gap: the belief that consciousness cannot be reduced to underlying physical processes. Here, we provide an information theoretic dynamical systems perspective on the richness and ineffability of consciousness. In our framework, the richness of conscious experience corresponds to the amount of information in a conscious state and ineffability corresponds to the amount of information lost at different stages of processing. We describe how attractor dynamics in working memory would induce impoverished recollections of our original experiences, how the discrete symbolic nature of language is insufficient for describing the rich and high-dimensional structure of experiences, and how similarity in the cognitive function of two individuals relates to improved communicability of their experiences to each other. While our model may not settle all questions relating to the explanatory gap, it makes progress toward a fully physicalist explanation of the richness and ineffability of conscious experience: two important aspects that seem to be part of what makes qualitative character so puzzling.

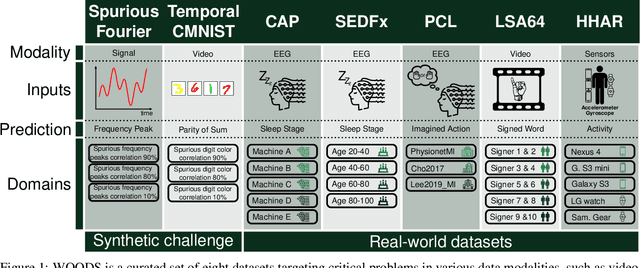

WOODS: Benchmarks for Out-of-Distribution Generalization in Time Series Tasks

Mar 18, 2022

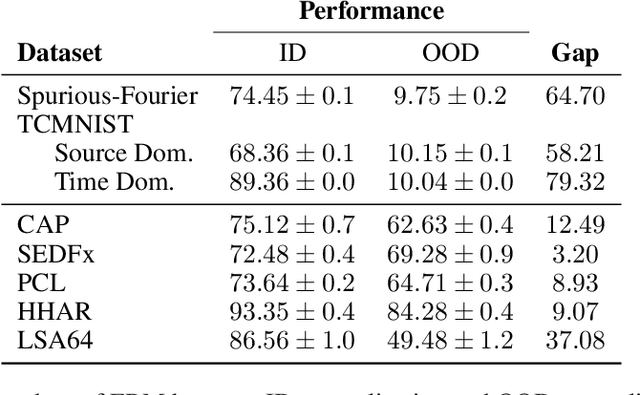

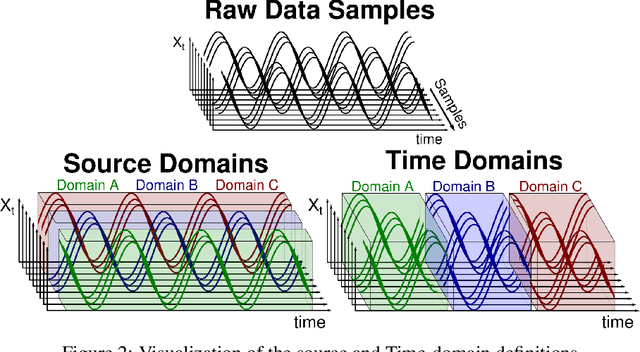

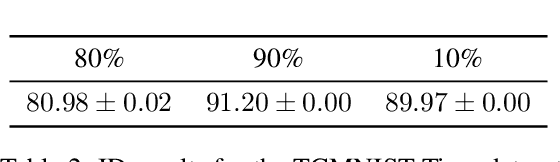

Abstract:Machine learning models often fail to generalize well under distributional shifts. Understanding and overcoming these failures have led to a research field of Out-of-Distribution (OOD) generalization. Despite being extensively studied for static computer vision tasks, OOD generalization has been underexplored for time series tasks. To shine light on this gap, we present WOODS: eight challenging open-source time series benchmarks covering a diverse range of data modalities, such as videos, brain recordings, and sensor signals. We revise the existing OOD generalization algorithms for time series tasks and evaluate them using our systematic framework. Our experiments show substantial room for improvement for empirical risk minimization and OOD generalization algorithms on our datasets, thus underscoring the new challenges posed by time series tasks. Code and documentation are available at https://woods-benchmarks.github.io.
