Gregory Cohen

Optical Linear Systems Framework for Event Sensing and Computational Neuromorphic Imaging

Jan 20, 2026

Abstract:Event vision sensors (neuromorphic cameras) output sparse, asynchronous ON/OFF events triggered by log-intensity threshold crossings, enabling microsecond-scale sensing with high dynamic range and low data bandwidth. As a nonlinear system, this event representation does not readily integrate with the linear forward models that underpin most computational imaging and optical system design. We present a physics-grounded processing pipeline that maps event streams to estimates of per-pixel log-intensity and intensity derivatives, and embeds these measurements in a dynamic linear systems model with a time-varying point spread function. This enables inverse filtering directly from event data, using frequency-domain Wiener deconvolution with a known (or parameterised) dynamic transfer function. We validate the approach in simulation for single and overlapping point sources under modulated defocus, and on real event data from a tunable-focus telescope imaging a star field, demonstrating source localisation and separability. The proposed framework provides a practical bridge between event sensing and model-based computational imaging for dynamic optical systems.
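For readers unfamiliar with the inverse-filtering step named in the abstract above, the following is a minimal sketch of textbook frequency-domain Wiener deconvolution in one dimension. It is not the paper's pipeline: it assumes a static, known point spread function and a constant noise-to-signal ratio, whereas the paper works with a time-varying transfer function and event-derived estimates. The names `dft`, `wiener_deconvolve`, `circular_convolve` and the parameter `nsr` are hypothetical and introduced only for illustration.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (fine for short signals)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[k] * cmath.exp(sign * 2j * cmath.pi * k * m / n) for k in range(n))
        for m in range(n)
    ]
    return [v / n for v in out] if inverse else out

def circular_convolve(x, h):
    """Circular convolution, used here as the linear forward (blur) model."""
    n = len(x)
    return [sum(x[k] * h[(m - k) % n] for k in range(n)) for m in range(n)]

def wiener_deconvolve(measured, psf, nsr):
    """Estimate the source as X = conj(H) * Y / (|H|^2 + nsr).

    nsr is an assumed constant noise-to-signal power ratio; it keeps the
    filter bounded at frequencies where the transfer function H is near zero.
    """
    Y = dft(measured)
    H = dft(psf)
    X = [h.conjugate() * y / (abs(h) ** 2 + nsr) for h, y in zip(H, Y)]
    return [v.real for v in dft(X, inverse=True)]

# Synthetic example: a single point source blurred by a small symmetric PSF.
n = 32
source = [0.0] * n
source[10] = 1.0
psf = [0.0] * n
psf[0], psf[1], psf[-1] = 0.5, 0.25, 0.25

blurred = circular_convolve(source, psf)
restored = wiener_deconvolve(blurred, psf, nsr=1e-3)
peak = max(range(n), key=lambda i: restored[i])
```

In this toy case the restored signal peaks at the true source position and is sharper than the blurred measurement. The regularisation term `nsr` trades sharpness against noise amplification.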

Real-time CARFAC Cochlea Model Acceleration on FPGA for Underwater Acoustic Sensing Systems

Aug 11, 2025

Abstract:This paper presents a real-time, energy-efficient embedded system implementing an array of Cascade of Asymmetric Resonators with Fast-Acting Compression (CARFAC) cochlea models for underwater sound analysis. Built on the AMD Kria KV260 System-on-Module (SoM), the system integrates a Rust-based software framework on the processor for real-time interfacing and synchronization with multiple hydrophone inputs, and a hardware-accelerated implementation of the CARFAC models on a Field-Programmable Gate Array (FPGA) for real-time sound pre-processing. Compared to prior work, the CARFAC accelerator achieves improved scalability and processing speed while reducing resource usage through optimized time-multiplexing, pipelined design, and elimination of costly division circuits. Experimental results demonstrate 13.5% hardware utilization for a single 64-channel CARFAC instance and a whole board power consumption of 3.11 W when processing a 256 kHz input signal in real time.

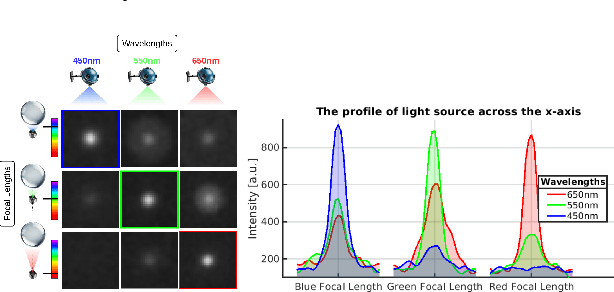

Seeing like a Cephalopod: Colour Vision with a Monochrome Event Camera

Apr 15, 2025

Abstract:Cephalopods exhibit unique colour discrimination capabilities despite having one type of photoreceptor, relying instead on chromatic aberration induced by their ocular optics and pupil shapes to perceive spectral information. We took inspiration from this biological mechanism to design a spectral imaging system that combines a ball lens with an event-based camera. Our approach relies on a motorised system that shifts the focal position, mirroring the adaptive lens motion in cephalopods. This approach has enabled us to achieve wavelength-dependent focusing across the visible light and near-infrared spectrum, making the event camera a spectral sensor. We characterise chromatic aberration effects, using both event-based and conventional frame-based sensors, validating the effectiveness of bio-inspired spectral discrimination both in simulation and in a real setup as well as assessing the spectral discrimination performance. Our proposed approach provides a robust spectral sensing capability without conventional colour filters or computational demosaicing. This approach opens new pathways toward spectral sensing systems inspired by nature's evolutionary solutions. Code and analysis are available at: https://samiarja.github.io/neuromorphic_octopus_eye/

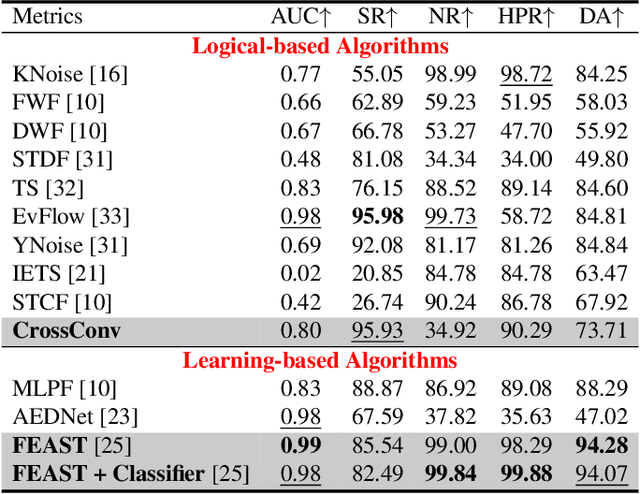

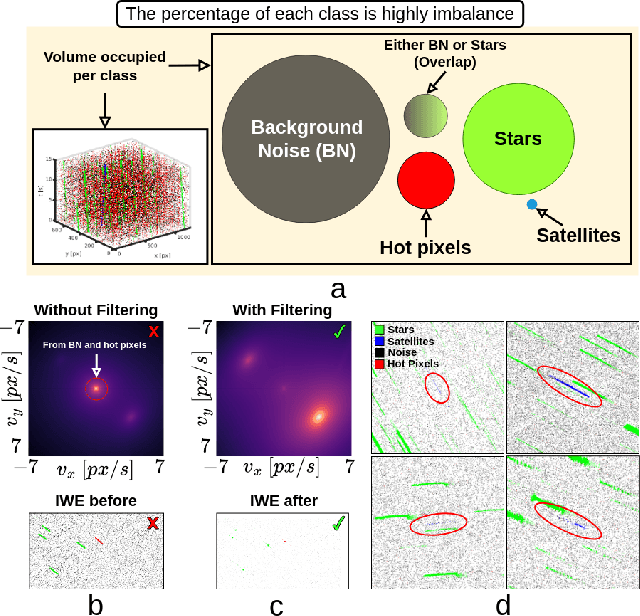

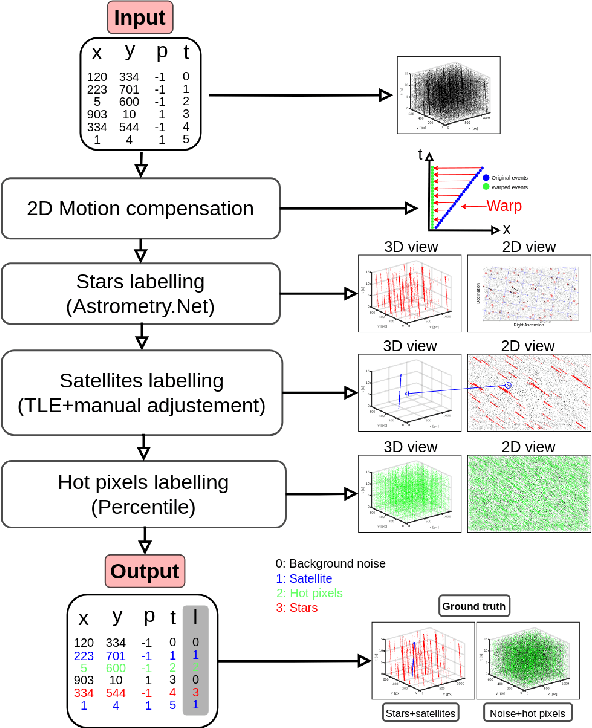

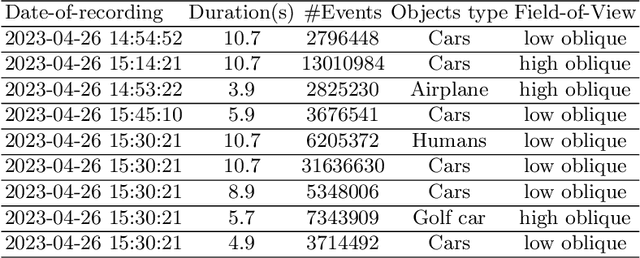

Noise Filtering Benchmark for Neuromorphic Satellites Observations

Nov 18, 2024

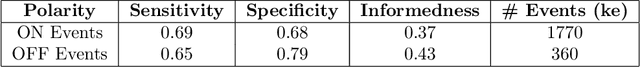

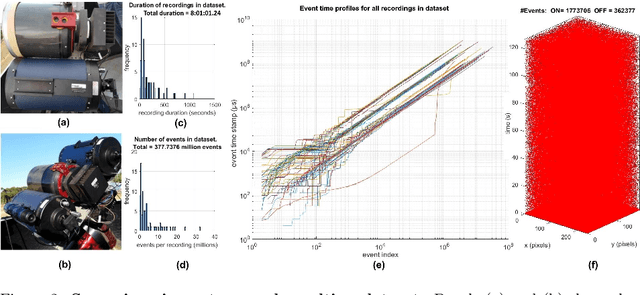

Abstract:Event cameras capture sparse, asynchronous brightness changes which offer high temporal resolution, high dynamic range, low power consumption, and sparse data output. These advantages make them ideal for Space Situational Awareness, particularly in detecting resident space objects moving within a telescope's field of view. However, the output from event cameras often includes substantial background activity noise, which is known to be more prevalent in low-light conditions. This noise can overwhelm the sparse events generated by satellite signals, making detection and tracking more challenging. Existing noise-filtering algorithms struggle in these scenarios because they are typically designed for denser scenes, where losing some signal is acceptable. This limitation hinders the application of event cameras in complex, real-world environments where signals are extremely sparse. In this paper, we propose new event-driven noise-filtering algorithms specifically designed for very sparse scenes. We categorise the algorithms into logical-based and learning-based approaches and benchmark their performance against 11 state-of-the-art noise-filtering algorithms, evaluating how effectively they remove noise and hot pixels while preserving the signal. Their performance was quantified by measuring signal retention and noise removal accuracy, with results reported using ROC curves across the parameter space. Additionally, we introduce a new high-resolution satellite dataset with ground truth from a real-world platform under various noise conditions, which we have made publicly available. Code, dataset, and trained weights are available at https://github.com/samiarja/dvs_sparse_filter

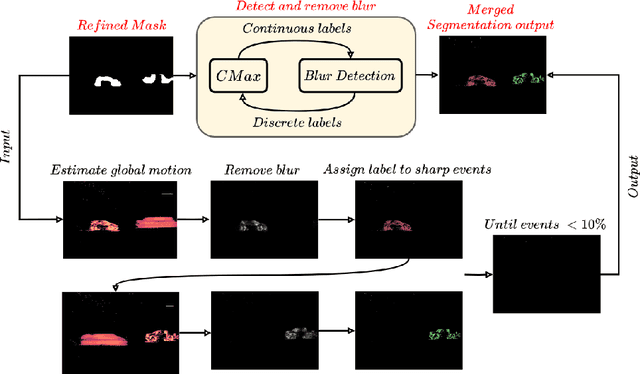

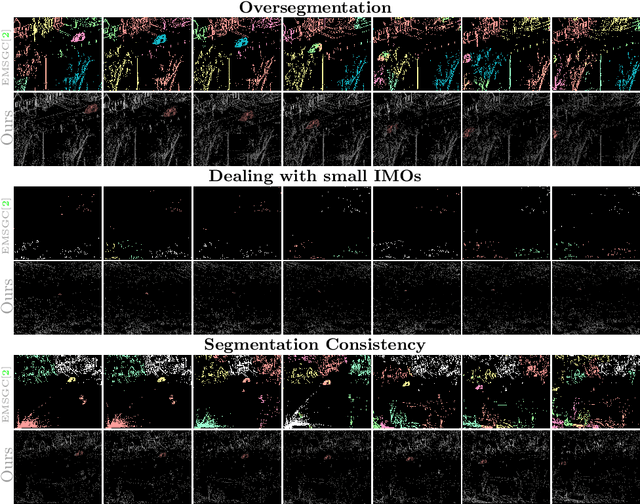

Unsupervised Motion Segmentation for Neuromorphic Aerial Surveillance

May 24, 2024

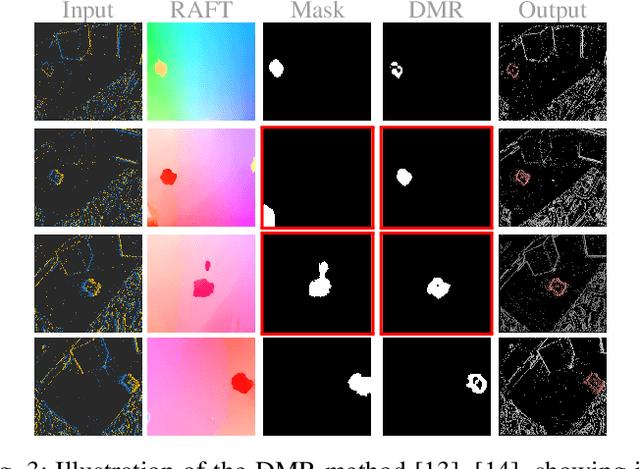

Abstract:Achieving optimal performance with frame-based vision sensors on aerial platforms poses a significant challenge due to the fundamental tradeoffs between bandwidth and latency. Event cameras, which draw inspiration from biological vision systems, present a promising alternative due to their exceptional temporal resolution, superior dynamic range, and minimal power requirements. Due to these properties, they are well-suited for processing and segmenting fast motions that require rapid reactions. However, previous methods for event-based motion segmentation encountered limitations, such as the need for per-scene parameter tuning or manual labelling to achieve satisfactory results. To overcome these issues, our proposed method leverages features from self-supervised transformers on both event data and optical flow information, eliminating the need for human annotations and reducing the parameter tuning problem. In this paper, we use an event camera with HD resolution onboard a highly dynamic aerial platform in an urban setting. We conduct extensive evaluations of our framework across multiple datasets, demonstrating state-of-the-art performance compared to existing works. Our method can effectively handle various types of motion and an arbitrary number of moving objects. Code and dataset are available at: https://samiarja.github.io/evairborne/

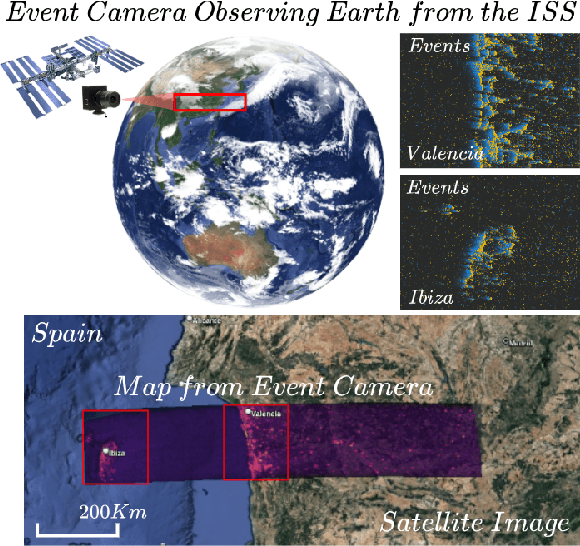

Density Invariant Contrast Maximization for Neuromorphic Earth Observations

May 03, 2023

Abstract:Contrast maximization (CMax) techniques are widely used in event-based vision systems to estimate the motion parameters of the camera and generate high-contrast images. However, these techniques are noise-intolerant and suffer from the multiple extrema problem which arises when the scene contains more noisy events than structure, causing the contrast to be higher at multiple locations. This makes the task of estimating the camera motion extremely challenging, which is a problem for neuromorphic earth observation, because, without a proper estimation of the motion parameters, it is not possible to generate a map with high contrast, causing important details to be lost. Similar methods that use CMax addressed this problem by changing or augmenting the objective function to enable it to converge to the correct motion parameters. Our proposed solution overcomes the multiple extrema and noise-intolerance problems by correcting the warped event before calculating the contrast and offers the following advantages: it does not depend on the event data, it does not require a prior about the camera motion, and keeps the rest of the CMax pipeline unchanged. This is to ensure that the contrast is only high around the correct motion parameters. Our approach enables the creation of better motion-compensated maps through an analytical compensation technique using a novel dataset from the International Space Station (ISS). Code is available at https://github.com/neuromorphicsystems/event_warping
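As background for the abstract above, the baseline CMax idea can be sketched as follows: warp events back to a reference time along a candidate motion, accumulate them into an image, and score the candidate by the image variance. This is a simplified one-dimensional illustration under assumed noise-free data and a grid search over candidates. It does not include the density-invariant correction the paper proposes, and the names `warp_events`, `contrast` and `best_velocity` are hypothetical.

```python
from statistics import pvariance

def warp_events(events, velocity, t_ref=0.0):
    """Move each event (x, t) back to time t_ref along a candidate 1-D velocity."""
    return [x - velocity * (t - t_ref) for x, t in events]

def contrast(events, velocity, n_bins):
    """Variance of the image of warped events, a common CMax objective."""
    image = [0] * n_bins
    for xw in warp_events(events, velocity):
        b = round(xw)
        if 0 <= b < n_bins:
            image[b] += 1
    return pvariance(image)

def best_velocity(events, candidates, n_bins):
    """Grid search: pick the candidate velocity giving the sharpest image."""
    return max(candidates, key=lambda v: contrast(events, v, n_bins))

# Synthetic, noise-free example: two edges starting at x = 5 and x = 12,
# both moving at 2 pixels per unit time.
true_v = 2.0
times = [i * 0.25 for i in range(20)]
events = [(x0 + true_v * t, t) for x0 in (5, 12) for t in times]

candidates = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
estimate = best_velocity(events, candidates, n_bins=64)
```

With the correct velocity all events from each edge collapse into a single bin, so the variance is highest there. When noise events dominate, as the abstract describes, this simple objective can develop additional maxima at incorrect velocities.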

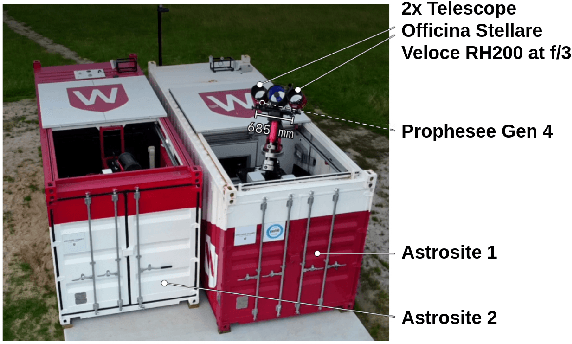

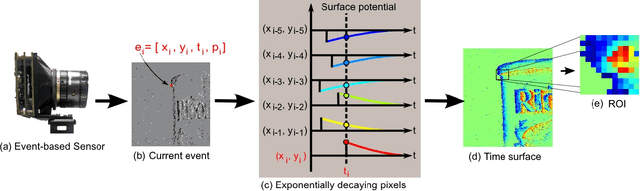

Astrometric Calibration and Source Characterisation of the Latest Generation Neuromorphic Event-based Cameras for Space Imaging

Nov 17, 2022

Abstract:As an emerging approach to space situational awareness and space imaging, the practical use of an event-based camera in space imaging for precise source analysis is still in its infancy. The nature of event-based space imaging and data collection needs to be further explored to develop more effective event-based space image systems and advance the capabilities of event-based tracking systems with improved target measurement models. Moreover, for event measurements to be meaningful, a framework must be investigated for event-based camera calibration to project events from pixel array coordinates in the image plane to coordinates in a target resident space object's reference frame. In this paper, the traditional techniques of conventional astronomy are reconsidered to properly utilise the event-based camera for space imaging and space situational awareness. This paper presents the techniques and systems used for calibrating an event-based camera for reliable and accurate measurement acquisition. These techniques are vital in building event-based space imaging systems capable of real-world space situational awareness tasks. By calibrating sources detected using the event-based camera, the spatio-temporal characteristics of detected sources or 'event sources' can be related to the photometric characteristics of the underlying astrophysical objects. Finally, these characteristics are analysed to establish a foundation for principled processing and observing techniques which appropriately exploit the capabilities of the event-based camera.

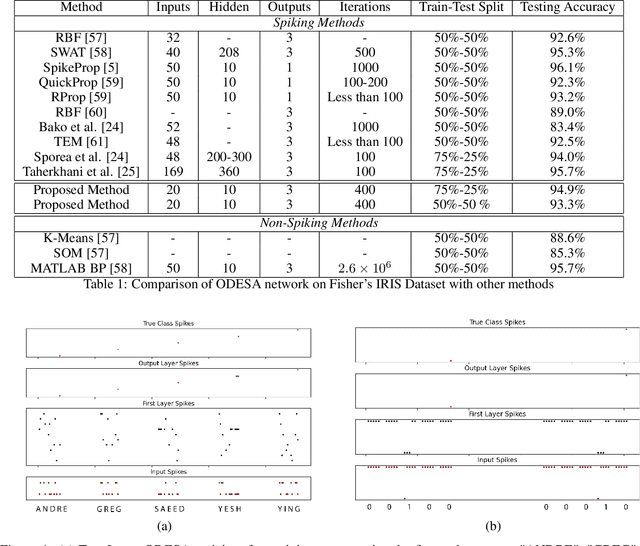

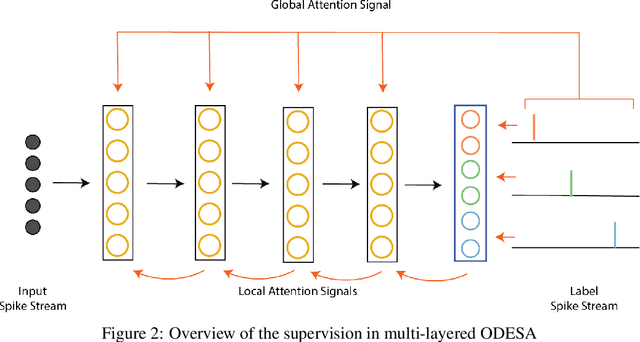

An optimised deep spiking neural network architecture without gradients

Oct 12, 2021

Abstract:We present an end-to-end trainable modular event-driven neural architecture that uses local synaptic and threshold adaptation rules to perform transformations between arbitrary spatio-temporal spike patterns. The architecture represents a highly abstracted model of existing Spiking Neural Network (SNN) architectures. The proposed Optimized Deep Event-driven Spiking neural network Architecture (ODESA) can simultaneously learn hierarchical spatio-temporal features at multiple arbitrary time scales. ODESA performs online learning without the use of error back-propagation or the calculation of gradients. Through the use of simple local adaptive selection thresholds at each node, the network rapidly learns to appropriately allocate its neuronal resources at each layer for any given problem without using a real-valued error measure. These adaptive selection thresholds are the central feature of ODESA, ensuring network stability and remarkable robustness to noise as well as to the selection of initial system parameters. Network activations are inherently sparse due to a hard Winner-Take-All (WTA) constraint at each layer. We evaluate the architecture on existing spatio-temporal datasets, including the spike-encoded IRIS and TIDIGITS datasets, as well as a novel set of tasks based on International Morse Code that we created. These tests demonstrate the hierarchical spatio-temporal learning capabilities of ODESA. Through these tests, we demonstrate ODESA can optimally solve practical and highly challenging hierarchical spatio-temporal learning tasks with the minimum possible number of computing nodes.

Event-based Object Detection and Tracking for Space Situational Awareness

Nov 20, 2019

Abstract:In this work, we present optical space imaging using an unconventional yet promising class of imaging devices known as neuromorphic event-based sensors. These devices, which are modeled on the human retina, do not operate with frames, but rather generate asynchronous streams of events in response to changes in log-illumination at each pixel. These devices are therefore extremely fast, do not have fixed exposure times, allow for imaging whilst the device is moving and enable low power space imaging during daytime as well as night without modification of the sensors. Recorded at multiple remote sites, we present the first event-based space imaging dataset including recordings from multiple event-based sensors from multiple providers, greatly lowering the barrier to entry for other researchers given the scarcity of such sensors and the expertise required to operate them. The dataset contains 236 separate recordings and 572 labeled resident space objects. The event-based imaging paradigm presents unique opportunities and challenges motivating the development of specialized event-based algorithms that can perform tasks such as detection and tracking in an event-based manner. Here we examine a range of such event-based algorithms for detection and tracking. The presented methods are designed specifically for space situational awareness applications and are evaluated in terms of accuracy and speed and suitability for implementation in neuromorphic hardware on remote or space-based imaging platforms.
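The event-generation principle mentioned in the abstract above (events emitted in response to changes in log-illumination at each pixel) can be illustrated with an idealised single-pixel model. This is a sketch only: it assumes a fixed contrast threshold and ignores noise, latency and refractory effects found in real sensors. The function name `generate_events` and its `threshold` parameter are hypothetical.

```python
import math

def generate_events(intensities, timestamps, threshold=0.3):
    """Idealised single-pixel event model.

    Emits (t, +1) for ON or (t, -1) for OFF each time the log-intensity moves
    by at least `threshold` away from the level stored at the last event.
    """
    events = []
    ref = math.log(intensities[0])  # log-intensity memorised by the pixel
    for t, value in zip(timestamps[1:], intensities[1:]):
        log_i = math.log(value)
        while log_i - ref >= threshold:   # brightness increased
            ref += threshold
            events.append((t, +1))
        while ref - log_i >= threshold:   # brightness decreased
            ref -= threshold
            events.append((t, -1))
    return events

# A pixel that doubles in brightness and then returns to its original level.
events = generate_events([1.0, 2.0, 1.0], [0.0, 1.0, 2.0], threshold=0.3)
polarities = [p for _, p in events]
```

Because the comparison is made on log-intensity, the same relative change produces the same number of events regardless of absolute brightness, and no events are produced while the scene is static.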

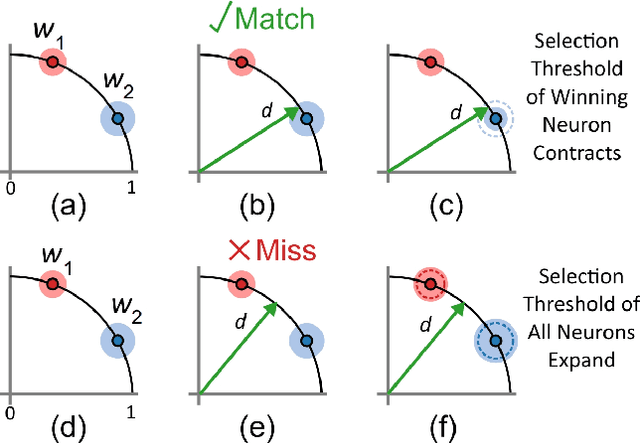

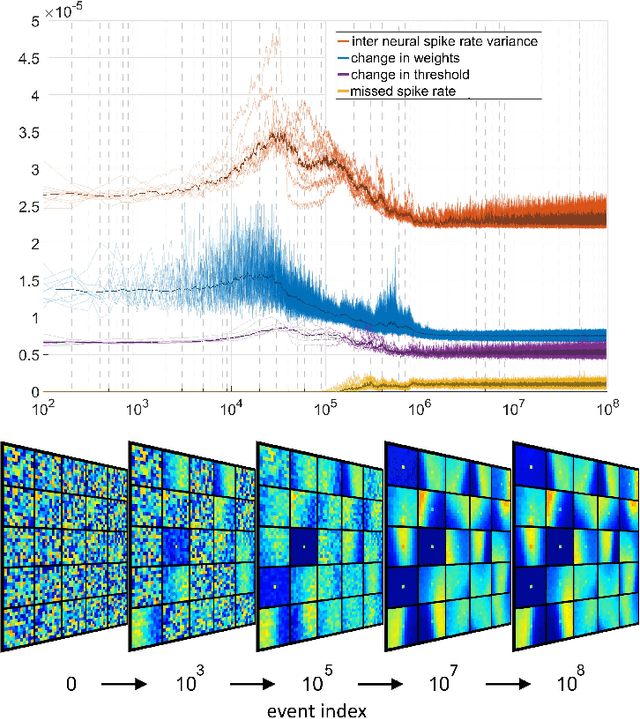

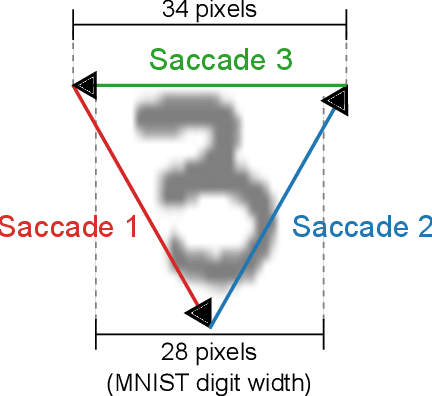

Event-based Feature Extraction Using Adaptive Selection Thresholds

Jul 30, 2019

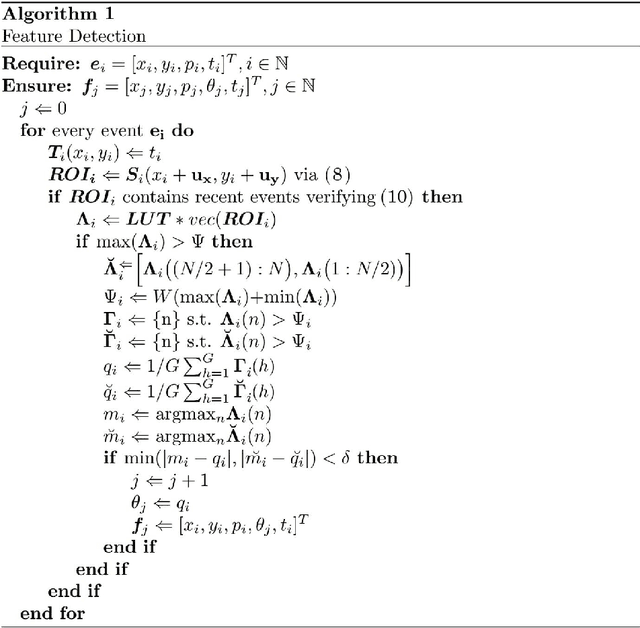

Abstract:Unsupervised feature extraction algorithms form one of the most important building blocks in machine learning systems. These algorithms are often adapted to the event-based domain to perform online learning in neuromorphic hardware. However, not designed for the purpose, such algorithms typically require significant simplification during implementation to meet hardware constraints, creating trade-offs with performance. Furthermore, conventional feature extraction algorithms are not designed to generate useful intermediary signals which are valuable only in the context of neuromorphic hardware limitations. In this work, a novel event-based feature extraction method is proposed that focuses on these issues. The algorithm operates via simple adaptive selection thresholds which allow a simpler implementation of network homeostasis than previous works by trading off a small amount of information loss in the form of missed events that fall outside the selection thresholds. The behavior of the selection thresholds and the output of the network as a whole are shown to provide uniquely useful signals indicating network weight convergence without the need to access network weights. A novel heuristic method for network size selection is proposed which makes use of noise events and their feature representations. The use of selection thresholds is shown to produce network activation patterns that predict classification accuracy, allowing rapid evaluation and optimization of system parameters without the need to run back-end classifiers. The feature extraction method is tested on both the N-MNIST benchmarking dataset and a dataset of airplanes passing through the field of view. Multiple configurations with different classifiers are tested, with the results quantifying the resultant performance gains at each processing stage.